A Beginner’s Guide to LSTM: Understanding Long Short-Term Memory Networks

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) designed to overcome a key limitation of traditional RNNs: difficulty capturing long-term dependencies in sequential data. They are widely used to model and predict sequences in domains such as language modeling, speech recognition, and time series forecasting.

If you are new to LSTM networks, this beginner’s guide gives an overview of how they work and what their key components are.

1. What is an LSTM Network?

LSTM networks are a special type of RNN that can learn long-term dependencies in sequential data. They do this by maintaining a memory of past inputs and selectively updating or forgetting parts of it at each time step. This memory mechanism lets LSTM networks capture patterns that span many time steps, which traditional RNNs struggle to learn.

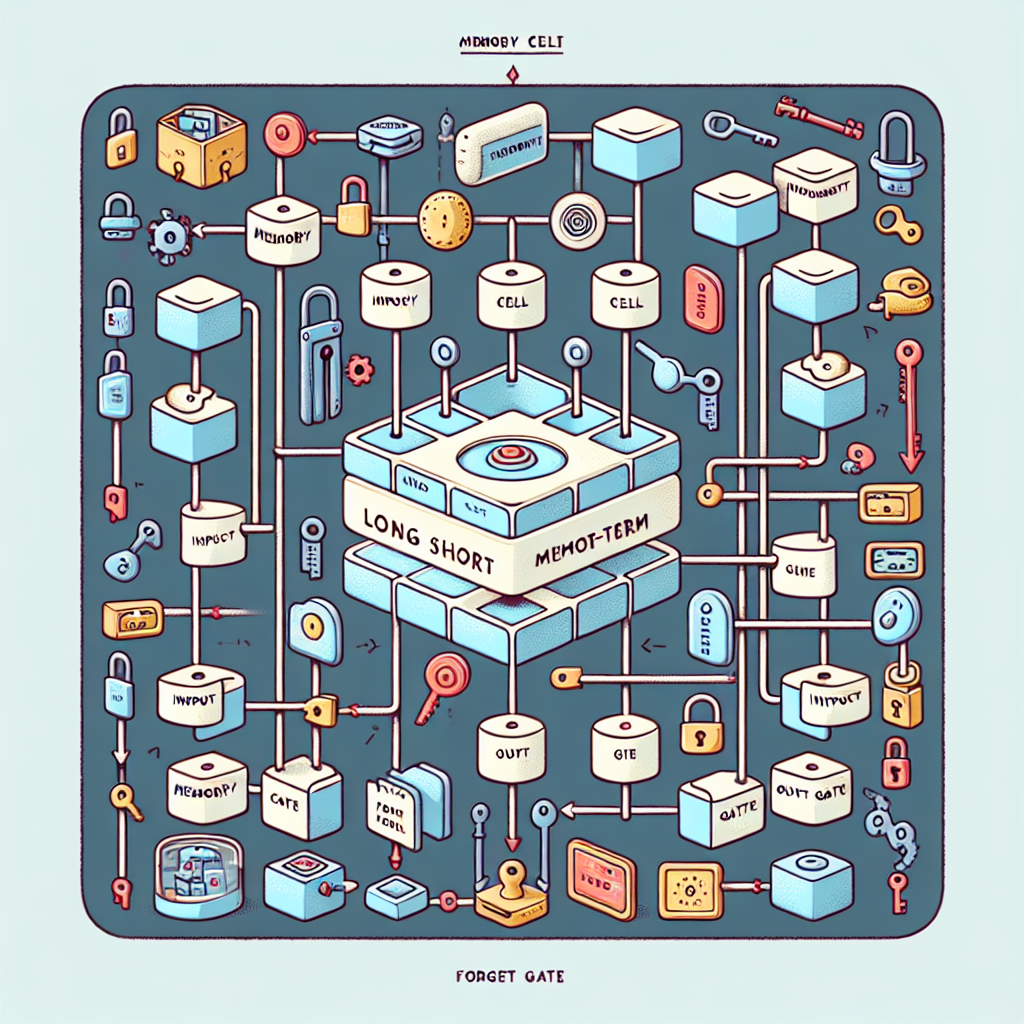

2. Key Components of an LSTM Network

An LSTM network consists of several key components, including:

– Cell State: The cell state is the memory of the LSTM network and carries information across time steps. Information can be added to or removed from the cell state through a set of gates that control the flow of information.

– Input Gate: The input gate regulates the flow of new information into the cell state by controlling how much of the new input should be added to the cell state.

– Forget Gate: The forget gate controls which information in the cell state should be discarded or forgotten. This gate helps the LSTM network to selectively remember or forget past inputs based on their relevance.

– Output Gate: The output gate determines how much of the cell state is exposed as the hidden state, which serves as the output of the LSTM at the current time step and is also passed on to the next time step.
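The components above can be sketched as a single time step in NumPy. This is a minimal illustration of the commonly used LSTM formulation, not a production implementation: the function name `lstm_step`, the stacked weight matrix `W`, the bias `b`, and the order in which the gates are packed are all assumptions made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step (illustrative layout, not a library API).

    x:      input at this time step, shape (input_size,)
    h_prev: previous hidden state,   shape (hidden_size,)
    c_prev: previous cell state,     shape (hidden_size,)
    W:      stacked weights, shape (4 * hidden_size, input_size + hidden_size)
    b:      stacked biases,  shape (4 * hidden_size,)
    """
    n = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0 * n:1 * n])   # input gate: how much new information to add
    f = sigmoid(z[1 * n:2 * n])   # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * n:3 * n])   # output gate: how much cell state to expose
    g = np.tanh(z[3 * n:4 * n])   # candidate values computed from the new input
    c = f * c_prev + i * g        # updated cell state (the memory)
    h = o * np.tanh(c)            # hidden state, also the output at this step
    return h, c

# Run a short random sequence through the cell.
rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W = rng.normal(scale=0.1, size=(4 * hidden_size, input_size + hidden_size))
b = np.zeros(4 * hidden_size)
h = np.zeros(hidden_size)
c = np.zeros(hidden_size)
for x in rng.normal(size=(5, input_size)):
    h, c = lstm_step(x, h, c, W, b)
print(h.shape, c.shape)
```

Note that the cell state is updated additively (`f * c_prev + i * g`): when the forget gate is close to 1 and the input gate is close to 0, the memory passes through a time step almost unchanged.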

3. Training and Tuning an LSTM Network

Training an LSTM network involves optimizing the network’s parameters (weights and biases) to minimize a loss function that measures the difference between the predicted output and the ground truth. This typically uses backpropagation through time (BPTT), which unrolls the network across the time steps of a sequence to compute the gradient of the loss, followed by a gradient-based update of the parameters.
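To make the mechanics of BPTT concrete, here is a deliberately simplified sketch. It does not use an LSTM: it swaps in a scalar linear recurrence, h_t = w * h_{t-1} + x_t, with a single parameter `w`, so that the backward pass fits in a few lines. The data, the learning rate, and the function names are made up for illustration.

```python
def forward(w, xs):
    """Run h_t = w * h_{t-1} + x_t from h_0 = 0 and return every state."""
    hs = [0.0]
    for x in xs:
        hs.append(w * hs[-1] + x)
    return hs

def loss_and_grad(w, xs, y):
    """Squared error on the final state, with dL/dw computed by BPTT."""
    hs = forward(w, xs)
    loss = 0.5 * (hs[-1] - y) ** 2
    dh = hs[-1] - y                  # gradient of the loss w.r.t. the last state
    dw = 0.0
    for t in range(len(xs), 0, -1):  # walk backward through the time steps
        dw += dh * hs[t - 1]         # contribution of w as used at step t
        dh *= w                      # propagate the gradient to h_{t-1}
    return loss, dw

# Plain gradient descent on the single shared parameter.
xs, y = [1.0, 0.5, -0.3, 0.8], 1.0
w = 0.5
initial_loss, _ = loss_and_grad(w, xs, y)
for _ in range(200):
    _, dw = loss_and_grad(w, xs, y)
    w -= 0.1 * dw
final_loss, _ = loss_and_grad(w, xs, y)
print(initial_loss, final_loss)
```

The same parameter is reused at every time step, so its gradient is the sum of the contributions from each step. In an LSTM the idea is identical, but the backward pass also flows through the gates and the cell state.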

To improve the performance of an LSTM network, hyperparameters such as the number of hidden units, learning rate, and sequence length can be tuned by experimentation, comparing candidate settings on a separate validation set. Regularization techniques such as dropout can reduce overfitting and improve generalization. Normalization is also used, although layer normalization is generally a more natural fit for recurrent networks than batch normalization, which is harder to apply across time steps.
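As an illustration of one of these regularizers, the sketch below implements inverted dropout in NumPy. The function name `dropout` and its parameters are assumptions for this example rather than any library's API. With LSTMs, one common choice is to apply dropout to a layer's inputs or outputs rather than inside the recurrence.

```python
import numpy as np

def dropout(h, rate, rng, training=True):
    """Inverted dropout: zero a random fraction `rate` of units during
    training and rescale the survivors so the expected value is unchanged."""
    if not training or rate == 0.0:
        return h                            # no-op at evaluation time
    keep = 1.0 - rate
    mask = rng.random(h.shape) < keep       # True for units that are kept
    return h * mask / keep

rng = np.random.default_rng(0)
hidden = np.ones(10_000)                    # stand-in for LSTM hidden outputs
dropped = dropout(hidden, rate=0.5, rng=rng)
print(dropped.mean())                       # close to 1.0 on average
```

The dropout rate is itself a hyperparameter, so it would be tuned on the validation set along with the others.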

4. Applications of LSTM Networks

LSTM networks have been successfully applied to a wide range of tasks, including:

– Language Modeling: LSTM networks are commonly used to model and generate text, and for related language tasks such as machine translation and sentiment analysis.

– Speech Recognition: LSTM networks are used in speech recognition systems to transcribe spoken words into text.

– Time Series Forecasting: LSTM networks are used to predict future values of time series data such as stock prices, weather patterns, and sales figures.
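For time series forecasting in particular, the raw series usually has to be cut into fixed-length input windows before it can be fed to an LSTM. The sketch below shows one way to do this for one-step-ahead prediction. The helper `make_windows` and the toy series are hypothetical, and `seq_len` corresponds to the sequence length hyperparameter mentioned earlier.

```python
import numpy as np

def make_windows(series, seq_len):
    """Split a 1-D series into (inputs, targets) for one-step-ahead forecasting.

    Returns X with shape (num_windows, seq_len, 1) and y with shape
    (num_windows,), where y[k] is the value that follows window X[k].
    """
    series = np.asarray(series, dtype=float)
    n = len(series) - seq_len
    if n <= 0:
        raise ValueError("series must be longer than seq_len")
    X = np.stack([series[k:k + seq_len] for k in range(n)])
    y = series[seq_len:]
    return X[..., np.newaxis], y            # add a feature axis of size 1

series = np.arange(10)                      # toy data: 0, 1, ..., 9
X, y = make_windows(series, seq_len=3)
print(X.shape, y.shape)                     # (7, 3, 1) (7,)
print(X[0].ravel(), y[0])                   # [0. 1. 2.] 3.0
```

A longer `seq_len` gives the network more history per example but yields fewer windows from the same series, which is one reason it is worth tuning.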

In conclusion, LSTM networks are a powerful tool for modeling and predicting sequential data with long-term dependencies. By understanding the key components and training principles of LSTM networks, beginners can start to explore the potential applications of this advanced neural network architecture in various domains.
