A Comprehensive Overview of Different Gated Architectures in RNNs

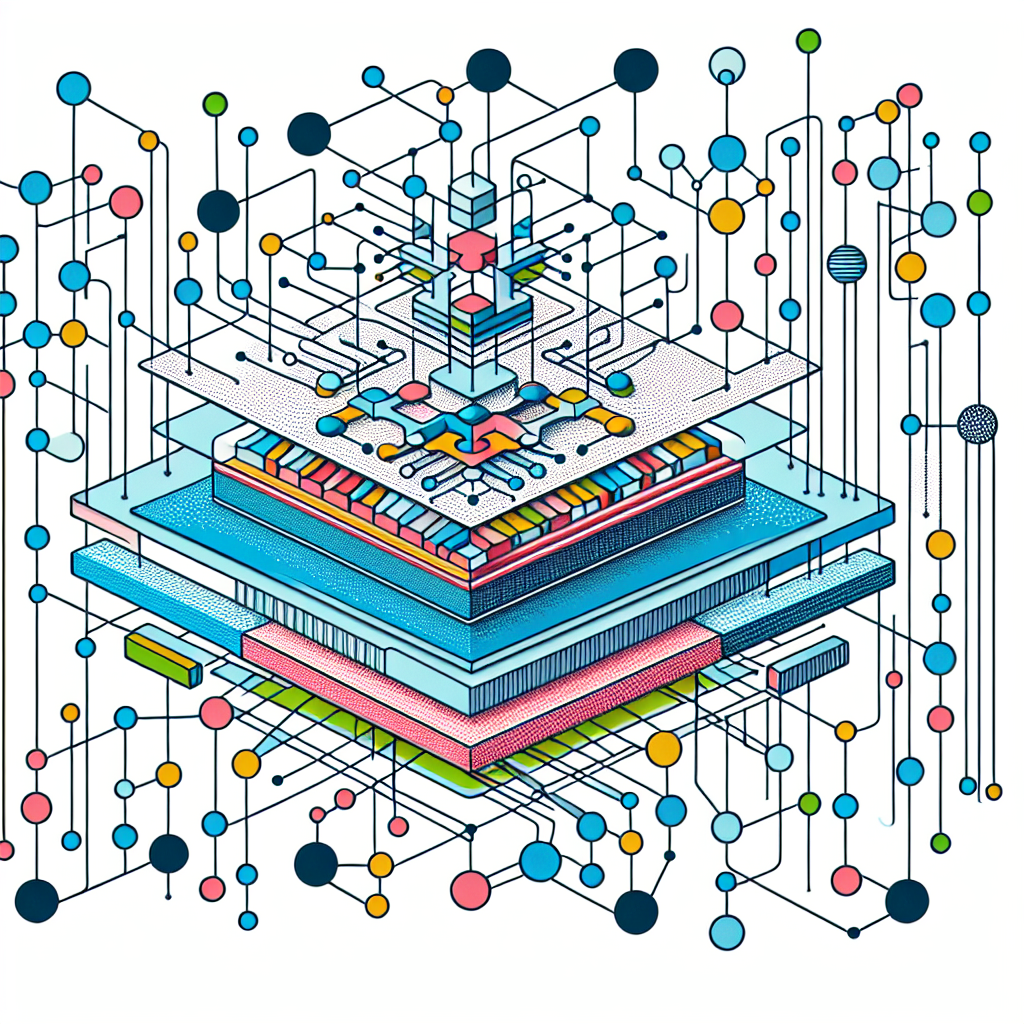

Recurrent Neural Networks (RNNs) are widely used for sequential data because they carry a hidden state from one time step to the next. However, traditional ("vanilla") RNNs suffer from the vanishing gradient problem: during backpropagation through time, the gradient is multiplied by one factor per time step, so it can shrink exponentially with the length of the sequence. This limits their ability to capture long-term dependencies. To address this issue, various gated architectures have been proposed, each with its own advantages and applications. In this article, we provide an overview of the main gated architectures in RNNs.
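To make the vanishing-gradient problem concrete, here is a toy scalar example (an illustration, not taken from any particular model): for a one-unit RNN h_t = tanh(w * h_{t-1} + x), the chain rule multiplies one factor w * (1 - h_t^2) per step. The weight w = 0.9 and the constant input x = 0.5 are arbitrary assumed values.

```python
import math

def gradient_through_time(w, steps, x=0.5, h0=0.0):
    """Return dh_T/dh_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x)."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h + x)
        # Chain rule: dh_t/dh_{t-1} = w * (1 - h_t**2), since tanh'(a) = 1 - tanh(a)**2
        grad *= w * (1.0 - h * h)
    return grad

for T in (1, 10, 50):
    print(T, gradient_through_time(w=0.9, steps=T))
```

With w = 0.9 every factor is at most 0.9, so the product decays geometrically as T grows; with a large enough |w| the factors can instead exceed 1 and the gradient explodes.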

1. Long Short-Term Memory (LSTM):

LSTM is one of the most widely used gated architectures in RNNs. In addition to the hidden state, it maintains a separate cell state, and three gates (the input gate, the forget gate, and the output gate) control the flow of information through it. The input gate regulates how much new information is written to the cell state, the forget gate determines what information is discarded from the cell state, and the output gate decides how much of the cell state is exposed as the hidden state, which is both the cell's output and the state passed to the next time step. Because the cell state is updated additively rather than by repeated matrix multiplication, LSTM is particularly effective at capturing long-term dependencies and has been successfully applied to tasks such as language modeling, speech recognition, and machine translation.
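The gate interactions described above can be sketched as a single time step in plain Python. This is a minimal sketch assuming the common formulation with a forget gate, where every gate reads the concatenation [h_{t-1}, x_t]; the gate names in `params`, the helper functions, and the random initialization are hypothetical choices for illustration, not any library's API.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b for a matrix W stored as a list of rows."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) + b_i for row, b_i in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step; params maps gate name -> (W, b), with W acting on [h_prev, x]."""
    z = h_prev + x  # list concatenation: [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(*params["forget"], z)]   # forget gate
    i = [sigmoid(a) for a in affine(*params["input"], z)]    # input gate
    g = [math.tanh(a) for a in affine(*params["cell"], z)]   # candidate values
    o = [sigmoid(a) for a in affine(*params["output"], z)]   # output gate
    # Keep part of the old cell state and add the gated candidate (additive update).
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # The output gate decides how much of the squashed cell state becomes h_t.
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c

def random_params(gates, input_size, hidden_size, seed=0):
    """Small random weights and zero biases, purely for demonstration."""
    rng = random.Random(seed)
    n_in = hidden_size + input_size
    return {
        name: ([[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(hidden_size)],
               [0.0] * hidden_size)
        for name in gates
    }

params = random_params(["forget", "input", "cell", "output"], input_size=3, hidden_size=2)
h, c = [0.0, 0.0], [0.0, 0.0]
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

Note that h always stays in (-1, 1) because it is a value in (0, 1) times a tanh, while the cell state c is not squashed and can carry information over many steps.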

2. Gated Recurrent Unit (GRU):

GRU is a simplified variant of LSTM that merges the forget and input gates into a single update gate and also merges the cell state and hidden state into one vector. It adds a reset gate that controls how much of the previous hidden state is used when computing the new candidate state. Because it needs three weight blocks (update gate, reset gate, candidate) instead of LSTM's four, GRU has fewer parameters for the same hidden size, is somewhat cheaper to compute, and can be easier to train on smaller datasets. Despite its simplicity, GRU has been shown to perform on par with LSTM on many tasks and is a popular choice among researchers and practitioners.
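A single GRU step, plus the parameter-count arithmetic behind "fewer parameters", can be sketched as follows. This assumes the formulation h_t = (1 - z) * h_{t-1} + z * h_tilde; some papers and libraries swap the roles of z and 1 - z, and some apply the reset gate after the matrix product, so treat this as one convention among several. All function and gate names are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W @ v + b for a matrix W stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def gru_step(x, h_prev, params):
    """One GRU step; each (W, b) in params acts on a concatenation [state, x]."""
    hx = h_prev + x
    z = [sigmoid(a) for a in affine(*params["update"], hx)]  # update gate
    r = [sigmoid(a) for a in affine(*params["reset"], hx)]   # reset gate
    # The reset gate scales the previous state before it feeds the candidate.
    reset_h = [r_k * h_k for r_k, h_k in zip(r, h_prev)]
    h_tilde = [math.tanh(a) for a in affine(*params["candidate"], reset_h + x)]
    # One update gate interpolates between keeping the old state and the candidate.
    return [(1 - z_k) * h_k + z_k * ht_k for z_k, h_k, ht_k in zip(z, h_prev, h_tilde)]

def param_count(num_weight_blocks, input_size, hidden_size):
    """Weights plus one bias vector per block (LSTM: 4 blocks, GRU: 3)."""
    return num_weight_blocks * (hidden_size * (input_size + hidden_size) + hidden_size)

rng = random.Random(0)
params = {
    name: ([[rng.uniform(-0.1, 0.1) for _ in range(5)] for _ in range(2)], [0.0, 0.0])
    for name in ("update", "reset", "candidate")
}
h = [0.0, 0.0]
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h = gru_step(x, h, params)
print(h)
print(param_count(4, 10, 20), param_count(3, 10, 20))  # LSTM vs GRU: 2480 1860
```

Some frameworks, such as PyTorch, keep two bias vectors per block, which changes the exact counts but not the 4:3 ratio between LSTM and GRU.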

3. Clockwork RNN:

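Clockwork RNN is a distinctive architecture: strictly speaking it has no learned gates, but a fixed update schedule plays a similar role by deciding when each part of the hidden state may change. It divides the hidden units into separate modules (clock partitions), each with its own clock period; a module with period T is updated only at time steps that are multiples of T and otherwise keeps its previous state. Fast modules track short-term structure while slow modules track longer time scales, so the network can capture both short-term and long-term dependencies, and because slow modules update rarely, each step requires less computation than a fully updated RNN of the same size. Clockwork RNN has been reported to outperform traditional RNNs in tasks requiring hierarchical temporal modeling, such as video analysis and action recognition.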
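The scheduling rule can be sketched in a few lines. This minimal illustration assumes exponential clock periods (1, 2, 4, 8, ...), the schedule used in the original Clockwork RNN paper; `clockwork_step` and `count_updates` are hypothetical names, and the real module update (which combines the input with hidden-to-hidden connections and a nonlinearity) is replaced by a simple counter so the schedule itself is easy to see.

```python
def clockwork_step(t, module_states, periods, update_fn):
    """Advance one time step: module i is recomputed only if t % periods[i] == 0;
    every other module carries its previous state forward unchanged."""
    return [
        update_fn(i, state) if t % period == 0 else state
        for i, (state, period) in enumerate(zip(module_states, periods))
    ]

# Exponential clock periods 1, 2, 4, 8 (one per module).
periods = [2 ** i for i in range(4)]

# Hypothetical update_fn: it only counts updates, standing in for the real
# recurrent computation of a module.
def count_updates(i, state):
    return state + 1

states = [0, 0, 0, 0]
for t in range(16):
    states = clockwork_step(t, states, periods, count_updates)
print(states)  # [16, 8, 4, 2]
```

Over 16 steps the slowest module is recomputed only twice, which is where the computational savings come from.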

4. Independent Gating Mechanism (IGM):

IGM is a more recent gated architecture that assigns an independent gate to each input dimension of the sequence. This allows the network to selectively attend to different dimensions of the input at each time step, giving finer-grained control over the information flow. IGM has been reported to give promising results on tasks involving high-dimensional inputs, such as image captioning and video generation.
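The article does not give IGM's equations, so the sketch below is a hypothetical reading of the description, not the published formulation: it assumes each input feature d gets its own sigmoid gate computed from that feature and the previous hidden state, and the gated input then replaces x_t in an ordinary recurrent update. The function name and parameter layout are invented for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gate_inputs(x, h_prev, gate_params):
    """Hypothetical per-dimension input gating.

    gate_params[d] = (u_d, v_d, b_d) parameterizes an independent gate for
    input feature d: g_d = sigmoid(u_d * x_d + v_d . h_prev + b_d).
    Returns the gated input [g_d * x_d for each d].
    """
    gated = []
    for x_d, (u_d, v_d, b_d) in zip(x, gate_params):
        g_d = sigmoid(u_d * x_d + sum(v * h for v, h in zip(v_d, h_prev)) + b_d)
        gated.append(g_d * x_d)
    return gated

# Toy example: the biases push gate 0 open, gate 1 closed, and gate 2 half-open.
h_prev = [0.0, 0.0]
gate_params = [(0.0, [0.0, 0.0], 10.0), (0.0, [0.0, 0.0], -10.0), (0.0, [0.0, 0.0], 0.0)]
print(gate_inputs([2.0, -1.0, 0.5], h_prev, gate_params))
```

In a full model the gate parameters would be learned, so the network could decide per time step which input features to pass through.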

In conclusion, gated architectures play a crucial role in enhancing the capabilities of RNNs for handling sequential data. Each architecture offers unique advantages and trade-offs, depending on the task at hand. Researchers continue to explore new variations and combinations of gated mechanisms to further improve the performance of RNNs in various applications. Understanding the strengths and weaknesses of different gated architectures is essential for selecting the most suitable model for a given task and achieving optimal results.
