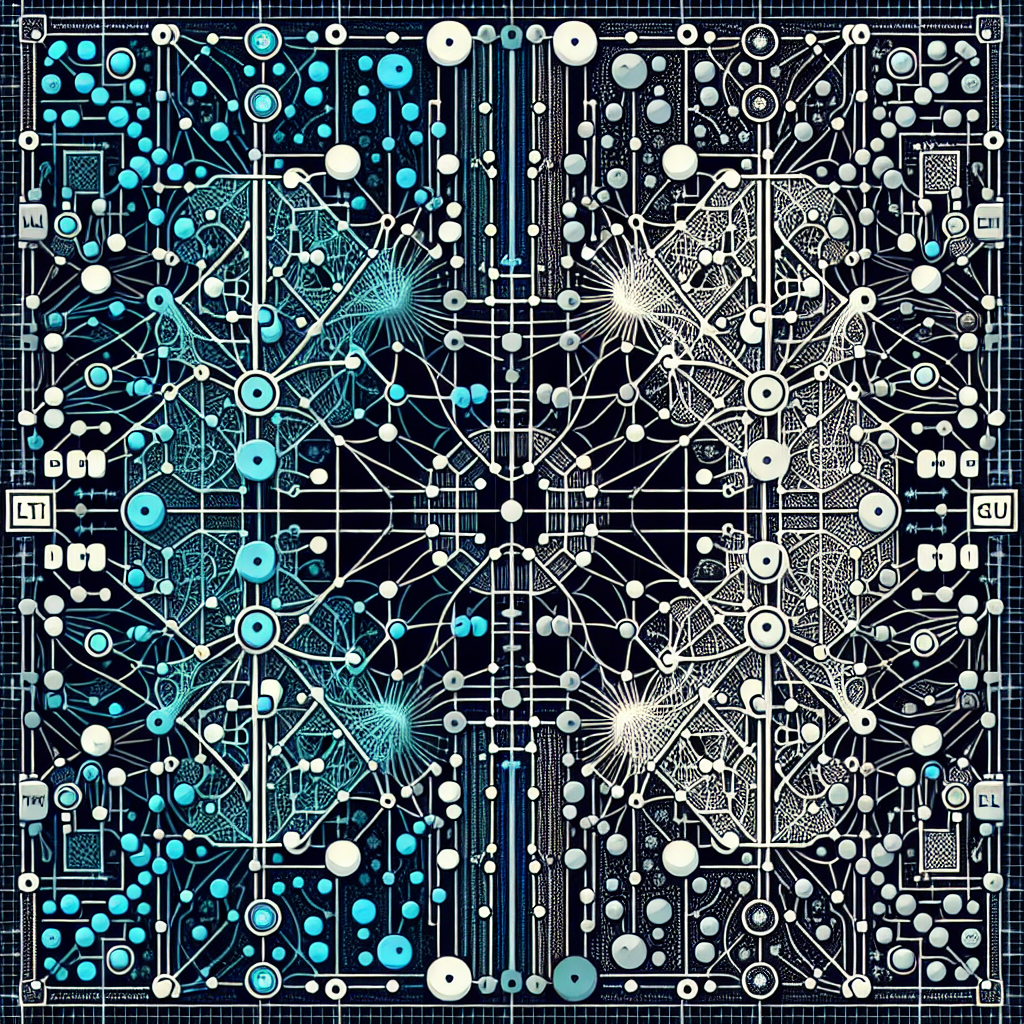

Recurrent Neural Networks (RNNs) have become increasingly popular in the field of deep learning due to their ability to process sequential data. Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks are two types of RNN architectures that have been designed to address the vanishing gradient problem that occurs in traditional RNNs.

LSTM networks were introduced by Hochreiter and Schmidhuber in 1997 as a solution to the vanishing gradient problem in RNNs. The main idea is a memory cell that lets the network retain information over long periods of time. In the now-standard formulation, this cell is equipped with three gates: the input gate, which controls how much new information is written to the cell; the forget gate, which controls how much of the existing cell content is kept; and the output gate, which controls how much of the cell is exposed as the hidden state. (The forget gate was added in later work and was not part of the 1997 design.) Because the gates let the network selectively remember or forget information, LSTM networks can capture long-term dependencies in sequential data.
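To make the role of the three gates concrete, here is a minimal sketch of a single LSTM time step in plain Python. It assumes the commonly used formulation with one bias vector per block; the names `affine`, `lstm_step`, and `constant_gate` and the `params` layout are illustrative choices, not any library's API.

```python
import math


def sigmoid(v):
    """Logistic function, squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


def affine(weights, x, h):
    """Compute W @ x + U @ h + b on plain Python lists."""
    W, U, b = weights
    return [
        sum(w * xj for w, xj in zip(w_row, x))
        + sum(u * hj for u, hj in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(W, U, b)
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; returns the new hidden state and cell state."""
    i = [sigmoid(v) for v in affine(params["input"], x, h_prev)]
    f = [sigmoid(v) for v in affine(params["forget"], x, h_prev)]
    o = [sigmoid(v) for v in affine(params["output"], x, h_prev)]
    g = [math.tanh(v) for v in affine(params["candidate"], x, h_prev)]
    # Forget gate scales the old cell content, input gate scales the new candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # Output gate decides how much of the cell is exposed as the hidden state.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def constant_gate(bias):
    """Hypothetical helper: a 1-unit block with zero weights, so only the bias matters."""
    return ([[0.0]], [[0.0]], [bias])


# Forget gate saturated open, input gate saturated shut: the cell keeps its value.
remember = {
    "input": constant_gate(-100.0),
    "forget": constant_gate(100.0),
    "output": constant_gate(100.0),
    "candidate": constant_gate(0.0),
}
h_keep, c_keep = lstm_step([1.0], [0.0], [0.5], remember)

# Forget gate saturated shut: the old cell content is discarded.
discard = dict(remember, forget=constant_gate(-100.0))
h_drop, c_drop = lstm_step([1.0], [0.0], [0.5], discard)

print(c_keep, c_drop)
```

The two hand-set parameter dictionaries pin the gates near 0 or 1 so their effect is visible: in the first call the cell state stays at essentially 0.5, in the second it collapses to essentially zero. In a trained network the gate values are learned and usually sit somewhere in between.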

GRU networks were proposed by Cho et al. in 2014 as a simpler alternative to LSTM networks. A GRU has no separate memory cell and only two gates: the update gate and the reset gate. The update gate controls how much of the previous hidden state is carried forward versus replaced by a newly computed candidate state, while the reset gate controls how much of the previous hidden state is used when computing that candidate. Despite having fewer gates than LSTM networks, GRU networks have been shown to achieve similar performance in many tasks.
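The two GRU gates can be sketched the same way. The snippet below is a minimal, assumption-laden illustration of one GRU time step in plain Python: it uses the convention in which the update gate `z` weights the new candidate (some references swap the roles of `z` and `1 - z`), and the names `affine`, `gru_step`, and the `params` layout are hypothetical.

```python
import math


def sigmoid(v):
    """Logistic function, squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))


def affine(weights, x, h):
    """Compute W @ x + U @ h + b on plain Python lists."""
    W, U, b = weights
    return [
        sum(w * xj for w, xj in zip(w_row, x))
        + sum(u * hj for u, hj in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(W, U, b)
    ]


def gru_step(x, h_prev, params):
    """One GRU time step; returns the new hidden state."""
    z = [sigmoid(v) for v in affine(params["update"], x, h_prev)]
    r = [sigmoid(v) for v in affine(params["reset"], x, h_prev)]
    # Reset gate scales the past hidden state before it feeds the candidate.
    gated_h = [rk * hk for rk, hk in zip(r, h_prev)]
    h_cand = [math.tanh(v) for v in affine(params["candidate"], x, gated_h)]
    # Update gate interpolates between the old state and the candidate.
    return [(1.0 - zk) * hk + zk * nk for zk, hk, nk in zip(z, h_prev, h_cand)]


# 1-unit example: the candidate block sees both the input and the past state.
candidate = ([[1.0]], [[1.0]], [0.0])

# Update gate saturated shut: the previous hidden state is copied through.
copy_params = {
    "update": ([[0.0]], [[0.0]], [-100.0]),
    "reset": ([[0.0]], [[0.0]], [100.0]),
    "candidate": candidate,
}
h_copy = gru_step([0.3], [0.5], copy_params)

# Update gate open, reset gate shut: the new state depends on the input only.
overwrite_params = {
    "update": ([[0.0]], [[0.0]], [100.0]),
    "reset": ([[0.0]], [[0.0]], [-100.0]),
    "candidate": candidate,
}
h_new = gru_step([0.3], [0.5], overwrite_params)

print(h_copy, h_new)
```

With the update gate shut, the hidden state stays at essentially 0.5; with the update gate open and the reset gate shut, the past state is ignored and the result is essentially `tanh(0.3)`. Unlike the LSTM, there is no separate cell state to carry between steps.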

Both LSTM and GRU networks have been widely used in applications such as natural language processing, speech recognition, and time series prediction. When deciding between them, it is important to consider the trade-off between complexity and performance. LSTM networks are more complex: with four weight blocks per layer (three gates plus the candidate) against the GRU's three, an LSTM layer has roughly one third more parameters than a GRU layer of the same input and hidden size. This can make LSTM networks slower to train and, particularly on small datasets, more prone to overfitting. GRU networks are simpler and more computationally efficient, making them a good choice for tasks where speed and efficiency are important.
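The difference in parameter count can be estimated with a few lines of Python. This is a rough sketch that assumes a single layer, one bias vector per block, and no extras such as peephole connections or projection layers; deep learning frameworks may count slightly differently (for example by keeping two bias vectors). The function names and the sizes 128 and 256 are illustrative.

```python
def gated_block_params(input_size, hidden_size):
    """Parameters in one block: input weights, recurrent weights, and bias."""
    return hidden_size * input_size + hidden_size * hidden_size + hidden_size


def lstm_params(input_size, hidden_size):
    """LSTM layer: input, forget, and output gates plus the candidate block."""
    return 4 * gated_block_params(input_size, hidden_size)


def gru_params(input_size, hidden_size):
    """GRU layer: update and reset gates plus the candidate block."""
    return 3 * gated_block_params(input_size, hidden_size)


lstm_total = lstm_params(128, 256)
gru_total = gru_params(128, 256)
print(lstm_total, gru_total)  # 394240 295680
```

Under these assumptions the ratio is always 4:3, regardless of the layer sizes, so the LSTM carries about one third more parameters than a GRU of the same width.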

In conclusion, LSTM and GRU networks are both effective architectures for processing sequential data in deep learning. LSTM networks are more complex and have more parameters, while GRU networks offer a simpler alternative that can achieve similar performance in many tasks. The choice between them ultimately depends on the specific requirements of the task at hand.
