
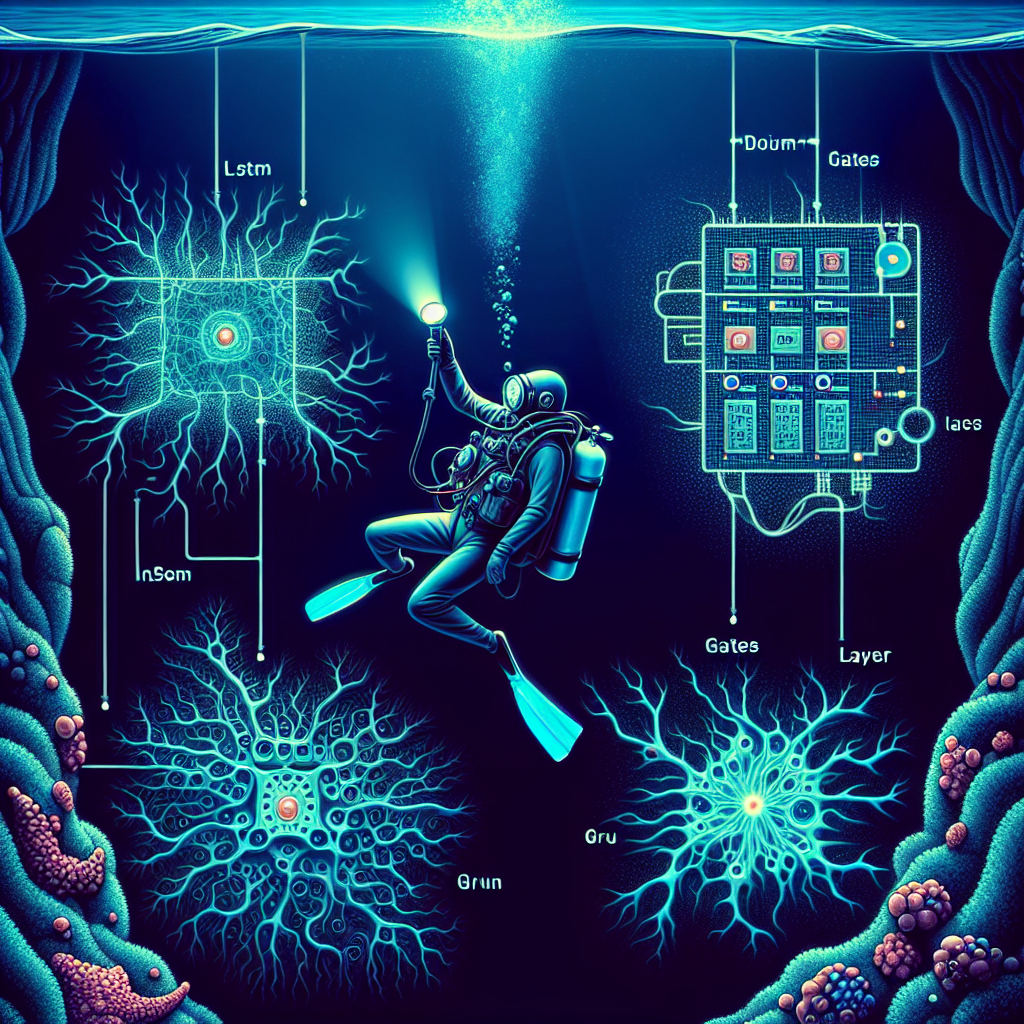

A Deep Dive into Gated Recurrent Neural Networks: LSTM and GRU

Recurrent Neural Networks (RNNs) have become a popular choice for sequential data processing tasks, such as natural language processing and time series analysis. However, traditional RNNs suffer from the vanishing gradient problem: as the error signal is propagated back through many time steps it is multiplied by the recurrent weights and activation derivatives at every step, so it tends to shrink exponentially. This makes it difficult for them to learn long-term dependencies in the data. To address this issue, researchers have developed Gated Recurrent Neural Networks (GRNNs), which use gating mechanisms to selectively update and pass information through the network.
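To make the vanishing gradient problem concrete, here is a minimal sketch using a one-unit RNN, h_t = tanh(w * h_{t-1}). The function name `gradient_through_time` and the values of `w` and `h0` are illustrative assumptions, not taken from any particular library or paper. It tracks how large the derivative of the current state with respect to the initial state remains as the sequence gets longer.

```python
import numpy as np

def gradient_through_time(w, steps, h0=0.5):
    """Return |d h_t / d h_0| for t = 1..steps in the scalar RNN h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    magnitudes = []
    for _ in range(steps):
        h = np.tanh(w * h)
        # Chain rule: d tanh(w * h_prev) / d h_prev = w * (1 - tanh(w * h_prev)^2)
        grad *= w * (1.0 - h ** 2)
        magnitudes.append(abs(grad))
    return magnitudes

# Illustrative values: a recurrent weight slightly below 1 and 50 time steps.
mags = gradient_through_time(w=0.9, steps=50)
print(f"after 1 step:   {mags[0]:.3e}")
print(f"after 50 steps: {mags[-1]:.3e}")
```

Because every factor in the product is at most 0.9 in magnitude, the gradient after 50 steps cannot exceed 0.9^50, which is well under one percent of its starting value. Gated architectures are designed to give the gradient a path that avoids this repeated squashing.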

Two popular variants of GRNNs are Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU). In this article, we will delve deeper into these two architectures and explore their differences and similarities.

LSTM was proposed by Hochreiter and Schmidhuber in 1997 as a solution to the vanishing gradient problem in traditional RNNs. In its now-standard form it uses three gating mechanisms (the input gate, forget gate, and output gate) that control the flow of information through a dedicated cell state. The forget gate was not part of the 1997 design; it was added later by Gers, Schmidhuber, and Cummins. The input gate determines how much new candidate information is written to the cell state, the forget gate decides how much of the existing cell state is kept or discarded, and the output gate controls how much of the cell state is exposed as the hidden state, which is both the output of the layer and the signal fed back at the next time step.
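The gate descriptions above can be sketched as a single LSTM time step in NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the function name `lstm_step`, the stacking order of the weight blocks (input, forget, candidate, output), the single bias per block, and the toy sizes are all choices made for this example.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    Assumed layout: W is (4H, D), U is (4H, H), b is (4H,), with the four
    blocks stacked in the order input gate, forget gate, candidate, output gate.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b          # pre-activations for all four blocks
    i = sigmoid(z[0:H])                 # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])             # forget gate: how much old cell state to keep
    g = np.tanh(z[2 * H:3 * H])         # candidate values to write
    o = sigmoid(z[3 * H:4 * H])         # output gate: how much cell state to expose
    c = f * c_prev + i * g              # updated cell state
    h = o * np.tanh(c)                  # updated hidden state
    return h, c

# Toy sizes and random weights, purely for illustration.
rng = np.random.default_rng(0)
D, H = 3, 4
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)

h, c = np.zeros(H), np.zeros(H)
for x in rng.normal(size=(5, D)):       # a sequence of 5 input vectors
    h, c = lstm_step(x, h, c, W, U, b)
print(h.shape, c.shape)
```

Note that the cell state update is additive: when the forget gate is close to 1 and the input gate is close to 0, the cell state is carried forward almost unchanged, which is what lets information (and gradients) survive across many steps.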

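On the other hand, GRU was introduced by Cho et al. in 2014 as a simpler alternative to LSTM. It replaces the separate forget and input gates with a single update gate, adds a reset gate that controls how much of the previous state feeds into the new candidate, and merges the cell state and hidden state into a single state vector. With three weight blocks instead of four, a GRU has roughly three quarters of the parameters of an LSTM of the same size, which generally makes it cheaper to compute and often quicker to train.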
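For comparison, a single GRU time step can be sketched the same way. Again this is an illustration under assumptions: the name `gru_step`, the stacking order (update gate, reset gate, candidate), and the toy sizes are made up for this example. Sign conventions for the update gate also differ between papers and libraries; here a gate value near 1 keeps the previous state.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, W, U, b):
    """One GRU time step.

    Assumed layout: W is (3H, D), U is (3H, H), b is (3H,), with the three
    blocks stacked in the order update gate, reset gate, candidate.
    """
    H = h_prev.shape[0]
    a = W @ x + b                                   # input contribution for all blocks
    z = sigmoid(a[0:H] + U[0:H] @ h_prev)           # update gate
    r = sigmoid(a[H:2 * H] + U[H:2 * H] @ h_prev)   # reset gate
    # The reset gate scales the previous state before it enters the candidate.
    h_tilde = np.tanh(a[2 * H:3 * H] + U[2 * H:3 * H] @ (r * h_prev))
    # Convention used here: z near 1 keeps the old state, z near 0 takes the candidate.
    return z * h_prev + (1.0 - z) * h_tilde

# Toy sizes and random weights, purely for illustration.
rng = np.random.default_rng(0)
D, H = 3, 4
W = rng.normal(scale=0.1, size=(3 * H, D))
U = rng.normal(scale=0.1, size=(3 * H, H))
b = np.zeros(3 * H)

h = np.zeros(H)
for x in rng.normal(size=(5, D)):                   # a sequence of 5 input vectors
    h = gru_step(x, h, W, U, b)
print(h.shape)
```

There is only one state vector to carry between steps, and the single update gate does the work of both keeping old information and admitting new information.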

Despite their differences, both LSTM and GRU have been shown to be effective in capturing long-term dependencies in sequential data. LSTM's separate cell state and output gate give it finer control over what is stored and what is exposed, which can help on tasks with very long or complex dependencies. GRU is favored for its simplicity and efficiency, making it a popular choice for applications where speed and resource constraints are important. In practice neither is consistently more accurate; which one works better depends on the task and the dataset, so it is often worth trying both.
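The efficiency difference can be estimated with simple arithmetic. The sketch below counts parameters for one layer, assuming one input weight matrix, one recurrent weight matrix, and one bias vector per block; the helper name `gated_rnn_params` and the layer sizes are hypothetical. Framework implementations may count slightly differently, for example by keeping two bias vectors per block.

```python
def gated_rnn_params(input_size, hidden_size, n_blocks):
    """Parameter count for one gated recurrent layer.

    Each block has an input matrix (H x D), a recurrent matrix (H x H),
    and a bias (H). LSTM has 4 such blocks, GRU has 3.
    """
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return n_blocks * per_block

# Hypothetical layer sizes chosen for illustration.
lstm_params = gated_rnn_params(input_size=128, hidden_size=256, n_blocks=4)
gru_params = gated_rnn_params(input_size=128, hidden_size=256, n_blocks=3)

print("LSTM parameters:", lstm_params)
print("GRU parameters: ", gru_params)
print("GRU / LSTM ratio:", gru_params / lstm_params)
```

Under these assumptions the ratio is 3/4 regardless of layer size, since both architectures use the same per-block shape and differ only in the number of blocks.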

In conclusion, LSTM and GRU are two widely used variants of Gated Recurrent Neural Networks that made it practical to train recurrent models on long sequences. LSTM keeps a separate cell state controlled by three gates, while GRU offers a simpler and lighter alternative with two gates and a single state vector. Understanding the strengths and weaknesses of each architecture is crucial for selecting the right model for your specific task, and knowing how their gates work makes it easier to apply them to complex sequential data problems.
