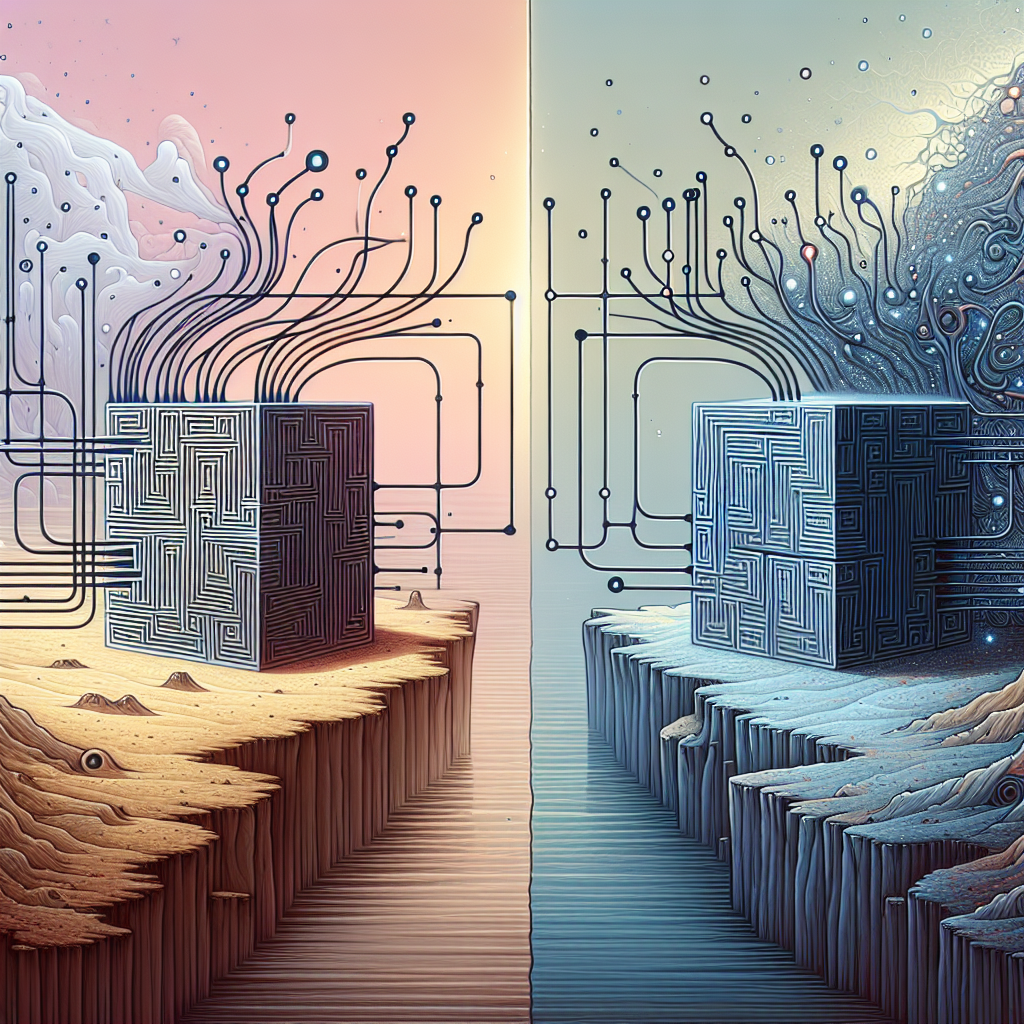

Recurrent neural networks (RNNs) are a class of artificial neural networks designed to handle sequential data. They are widely used in natural language processing, speech recognition, and time series analysis, among other applications. RNNs fall into two broad families: simple recurrent neural networks and gated recurrent neural networks. In this article, we break down the differences between the two.

Simple recurrent neural networks (SRNNs) are the most basic form of RNN. At each time step they combine the current input with a hidden state carried over from the previous step, creating a feedback loop that lets them capture dependencies in sequential data. However, SRNNs have a major limitation known as the vanishing gradient problem. During backpropagation through time, the gradient is multiplied by the recurrent weights and the activation derivative once per time step, so it can shrink toward zero over long sequences, making it difficult for the network to learn long-term dependencies.
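To make the feedback loop concrete, here is a minimal sketch of a simple RNN in plain Python. It assumes the common formulation h_t = tanh(W_x x_t + W_h h_{t-1} + b). The names `rnn_step`, `rnn_forward`, `W_x`, `W_h`, and `b` are illustrative choices for this sketch, not taken from any particular library.

```python
import math


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One time step: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    from_input = matvec(W_x, x_t)
    from_state = matvec(W_h, h_prev)
    return [
        math.tanh(a + r + bias)
        for a, r, bias in zip(from_input, from_state, b)
    ]


def rnn_forward(xs, h0, W_x, W_h, b):
    """Run the RNN over a sequence and return the hidden state at every step."""
    h = h0
    states = []
    for x_t in xs:
        # The previous hidden state is fed back in: this is the feedback loop.
        h = rnn_step(x_t, h, W_x, W_h, b)
        states.append(h)
    return states
```

The same weights are reused at every step, so the only thing that changes from one step to the next is the hidden state `h`.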

Gated recurrent neural networks (GRNNs) were developed to address the vanishing gradient problem in SRNNs. The most popular type of GRNN is the Long Short-Term Memory (LSTM) network, whose units contain a “memory cell” that carries information across time steps. Each unit has three gates (input, forget, and output) that regulate the flow of information by deciding what to store, what to discard, and what to output at each time step.
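The gating described above can be sketched in a few lines of plain Python. This is an illustrative version of the standard LSTM update, not a production implementation. The `params` dictionary, its keys, and the helper names are assumptions made for this example, and each gate is given one input weight matrix `W`, one recurrent weight matrix `U`, and one bias vector `b`.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function, used for the three gates."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(W, U, b, x, h):
    """Compute W x + U h + b, with matrices given as lists of rows."""
    return [
        sum(w * xi for w, xi in zip(W_row, x))
        + sum(u * hi for u, hi in zip(U_row, h))
        + b_i
        for W_row, U_row, b_i in zip(W, U, b)
    ]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step; params maps a gate name to a (W, U, b) tuple."""
    # Forget gate: how much of the old cell state to keep.
    f = [sigmoid(z) for z in affine(*params["forget"], x_t, h_prev)]
    # Input gate: how much of the new candidate to store.
    i = [sigmoid(z) for z in affine(*params["input"], x_t, h_prev)]
    # Output gate: how much of the cell state to expose.
    o = [sigmoid(z) for z in affine(*params["output"], x_t, h_prev)]
    # Candidate values that could be written into the cell.
    g = [math.tanh(z) for z in affine(*params["candidate"], x_t, h_prev)]

    # New cell state: keep part of the old state, add part of the candidate.
    c_t = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # New hidden state: a gated view of the cell state.
    h_t = [ok * math.tanh(ck) for ok, ck in zip(o, c_t)]
    return h_t, c_t
```

Note that the cell state is updated additively (`f * c_prev + i * g`) rather than being pushed through a squashing function at every step, which is what lets information persist when the forget gate stays close to 1.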

One of the key differences between SRNNs and GRNNs lies in their ability to capture long-term dependencies. While SRNNs struggle with this due to the vanishing gradient problem, GRNNs, particularly LSTMs, can retain information over many more time steps. This makes them well-suited for tasks that involve processing and generating sequential data, such as language modeling and speech recognition.
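A toy calculation shows why a simple RNN struggles here. The sketch below assumes a one-dimensional simple RNN, h_t = tanh(w * h_{t-1} + u * x_t), and uses the chain rule to compute how strongly the final hidden state depends on the initial one. The function name and the scalar weights `w` and `u` are hypothetical and chosen only for illustration.

```python
import math


def gradient_through_time(w, u, xs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + u * x_t)."""
    h = h0
    grad = 1.0
    for x_t in xs:
        h = math.tanh(w * h + u * x_t)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t ** 2)
        grad *= w * (1.0 - h * h)
    return grad


# Compare a short and a long sequence with the same weights.
short = gradient_through_time(0.9, 1.0, [0.1] * 5)
long_ = gradient_through_time(0.9, 1.0, [0.1] * 50)
print(f"after 5 steps:  {short:.3e}")
print(f"after 50 steps: {long_:.3e}")
```

Because each factor `w * (1 - h * h)` has magnitude at most `|w|`, the product can never exceed `|w| ** T` after `T` steps. With `|w| < 1` it shrinks at least geometrically, so early inputs have almost no influence on the gradient for long sequences.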

Another difference between SRNNs and GRNNs is their computational cost. GRNNs, especially LSTMs, have more parameters than SRNNs: a standard LSTM layer has roughly four times as many as a simple recurrent layer with the same input and hidden sizes, because it learns a separate set of weights for each of its three gates and for the candidate cell update. This can make them slower to train and more resource-intensive. However, the added capacity allows GRNNs to learn more intricate patterns in the data and achieve better performance on tasks that require capturing long-term dependencies.
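The difference in size is easy to estimate. The helper functions below are a rough sketch that counts the weights of a single recurrent layer. They assume one input matrix, one recurrent matrix, and one bias vector per transformation. Some libraries store two bias vectors per gate, so their reported counts can be slightly higher.

```python
def rnn_param_count(input_size, hidden_size):
    """Parameters of one simple RNN layer: W_x, W_h, and a bias vector."""
    return hidden_size * (input_size + hidden_size) + hidden_size


def lstm_param_count(input_size, hidden_size):
    """Parameters of one LSTM layer: three gates plus the candidate update."""
    return 4 * rnn_param_count(input_size, hidden_size)


print(rnn_param_count(10, 20))   # 620
print(lstm_param_count(10, 20))  # 2480
```

Under these assumptions, an LSTM layer has four times the parameters of a simple recurrent layer of the same size, and correspondingly more arithmetic per time step.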

In conclusion, while simple recurrent neural networks are a good starting point for working with sequential data, gated recurrent neural networks, particularly LSTMs, offer a more powerful and sophisticated approach for handling long sequences and capturing complex dependencies. By understanding the differences between these two types of RNNs, researchers and practitioners can choose the most appropriate model for their specific tasks and achieve better results in their applications.
