
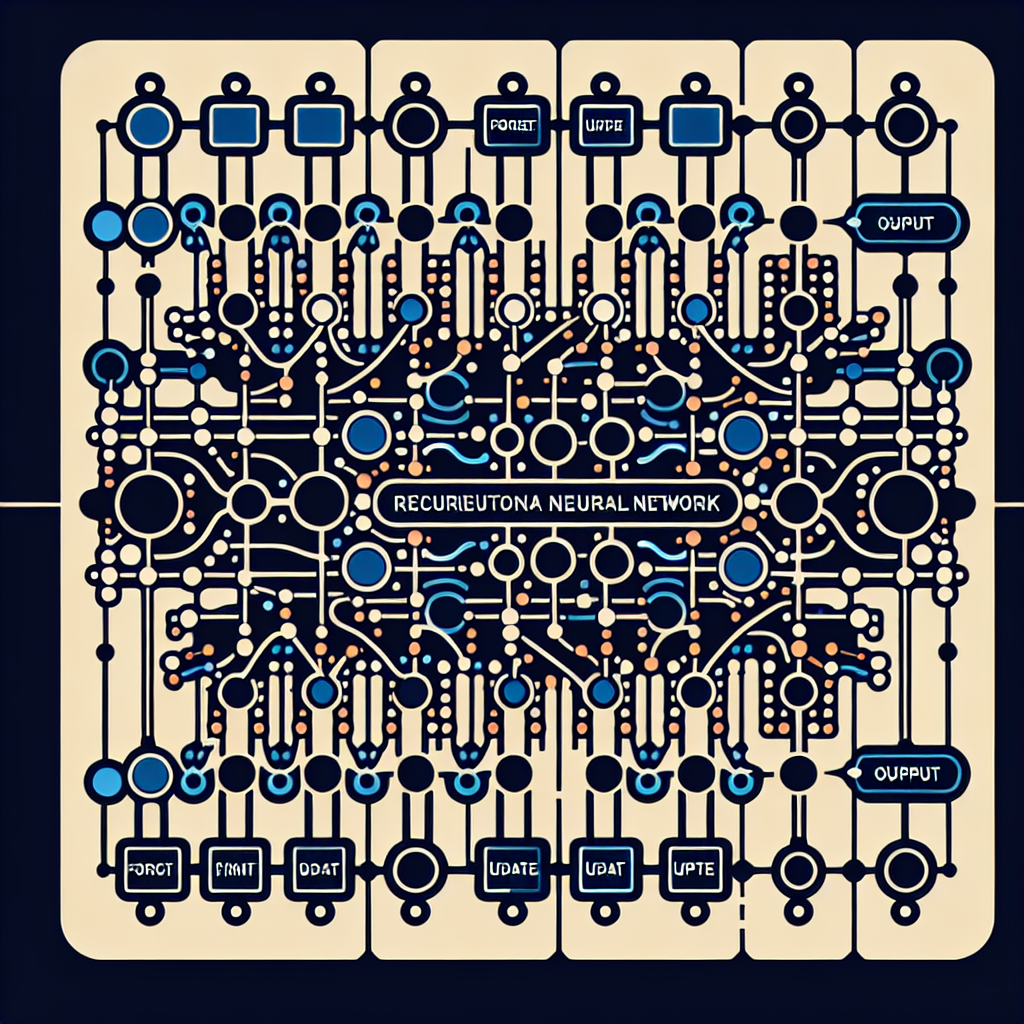

Demystifying the Mechanisms Behind Gated Recurrent Neural Networks

Gated Recurrent Neural Networks (GRNNs) have become a popular deep learning tool because they model sequential data, such as text, audio, and time series, effectively. However, their inner workings can seem opaque to anyone unfamiliar with the underlying mechanisms. In this article, we will demystify those mechanisms and explain how GRNNs work.

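At a high level, a GRNN is a recurrent neural network (RNN) that adds gating mechanisms to control how information flows through the network. A gate is a vector of values between 0 and 1, typically produced by a sigmoid applied to the current input and the previous hidden state. It is multiplied elementwise with another signal: values near 1 let information through, and values near 0 block it. This gating lets the network selectively remember or forget information from past time steps, which is crucial for modeling sequential data well.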
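A single gate can be sketched in a few lines of NumPy. This is an illustrative sketch, not any library's API. The helper `apply_gate`, the concatenated `[h_prev, x]` input, and the toy sizes are assumptions chosen for the example. It shows how the gate's bias pushes the gate values toward 1, letting the signal through, or toward 0, blocking it.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    """Logistic function: squashes any real input into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def apply_gate(signal, h_prev, x, W, b):
    """Hypothetical helper: scale `signal` elementwise by a gate computed from [h_prev, x].

    Gate values near 1 let a component through; values near 0 block it.
    """
    gate = sigmoid(W @ np.concatenate([h_prev, x]) + b)
    return gate * signal, gate


rng = np.random.default_rng(0)
hidden, inputs = 3, 2
h_prev = rng.standard_normal(hidden)   # toy previous hidden state
x = rng.standard_normal(inputs)        # toy current input
W = rng.standard_normal((hidden, hidden + inputs)) * 0.1
signal = np.array([1.0, -2.0, 0.5])    # the information the gate controls

# A large positive bias opens the gate; a large negative bias closes it.
open_out, open_gate = apply_gate(signal, h_prev, x, W, b=np.full(hidden, 10.0))
closed_out, closed_gate = apply_gate(signal, h_prev, x, W, b=np.full(hidden, -10.0))
print(open_out)    # approximately equal to signal
print(closed_out)  # approximately zero
```

In a trained network the weights and bias are learned, so the gate opens or closes depending on the current input and state rather than on a fixed bias.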

The key components of the classic gated cell are the input gate, the forget gate, and the output gate. At each time step, these gates regulate an internal memory called the cell state. The input gate controls how much new candidate information is written to the cell state. The forget gate controls how much of the previous cell state is retained. The output gate controls how much of the cell state is exposed as the hidden state, which is both the cell's output at this step and the state passed on to the next time step. Not every gated RNN uses this exact set of gates; the GRU, covered below, uses an update gate and a reset gate instead.
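The three gates and the cell state can be sketched as one time step in NumPy. This is a minimal sketch under stated assumptions, not a framework's implementation. The function name `lstm_step`, the stacking of all gate weights into one matrix `W`, and the row order (input, forget, output, candidate) are illustrative choices; real libraries order the blocks differently.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, b):
    """Hypothetical single LSTM time step.

    W has shape (4 * H, D + H) and b has shape (4 * H,); rows are stacked
    in the assumed order: input, forget, output, candidate.
    """
    H = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much of c_prev to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much of the cell to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate content
    c = f * c_prev + i * g       # additive cell-state update
    h = o * np.tanh(c)           # hidden state: output now, input to next step
    return h, c


rng = np.random.default_rng(42)
D, H = 4, 3
W = rng.standard_normal((4 * H, D + H)) * 0.1
b = np.zeros(4 * H)

# Run the cell over a short random sequence.
h, c = np.zeros(H), np.zeros(H)
for t in range(5):
    x_t = rng.standard_normal(D)
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)  # (3,) (3,)

# Forcing the forget gate open and the input gate shut makes the cell keep its contents.
b_keep = np.zeros(4 * H)
b_keep[0:H] = -10.0     # input gate close to 0
b_keep[H:2 * H] = 10.0  # forget gate close to 1
c_start = np.array([0.5, -1.0, 2.0])
_, c_kept = lstm_step(rng.standard_normal(D), np.zeros(H), c_start, W, b_keep)
print(c_kept)  # approximately equal to c_start
```

The last few lines show the "remember" behavior directly: with the forget gate near 1 and the input gate near 0, the cell state passes through the step almost unchanged.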

One of the most popular gated architectures is the Long Short-Term Memory (LSTM) network, which wraps these three gates around a memory cell. The cell state is updated additively rather than being repeatedly multiplied by the same weight matrix. This lets gradients flow across many time steps, which mitigates the vanishing gradient problem that often plagues traditional RNNs. LSTMs have been highly successful in tasks such as speech recognition, language modeling, and machine translation.
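To make the vanishing-gradient argument concrete, the block below compares how a gradient passes back one time step in each architecture. It uses standard notation that the article does not define: \odot denotes elementwise multiplication, \tilde{c}_t the candidate content, W_h the recurrent weight matrix of a plain RNN, and h_t^2 is taken elementwise. "Direct path" means the gates are treated as constants, ignoring their own dependence on h_{t-1}. This is a simplified sketch of the usual argument, not a full derivation.

```latex
% LSTM: additive cell-state update
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t
\quad\Longrightarrow\quad
\left.\frac{\partial c_t}{\partial c_{t-1}}\right|_{\text{direct path}} = \operatorname{diag}(f_t)

% Plain RNN: repeated multiplication by the recurrent matrix
h_t = \tanh(W_h h_{t-1} + W_x x_t + b)
\quad\Longrightarrow\quad
\frac{\partial h_t}{\partial h_{t-1}} = \operatorname{diag}\!\left(1 - h_t^{2}\right) W_h
```

Across k steps, the LSTM's direct path multiplies k diagonal matrices of forget-gate values. These stay close to the identity whenever the network learns to keep its forget gates near 1. The plain RNN instead multiplies k copies of diag(1 - h^2) W_h. Because the tanh derivative never exceeds 1, the size of this product is bounded by the k-th power of W_h's largest singular value. That bound shrinks exponentially whenever the largest singular value is below 1.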

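Another variant of GRNNs is the Gated Recurrent Unit (GRU), which simplifies the LSTM in three ways:

- It combines the input and forget gates into a single update gate.
- It merges the cell state into the hidden state.
- It drops the output gate and adds a reset gate, which controls how much of the previous state feeds into the new candidate.

With three gate/candidate weight blocks instead of four, a GRU has about three quarters as many parameters as an LSTM of the same size, which makes it cheaper to compute. It often performs comparably to an LSTM, although which of the two works better depends on the task.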
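A GRU step and the parameter comparison can be sketched in NumPy. This is a minimal sketch, not a framework's implementation. The names `gru_step` and `n_params`, the row order (update, reset, candidate), and the choice to apply the reset gate before the candidate's matrix product are assumptions; libraries differ on that last detail. The parameter count assumes a single bias vector per block. Frameworks such as PyTorch keep two bias vectors, which changes the totals but not the 4:3 ratio.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_step(x, h_prev, W, b):
    """Hypothetical single GRU time step.

    W has shape (3 * H, D + H) and b has shape (3 * H,); rows are stacked
    in the assumed order: update, reset, candidate.
    """
    H, D = h_prev.shape[0], x.shape[0]
    Wx, Wh = W[:, :D], W[:, D:]
    z = sigmoid(Wx[0:H] @ x + Wh[0:H] @ h_prev + b[0:H])              # update gate
    r = sigmoid(Wx[H:2 * H] @ x + Wh[H:2 * H] @ h_prev + b[H:2 * H])  # reset gate
    n = np.tanh(Wx[2 * H:] @ x + Wh[2 * H:] @ (r * h_prev) + b[2 * H:])  # candidate
    # The update gate interpolates between the old state and the candidate,
    # doing the job of the LSTM's separate forget and input gates.
    return z * h_prev + (1.0 - z) * n


def n_params(D, H, n_blocks):
    """Weights plus one bias vector for a cell with `n_blocks` gate/candidate blocks."""
    return n_blocks * (H * (D + H) + H)


D, H = 10, 20
lstm_params = n_params(D, H, 4)  # input, forget, output gates + candidate
gru_params = n_params(D, H, 3)   # update, reset gates + candidate
print(lstm_params, gru_params)   # 2480 1860

rng = np.random.default_rng(7)
W = rng.standard_normal((3 * H, D + H)) * 0.1
b = np.zeros(3 * H)
b[0:H] = 10.0  # push the update gate toward 1 so the old state is carried over
h_prev = rng.uniform(-1.0, 1.0, H)
h_next = gru_step(rng.standard_normal(D), h_prev, W, b)
print(np.max(np.abs(h_next - h_prev)))  # small: the state is mostly kept
```

Because the GRU has no separate cell state, a single interpolation both forgets old content and writes new content. This is where most of its savings over the LSTM come from.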

In summary, GRNNs are a powerful tool for modeling sequential data because they can capture long-term dependencies. Their gating mechanisms let them selectively remember or forget information from past time steps, which allows them to make more accurate predictions on sequential data. The inner workings of GRNNs may look complex at first glance. Understanding these mechanisms, however, helps researchers and practitioners harness their full potential across a variety of applications.
