
Enhancing Sequential Data Analysis with Recurrent Neural Networks

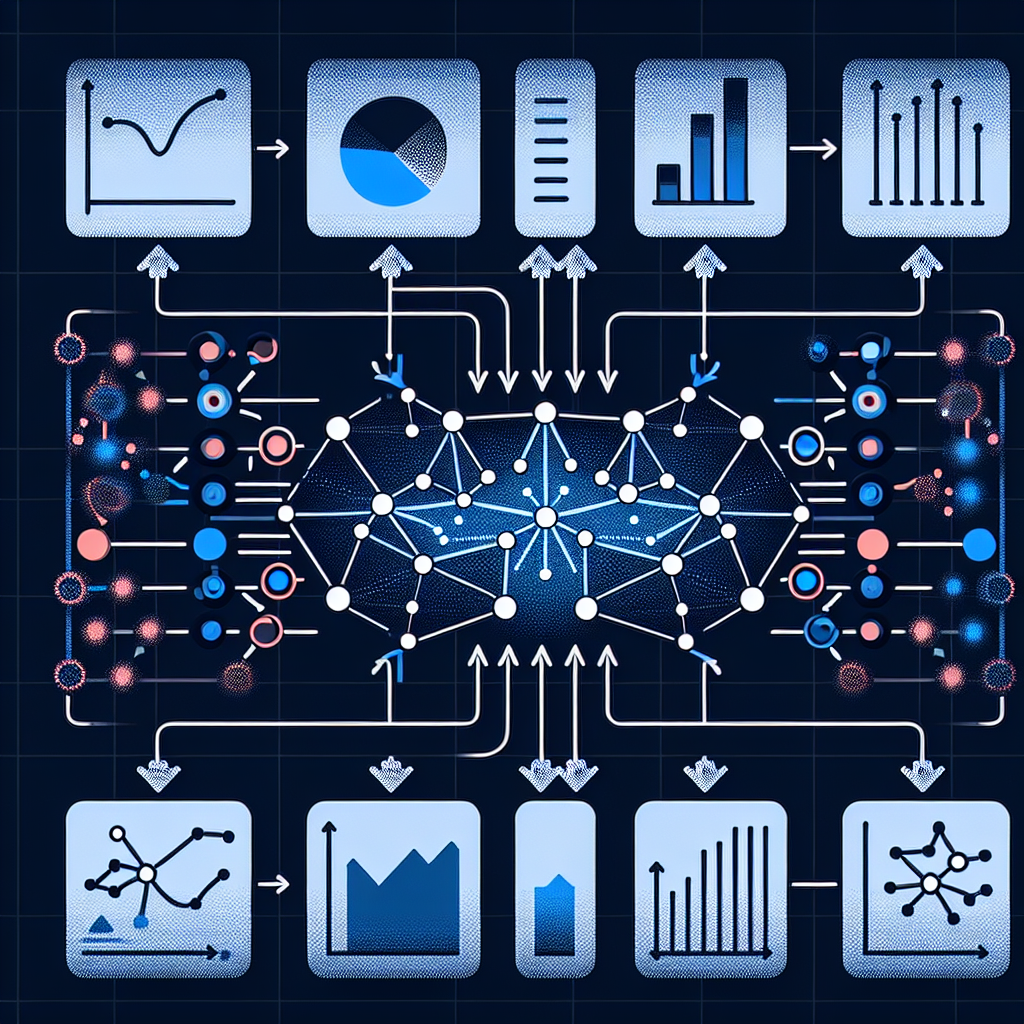

Sequential data analysis is a crucial aspect of machine learning and data science, because many real-world datasets are inherently ordered in time or space. Traditional machine learning models, such as decision trees or support vector machines, treat each example as a fixed-length feature vector with no built-in notion of order, so sequential dependencies must be hand-engineered into the features (for example, as lagged values). Recurrent Neural Networks (RNNs) have emerged as a powerful tool for analyzing sequential data because they model temporal dependencies directly and handle variable-length sequences.

RNNs are a class of neural networks whose connections between units form directed cycles, which lets them maintain an internal state (a memory) that summarizes past inputs. At each time step, the network updates this state from the current input and the previous state, reusing the same weights at every step. Feedforward neural networks, by contrast, process each input independently and carry no state from one input to the next. RNNs have been successfully applied to a wide range of sequential data analysis tasks, including natural language processing, time series prediction, and speech recognition.
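To make the recurrence concrete, here is a minimal sketch of a vanilla (Elman) RNN forward pass, assuming the common update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). It is written in plain Python so it runs without NumPy; the class and helper names (`ElmanRNN`, `matvec`, `random_matrix`) are hypothetical, and the weights are random rather than trained, so it only illustrates how state flows from step to step.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows: int, cols: int, rng: random.Random, scale: float = 0.5) -> Matrix:
    """Small uniform random weights; a real model would learn these by training."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class ElmanRNN:
    """Untrained vanilla RNN: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        self.w_xh = random_matrix(hidden_size, input_size, rng)   # input -> hidden
        self.w_hh = random_matrix(hidden_size, hidden_size, rng)  # hidden -> hidden (the cycle)
        self.b_h = [0.0] * hidden_size

    def step(self, x: Vector, h_prev: Vector) -> Vector:
        """One time step: combine the current input with the previous state."""
        from_input = matvec(self.w_xh, x)
        from_state = matvec(self.w_hh, h_prev)
        return [math.tanh(a + r + b) for a, r, b in zip(from_input, from_state, self.b_h)]

    def forward(self, xs: List[Vector]) -> List[Vector]:
        """Run over a whole sequence, returning the hidden state after each step."""
        h = [0.0] * self.hidden_size  # the memory starts empty
        states = []
        for x in xs:
            h = self.step(x, h)  # the same weights are reused at every step
            states.append(h)
        return states


rnn = ElmanRNN(input_size=2, hidden_size=3)
states = rnn.forward([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
print(len(states), len(states[-1]))  # 3 3
```

Each entry of `states` depends on every input seen so far, because `step` feeds the previous state back in; that feedback is the directed cycle described above.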

One of the key advantages of RNNs is their ability to handle variable-length sequences. Many traditional machine learning models require fixed-size input vectors, which makes them ill-suited to tasks where the length of the input sequence varies. Because an RNN applies the same update at every time step, it can process sequences of arbitrary length, which makes it well suited to tasks such as text generation or sentiment analysis, where the length of the input varies.
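A minimal sketch of why length does not matter: the loop simply runs once per element, and the final hidden state is a fixed-size summary whatever the input length. This assumes scalar inputs and random, untrained weights; `make_step` and `encode` are hypothetical names, and the "review" sequences are made-up numeric stand-ins for, say, per-token features in sentiment analysis.

```python
import math
import random
from typing import Callable, List, Sequence

Step = Callable[[float, List[float]], List[float]]


def make_step(hidden_size: int, seed: int = 0) -> Step:
    """Build an untrained RNN step for scalar inputs (weights are random, not learned)."""
    rng = random.Random(seed)
    w_x = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
    w_h = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)] for _ in range(hidden_size)]

    def step(x: float, h: List[float]) -> List[float]:
        return [
            math.tanh(w_x[i] * x + sum(w_h[i][j] * h[j] for j in range(hidden_size)))
            for i in range(hidden_size)
        ]

    return step


def encode(sequence: Sequence[float], step: Step, hidden_size: int) -> List[float]:
    """Fold a sequence of any length into one fixed-size vector (the final hidden state)."""
    h = [0.0] * hidden_size
    for x in sequence:
        h = step(x, h)  # runs len(sequence) times; the weights never change
    return h


step = make_step(hidden_size=4)
short_review = encode([0.2, -0.1], step, 4)
long_review = encode([0.5] * 30, step, 4)
print(len(short_review), len(long_review))  # 4 4
```

Because both outputs have the same size, a downstream classifier can consume them identically; no padding or truncation of the raw input is needed for this single-sequence case.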

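Another advantage of RNNs is their ability to capture long-term dependencies in sequential data. A k-th order Markov chain conditions only on the previous k states, so it cannot see any dependency that spans more than k steps, and increasing k makes its transition table grow exponentially. An RNN instead summarizes the entire history in its hidden state, so in principle it can carry information across much longer spans and capture complex patterns in the data, although learning such long-range dependencies is difficult in practice.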
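The contrast can be illustrated with a toy example: two histories that differ only in their first symbol. A first-order Markov predictor looks only at the last symbol, so it must treat them identically, while even a tiny RNN's final state still reflects the first symbol. This is a sketch under loud assumptions: the RNN is scalar with hand-picked, untrained weights (`w_x`, `w_h`) and a made-up embedding, and all function names are hypothetical. It shows that the state *can* carry early information, not that training would learn to use it.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def fit_first_order_markov(sequences: List[List[str]]) -> Dict[str, Counter]:
    """Count next-symbol frequencies conditioned only on the current symbol."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def markov_predict(counts: Dict[str, Counter], history: List[str]) -> Optional[str]:
    """Predict the next symbol; only history[-1] is ever consulted."""
    options = counts.get(history[-1])
    return options.most_common(1)[0][0] if options else None


def rnn_final_state(history: List[str], embedding: Dict[str, float],
                    w_x: float = 1.0, w_h: float = 0.9) -> float:
    """Scalar RNN with hand-picked (untrained) weights; h depends on every past symbol."""
    h = 0.0
    for symbol in history:
        h = math.tanh(w_x * embedding[symbol] + w_h * h)
    return h


training = [["a", "b", "b", "b"], ["c", "b", "b", "a"]]
model = fit_first_order_markov(training)
embedding = {"a": 1.0, "b": 0.0, "c": -1.0}

# Two histories that differ only in their first symbol.
h1, h2 = ["a", "b", "b", "b"], ["c", "b", "b", "b"]
print(markov_predict(model, h1) == markov_predict(model, h2))            # True
print(rnn_final_state(h1, embedding) != rnn_final_state(h2, embedding))  # True
```

A k-th order chain with k of at least 4 would also distinguish these two histories here; the point is that the Markov model's reach is fixed by k, whereas the RNN's state is not bounded to a window.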

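However, plain RNNs are hard to train on long-range dependencies. Training uses backpropagation through time, which multiplies the gradient by the derivative of the recurrent step once per time step; over many steps this product tends to shrink toward zero (vanishing gradients) or grow without bound (exploding gradients). To address these issues, several variants of RNNs have been developed, such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs). These variants use gates to control what the state keeps, updates, and forgets, which gives gradients a more stable path through time, and they have been shown to outperform plain RNNs on many tasks.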
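A quick numerical illustration of the vanishing and exploding behavior, under simplifying assumptions: a scalar state, hand-picked weights, a linear recurrence for the exploding case, and a leaky GRU-style update with a fixed, hypothetical gate value `z` standing in for a learned gate. This is not an LSTM or GRU implementation; it only computes the chain-rule product dh_T/dh_0 to show why an additive `(1 - z)` path helps gradients survive many steps.

```python
import math
from typing import List


def linear_rnn_gradient(w: float, steps: int) -> float:
    """d h_T / d h_0 for the scalar linear recurrence h_t = w * h_{t-1} + x_t.

    Each step contributes a factor dh_t/dh_{t-1} = w, so the result is w ** steps.
    """
    grad = 1.0
    for _ in range(steps):
        grad *= w
    return grad


def tanh_rnn_gradient(w: float, u: float, xs: List[float]) -> float:
    """d h_T / d h_0 for h_t = tanh(w * h_{t-1} + u * x_t), starting from h_0 = 0."""
    h, grad = 0.0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        grad *= w * (1.0 - h * h)  # chain rule: tanh'(a) = 1 - tanh(a)^2, times w
    return grad


def gated_rnn_gradient(w: float, u: float, xs: List[float], z: float) -> float:
    """Same, for a leaky GRU-style update h_t = (1 - z) * h_{t-1} + z * tanh(w * h_{t-1} + u * x_t).

    z is a fixed, hypothetical gate value; a real GRU computes its gate from x_t and h_{t-1}.
    """
    h, grad = 0.0, 1.0
    for x in xs:
        c = math.tanh(w * h + u * x)  # candidate state, computed from h_{t-1}
        local = (1.0 - z) + z * w * (1.0 - c * c)  # (1 - z) is a direct path through time
        h = (1.0 - z) * h + z * c
        grad *= local
    return grad


xs = [1.0] * 50
print(linear_rnn_gradient(0.9, 50))  # about 0.005: vanishes
print(linear_rnn_gradient(1.1, 50))  # about 117: explodes
print(tanh_rnn_gradient(0.9, 1.0, xs))
print(gated_rnn_gradient(0.9, 1.0, xs, z=0.05))
```

In the tanh case every factor is at most `w` in magnitude, so with `w = 0.9` the gradient falls below `0.9 ** 50`. In the gated case every factor is at least `1 - z`, so the product stays many orders of magnitude larger over the same 50 steps.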

In conclusion, Recurrent Neural Networks are a valuable tool for analyzing sequential data because they capture temporal dependencies and handle variable-length sequences. By using RNNs and their gated variants such as LSTMs and GRUs, data scientists and machine learning practitioners can model sequential data more effectively and extract new insights from complex datasets.

