Recurrent Neural Networks (RNNs) have become a popular choice for tackling sequential data tasks such as language modeling, speech recognition, and time series prediction. They maintain a hidden state that is updated at every time step and carries information about past inputs, which makes them well suited to tasks that depend on context and temporal dependencies.

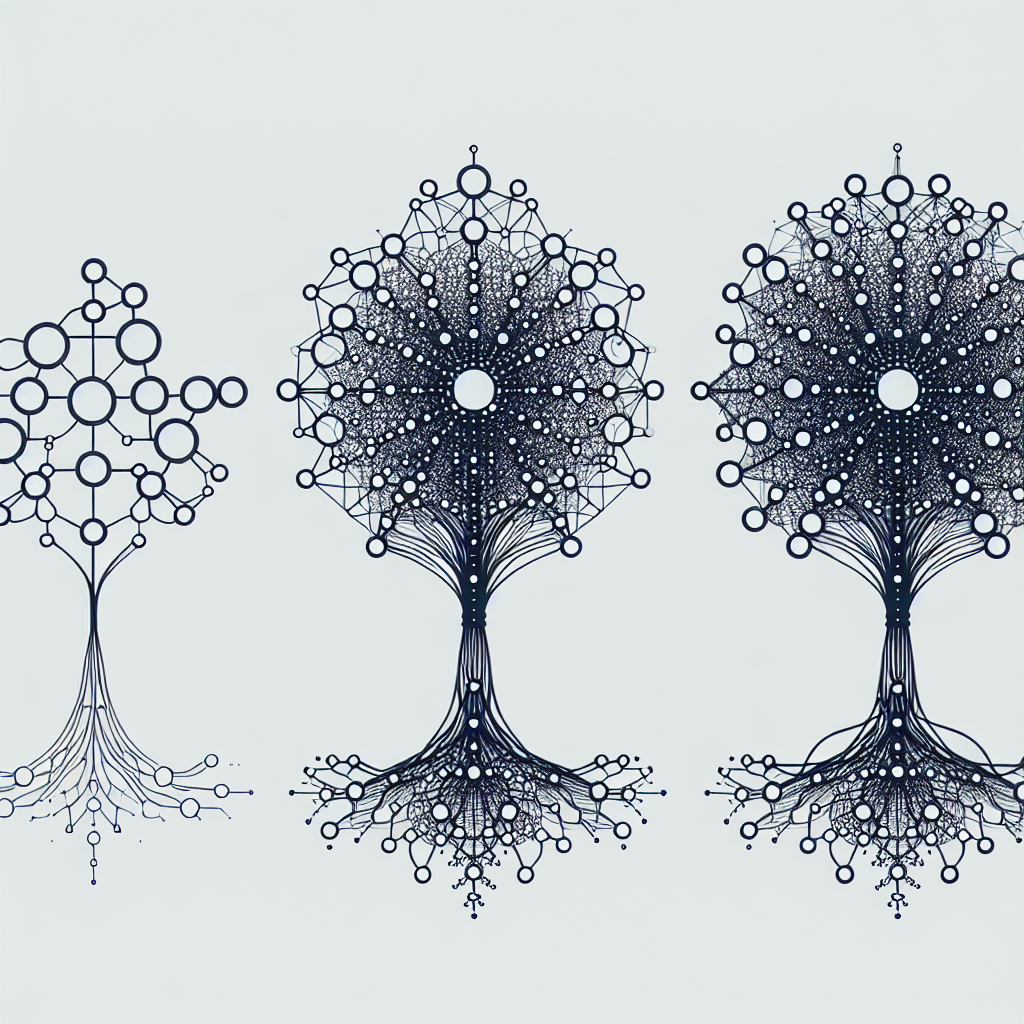

Over the years, researchers have explored various architectures and enhancements to improve the performance and efficiency of RNNs. In this article, we will delve into the evolution of recurrent neural network architectures and discuss some of the key advancements that have shaped the field.

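The vanilla RNN architecture, often called the Elman network after Jeffrey Elman's 1990 simple recurrent network, was one of the earliest widely used forms of RNNs. However, it suffers from the vanishing gradient problem: during backpropagation through time, the gradient is multiplied by the recurrent weight matrix and the derivative of the activation function once per time step, so it tends to shrink exponentially with the distance between related inputs. This makes long-range dependencies hard to learn. To address this issue, researchers introduced the Long Short-Term Memory (LSTM) and, later, the Gated Recurrent Unit (GRU) architectures.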
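
To make the vanishing-gradient argument concrete, here is a minimal NumPy sketch (our own illustration, not code from Elman's paper or from any library). `elman_forward` applies the update `h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)`, and `jacobian_norms` chains the per-step Jacobians `diag(1 - h_t^2) @ W_hh` backwards from the last hidden state. Both function names, the sizes, and the choice to rescale `W_hh` to a spectral norm of 0.5 are assumptions made for the demo.

```python
import numpy as np

def elman_forward(xs, W_xh, W_hh, b_h, h0):
    """Run a vanilla (Elman-style) RNN over xs of shape (T, D); return states (T, H)."""
    h = h0
    states = []
    for x in xs:
        # The same weights are reused at every step.
        h = np.tanh(W_xh @ x + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

def jacobian_norms(states, W_hh):
    """Frobenius norm of d h_{T-1} / d h_t for every t, via the chain rule."""
    T, H = states.shape
    J = np.eye(H)  # d h_{T-1} / d h_{T-1}
    norms = [np.linalg.norm(J)]
    for t in range(T - 1, 0, -1):
        # d h_t / d h_{t-1} = diag(1 - h_t^2) @ W_hh  (tanh' = 1 - tanh^2)
        J = J @ (np.diag(1.0 - states[t] ** 2) @ W_hh)
        norms.append(np.linalg.norm(J))
    return norms[::-1]  # norms[t] is the sensitivity of the last state to h_t

rng = np.random.default_rng(0)
T, D, H = 50, 3, 8
W_xh = rng.normal(scale=0.5, size=(H, D))
W_hh = rng.normal(size=(H, H))
W_hh *= 0.5 / np.linalg.norm(W_hh, 2)  # assumption: rescale to spectral norm 0.5
b_h = np.zeros(H)

xs = rng.normal(size=(T, D))
states = elman_forward(xs, W_xh, W_hh, b_h, np.zeros(H))
norms = jacobian_norms(states, W_hh)
print(f"last step: {norms[-1]:.3f}, first step: {norms[0]:.2e}")
```

Because every per-step factor here has spectral norm at most 0.5, the sensitivity of the final state to the first time step shrinks by at least half per step and is vanishingly small after 50 steps. With larger recurrent weights the same product can instead grow, which is the related exploding-gradient problem.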

LSTM networks, proposed by Hochreiter and Schmidhuber in 1997, introduced a memory cell whose state is updated additively, so information can persist across many time steps. Gates regulate the flow of information into and out of the cell; the forget gate, added by Gers, Schmidhuber, and Cummins in 2000 and now part of the standard LSTM, lets the network selectively discard information it no longer needs. These changes improved the network's ability to learn long-range dependencies and led to significant performance gains in tasks such as language modeling and machine translation.
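
The gating described above can be sketched as a single LSTM time step in NumPy. This follows the now-standard formulation with a forget gate rather than the original 1997 cell. The gate ordering inside the stacked weight matrices, the helper names (`lstm_step`, `run`), and the hand-set bias values are assumptions for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One step of an LSTM cell with a forget gate.

    W: (4H, D), U: (4H, H), b: (4H,). The gate order [input, forget,
    candidate, output] is an assumption of this sketch; libraries differ.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[:H])            # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])       # forget gate: how much old cell state to keep
    g = np.tanh(z[2 * H:3 * H])   # candidate content
    o = sigmoid(z[3 * H:])        # output gate: how much of the cell to expose
    c = f * c_prev + i * g        # additive cell update
    h = o * np.tanh(c)
    return h, c

def run(xs, W, U, b, c0):
    """Run the cell over xs starting from cell state c0; return the final (h, c)."""
    h, c = np.zeros_like(c0), c0
    for x in xs:
        h, c = lstm_step(x, h, c, W, U, b)
    return h, c

rng = np.random.default_rng(0)
T, D, H = 100, 3, 4
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
xs = rng.normal(size=(T, D))
c0 = np.ones(H)

# Hypothetical hand-set biases; a trained network would learn these.
b_keep = np.zeros(4 * H)
b_keep[:H] = -10.0        # input gate nearly closed
b_keep[H:2 * H] = 10.0    # forget gate open: keep the cell state
b_drop = b_keep.copy()
b_drop[H:2 * H] = -10.0   # forget gate closed: discard the cell state

_, c_keep = run(xs, W, U, b_keep, c0)
_, c_drop = run(xs, W, U, b_drop, c0)
print("kept:", np.round(c_keep, 3), "dropped:", np.round(c_drop, 3))
```

With the forget gate held open, the cell state stays close to its initial value of 1 after 100 steps; with it closed, the cell is wiped almost immediately.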

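GRU networks, introduced by Cho et al. in 2014, simplify the LSTM by combining the forget and input gates into a single update gate and merging the cell state into the hidden state; a separate reset gate controls how much of the previous state feeds into the candidate state. With three sets of gate weights instead of four, a GRU layer has about 25% fewer parameters than an LSTM layer of the same size, which makes it cheaper to compute while often performing comparably on sequential data tasks.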
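
A GRU step can be sketched the same way. This follows the formulation in Cho et al.'s paper, where the reset gate is applied to the previous state before the recurrent weights; some libraries, PyTorch among them, apply it after the multiplication instead. The parameter-count helpers make the saving from three weight blocks instead of four explicit. Function names, gate ordering, and the one-bias-per-block convention are assumptions of this sketch.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h_prev, W, U, b):
    """One GRU step in the style of Cho et al. (2014).

    W: (3H, D), U: (3H, H), b: (3H,). Row order [update, reset, candidate]
    is an assumption of this sketch.
    """
    H = h_prev.shape[0]
    a = W @ x + b
    z = sigmoid(a[:H] + U[:H] @ h_prev)                # update gate
    r = sigmoid(a[H:2 * H] + U[H:2 * H] @ h_prev)      # reset gate
    n = np.tanh(a[2 * H:] + U[2 * H:] @ (r * h_prev))  # candidate state
    # No separate cell state: z interpolates between old state and candidate.
    return z * h_prev + (1.0 - z) * n

def lstm_param_count(D, H):
    # Four blocks (input, forget, candidate, output), one bias vector each.
    return 4 * (H * D + H * H + H)

def gru_param_count(D, H):
    # Three blocks (update, reset, candidate), one bias vector each.
    return 3 * (H * D + H * H + H)

rng = np.random.default_rng(0)
D, H = 3, 4
W = rng.normal(scale=0.5, size=(3 * H, D))
U = rng.normal(scale=0.5, size=(3 * H, H))
b = np.zeros(3 * H)
h = np.zeros(H)
for x in rng.normal(size=(20, D)):
    h = gru_step(x, h, W, U, b)

print(lstm_param_count(128, 256), gru_param_count(128, 256))  # 394240 295680
```

Frameworks that keep two bias vectors per block (PyTorch's `nn.LSTM` and `nn.GRU` do, for example) report slightly larger totals, but the 3:4 ratio is the same.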

In recent years, researchers have continued to explore novel architectures and enhancements to further improve the capabilities of RNNs. One notable advancement is the attention mechanism, introduced for neural machine translation by Bahdanau et al. in 2014: at each output step, the network scores every position of the input sequence, turns the scores into weights with a softmax, and uses the weighted average of the encoder states as context. This lets the network focus on the relevant parts of the input when making predictions, and it has been particularly effective in tasks such as machine translation and image captioning.
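
The core of an attention step can be sketched in a few lines: score each encoder state against the current decoder state, normalize the scores with a softmax, and take the weighted average as a context vector. For brevity this sketch uses dot-product scoring; Bahdanau et al.'s original mechanism instead scored positions with a small feed-forward network (additive attention). The function name `attend` and the toy inputs are hypothetical.

```python
import numpy as np

def softmax(scores):
    e = np.exp(scores - np.max(scores))  # subtract the max for numerical stability
    return e / e.sum()

def attend(query, encoder_states):
    """Dot-product attention.

    query: decoder state of shape (H,); encoder_states: shape (T, H).
    Returns (context, weights), where weights sums to 1 over the T positions.
    """
    scores = encoder_states @ query     # one relevance score per input position
    weights = softmax(scores)
    context = weights @ encoder_states  # weighted average of encoder states
    return context, weights

# Toy example: four input positions with orthogonal states; the query is
# aligned with position 2, so most of the weight should land there.
encoder_states = np.eye(4)
query = np.array([0.0, 0.0, 5.0, 0.0])
context, weights = attend(query, encoder_states)
print(np.round(weights, 3))
```

In a full encoder-decoder model, the context vector would be combined with the decoder state to produce the next prediction.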

Another area of research is the development of multi-layer RNN architectures, such as stacked LSTMs or GRUs, in which the sequence of hidden states produced by one recurrent layer becomes the input sequence of the next. By stacking multiple layers of recurrent units, researchers have been able to capture more complex patterns in the data and improve performance in tasks such as sentiment analysis and speech recognition.
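
Stacking amounts to feeding one layer's output sequence into the next layer as its input sequence. The sketch below uses a plain tanh cell as a stand-in for an LSTM or GRU cell to keep it short; the stacking loop is unchanged if either gated cell is swapped in. Layer sizes, initialization scale, and the helper names are assumptions for illustration.

```python
import numpy as np

def rnn_layer(xs, W_xh, W_hh, b_h):
    """Run one tanh RNN layer over xs of shape (T, D_in); return states (T, H)."""
    h = np.zeros(W_hh.shape[0])
    outputs = []
    for x in xs:
        h = np.tanh(W_xh @ x + W_hh @ h + b_h)
        outputs.append(h)
    return np.stack(outputs)

def stacked_rnn(xs, layers):
    """layers: list of (W_xh, W_hh, b_h). Each layer reads the sequence below it."""
    seq = xs
    for W_xh, W_hh, b_h in layers:
        seq = rnn_layer(seq, W_xh, W_hh, b_h)
    return seq

def init_layer(rng, d_in, hidden, scale=0.3):
    # Hypothetical initialization: small Gaussian weights, zero bias.
    return (rng.normal(scale=scale, size=(hidden, d_in)),
            rng.normal(scale=scale, size=(hidden, hidden)),
            np.zeros(hidden))

rng = np.random.default_rng(0)
T, D, H, num_layers = 10, 3, 8, 3
# Only the first layer sees the raw input size; the rest see hidden size H.
layers = [init_layer(rng, D if layer == 0 else H, H) for layer in range(num_layers)]
xs = rng.normal(size=(T, D))
top = stacked_rnn(xs, layers)
print(top.shape)  # (10, 8)
```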

Overall, the evolution of recurrent neural network architectures has been driven by the need to address the limitations of traditional RNNs and improve their performance on a wide range of sequential data tasks. With ongoing research and advancements in the field, RNNs continue to be a powerful tool for modeling temporal dependencies and handling sequential data effectively.
