
From Basic RNNs to Advanced Gated Architectures: A Deep Dive into Recurrent Neural Networks

Recurrent Neural Networks (RNNs) have become one of the most popular and powerful tools in the field of deep learning. They are widely used in a variety of applications, including natural language processing, speech recognition, and time series analysis. In this article, we will explore the evolution of RNNs from basic architectures to advanced gated architectures, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU).

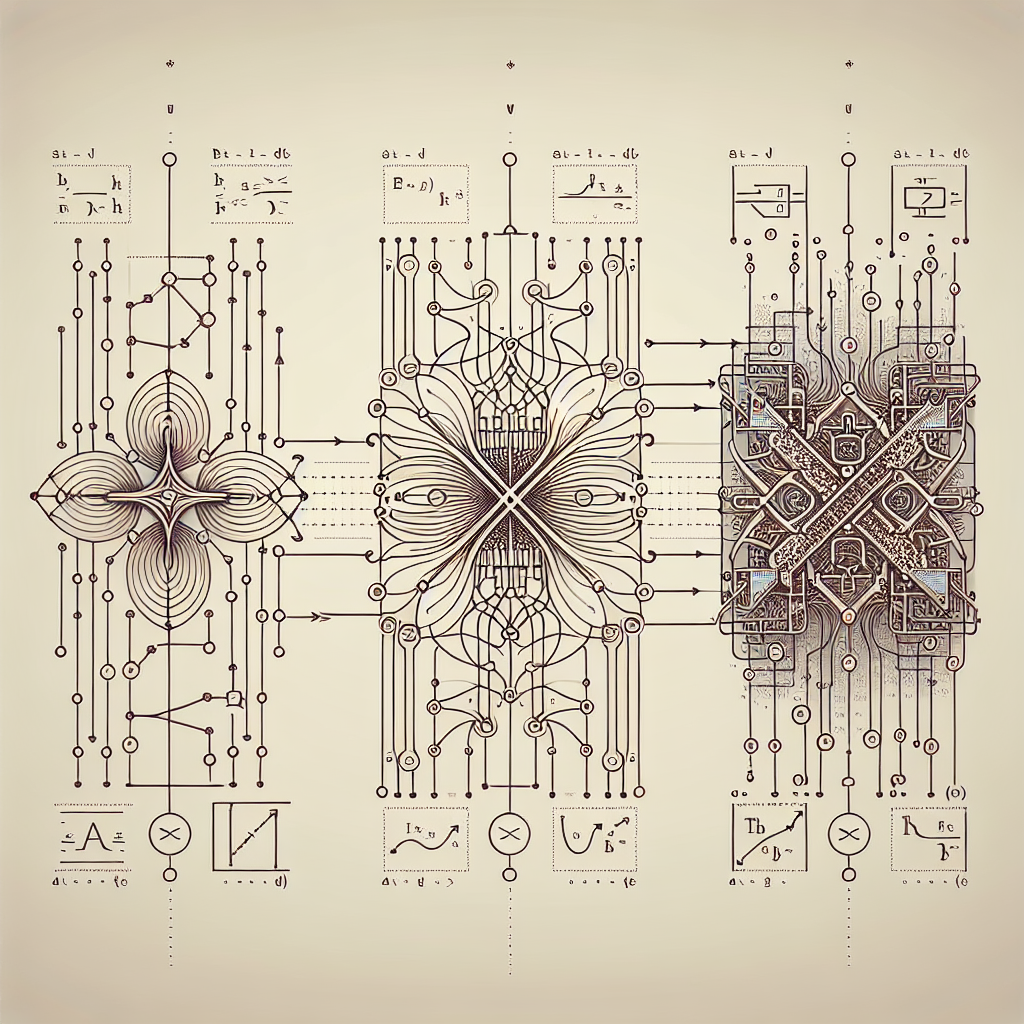

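Basic ("vanilla" or Elman) RNNs are the simplest form of recurrent neural network. A recurrent layer processes an input sequence one element at a time. At each time step it combines the current input with its own hidden state from the previous step to produce a new hidden state. That hidden state is used to compute the output and is also fed back into the next step. While basic RNNs can model sequential data, they suffer from the vanishing gradient problem. During backpropagation through time, the gradient is multiplied by the recurrent Jacobian once per step, so over long sequences it typically shrinks exponentially (or, in some regimes, explodes). This makes it difficult for them to learn long-term dependencies in the data.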
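The vanishing-gradient argument can be made concrete with a minimal sketch. It assumes a scalar (one-unit) RNN with a tanh activation. The function names `rnn_forward` and `grad_wrt_h0` and the weight values are illustrative, not taken from any library.

The code runs the recurrence h_t = tanh(w_h·h_{t-1} + w_x·x_t + b). It then applies the chain rule to obtain ∂h_T/∂h_0, which is a product of one factor w_h·(1 − h_t²) per time step.

```python
import math

def rnn_forward(xs, w_h, w_x, b=0.0, h0=0.0):
    """Scalar Elman RNN: h_t = tanh(w_h * h_{t-1} + w_x * x_t + b).

    Returns the hidden states [h_1, ..., h_T]."""
    hs = []
    h = h0
    for x in xs:
        # The new state depends on the current input and the previous state
        h = math.tanh(w_h * h + w_x * x + b)
        hs.append(h)
    return hs

def grad_wrt_h0(hs, w_h):
    """dh_T/dh_0 via the chain rule (backpropagation through time).

    Each step contributes dh_t/dh_{t-1} = w_h * (1 - h_t**2),
    because the derivative of tanh is 1 - tanh**2."""
    g = 1.0
    for h in hs:
        g *= w_h * (1.0 - h * h)
    return g

if __name__ == "__main__":
    # Longer sequences give exponentially smaller gradients w.r.t. the initial state
    for T in (5, 20, 50):
        hs = rnn_forward([0.5] * T, w_h=0.9, w_x=1.0)
        print(f"T={T:3d}  dh_T/dh_0 = {grad_wrt_h0(hs, w_h=0.9):.3e}")
```

With `w_h = 0.9`, every per-step factor is smaller than 0.9 in magnitude, so the gradient shrinks geometrically as the sequence grows. If the factors instead exceed 1 in magnitude (a large |w_h| with unsaturated activations), the same product can explode.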

To address this issue, gated architectures such as LSTM and GRU were developed. They incorporate gating mechanisms, which are learned values between 0 and 1 that scale signals element-wise. These gates let the network control how information flows through the recurrent units. In an LSTM, each unit has three gates (an input gate, a forget gate, and an output gate). Together they regulate what is written to, kept in, and read from a separate cell state. The cell state is updated additively rather than being squashed through a nonlinearity at every step, so gradients can flow across many time steps. As a result, the network learns long-term dependencies far more effectively, and the vanishing gradient problem is largely mitigated.
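The LSTM gating described above can be sketched as a single time step in plain Python. This is a minimal illustration that assumes one common formulation: input gate `i`, forget gate `f`, output gate `o`, and candidate `g`, with one bias vector per block. The helper names (`affine`, `init_params`, `lstm_step`) and the small uniform initialization are hypothetical choices. Production libraries typically fuse the four weight blocks into a single matrix for speed.

The key line is the cell update c_t = f ⊙ c_{t-1} + i ⊙ g, which is additive.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b, with matrices stored as lists of rows."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + b_j
        for w_row, u_row, b_j in zip(W, U, b)
    ]

def init_params(n_in, n_hid, blocks, seed=0):
    """Hypothetical init: small uniform weights, zero biases, one (W, U, b) per block."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {name: (mat(n_hid, n_in), mat(n_hid, n_hid), [0.0] * n_hid) for name in blocks}

def lstm_step(params, x, h_prev, c_prev):
    """One LSTM time step; returns the new (hidden state, cell state)."""
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]    # input gate: how much to write
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]    # forget gate: how much to keep
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]    # output gate: how much to expose
    g = [math.tanh(z) for z in affine(*params["g"], x, h_prev)]  # candidate cell contents
    # Additive cell update: old memory scaled by f plus new content scaled by i
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

if __name__ == "__main__":
    params = init_params(n_in=3, n_hid=4, blocks=("i", "f", "o", "g"))
    h, c = [0.0] * 4, [0.0] * 4
    for x in ([0.5, -0.2, 0.1], [0.0, 0.3, -0.4]):
        h, c = lstm_step(params, x, h, c)
    print(h, c)
```

When the forget gate saturates near 1 and the input gate near 0, c_t ≈ c_{t-1}. The cell can then carry a value across many steps essentially unchanged, which is the intuition behind the LSTM's better handling of long-term dependencies.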

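Similarly, GRU networks use two gates (a reset gate and an update gate) to control the flow of information, and they merge the cell state into the hidden state. GRUs are simpler than LSTMs. A GRU has three weight blocks (two gates plus the candidate state) instead of the LSTM's four, so it has about 25% fewer parameters for the same input and hidden sizes. This makes it cheaper to compute and often faster to train. LSTMs are sometimes considered more expressive, but empirical comparisons have found that neither architecture consistently outperforms the other; the better choice depends on the task and data.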
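The GRU update and the parameter-count comparison can be sketched the same way. This is a minimal, assumption-laden sketch: sources differ on whether the update gate `z` weights the old state or the candidate. Some implementations also apply the reset gate after the recurrent matrix multiply, and some keep two bias vectors per block, which gives slightly larger parameter counts. This version picks one variant. The helper names (`gru_step`, `count_params`, and so on) are hypothetical.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b, with matrices stored as lists of rows."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + b_j
        for w_row, u_row, b_j in zip(W, U, b)
    ]

def init_params(n_in, n_hid, blocks, seed=0):
    """Hypothetical init: small uniform weights, zero biases, one (W, U, b) per block."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {name: (mat(n_hid, n_in), mat(n_hid, n_hid), [0.0] * n_hid) for name in blocks}

def gru_step(params, x, h_prev):
    """One GRU time step (no separate cell state); returns the new hidden state."""
    z = [sigmoid(v) for v in affine(*params["z"], x, h_prev)]  # update gate
    r = [sigmoid(v) for v in affine(*params["r"], x, h_prev)]  # reset gate
    reset_h = [rj * hj for rj, hj in zip(r, h_prev)]           # reset gate masks the old state
    h_tilde = [math.tanh(v) for v in affine(*params["h"], x, reset_h)]  # candidate state
    # Interpolate between old state and candidate (this variant: z weights the candidate)
    return [(1.0 - zj) * hj + zj * ht for zj, hj, ht in zip(z, h_prev, h_tilde)]

def count_params(n_in, n_hid, n_blocks):
    """Parameters in n_blocks (W, U, b) blocks: 4 for an LSTM, 3 for a GRU."""
    return n_blocks * (n_hid * n_in + n_hid * n_hid + n_hid)

if __name__ == "__main__":
    lstm = count_params(128, 256, 4)
    gru = count_params(128, 256, 3)
    print(lstm, gru, gru / lstm)  # prints 394240 295680 0.75
```

Because both architectures share the same per-block cost, the ratio is exactly 3/4 for any input and hidden size under this formulation.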

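In addition to LSTM and GRU, other recurrent architectures have been proposed in recent years to address specific weaknesses. Clockwork RNNs partition the hidden state into modules that update at different clock rates. Slower modules therefore retain information over longer spans, and the network can model structure at multiple timescales. Depth-Gated RNNs, proposed as an extension of stacked LSTMs, add a depth gate that links the memory cells of adjacent layers. This gate regulates how information flows between layers as well as through time.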
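The clock schedule behind Clockwork RNNs can be sketched in a few lines. In the Clockwork RNN, the hidden layer is split into modules, each assigned a period T_i. Module i is updated at step t only when t mod T_i = 0; otherwise it keeps its previous value.

The sketch below shows only this scheduling and masking, assuming exponentially spaced periods 1, 2, 4, 8. It omits the rule restricting which modules feed into which, and the function names are hypothetical.

```python
def active_modules(t, periods):
    """Indices of the modules that update at step t (module i fires when t % periods[i] == 0)."""
    return [i for i, period in enumerate(periods) if t % period == 0]

def clockwork_update(states, candidates, t, periods):
    """Active modules take their freshly computed candidate; inactive ones keep the old state."""
    active = set(active_modules(t, periods))
    return [cand if i in active else old for i, (old, cand) in enumerate(zip(states, candidates))]

def update_counts(num_steps, periods):
    """How many times each module updates over steps t = 0 .. num_steps - 1."""
    return [sum(1 for t in range(num_steps) if t % period == 0) for period in periods]

if __name__ == "__main__":
    periods = [1, 2, 4, 8]
    print(active_modules(6, periods))   # prints [0, 1]
    print(update_counts(16, periods))   # prints [16, 8, 4, 2]
```

The slowest module changes only every eighth step, so whatever it stores persists across longer spans. This is how the architecture captures structure at several timescales while also doing less computation per step.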

Overall, the evolution of RNNs from basic architectures to advanced gated architectures has significantly improved their ability to model sequential data and learn long-term dependencies. These advanced architectures have become essential tools in the field of deep learning, enabling the development of more powerful and accurate models for a wide range of applications. As research in this area continues to advance, we can expect even more sophisticated and effective architectures to be developed in the future.

