Recurrent Neural Networks (RNNs) have been a popular choice for processing sequential data in machine learning applications. However, standard RNNs have difficulty learning long-term dependencies, that is, relationships between elements that lie many time steps apart in a sequence. Long Short-Term Memory (LSTM) networks, a gated variant of the RNN, were introduced to address this limitation and have since become a widely used model for sequential data processing.

In this comprehensive guide, we will discuss the transition from RNNs to LSTMs and explore the key differences between the two models.

RNNs are designed to process sequential data by maintaining a hidden state that captures information from previous time steps. This hidden state is updated at each time step using the input at that time step and the previous hidden state. While RNNs are effective at capturing short-term dependencies in sequences, they struggle with long-term dependencies due to the vanishing gradient problem. During training, the gradient of the error with respect to an early hidden state is a product of one factor per intervening time step. When those factors are consistently smaller than one in magnitude, the product shrinks roughly exponentially with the distance, so the parameters receive almost no learning signal from inputs far in the past.
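The recurrence and the shrinking gradient can be illustrated with a minimal sketch. It assumes a scalar hidden state, a tanh activation, and hand-picked weights (`w_x`, `w_h`, `b` are illustrative names and values, not taken from any trained model); real RNNs use vectors and weight matrices, but the chain-rule product behaves in the same way.

```python
import math


def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One RNN update: new hidden state from the input and the previous state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)


def run_rnn(inputs, w_x=0.5, w_h=0.9, b=0.0):
    """Run the recurrence and track d h_t / d h_0 at every step.

    By the chain rule, d h_t / d h_0 is the product over steps k of
    d h_k / d h_{k-1} = w_h * (1 - h_k ** 2).
    """
    h = 0.0
    grad = 1.0
    grads = []
    for x_t in inputs:
        h = rnn_step(x_t, h, w_x, w_h, b)
        grad *= w_h * (1.0 - h * h)  # derivative of tanh(z) is 1 - tanh(z)^2
        grads.append(grad)
    return h, grads


if __name__ == "__main__":
    _, grads = run_rnn([1.0] * 50)
    for t in (1, 10, 50):
        print(f"step {t:2d}: d h_t / d h_0 = {grads[t - 1]:.3e}")
```

With these assumed values every factor is below one, so the printed gradient drops by many orders of magnitude between step 1 and step 50. This is the sense in which early inputs stop influencing the weight updates.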

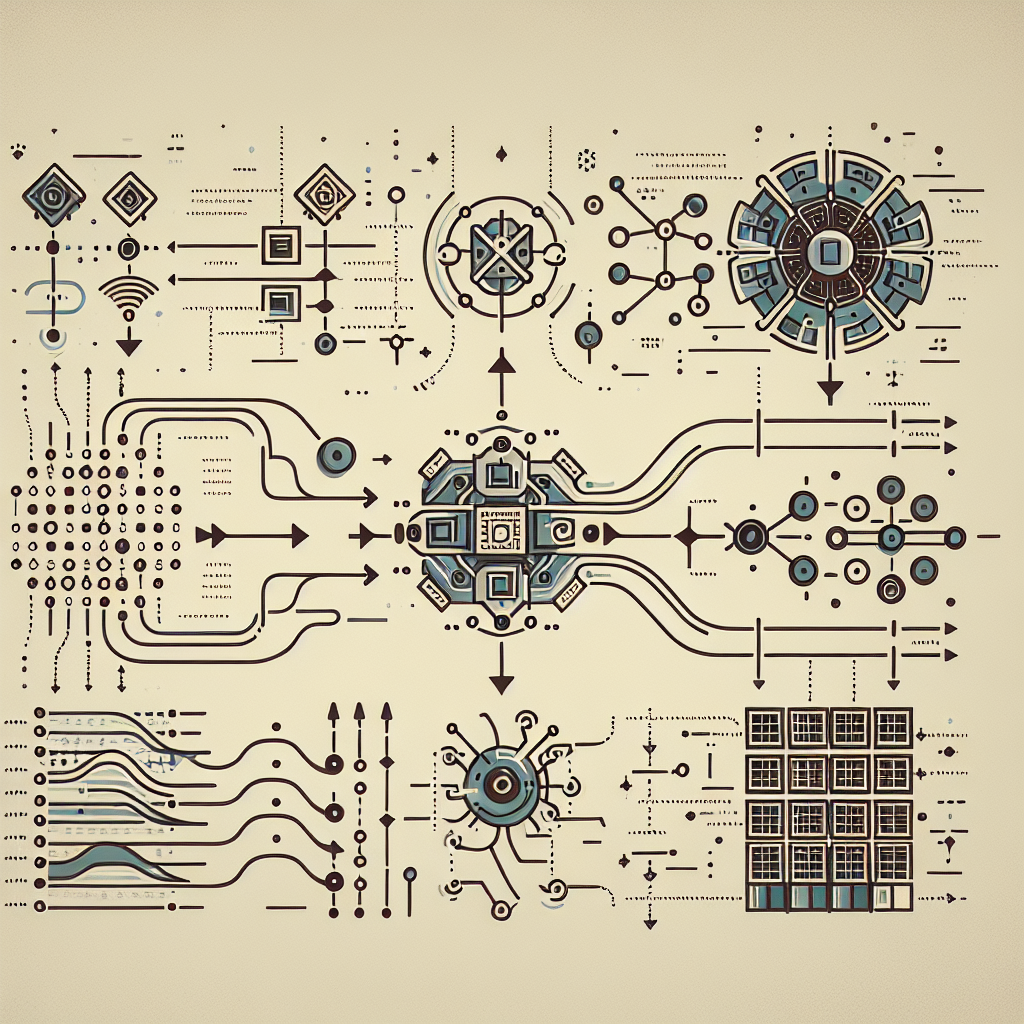

LSTMs were introduced to mitigate the vanishing gradient problem in standard RNNs. An LSTM cell has a more complex structure than a standard RNN unit, with additional gates that control the flow of information within the network. These gates are the input gate, the forget gate, and the output gate, which regulate the flow of information into, within, and out of the LSTM cell. The key innovation of LSTMs is the cell state, a separate memory that is carried from one time step to the next. Because the cell state is updated additively rather than being fully recomputed at every step, the network can preserve information over long spans by selectively updating and forgetting it.

The input gate controls how much of the new input should be added to the cell state, the forget gate determines which information from the previous cell state should be discarded, and the output gate decides how much of the cell state should be used to generate the output at the current time step. By carefully managing the flow of information through these gates, LSTMs can effectively capture long-term dependencies in sequences.
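The gate descriptions above can be sketched as a single cell update. This is an illustrative sketch, not a reference implementation: it assumes scalar states, uses the common formulation with sigmoid gates and a tanh candidate value, and the weights in `PARAMS` are hand-picked (with the recurrent weights set to zero to keep the arithmetic easy to follow) rather than learned.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM update for a scalar input, hidden state, and cell state.

    params maps "i", "f", "o", "g" to an illustrative (w_x, w_h, b) triple.
    """
    def pre(name):
        w_x, w_h, b = params[name]
        return w_x * x_t + w_h * h_prev + b

    i_t = sigmoid(pre("i"))    # input gate: how much new content to write
    f_t = sigmoid(pre("f"))    # forget gate: how much of c_prev to keep
    o_t = sigmoid(pre("o"))    # output gate: how much of the cell to expose
    g_t = math.tanh(pre("g"))  # candidate content computed from the input
    c_t = f_t * c_prev + i_t * g_t  # additive cell-state update
    h_t = o_t * math.tanh(c_t)      # output at the current time step
    return h_t, c_t


# Hypothetical weights: the input gate opens only for large x,
# and the forget gate stays close to 1 at every step.
PARAMS = {
    "i": (12.0, 0.0, -6.0),
    "f": (0.0, 0.0, 6.0),
    "o": (0.0, 0.0, 2.0),
    "g": (2.0, 0.0, 0.0),
}


def run_lstm(inputs, params=PARAMS):
    """Return the cell state after each step of the sequence."""
    h, c = 0.0, 0.0
    cells = []
    for x_t in inputs:
        h, c = lstm_step(x_t, h, c, params)
        cells.append(c)
    return cells


if __name__ == "__main__":
    cells = run_lstm([1.0] + [0.0] * 49)  # one signal, then 49 empty steps
    print(f"cell state after step 1:  {cells[0]:.3f}")
    print(f"cell state after step 50: {cells[-1]:.3f}")
```

In this setup the value written at the first step is only multiplied by the forget gate at each later step, so with a forget gate close to 1 most of it is still present 49 steps later. In a trained LSTM the gate values are learned and depend on the data, so how long information survives varies from case to case.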

In practice, LSTMs often outperform standard RNNs on sequential data processing tasks where distant context matters, including speech recognition, language modeling, and time series forecasting. The ability of LSTMs to capture long-term dependencies makes them particularly well-suited for tasks that involve processing sequences with complex temporal structures.

In conclusion, LSTMs have revolutionized the field of sequential data processing by addressing the limitations of RNNs and enabling the modeling of long-term dependencies in sequences. By understanding the key differences between RNNs and LSTMs, you can choose the right model for your specific application and achieve better performance in sequential data processing tasks.
