From RNNs to LSTMs: An Evolution in Neural Networks

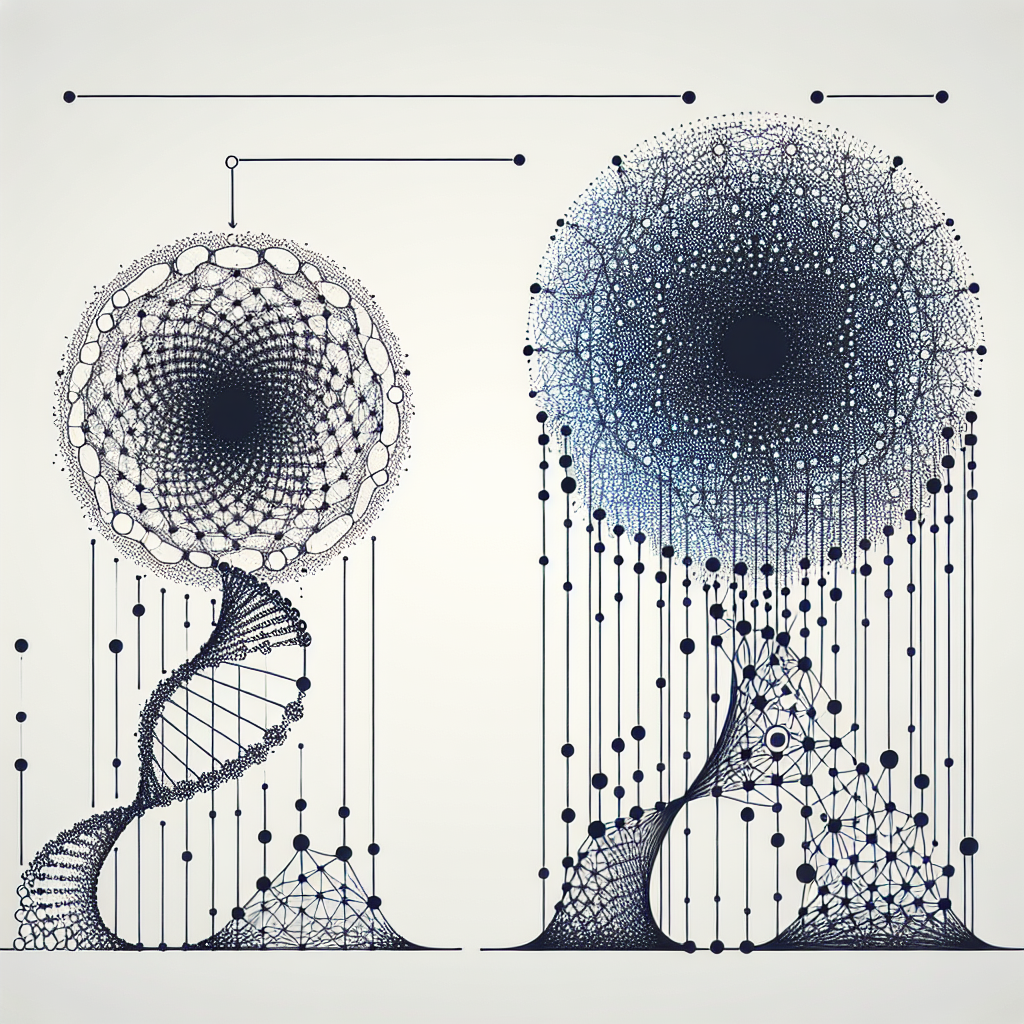

Neural networks for sequential data have come a long way, evolving from simple Recurrent Neural Networks (RNNs) to more sophisticated architectures such as Long Short-Term Memory (LSTM) networks. This evolution has been driven by the need for better performance on tasks such as natural language processing and speech recognition, where the order of the inputs matters.

RNNs were among the earliest neural network architectures designed for sequential data. They capture temporal dependencies by carrying a hidden state from one time step to the next. While RNNs are effective on short sequences, they suffer from the vanishing gradient problem: during backpropagation through time, the gradient is multiplied by a similar factor at every step, so over long sequences it can shrink toward zero. This makes it difficult for them to learn long-range dependencies in data.
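The shrinking gradient can be illustrated with a minimal sketch. It assumes a toy scalar RNN, `h_t = tanh(w * h_{t-1} + x_t)`; the function name `gradient_through_time`, the weight `w = 0.5`, and the constant inputs are hypothetical choices made for illustration, not values from any particular model.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Return |d h_t / d h_0| after each step of a toy scalar RNN.

    The RNN is h_t = tanh(w * h_{t-1} + x_t); everything here is an
    illustrative assumption, not a real model.
    """
    h = h0
    grad = 1.0
    magnitudes = []
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
        magnitudes.append(abs(grad))
    return magnitudes

inputs = [0.1] * 50                      # hypothetical input sequence
mags = gradient_through_time(w=0.5, inputs=inputs)

for step in (1, 10, 25, 50):
    print(f"step {step:2d}: |dh_t/dh_0| = {mags[step - 1]:.3e}")
```

Because each per-step factor has magnitude at most 0.5 in this setup, the product decays geometrically, so the earliest inputs have almost no influence on the gradient after a few dozen steps.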

To address this issue, researchers introduced LSTM networks, a type of RNN with a more complex architecture. LSTMs add a dedicated memory cell that can retain information over many time steps, making them better at capturing long-range dependencies in data. This makes them particularly well-suited for tasks like language modeling, where context from earlier in a sentence is crucial for understanding the meaning of later words.

One of the key innovations in LSTM networks is the introduction of gates, which control the flow of information into and out of the memory cell. The input gate decides how much new information is written to the cell, the forget gate decides how much of the existing cell state is kept, and the output gate decides how much of the cell state is exposed as the hidden state at each time step. By controlling this flow, LSTMs are able to learn complex patterns in data and make more accurate predictions.
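The gating described above can be sketched as a single time step of a scalar LSTM cell. This is a minimal illustration using the commonly cited LSTM update equations, not a production implementation; the function `lstm_step`, the `params` dictionary, and all weight values are hypothetical and hand-picked rather than learned.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a scalar LSTM cell.

    p maps each gate name to hypothetical parameters (w, u, b), so the
    pre-activation of a gate is w * x + u * h_prev + b.
    """
    def pre(name):
        w, u, b = p[name]
        return w * x + u * h_prev + b

    i = sigmoid(pre("input"))        # how much new information to write
    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate value to write

    c = f * c_prev + i * g           # updated memory cell
    h = o * math.tanh(c)             # updated hidden state
    return h, c

# Hand-picked (not learned) parameters: forget gate nearly open,
# input gate nearly closed, so the cell should hold on to its state.
params = {
    "input": (0.0, 0.0, -10.0),
    "forget": (0.0, 0.0, 10.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0                      # start with a stored value of 1.0
for x in [0.3, -0.7, 0.5] * 10:      # 30 hypothetical inputs
    h, c = lstm_step(x, h, c, params)

print(f"cell state after 30 steps: {c:.4f}")
```

With the forget gate close to 1 and the input gate close to 0, the cell state is carried forward almost unchanged, which is the mechanism that lets an LSTM preserve information across many time steps. In a trained network the gate values depend on the input and the previous hidden state rather than on fixed biases.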

LSTMs became a go-to architecture for many sequential data processing tasks. Their ability to capture long-range dependencies, coupled with their effectiveness in mitigating vanishing gradients, made them a popular choice for tasks like machine translation, sentiment analysis, and speech recognition. Researchers continue to explore ways to improve the performance of LSTMs, with ongoing research focusing on optimizing the structure of the memory cell and introducing new types of gates.

Overall, the evolution from RNNs to LSTMs represents a major advancement in the field of neural networks. By addressing the limitations of earlier models and introducing new techniques for capturing long-range dependencies, LSTMs have paved the way for more advanced applications in artificial intelligence. As researchers continue to push the boundaries of neural network design, it is likely that even more sophisticated architectures will be developed in the future, further improving the performance of AI systems.
