
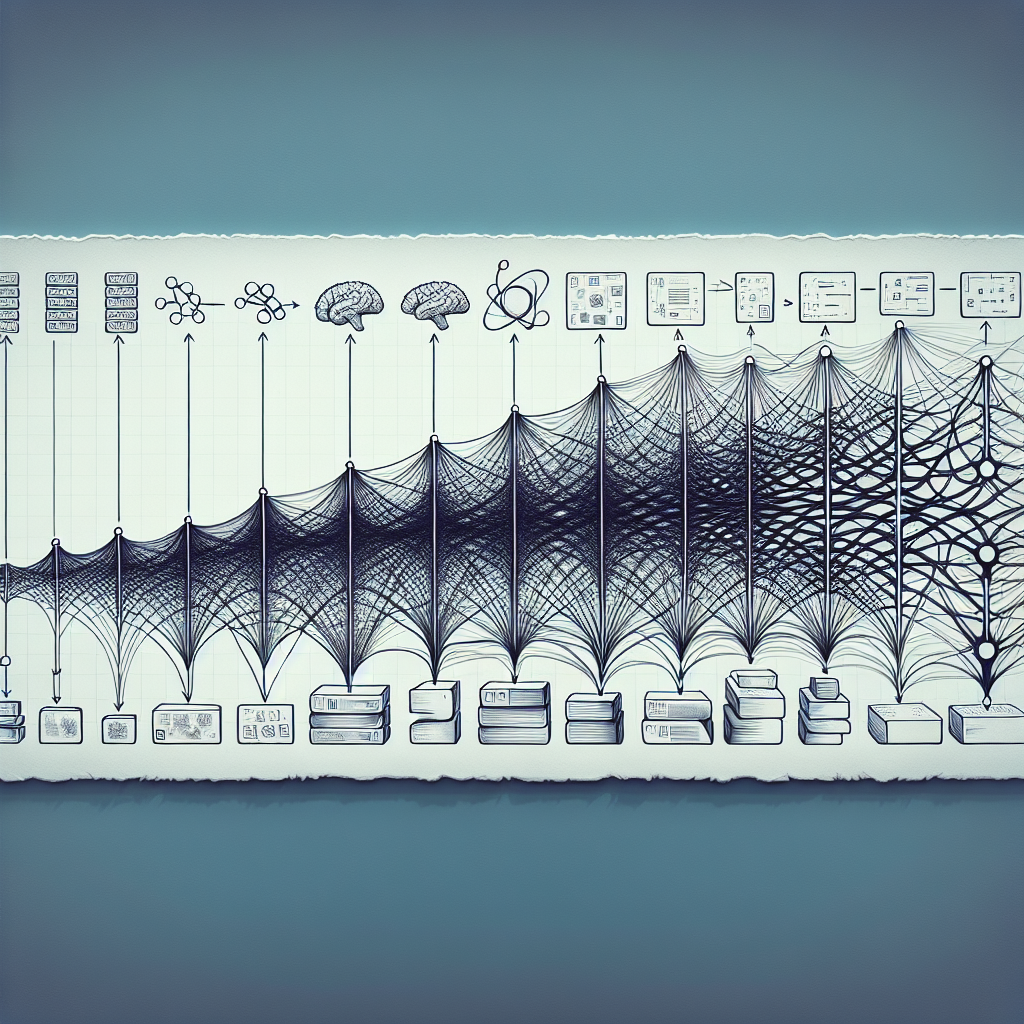

From RNNs to LSTMs: The Evolution of Recurrent Neural Networks

Recurrent Neural Networks (RNNs) have long been a staple of deep learning for processing sequential data such as time series and natural language. As researchers and practitioners explored their capabilities and limitations, one variant became the standard remedy for their main weakness: Long Short-Term Memory networks (LSTMs).

RNNs handle sequential data by maintaining a hidden state that is updated each time a new input is fed into the network. This hidden state lets an RNN capture dependencies between elements of the sequence, which makes it well-suited to tasks such as time series prediction, language modeling, and machine translation. However, RNNs suffer from the vanishing gradient problem: during backpropagation through time the gradient is multiplied by the recurrent weights and the activation derivative at every step, so it tends to shrink exponentially with the distance between two positions. This hinders the network's ability to learn long-range dependencies.
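The recurrence and the shrinking gradient can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the function names `rnn_forward` and `jacobian_norms`, the weight names, the tanh nonlinearity, and the decision to scale `W_hh` to spectral norm 0.5 are all choices made for this example.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run a vanilla tanh RNN over a sequence and return every hidden state."""
    h = np.zeros(W_hh.shape[0])
    hs = []
    for x in xs:
        # The new hidden state mixes the current input with the previous state.
        h = np.tanh(W_xh @ x + W_hh @ h + b_h)
        hs.append(h)
    return np.stack(hs)

def jacobian_norms(hs, W_hh):
    """Spectral norm of d h_last / d h_t for each earlier step t (chain rule)."""
    J = np.eye(W_hh.shape[0])
    norms = []
    for t in range(len(hs) - 1, 0, -1):
        # One-step Jacobian: d h_t / d h_{t-1} = diag(1 - h_t^2) @ W_hh
        J = J @ (np.diag(1.0 - hs[t] ** 2) @ W_hh)
        norms.append(np.linalg.norm(J, 2))
    return norms  # norms[k] looks k + 1 steps back from the last state

# Illustrative setup; sizes and scaling are assumptions for the demo.
rng = np.random.default_rng(0)
D, H, T = 3, 8, 20
W_xh = rng.standard_normal((H, D))
W_hh = rng.standard_normal((H, H))
W_hh *= 0.5 / np.linalg.norm(W_hh, 2)  # force spectral norm 0.5
b_h = np.zeros(H)
xs = rng.standard_normal((T, D))

hs = rnn_forward(xs, W_xh, W_hh, b_h)
norms = jacobian_norms(hs, W_hh)
print(f"1 step back:  {norms[0]:.3e}")
print(f"{T - 1} steps back: {norms[-1]:.3e}")
```

Because the tanh derivative never exceeds 1 and `W_hh` has spectral norm 0.5 in this setup, the norm k steps back is bounded by 0.5 to the power k, so the influence of early inputs on the final state decays exponentially.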

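LSTMs were introduced in 1997 by Hochreiter and Schmidhuber as a solution to the vanishing gradient problem in RNNs. An LSTM is an RNN architecture built around a memory cell whose contents are regulated by gates. The version in common use today has three: the input gate, the forget gate, and the output gate. The forget gate was not part of the 1997 design; it was added shortly afterward by Gers, Schmidhuber, and Cummins. These gates control the flow of information into and out of the memory cell. Because the cell state is updated additively rather than being squashed through a nonlinearity at every step, an LSTM can retain important information over long spans and largely mitigates the vanishing gradient problem.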
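A single step of the gated update described above might look like the following NumPy sketch. The function name `lstm_step`, the stacked weight matrix `W`, and the gate ordering (input, forget, output, candidate) are assumptions made for illustration; real libraries lay out their weights differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4*H, D+H) and b has shape (4*H,)."""
    H = h_prev.shape[0]
    # All four pre-activations are computed from the input and previous state.
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much of the cell to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate values to write into the cell
    c = f * c_prev + i * g       # additive cell-state update
    h = o * np.tanh(c)           # hidden state passed to the next step
    return h, c

# Illustrative usage; sizes and initialization are assumptions for the demo.
rng = np.random.default_rng(0)
D, H = 3, 4
W = 0.1 * rng.standard_normal((4 * H, D + H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x in rng.standard_normal((5, D)):
    h, c = lstm_step(x, h, c, W, b)
print(h.shape, c.shape)
```

When the forget gate is close to 1 and the input gate is close to 0, the line `c = f * c_prev + i * g` carries the cell state forward almost unchanged, which is the path that lets information and gradients survive across many steps.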

The ability to learn long-range dependencies is the key innovation of LSTMs, and it makes them well-suited to tasks that require modeling relationships between distant elements of a sequence. LSTMs have been applied successfully to speech recognition, handwriting recognition, sentiment analysis, language modeling, and machine translation.

LSTMs have also been shown to outperform traditional RNNs on a variety of benchmarks, which has made them a popular choice for researchers and practitioners working with sequential data.

In conclusion, the move from plain RNNs to LSTMs was a significant advance in deep learning. By gating what is written to, kept in, and read from a memory cell, LSTMs capture long-range dependencies that plain RNNs struggle to learn, which makes them a powerful tool for a wide range of applications. As researchers continue to push the boundaries of deep learning, further advances in recurrent architectures are likely in the years to come.

