Recurrent Neural Networks (RNNs) have become a popular choice for tasks involving sequential data, such as natural language processing, time series analysis, and speech recognition. What sets RNNs apart from traditional feedforward neural networks is that they maintain a hidden state that is carried from one time step to the next. As a result, the output at each step can depend on everything the network has seen so far. In practice, plain RNNs struggle to exploit this for long-range dependencies. Gated architectures address the problem by letting the network learn when to update and when to forget information over time.

In this article, we will explore the evolution of gated architectures in RNNs. We start from the basic, ungated RNN cell and move on to more sophisticated variants. We will discuss the motivation behind each design and how these architectures made it practical to train sequence models on long inputs, reshaping sequence modeling in deep learning.

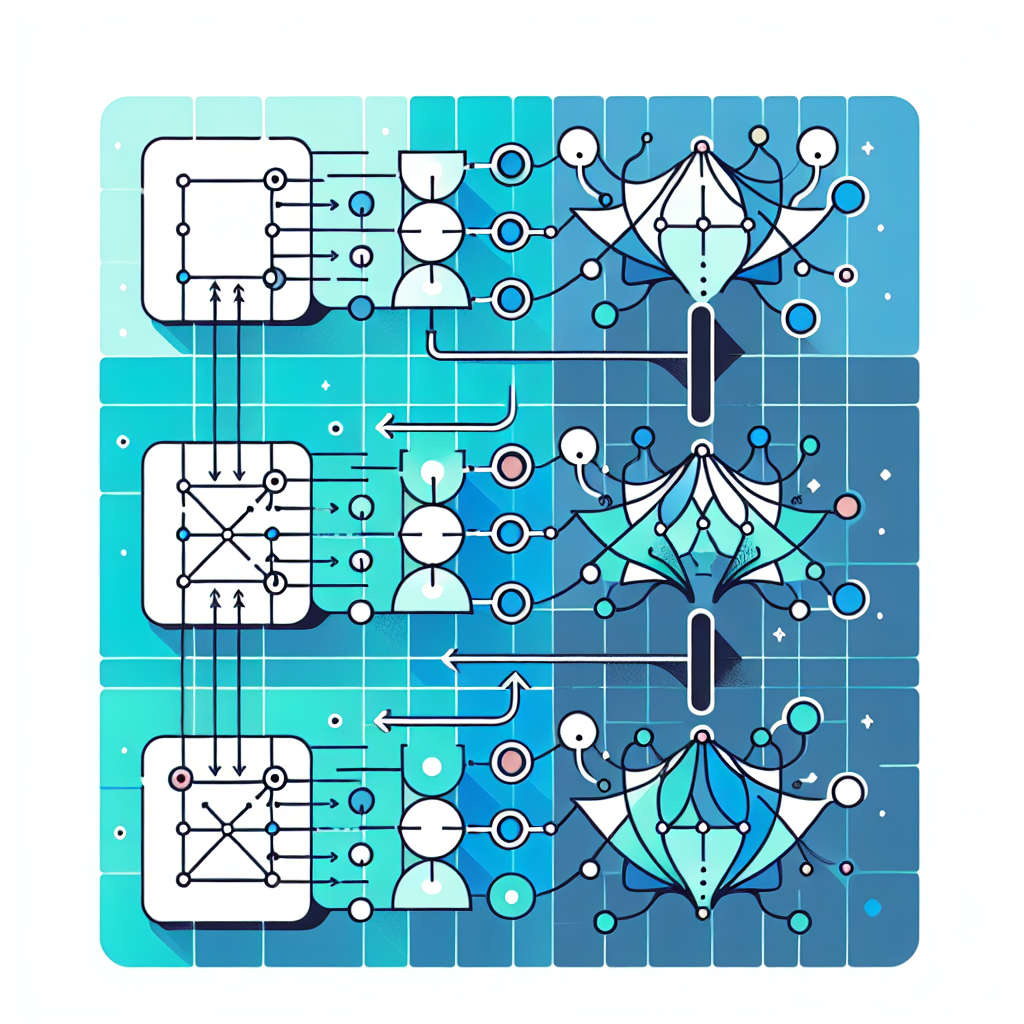

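The starting point is the basic (or "vanilla") RNN cell, which has no gates at all. At each time step, a single recurrent layer combines the current input with the previous hidden state to produce a new hidden state. This architecture works well for short-range dependencies, but it struggles to capture long-term dependencies because of the vanishing gradient problem. During backpropagation through time, the gradient with respect to an early hidden state is a product of one Jacobian per intervening time step. When these factors are small, the product shrinks exponentially with the number of steps, so the network receives almost no learning signal about distant inputs. When they are large, gradients can instead explode.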
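To make the vanishing gradient concrete, here is a minimal NumPy sketch of a vanilla RNN, written for illustration only and not taken from any library. The function names `rnn_forward` and `grad_norm_through_time` are hypothetical. It assumes a tanh activation and computes the norm of the gradient of the last hidden state with respect to the initial state, which is the product of the per-step Jacobians. The recurrent matrix is rescaled to a spectral norm of 0.5, an arbitrary choice. Because each Jacobian factor then has norm at most 0.5, the product after 50 steps is bounded by 0.5^50.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, b_h, h0):
    """Run a vanilla (ungated) RNN over a sequence and return every hidden state."""
    h = h0
    hs = []
    for x in xs:
        # Elman-style update: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)
        h = np.tanh(W_xh @ x + W_hh @ h + b_h)
        hs.append(h)
    return hs

def grad_norm_through_time(hs, W_hh):
    """Spectral norm of dh_T/dh_0 = J_T ... J_1, where J_t = diag(1 - h_t^2) W_hh."""
    J = np.eye(W_hh.shape[0])
    for h in hs:
        # Chain rule: left-multiply by the Jacobian of the next time step.
        J = (np.diag(1.0 - h ** 2) @ W_hh) @ J
    return np.linalg.norm(J, 2)

# Usage: random weights, with W_hh rescaled to spectral norm 0.5 (an arbitrary choice).
rng = np.random.default_rng(0)
n_in, n_h, T = 3, 8, 50
W_xh = rng.normal(size=(n_h, n_in))
W = rng.normal(size=(n_h, n_h))
W_hh = 0.5 * W / np.linalg.norm(W, 2)
hs = rnn_forward(rng.normal(size=(T, n_in)), W_xh, W_hh, np.zeros(n_h), np.zeros(n_h))
for steps in (1, 10, 50):
    print(steps, grad_norm_through_time(hs[:steps], W_hh))
```

The printed norms shrink rapidly as the number of steps grows. If `W_hh` has a spectral norm well above 1, the same product can grow instead. This is the exploding-gradient side of the same problem.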

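To address this problem, researchers introduced the Long Short-Term Memory (LSTM) architecture, which uses multiple gating mechanisms to control the flow of information within the network. An LSTM cell has an input gate, a forget gate, and an output gate, together with a separate cell state. The gates decide what to write into the cell state, what to keep, and what to expose as the hidden state. This lets relevant information persist over long sequences while irrelevant information is discarded. The cell state is updated additively rather than being repeatedly squashed through a nonlinearity, so gradients can flow across many time steps. This substantially mitigates the vanishing gradient problem, although it does not eliminate it entirely, and allows the network to learn long-range dependencies more effectively.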
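A single LSTM time step can be sketched in NumPy as follows. This is a minimal illustration using the common formulation f, i, o = sigmoid(...), g = tanh(...), c_t = f * c_{t-1} + i * g, h_t = o * tanh(c_t). The function name `lstm_step` and the stacking of all four gates into one weight matrix in the order forget, input, candidate, output are our own conventions. Real libraries may order or parameterize the gates differently. The usage example saturates the forget gate near 1 and the input gate near 0 to show that the additive cell-state path can carry a value across 100 steps almost unchanged.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W: (4*n_h, n_h + n_in), b: (4*n_h,).

    Row blocks of W and b follow our own convention: forget, input, candidate, output.
    """
    n_h = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x]) + b
    f = sigmoid(z[0 * n_h:1 * n_h])  # forget gate: how much old cell state to keep
    i = sigmoid(z[1 * n_h:2 * n_h])  # input gate: how much of the candidate to write
    g = np.tanh(z[2 * n_h:3 * n_h])  # candidate values
    o = sigmoid(z[3 * n_h:4 * n_h])  # output gate: how much cell state to expose
    c = f * c_prev + i * g           # additive cell-state update
    h = o * np.tanh(c)
    return h, c

# Usage: zero weights with biases that force f ~ 1 and i ~ 0.
n_in, n_h = 3, 4
W = np.zeros((4 * n_h, n_h + n_in))
b = np.zeros(4 * n_h)
b[0:n_h] = 20.0          # forget gate saturates near 1
b[n_h:2 * n_h] = -20.0   # input gate saturates near 0
c0 = np.array([1.0, -2.0, 0.5, 3.0])
h, c = np.zeros(n_h), c0.copy()
rng = np.random.default_rng(0)
for x in rng.normal(size=(100, n_in)):
    h, c = lstm_step(x, h, c, W, b)
print(np.allclose(c, c0, atol=1e-6))  # True
```

Here the derivative of c_t with respect to c_{t-1} is simply the forget gate f. When f stays close to 1, the gradient along the cell state does not shrink the way it does in the vanilla RNN.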

Building on the success of LSTM, researchers developed the Gated Recurrent Unit (GRU). The GRU simplifies the LSTM by merging the forget and input gates into a single update gate, adding a reset gate, and combining the cell state and hidden state into one vector. With three gated transformations instead of four, a GRU layer has roughly three quarters of the parameters of an LSTM layer of the same size, which reduces computation and memory per time step. Empirically, GRUs often perform comparably to LSTMs on sequential tasks, and their lower cost makes them a popular choice for many applications. However, neither architecture is consistently better across all tasks.
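The sketch below shows one GRU step in NumPy along with a parameter count for comparison with the LSTM. It follows the formulation h_t = (1 - z) * h_{t-1} + z * h̃, where h̃ = tanh(W_h [r * h_{t-1}, x] + b_h). Some libraries swap the roles of z and 1 - z, so treat this convention as an assumption. The names `gru_step` and `count_params` are hypothetical. The parameter count assumes one bias vector per gate, as in this sketch, while some library implementations use two.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h_prev, W_zr, b_zr, W_h, b_h):
    """One GRU step. W_zr: (2*n_h, n_h + n_in), W_h: (n_h, n_h + n_in)."""
    n_h = h_prev.shape[0]
    zr = sigmoid(W_zr @ np.concatenate([h_prev, x]) + b_zr)
    z, r = zr[:n_h], zr[n_h:]  # update gate, reset gate
    # The reset gate controls how much of the old state feeds the candidate.
    h_tilde = np.tanh(W_h @ np.concatenate([r * h_prev, x]) + b_h)
    # The update gate interpolates between the old state and the candidate.
    return (1.0 - z) * h_prev + z * h_tilde

def count_params(n_in, n_h, n_blocks):
    """Weights plus one bias per gated block: 4 blocks for LSTM, 3 for GRU."""
    return n_blocks * (n_h * (n_h + n_in) + n_h)

print(count_params(3, 4, 4), count_params(3, 4, 3))  # 128 96
```

The 4:3 ratio holds for any layer size under this parameterization, which is where the GRU's savings in memory and per-step computation come from.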

In recent years, the Transformer model has moved beyond gated recurrence altogether. Instead of passing a hidden state from step to step, it uses self-attention, in which every position in a sequence attends directly to every other position. This connects any two positions within a single layer rather than through a long chain of recurrent updates, and it allows all positions to be processed in parallel. Transformers have achieved state-of-the-art performance on a wide range of natural language processing tasks, demonstrating the power of attention-based mechanisms in sequence modeling.
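The core operation of the Transformer is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. Below is a minimal single-head NumPy sketch. It omits masking, positional encodings, multiple heads, and the feed-forward sublayers of a full Transformer, and the projection matrices `W_q`, `W_k`, `W_v` are illustrative names. Note that the code has no loop over time steps: all positions are handled in one matrix product, which is the parallelism described above.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over X with shape (T, d_model)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)  # (T, T): every position scored against every other
    A = softmax(scores, axis=-1)     # each row is a distribution over positions
    return A @ V, A

# Usage: a sequence of 5 positions with 8 features each.
rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))
out, A = self_attention(X, rng.normal(size=(8, 4)), rng.normal(size=(8, 4)), rng.normal(size=(8, 4)))
print(out.shape, A.shape)  # (5, 4) (5, 5)
```

Position 0 can draw directly on position 4 through `A[0, 4]`, with no intermediate hidden states in between.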

In conclusion, gated architectures have played a crucial role in advancing the capabilities of RNNs in modeling sequential data. The progression from the basic RNN cell to LSTM and GRU, and then to attention-based models such as the Transformer, has enabled deep learning models to learn complex patterns and dependencies in data more effectively. By understanding this evolution, researchers and practitioners can choose the right architecture and build more powerful and efficient neural network models for a variety of applications.
