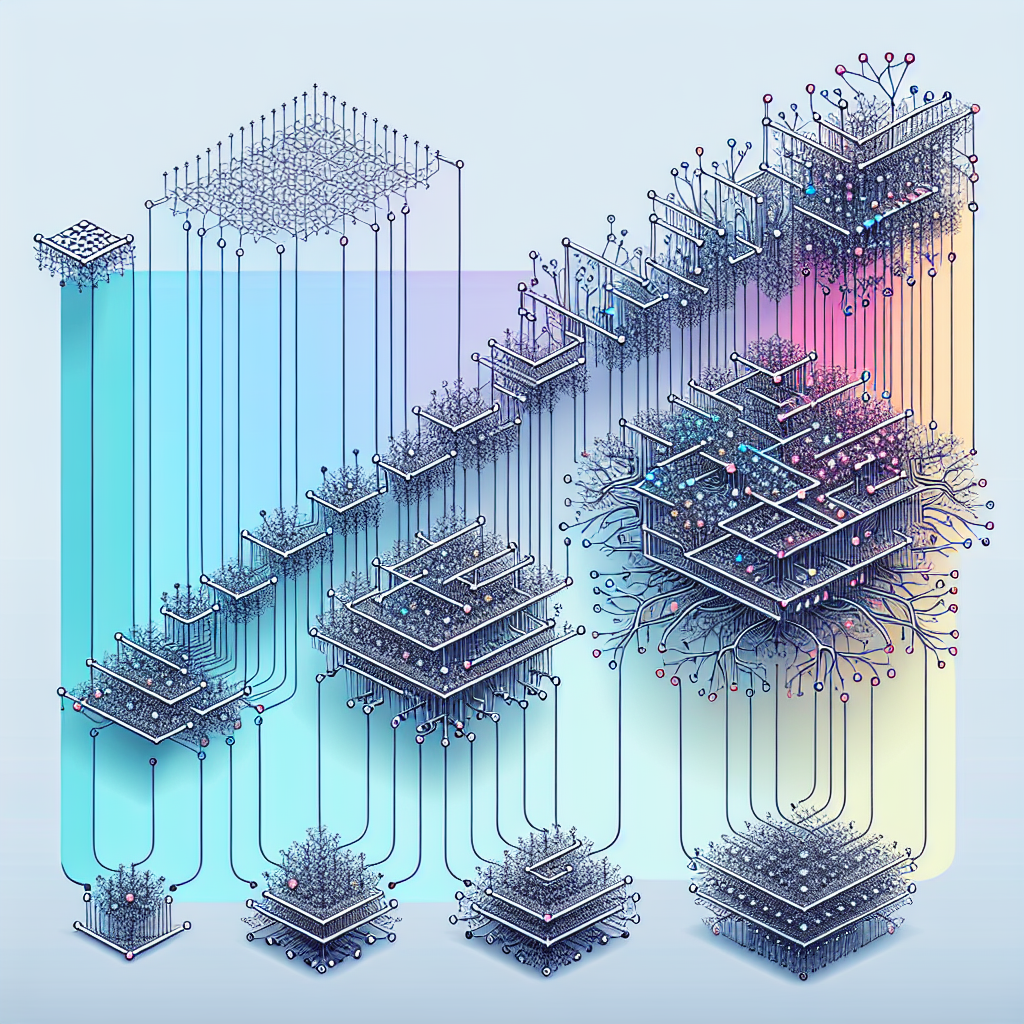

Recurrent Neural Networks (RNNs) are a family of models for sequential data: they read an input one step at a time and carry a hidden state forward, so earlier elements can influence later predictions. Over the years, RNN architectures have evolved from simple recurrent layers to gated designs that train more reliably and capture longer-range structure in sequences.

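Among the earliest widely used RNN architectures was the Elman network (Elman, 1990), which consists of a single hidden layer whose previous activations are fed back as extra input at the next time step, allowing information to persist over time. These simple RNNs work well on short sequences, but they struggle with long-term dependencies. When the error signal is backpropagated through many time steps, it is multiplied repeatedly by the recurrent weights and the activation derivatives, so it tends to shrink toward zero. This is the vanishing gradient problem.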
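As a rough illustration of the recurrence and of why gradients vanish, here is a minimal sketch in plain Python. The names `elman_step`, `run_sequence`, and `scalar_gradient_through_time` are hypothetical helpers chosen for this example, and the update rule assumed is the common textbook form `h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)`.

```python
import math

def elman_step(x, h_prev, W_xh, W_hh, b_h):
    """One Elman update: h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b_h)."""
    h = []
    for row_x, row_h, bias in zip(W_xh, W_hh, b_h):
        pre = bias
        pre += sum(w * xj for w, xj in zip(row_x, x))       # input contribution
        pre += sum(w * hj for w, hj in zip(row_h, h_prev))  # feedback loop
        h.append(math.tanh(pre))
    return h

def run_sequence(xs, h0, W_xh, W_hh, b_h):
    """Feed a whole sequence through the cell, returning every hidden state."""
    states, h = [], h0
    for x in xs:
        h = elman_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

def scalar_gradient_through_time(w, xs, h0=0.0):
    """d h_T / d h_0 for a one-unit RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # chain rule factor: d h_t / d h_{t-1}
    return grad

if __name__ == "__main__":
    # The same recurrent weight, unrolled over 5 steps and then over 50 steps.
    print(scalar_gradient_through_time(0.5, [0.1] * 5))
    print(scalar_gradient_through_time(0.5, [0.1] * 50))
```

Each factor in the product has magnitude at most `|w|`, so with `|w| < 1` the gradient shrinks at least geometrically with sequence length. That is the one-unit version of the vanishing gradient problem.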

To address these limitations, gated RNN architectures were developed. The most notable is the Long Short-Term Memory (LSTM) network, proposed by Hochreiter and Schmidhuber in 1997. An LSTM adds a separate cell state that is updated additively and is controlled by learned gates, which decide at each step what to write, what to keep, and what to expose to the rest of the network. Because the cell state can pass through many steps nearly unchanged, gradients survive over much longer spans, which lets LSTMs handle long-term dependencies far better than simple RNNs.
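The gating idea can be sketched as a single LSTM step in plain Python. This follows the formulation commonly used today, which includes a forget gate, rather than any specific library's implementation. The helper names and the parameter dictionary keys (`"W_f"`, `"b_f"`, and so on) are assumptions made for this example.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def affine(W, v, b):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(w * a for w, a in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(x, h_prev, c_prev, p):
    """One LSTM step; p maps hypothetical names such as "W_f" to weights."""
    z = h_prev + x  # list concatenation: [h_prev; x]
    f = [sigmoid(a) for a in affine(p["W_f"], z, p["b_f"])]    # forget gate
    i = [sigmoid(a) for a in affine(p["W_i"], z, p["b_i"])]    # input gate
    o = [sigmoid(a) for a in affine(p["W_o"], z, p["b_o"])]    # output gate
    g = [math.tanh(a) for a in affine(p["W_g"], z, p["b_g"])]  # candidate values
    # Additive cell update: keep part of the old cell, write part of the candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c
```

When the forget gate is close to 1 and the input gate is close to 0, the cell state is copied forward almost unchanged, which is what lets information and gradients persist across many steps.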

Another significant development was the Gated Recurrent Unit (GRU), introduced by Cho et al. in 2014 as a simplified alternative to the LSTM. A GRU merges the cell state and hidden state into one vector and uses two gates (update and reset) instead of three, so it has fewer parameters than an LSTM of the same hidden size. That makes it cheaper to compute, and in practice it often performs comparably on sequential data tasks.
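To make the parameter comparison concrete, here is a minimal sketch of one GRU step together with a parameter count. It assumes one weight matrix and one bias vector per gate or candidate block, and the interpolation convention `h = (1 - z) * h_prev + z * candidate`; some papers and libraries swap the roles of `z` and `1 - z` or use two bias vectors, so exact counts vary. The names `gru_step` and `gated_param_count` are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def affine(W, v, b):
    """Return W @ v + b for a list-of-rows matrix W."""
    return [sum(w * a for w, a in zip(row, v)) + bias for row, bias in zip(W, b)]

def gru_step(x, h_prev, p):
    """One GRU step; p maps hypothetical names such as "W_z" to weights."""
    hx = h_prev + x  # list concatenation: [h_prev; x]
    z = [sigmoid(a) for a in affine(p["W_z"], hx, p["b_z"])]  # update gate
    r = [sigmoid(a) for a in affine(p["W_r"], hx, p["b_r"])]  # reset gate
    gated = [rk * hk for rk, hk in zip(r, h_prev)] + x        # [r * h_prev; x]
    cand = [math.tanh(a) for a in affine(p["W_h"], gated, p["b_h"])]
    # Interpolate between the old state and the candidate state.
    return [(1.0 - zk) * hk + zk * ck for zk, hk, ck in zip(z, h_prev, cand)]

def gated_param_count(input_size, hidden_size, n_blocks):
    """Weights plus biases for n_blocks affine maps over [h_prev; x]."""
    per_block = hidden_size * (hidden_size + input_size) + hidden_size
    return n_blocks * per_block

# Illustrative sizes only: an LSTM has 4 such blocks, a GRU has 3.
lstm_params = gated_param_count(128, 256, n_blocks=4)
gru_params = gated_param_count(128, 256, n_blocks=3)
```

Under these assumptions a GRU has three quarters of the parameters of an LSTM with the same input and hidden sizes.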

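More recently, attention mechanisms and Transformer networks have changed how sequences are modeled. Attention was first added on top of recurrent encoder-decoder models, letting the decoder focus on the most relevant parts of the input sequence at each output step instead of relying on a single fixed-size summary. The Transformer (Vaswani et al., 2017) then dropped recurrence altogether and relies on attention alone, which allows all positions to be processed in parallel. Strictly speaking, Transformers are a successor to RNNs rather than an RNN architecture, but they grew out of the same line of work and have revolutionized natural language processing, improving performance on tasks such as machine translation and text generation.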
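The idea of focusing on specific parts of the input can be sketched with scaled dot-product attention for a single query, the form used in Transformer networks. This is a plain-Python illustration under simplifying assumptions (one query, no learned projections, no masking), and the function names `softmax` and `attend` are chosen for this example.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Returns the weighted average of `values` and the attention weights.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return context, weights
```

A query that matches one key much more strongly than the others puts nearly all of its weight on that position, so the output is close to the corresponding value. A query that matches every key equally returns a plain average of the values.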

Overall, the evolution of RNN architectures from simple recurrent layers to gated designs has significantly improved how well these networks process sequential data. Research in this area is ongoing, so further architectural refinements are likely to keep extending what these networks can achieve.
