Recurrent Neural Networks (RNNs) have become a popular choice for many tasks in the field of machine learning and artificial intelligence. These networks are designed to handle sequential data, making them ideal for tasks like speech recognition, language modeling, and time series forecasting. Over the years, researchers have developed various architectures to improve the performance and capabilities of RNNs.

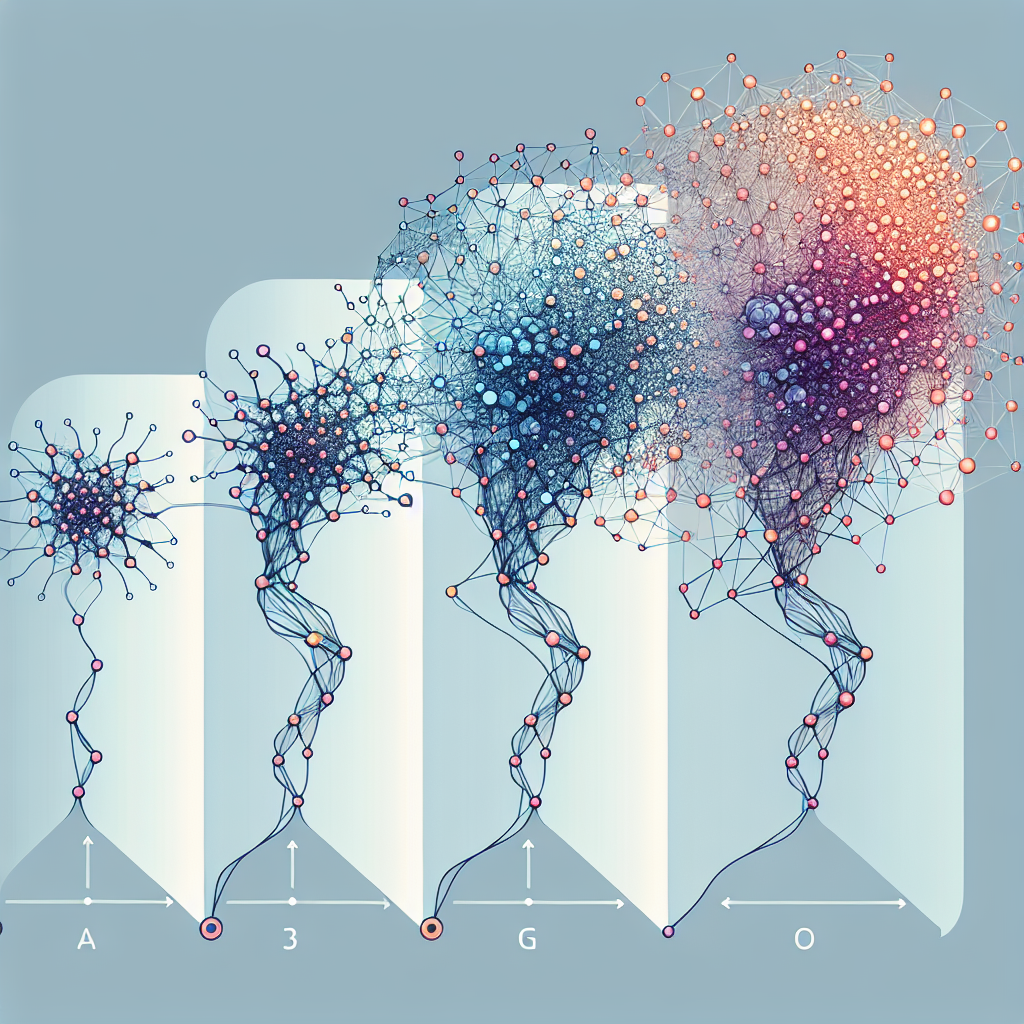

RNN architectures have evolved from simple to more complex designs, each addressing limitations of its predecessors. One of the earliest is the vanilla RNN, which consists of a single layer of recurrent units that update a hidden state at each time step from the current input and the previous hidden state. While this architecture works well on short sequences, it suffers from vanishing or exploding gradients: backpropagation through time multiplies the gradient by a similar factor at every step, so over long sequences it shrinks toward zero or grows without bound, which makes training difficult.
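The vanishing-gradient effect can be seen in a toy setting. The following is a minimal sketch, assuming a scalar vanilla RNN with the update `h_t = tanh(w_h * h_{t-1} + w_x * x_t)`; the function names and the weight values are illustrative assumptions, not taken from any particular library.

```python
import math

def rnn_forward(xs, w_h, w_x, h0=0.0):
    """Run a scalar vanilla RNN and return all hidden states, starting with h0."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w_h * hs[-1] + w_x * x))
    return hs

def grad_last_wrt_first(hs, w_h):
    """Chain rule through time: d h_T / d h_0 = product over t of w_h * (1 - h_t^2)."""
    grad = 1.0
    for h in hs[1:]:
        grad *= w_h * (1.0 - h * h)
    return grad

# Illustrative (hand-picked) weights; |w_h| < 1 makes every factor smaller than 1.
w_h, w_x = 0.9, 0.3
g_short = grad_last_wrt_first(rnn_forward([0.5] * 5, w_h, w_x), w_h)
g_long = grad_last_wrt_first(rnn_forward([0.5] * 50, w_h, w_x), w_h)

print(f"gradient over 5 steps:  {g_short:.3e}")
print(f"gradient over 50 steps: {g_long:.3e}")
```

Because each factor in the product has magnitude below 1 here, the gradient over 50 steps is far smaller than over 5 steps. With a recurrent weight whose factors exceed 1 in magnitude, the same product would grow instead, which is the exploding case.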

To address this issue, researchers introduced the Long Short-Term Memory (LSTM) and, later, the Gated Recurrent Unit (GRU) architectures. These models incorporate gating mechanisms that control what is kept, overwritten, and exposed at each step, which allows them to learn long-range dependencies in sequences more effectively. LSTM, in particular, has become a popular choice for many applications because its separate cell state can carry information over long periods of time.
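To make the gating idea concrete, here is a minimal sketch of one LSTM step, assuming scalar inputs and states for readability. The `lstm_step` helper and the parameter values are hypothetical and hand-picked rather than learned; real implementations use weight matrices and vector states.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step. p maps a gate name to (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep
    i = sigmoid(pre("input"))        # how much of the new candidate to write
    o = sigmoid(pre("output"))       # how much of the cell state to expose
    g = math.tanh(pre("candidate"))  # candidate value computed from the input
    c = f * c_prev + i * g           # additive cell-state update
    h = o * math.tanh(c)
    return h, c

# Hand-picked parameters (an assumption for illustration):
# forget gate almost open, input gate almost closed.
params = {
    "forget": (0.0, 0.0, 6.0),
    "input": (0.0, 0.0, -6.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
}

h, c = 0.0, 1.0  # start with a value stored in the cell state
for x in [0.3, -0.8, 0.5] * 10:  # 30 time steps of arbitrary input
    h, c = lstm_step(x, h, c, params)

print(f"cell state after 30 steps: {c:.3f}")
```

With the forget gate near 1 and the input gate near 0, the cell state stays close to its initial value across 30 steps. The additive update `c = f * c_prev + i * g` is what lets information persist, in contrast to the repeated squashing in a vanilla RNN.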

More recently, researchers have moved beyond recurrence with attention mechanisms and the Transformer. Attention was first added to RNN encoder-decoder models; the Transformer drops recurrence entirely and relies on self-attention to capture relationships between different parts of a sequence. Because every position can attend directly to every other position, these models handle long-range dependencies more efficiently and can process a sequence in parallel. The Transformer architecture, in particular, has achieved state-of-the-art performance in tasks like machine translation and language modeling.
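The core of self-attention can be sketched in a few lines. This is a simplified illustration, assuming identity projections (queries, keys, and values are all the raw input vectors); an actual Transformer layer also uses learned projection matrices, multiple heads, and positional information, all omitted here. The function names are illustrative.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs):
    """Scaled dot-product self-attention with queries = keys = values = xs."""
    d = len(xs[0])
    outputs, weights = [], []
    for q in xs:
        # Similarity of this position to every position, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs]
        w = softmax(scores)
        weights.append(w)
        # Output is the attention-weighted average of all value vectors.
        outputs.append([sum(w_j * v[i] for w_j, v in zip(w, xs)) for i in range(d)])
    return outputs, weights

# Toy sequence: positions 0 and 2 hold the same vector, position 1 differs.
seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
outputs, attn = self_attention(seq)

for row in attn:
    print([round(w, 3) for w in row])
```

Position 0 gives equal weight to itself and to position 2, and less weight to position 1, regardless of how far apart the positions are. That direct, distance-independent connection is what distinguishes attention from step-by-step recurrence.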

Despite the advancements in RNN architectures, researchers continue to explore new designs and techniques to further improve their capabilities. One promising direction is the use of hybrid architectures that combine RNNs with other types of neural networks, such as convolutional or graph neural networks. These hybrid models can leverage the strengths of different architectures to achieve better performance on a wide range of tasks.
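As a rough illustration of the hybrid idea, the sketch below chains a 1-D convolution (local feature extraction) into a scalar recurrent layer (sequence summary). The kernel, the weights, and the function names are assumptions chosen for readability, not a reference design.

```python
import math

def conv1d(xs, kernel):
    """Valid 1-D cross-correlation: slide the kernel over xs without padding."""
    k = len(kernel)
    return [sum(kernel[j] * xs[i + j] for j in range(k))
            for i in range(len(xs) - k + 1)]

def rnn_summary(features, w_h=0.5, w_x=1.0):
    """Fold a feature sequence into one hidden state with a scalar vanilla RNN."""
    h = 0.0
    for f in features:
        h = math.tanh(w_h * h + w_x * f)
    return h

signal = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
edge_kernel = [-1.0, 1.0]  # hand-picked: responds to rising and falling edges

features = conv1d(signal, edge_kernel)  # convolutional stage
summary = rnn_summary(features)         # recurrent stage

print(features)
print(round(summary, 3))
```

The convolution turns the raw signal into edge features, and the recurrent layer then consumes those features in order. In a trained hybrid model both stages would be learned jointly rather than fixed by hand.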

In conclusion, the evolution of RNN architectures has been a journey from simple to complex designs that address the limitations of traditional RNNs. Gated architectures like LSTM and GRU made RNNs a powerful tool for handling sequential data, attention-based models such as the Transformer offer a non-recurrent alternative for many of the same tasks, and hybrid models combine the strengths of several approaches. As researchers continue to push the boundaries of neural network design, further advances in sequence modeling can be expected.
