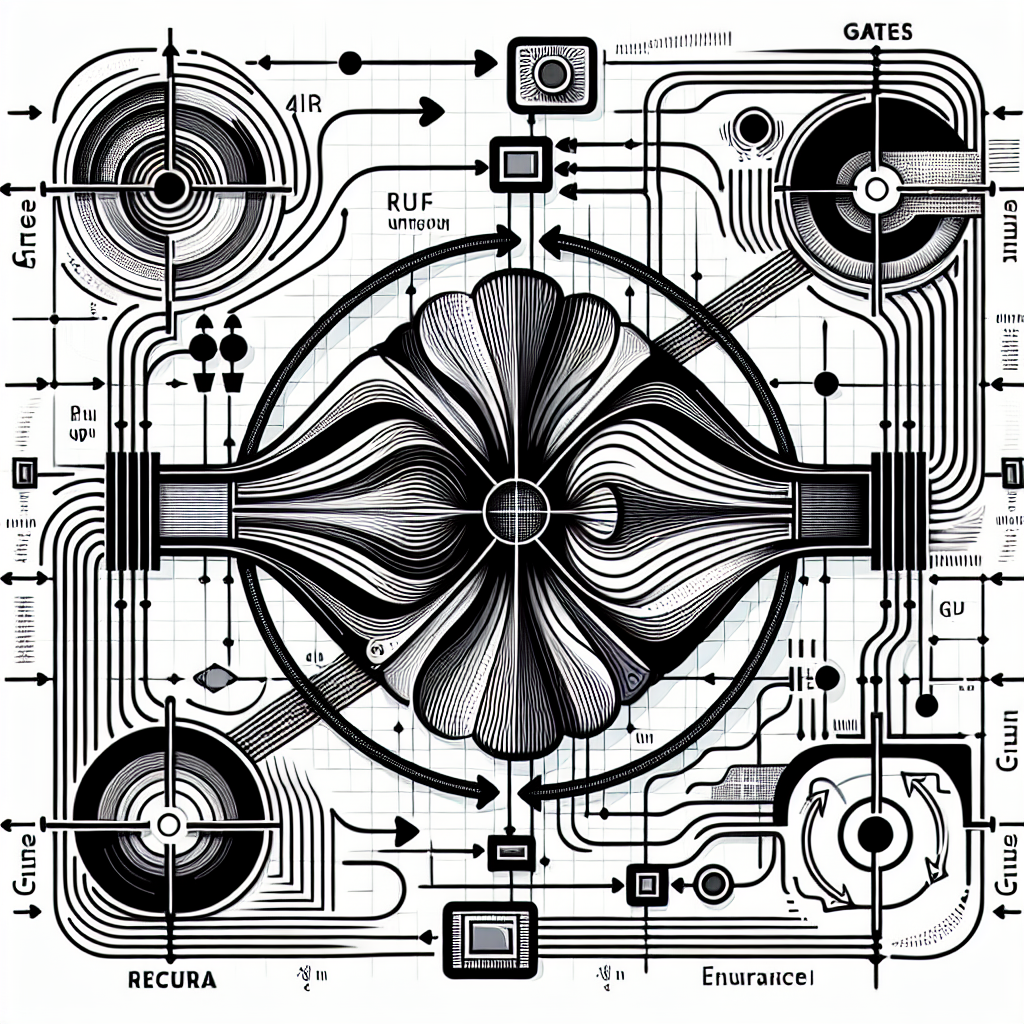

Gated Recurrent Units: Enhancing RNN Performance with GRU Architectures

Recurrent Neural Networks (RNNs) are widely used in natural language processing, speech recognition, and time series analysis because they can process sequential data. However, traditional RNNs struggle to capture long-range dependencies. During training, gradients are propagated back through every time step, and over long sequences they tend to vanish or explode, which makes the influence of early inputs hard to learn.
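To make the vanishing gradient concrete, here is a minimal sketch using a hypothetical one-unit RNN, `h_t = tanh(w * h_{t-1})`, with no input term. The function name, the weight `w = 0.9`, and the starting state are illustrative assumptions, not taken from any particular model. The gradient of the final state with respect to the initial state is the product of the per-step derivatives, and each factor has magnitude below `|w|`.

```python
import numpy as np

def backprop_factor(w, steps, h0=0.5):
    """Magnitude of d h_T / d h_0 for the toy recurrence h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = np.tanh(w * h)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - tanh(w * h_{t-1})^2)
        grad *= w * (1.0 - h ** 2)
    return abs(grad)

short_range = backprop_factor(w=0.9, steps=5)
long_range = backprop_factor(w=0.9, steps=50)
print(f"5 steps:  {short_range:.6f}")
print(f"50 steps: {long_range:.6f}")
```

With `|w| < 1` the factor shrinks at least as fast as `|w| ** steps`, so a signal 50 steps back contributes almost nothing to the weight update. In a purely linear recurrence with `|w| > 1` the same product grows as `|w| ** steps` instead, which is the exploding case.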

To address these limitations, researchers developed gated RNN architectures, among them the Gated Recurrent Unit (GRU), introduced in 2014. GRUs are a variation of traditional RNNs that incorporate gating mechanisms to control the flow of information within the network. This allows GRUs to better capture long-range dependencies in sequential data and substantially mitigates the vanishing gradient problem. Exploding gradients are usually still handled separately, for example with gradient clipping.

One of the key features of GRUs is their ability to selectively update and reset the hidden state based on the input data. Two gates do this work. The update gate decides how much of the previous hidden state to carry forward and how much to replace with new information from the current time step. The reset gate decides how much of the previous hidden state to use when computing that new candidate information. Because both gates are learned, a GRU can adapt how long it retains information to the patterns in the data, which helps it capture complex sequential structure.
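The gating described above can be sketched as a single GRU time step in NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the function names, the parameter layout, and the random initialization are hypothetical, and the convention `h = z * h_prev + (1 - z) * h_cand` is one of two equivalent ways the update gate is written in the literature.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, params):
    """One GRU time step. x has shape (input_size,), h_prev has shape (hidden_size,)."""
    Wz, Uz, bz, Wr, Ur, br, Wh, Uh, bh = params
    z = sigmoid(Wz @ x + Uz @ h_prev + bz)               # update gate
    r = sigmoid(Wr @ x + Ur @ h_prev + br)               # reset gate
    h_cand = np.tanh(Wh @ x + Uh @ (r * h_prev) + bh)    # candidate state
    # z near 1 keeps the old state; z near 0 overwrites it with the candidate.
    return z * h_prev + (1.0 - z) * h_cand

def init_params(input_size, hidden_size, seed=0):
    """Small random weights (illustrative only): W, U, b for z, r, and the candidate."""
    rng = np.random.default_rng(seed)
    params = []
    for _ in range(3):
        params.append(rng.normal(0.0, 0.1, (hidden_size, input_size)))
        params.append(rng.normal(0.0, 0.1, (hidden_size, hidden_size)))
        params.append(np.zeros(hidden_size))
    return tuple(params)

input_size, hidden_size = 4, 3
params = init_params(input_size, hidden_size)
xs = np.random.default_rng(1).normal(size=(5, input_size))  # toy sequence of 5 steps

h = np.zeros(hidden_size)
for x in xs:
    h = gru_step(x, h, params)
print(h.shape)
```

Because the new state is a gate-weighted average of the old state and a `tanh` output, every hidden value stays strictly between -1 and 1 when the initial state is zero. When the update gate is close to 1, the old state passes through almost unchanged, which is what lets information and gradients survive across many steps.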

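In addition to their improved ability to capture long-range dependencies, GRUs are computationally lighter than the other widely used gated architecture, the Long Short-Term Memory (LSTM) network. A GRU has two gates and no separate cell state, so it needs fewer parameters per unit than an LSTM. Compared with a traditional RNN, however, a GRU costs more per time step, because each gate adds its own set of weights. That extra cost is what lets the network learn which information to retain and which to discard.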
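A rough parameter count makes the cost comparison concrete. The sketch below assumes a single layer and one bias vector per weight block; some libraries keep two bias vectors per block, so their reported totals are slightly higher. The helper name and the layer sizes are illustrative choices.

```python
def recurrent_params(input_size, hidden_size, n_blocks):
    """Parameters in one recurrent layer made of n_blocks (W, U, b) weight blocks."""
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return n_blocks * per_block

input_size, hidden_size = 128, 256
vanilla = recurrent_params(input_size, hidden_size, n_blocks=1)  # single tanh block
gru = recurrent_params(input_size, hidden_size, n_blocks=3)      # update, reset, candidate
lstm = recurrent_params(input_size, hidden_size, n_blocks=4)     # input, forget, output, candidate

print(f"vanilla RNN: {vanilla}")  # 98560
print(f"GRU:         {gru}")      # 295680
print(f"LSTM:        {lstm}")     # 394240
```

Under these assumptions a GRU layer has three times the parameters of a vanilla RNN layer of the same size and three quarters of the parameters of an LSTM layer.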

Overall, GRUs have been shown to improve on traditional RNNs in a variety of tasks, including language modeling, machine translation, and speech recognition. The gains come from the gates: by controlling what is kept and what is overwritten at each time step, a GRU can carry relevant information across long sequences.

In conclusion, Gated Recurrent Units (GRUs) are a powerful extension of traditional RNN architectures that can significantly improve performance on sequential data. Their update and reset gates let them decide, step by step, what to remember and what to forget, which makes long-range patterns easier to learn. As the field of deep learning continues to advance, GRUs are likely to remain useful in a wide range of sequence modeling applications.
