Recurrent Neural Networks (RNNs) have become a popular choice for tasks that involve sequential data, such as natural language processing, speech recognition, and time series prediction. What makes RNNs effective on such data is their hidden state. It carries information from earlier time steps forward, so the network can use past context when making predictions about later data points.

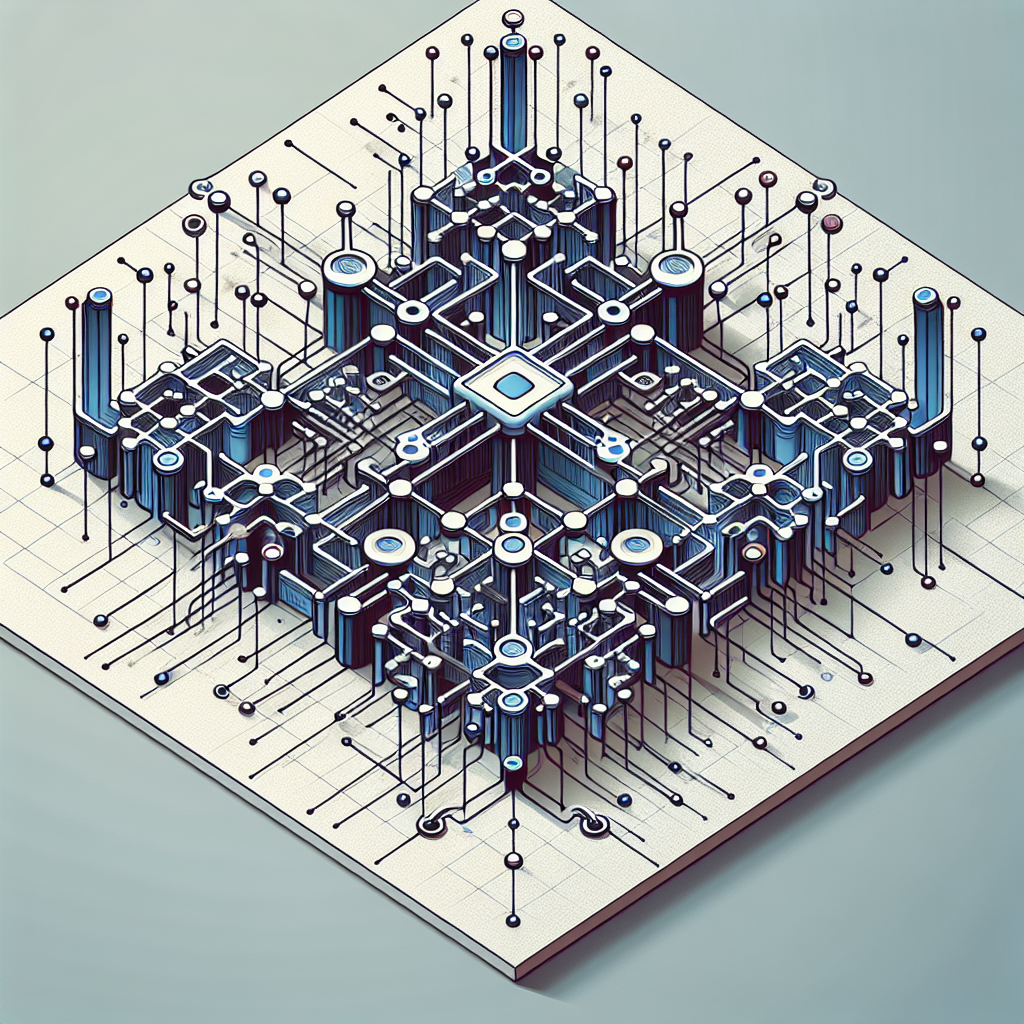

Gated architectures are a family of RNN cells. The best known are the Long Short-Term Memory (LSTM) and the Gated Recurrent Unit (GRU). They have been shown to capture long-range dependencies in sequential data more effectively than traditional ("vanilla") RNNs.

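Getting the most out of gated architectures requires understanding how these mechanisms work and how they can be tuned for a specific task. Their main advantage is that they control the flow of information through the network. Each gate is a vector of values between 0 and 1, computed from the current input and the previous hidden state, and it is multiplied elementwise with the signal it guards. Because the gate weights are learned, the network learns when to update its memory and when to forget information from the past.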
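To make "learning when to update" concrete, here is a minimal NumPy sketch of a single GRU time step. The update gate `z` interpolates between the previous hidden state and a new candidate state. The function names `gru_step` and `init_params` and the parameter-dictionary layout are hypothetical and chosen for illustration. The equations follow the common formulation where the reset gate scales `h_prev` before the candidate is computed. Libraries differ in small details; for example, PyTorch applies the reset gate after the recurrent matrix product.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, p):
    """One GRU time step (illustrative sketch, hypothetical parameter names).

    p holds W_* (hidden x input), U_* (hidden x hidden) and b_* (hidden,)
    for the update gate (z), reset gate (r) and candidate state (n).
    """
    z = sigmoid(p["W_z"] @ x + p["U_z"] @ h_prev + p["b_z"])  # update gate
    r = sigmoid(p["W_r"] @ x + p["U_r"] @ h_prev + p["b_r"])  # reset gate
    # Candidate state: the reset gate controls how much of h_prev feeds into it.
    n = np.tanh(p["W_n"] @ x + p["U_n"] @ (r * h_prev) + p["b_n"])
    # z near 1 keeps the old state; z near 0 overwrites it with the candidate.
    return z * h_prev + (1.0 - z) * n

def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (hypothetical initialization)."""
    rng = np.random.default_rng(seed)
    p = {}
    for g in ("z", "r", "n"):
        p["W_" + g] = rng.normal(0.0, 0.1, (hidden_size, input_size))
        p["U_" + g] = rng.normal(0.0, 0.1, (hidden_size, hidden_size))
        p["b_" + g] = np.zeros(hidden_size)
    return p

# Usage: run the cell over a toy sequence of five 3-dimensional inputs.
params = init_params(input_size=3, hidden_size=4)
h = np.zeros(4)
for x_t in np.random.default_rng(1).normal(size=(5, 3)):
    h = gru_step(x_t, h, params)
print(h.shape)  # (4,)
```

Pushing the update-gate bias `b_z` to a large positive value makes the cell copy its previous state almost unchanged. A large negative value makes it overwrite the state at every step. During training the network learns where to sit between these two extremes.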

For example, an LSTM cell has three gates (the input gate, the forget gate, and the output gate) that control the flow of information through the cell:

- The input gate determines how much new information is written to the cell state.
- The forget gate decides how much of the existing cell state is kept and how much is discarded.
- The output gate determines how much of the cell state is exposed as the hidden state. The hidden state is passed to the next time step and to any output layer that produces the final prediction.
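The three gates can be written out as a single LSTM time step. The NumPy sketch below uses the standard LSTM equations. The name `lstm_step` is hypothetical, and so is the weight layout: all four pre-activations are stacked in one matrix in the order input gate, forget gate, candidate, output gate. Real libraries use their own layouts.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step (illustrative sketch).

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked in the assumed order [input gate, forget gate, candidate, output gate].
    """
    H = h_prev.shape[0]
    a = W @ x + U @ h_prev + b   # all four pre-activations at once
    i = sigmoid(a[0:H])          # input gate: how much new information to write
    f = sigmoid(a[H:2 * H])      # forget gate: how much of c_prev to keep
    g = np.tanh(a[2 * H:3 * H])  # candidate values that could be written
    o = sigmoid(a[3 * H:4 * H])  # output gate: how much of the cell to expose
    c = f * c_prev + i * g       # new cell state
    h = o * np.tanh(c)           # new hidden state, sent to the next step / output layer
    return h, c

# Usage: process a toy sequence of 10 input vectors with random weights.
rng = np.random.default_rng(0)
D, H = 3, 4  # input size and hidden size (arbitrary for this example)
W = rng.normal(0.0, 0.1, (4 * H, D))
U = rng.normal(0.0, 0.1, (4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(10, D)):
    h, c = lstm_step(x_t, h, c, W, U, b)
```

All three gates use a sigmoid, so each one acts as a soft switch between 0 (closed) and 1 (open). The candidate uses `tanh` so that the values written to the cell can be positive or negative.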

Because the gate weights are learned during training along with the rest of the network, gated RNNs can carry relevant information across many time steps and use it when making predictions. This matters in tasks such as language modeling, where interpreting a word often depends on words that appeared much earlier in the sentence.

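Gated architectures also help mitigate the vanishing gradient problem that affects traditional RNNs. In a plain RNN, the gradient flowing back through time is multiplied at every step by the recurrent weights and the derivative of the activation function, so it can shrink exponentially over long sequences. In an LSTM, the cell state is updated additively, and along that path the gradient is scaled mainly by the forget gate. When the forget gate stays close to 1, gradients can travel across many time steps without becoming vanishingly small.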
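A scalar toy comparison illustrates this argument. For a plain tanh RNN $h_t = \tanh(w h_{t-1} + x_t)$, each backward step multiplies the gradient by $w(1 - h_t^2)$. Along the LSTM cell-state path $c_t = f_t c_{t-1} + i_t g_t$, the direct derivative $\partial c_t / \partial c_{t-1}$ is just $f_t$. This sketch holds the gates fixed and ignores the gradient paths through $h_t$ and the gates themselves, so it is a simplification rather than a full backpropagation-through-time computation. The function names and the chosen values ($w = 0.9$, $f_t = 0.99$, 50 steps) are hypothetical.

```python
import numpy as np

def vanilla_grad_factor(w, xs, h0=0.0):
    """Product of dh_t/dh_{t-1} = w * (1 - h_t**2) for a scalar tanh RNN
    h_t = tanh(w * h_{t-1} + x_t), accumulated over the whole sequence."""
    h, prod = h0, 1.0
    for x in xs:
        h = np.tanh(w * h + x)
        prod *= w * (1.0 - h**2)
    return prod

def cell_path_grad_factor(forget_gates):
    """Product of dc_t/dc_{t-1} = f_t along the LSTM cell-state path,
    treating the forget-gate values as fixed."""
    return float(np.prod(forget_gates))

T = 50
xs = np.random.default_rng(0).normal(0.0, 0.5, T)
# Each factor has magnitude at most 0.9, so the product is at most 0.9**50 (about 0.0052).
print(vanilla_grad_factor(0.9, xs))
print(cell_path_grad_factor(np.full(T, 0.99)))  # 0.99**50, about 0.605
```

The contrast depends on the learned forget-gate values. If the network drives them well below 1, the cell-state gradient also decays. Gating makes long-range gradient flow possible but does not guarantee it.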

Overall, gated architectures can improve performance on tasks that involve sequential data. Researchers and practitioners who understand how these mechanisms work and tune them for the task at hand can build more accurate and robust models for a wide range of applications.
