
LSTM Networks: A Deep Dive into the Architecture and Functionality

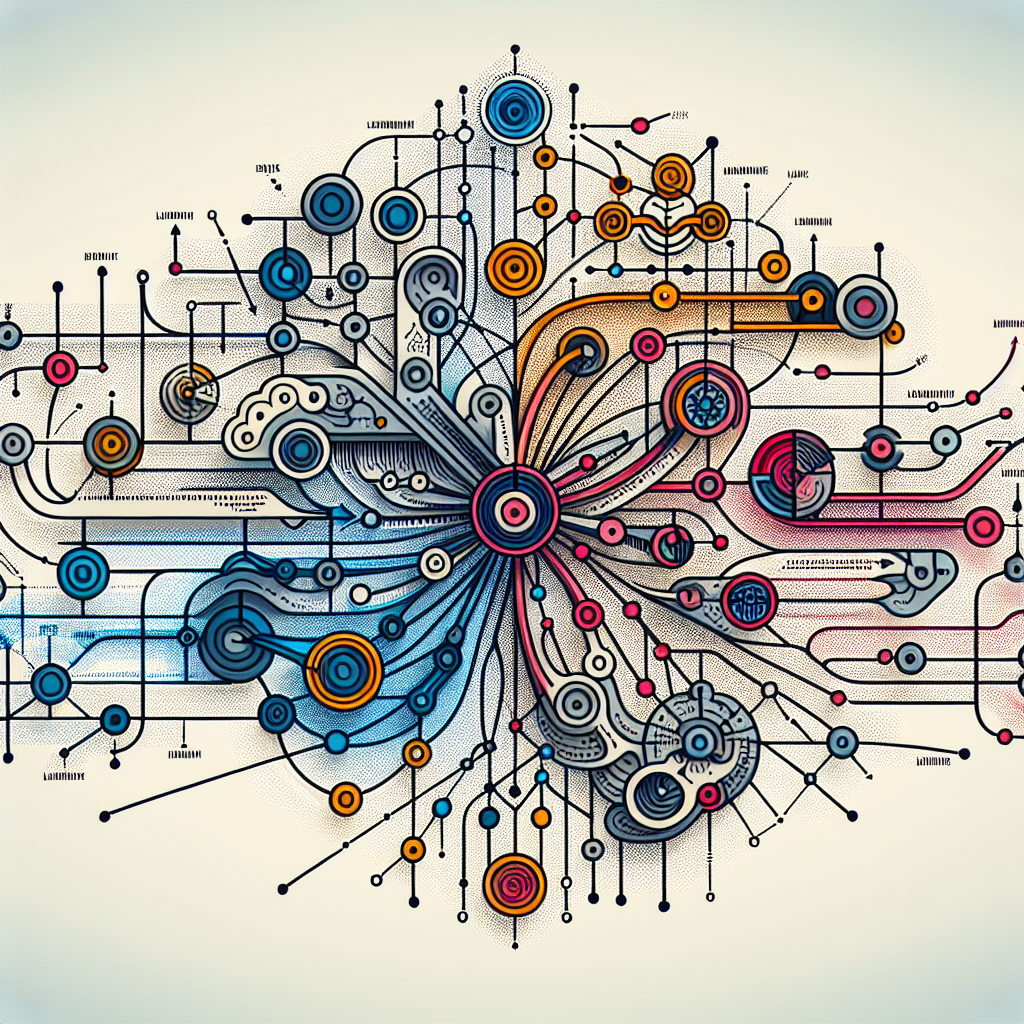

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) designed to learn long-term dependencies, that is, relationships between elements of a sequence that are many time steps apart. In this article, we take a deep dive into the architecture and functionality of LSTM networks and discuss how they are used in applications such as natural language processing, time series prediction, and speech recognition.

LSTM networks were first introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997 to address the vanishing gradient problem that is common in traditional RNNs. During backpropagation through time, the gradient that reaches an early time step is a product of one factor per intervening step. When those factors are smaller than one, the product shrinks exponentially with the length of the sequence, so the network receives almost no training signal about long-range dependencies. LSTM networks overcome this issue by maintaining a cell state that is updated additively and by introducing gating mechanisms that control the flow of information into, within, and out of that state.
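To make the exponential decay concrete, here is a toy calculation. It is a scalar sketch under deliberately simplified assumptions (a single unit, a fixed pre-activation, a constant forget gate, and only the direct gradient path), and the function names are hypothetical. It compares the product of per-step derivatives in a plain tanh RNN with the product along the cell state of an LSTM, whose update is c_t = f_t * c_{t-1} + i_t * c_tilde_t.

```python
import math


def rnn_gradient_product(weight: float, steps: int, preactivation: float = 0.5) -> float:
    """Gradient factor dh_T/dh_0 for a scalar RNN h_t = tanh(weight * h_{t-1} + u * x_t).

    Each step contributes weight * tanh'(a) = weight * (1 - tanh(a)**2).
    Simplifying assumption: the pre-activation a is the same at every step.
    """
    per_step = weight * (1.0 - math.tanh(preactivation) ** 2)
    return per_step ** steps


def lstm_cell_path_product(forget_gate: float, steps: int) -> float:
    """Gradient factor dc_T/dc_0 along the direct cell-state path of a scalar LSTM.

    Since c_t = f_t * c_{t-1} + i_t * c_tilde_t, each step contributes f_t.
    Simplifying assumptions: a constant forget gate, and the indirect paths
    through the gates are ignored.
    """
    return forget_gate ** steps


# Compare how the two factors shrink as the number of time steps grows.
for steps in (10, 50, 100):
    rnn = rnn_gradient_product(weight=0.9, steps=steps)
    lstm = lstm_cell_path_product(forget_gate=0.99, steps=steps)
    print(steps, f"{rnn:.2e}", f"{lstm:.2e}")
```

With these illustrative numbers, after 100 steps the RNN factor is on the order of 1e-15, while the cell-state factor is still about 0.37. A real network has many units and learned, time-varying gates, so this only shows the shape of the effect, not its exact size.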

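The architecture of an LSTM network consists of several key components: a cell state, an input gate, a forget gate, an output gate, and a hidden state. The cell state is the “memory” of the network and is passed along from one time step to the next. The input gate controls how much new information is written to the cell state, the forget gate determines what information is discarded from it, and the output gate decides how much of the cell state is exposed as the hidden state. The hidden state is the cell's output at each time step and is also fed back as an input to the next step. Each gate is a vector of values between 0 and 1, produced by a sigmoid function, that is multiplied element-wise with the signal it controls. (The forget gate was not part of the original 1997 design; it was added by Felix Gers, Jürgen Schmidhuber, and Fred Cummins in 2000 and is part of the standard LSTM used today.)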
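The standard formulation with a forget gate can be written compactly as follows. The notation is an assumption of this sketch rather than something fixed by the text: $x_t$ is the input at step $t$, $h_{t-1}$ and $c_{t-1}$ are the previous hidden and cell states, $W_\ast$, $U_\ast$, and $b_\ast$ are learned input weights, recurrent weights, and biases, $\sigma$ is the logistic sigmoid, and $\odot$ is element-wise multiplication.

$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) && \text{forget gate} \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) && \text{input gate} \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) && \text{output gate} \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) && \text{candidate values} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{cell state} \\
h_t &= o_t \odot \tanh\left(c_t\right) && \text{hidden state}
\end{aligned}
$$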

The functionality of an LSTM network at each time step can be broken down into three main steps: input processing, state updating, and output generation. In the input processing step, the network computes the input, forget, and output gate values, along with a vector of candidate values for the cell state, from the current input and the previous hidden state. In the state updating step, the new cell state is formed by scaling the previous cell state by the forget gate and adding the candidate values scaled by the input gate. Finally, in the output generation step, the output gate is applied to a tanh-squashed copy of the new cell state to produce the hidden state, which serves as the cell's output for that time step and is passed on to the next one.
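The three steps can be sketched as a forward pass in plain Python. This is a minimal illustration, not a library implementation: `LSTMCell`, `preactivation`, and the parameter layout are hypothetical names chosen here (this is not PyTorch's `torch.nn.LSTMCell`), the weights are small random numbers rather than trained values, and there is no training code.

```python
import math
import random
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
GateParams = Tuple[Matrix, Matrix, Vector]  # (W: input weights, U: recurrent weights, b: bias)


def sigmoid(z: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def preactivation(params: GateParams, x: Vector, h: Vector) -> Vector:
    """Compute W @ x + U @ h + b row by row, using plain lists."""
    W, U, b = params
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(W, U, b)
    ]


class LSTMCell:
    """Hypothetical, minimal single-layer LSTM cell (forward pass only)."""

    def __init__(self, input_size: int, hidden_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        # One (W, U, b) triple each for the forget (f), input (i), and output (o)
        # gates and for the candidate values (c). Small random weights and zero
        # biases are an arbitrary choice for this sketch.
        self.params: Dict[str, GateParams] = {}
        for name in ("f", "i", "o", "c"):
            W = [[rng.uniform(-0.1, 0.1) for _ in range(input_size)] for _ in range(hidden_size)]
            U = [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size)] for _ in range(hidden_size)]
            b = [0.0] * hidden_size
            self.params[name] = (W, U, b)

    def step(self, x: Vector, h_prev: Vector, c_prev: Vector) -> Tuple[Vector, Vector]:
        p = self.params
        # 1. Input processing: gates and candidate values from x_t and h_{t-1}.
        f = [sigmoid(z) for z in preactivation(p["f"], x, h_prev)]
        i = [sigmoid(z) for z in preactivation(p["i"], x, h_prev)]
        o = [sigmoid(z) for z in preactivation(p["o"], x, h_prev)]
        c_tilde = [math.tanh(z) for z in preactivation(p["c"], x, h_prev)]
        # 2. State update: keep part of the old memory, add part of the candidate.
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, c_tilde)]
        # 3. Output generation: the hidden state is a gated, squashed view of c_t.
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h, c

    def run(self, xs: List[Vector]) -> Tuple[List[Vector], Vector]:
        """Process a whole sequence, starting from zero hidden and cell states."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        hidden_states = []
        for x in xs:
            h, c = self.step(x, h, c)
            hidden_states.append(h)
        return hidden_states, c


# Usage: a sequence of three 3-dimensional inputs through a 4-unit cell.
cell = LSTMCell(input_size=3, hidden_size=4)
sequence = [[0.5, -1.0, 0.25], [0.0, 0.3, -0.2], [1.0, 0.0, 0.5]]
hidden_states, final_cell = cell.run(sequence)
print(len(hidden_states), len(hidden_states[-1]))  # 3 4
```

Keeping each gate's parameters separate mirrors the equations one-to-one; practical implementations usually stack the four weight matrices into one and use vectorized array operations instead of Python loops.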

LSTM networks have been widely used in various applications because they can capture long-term dependencies in sequential data. In natural language processing, LSTM networks have been used for tasks such as language modeling, machine translation, and sentiment analysis. In time series prediction, LSTM networks have been used to forecast stock prices, weather patterns, and other time-dependent data. In speech recognition, LSTM networks have been used to transcribe spoken language, where recognizing each sound depends on acoustic context spread over many frames.

In conclusion, LSTM networks are a powerful tool for handling sequential data and capturing long-term dependencies. Their unique architecture and functionality make them well-suited for a wide range of applications in fields such as natural language processing, time series prediction, and speech recognition. As the field of deep learning continues to advance, LSTM networks are likely to play an increasingly important role in solving complex real-world problems.

