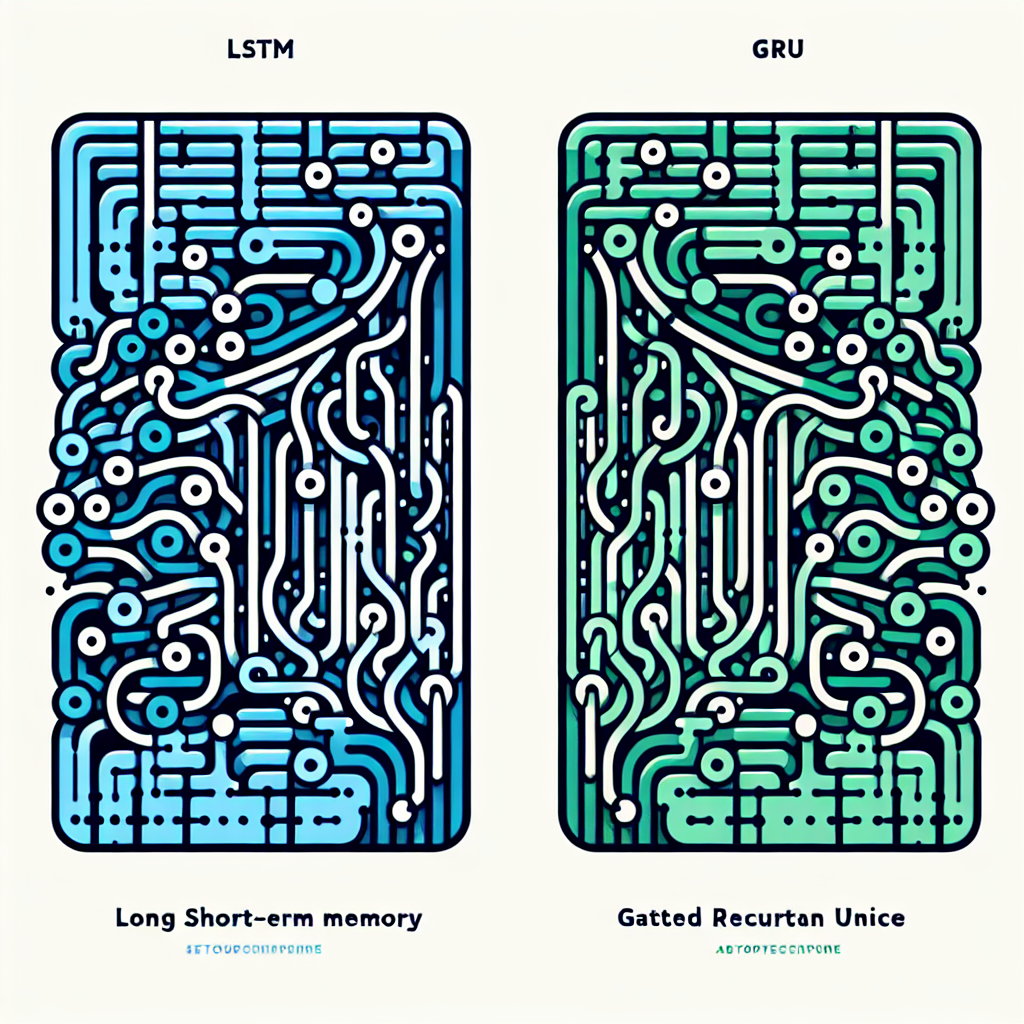

Recurrent Neural Networks (RNNs) have been widely used in various applications such as natural language processing, speech recognition, and time series analysis. Among the different types of RNNs, Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) are two popular choices due to their ability to effectively model long-range dependencies in sequential data.

LSTM and GRU were both designed to address the vanishing gradient problem that arises when training traditional RNNs. During backpropagation through time, the gradient is multiplied by a similar factor at every time step. When those factors are smaller than one, the gradient shrinks roughly exponentially with the number of steps it travels back, making it difficult for the network to learn long-term dependencies in the data.
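To make the effect concrete, here is a minimal sketch using a scalar tanh RNN, `h_t = tanh(w * h_{t-1} + x_t)`. The function name, the weight `w = 0.9`, and the constant inputs are illustrative assumptions, not values from any particular model.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Return |d h_t / d h_0| after each step of a scalar tanh RNN.

    The recurrence is h_t = tanh(w * h_{t-1} + x_t), so by the chain rule
    d h_t / d h_0 is the product of w * (1 - h_k^2) over k = 1..t.
    """
    h = h0
    grad = 1.0
    history = []
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # local derivative d h_t / d h_{t-1}
        history.append(abs(grad))
    return history

# Hypothetical setting: recurrent weight 0.9, constant input 0.5, 50 steps.
grads = gradient_through_time(w=0.9, inputs=[0.5] * 50)
print(f"after 1 step:   {grads[0]:.3e}")
print(f"after 50 steps: {grads[-1]:.3e}")
```

Every factor in the product has magnitude below one here, so the signal from step 0 has almost no influence on the gradient 50 steps later.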

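LSTM, introduced by Hochreiter and Schmidhuber in 1997, is a type of RNN that incorporates memory cells and gating mechanisms to better capture long-range dependencies. The key components of an LSTM cell as it is commonly used today are the input gate, forget gate, output gate, and memory cell (the forget gate was a later addition to the original 1997 design). The forget gate decides how much of the old cell contents to keep, the input gate decides how much new information to write, and the output gate decides how much of the cell to expose as the hidden state, allowing the network to selectively remember or forget information as needed.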
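The gating described above can be sketched as a single time step in plain Python. This follows the commonly used formulation with a forget gate. The helper names (`affine`, `init_params`, `lstm_step`), the random weights, and the tiny sizes are assumptions made for illustration, not part of any library.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b using plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W[j], x))
        + sum(u * hi for u, hi in zip(U[j], h))
        + b[j]
        for j in range(len(b))
    ]

def init_params(input_size, hidden_size, names, seed=0):
    """Random (W, U, b) triple for each named block; illustrative only."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        n: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
        for n in names
    }

def lstm_step(params, x, h_prev, c_prev):
    """One LSTM time step; returns the new hidden state and cell state."""
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(z) for z in affine(*params["g"], x, h_prev)]  # candidate values
    # Memory cell: keep part of the old state, write part of the candidate.
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    # Hidden state: a gated view of the memory cell.
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c

# Run two time steps on a made-up 3-dimensional input sequence.
params = init_params(input_size=3, hidden_size=2, names="ifog")
h, c = [0.0, 0.0], [0.0, 0.0]
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h, c = lstm_step(params, x, h, c)
print(h, c)
```

Note that the cell state `c` is updated additively (`f * c_prev + i * g`). When the forget gate stays close to one, information and gradients can pass through many steps with little attenuation.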

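On the other hand, GRU, proposed by Cho et al. in 2014, is a simplified alternative to the LSTM. It combines the roles of the forget and input gates into a single update gate, adds a reset gate that controls how much of the previous state feeds into the new candidate, and merges the memory cell and hidden state into one state vector. This reduces the number of parameters and the computation per time step while still enabling the network to capture long-term dependencies in the data.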
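For comparison, a GRU time step can be sketched the same way. This uses the convention `h_t = z * h_{t-1} + (1 - z) * candidate`; some references swap the roles of `z` and `1 - z`. As before, the helper names, random weights, and sizes are illustrative assumptions.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b using plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(W[j], x))
        + sum(u * hi for u, hi in zip(U[j], h))
        + b[j]
        for j in range(len(b))
    ]

def init_params(input_size, hidden_size, names, seed=0):
    """Random (W, U, b) triple for each named block; illustrative only."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        n: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
        for n in names
    }

def gru_step(params, x, h_prev):
    """One GRU time step; returns the new hidden state."""
    z = [sigmoid(v) for v in affine(*params["z"], x, h_prev)]  # update gate
    r = [sigmoid(v) for v in affine(*params["r"], x, h_prev)]  # reset gate
    # The reset gate scales the previous state before it enters the candidate.
    gated_h = [rj * hj for rj, hj in zip(r, h_prev)]
    n = [math.tanh(v) for v in affine(*params["n"], x, gated_h)]  # candidate state
    # The update gate interpolates between the old state and the candidate.
    return [zj * hj + (1.0 - zj) * nj for zj, hj, nj in zip(z, h_prev, n)]

# Run two time steps on a made-up 3-dimensional input sequence.
params = init_params(input_size=3, hidden_size=2, names="zrn")
h = [0.0, 0.0]
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h = gru_step(params, x, h)
print(h)
```

There is no separate cell state and no output gate: the single update gate `z` does the work that the forget and input gates share in an LSTM.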

When comparing LSTM and GRU, there are several key differences to consider. One of the main differences is the number of gates in each architecture: LSTM has three gates (input, forget, output) while GRU has two gates (update, reset). Because the GRU has no output gate and no separate memory cell, it exposes its full state at every step, whereas the LSTM can hold information in the memory cell without revealing it in the hidden state. This difference in gating can affect how each network retains and uses information over long sequences.

Another difference is the computational complexity of the two architectures. An LSTM layer learns four sets of weights (three gates plus the candidate cell update), while a GRU layer learns three (two gates plus the candidate state). For the same input and hidden sizes, a GRU therefore has roughly 25% fewer parameters, which typically translates into somewhat shorter training times and lower memory requirements than an LSTM.
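The parameter gap is easy to check with a little arithmetic. The sketch below assumes one input weight matrix, one recurrent weight matrix, and one bias vector per block. Some frameworks keep separate input and recurrent biases, which changes the totals slightly. The function name and the sizes 128 and 256 are arbitrary choices for illustration.

```python
def gated_rnn_params(input_size, hidden_size, num_blocks):
    """Parameter count for one recurrent layer.

    Each block has an input weight matrix (hidden x input), a recurrent
    weight matrix (hidden x hidden), and a bias vector (hidden).
    """
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return num_blocks * per_block

# LSTM: input, forget, and output gates plus the candidate -> 4 blocks.
lstm = gated_rnn_params(128, 256, num_blocks=4)
# GRU: update and reset gates plus the candidate -> 3 blocks.
gru = gated_rnn_params(128, 256, num_blocks=3)

print(lstm, gru, gru / lstm)  # 394240 295680 0.75
```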

In terms of performance, LSTM and GRU have been found to be comparable in many tasks. Some studies have shown that LSTM performs better on tasks that require modeling long-range dependencies, while GRU is more efficient on tasks with shorter dependencies. However, the performance differences between the two architectures are often task-specific and may vary depending on the dataset and the complexity of the problem.

In conclusion, LSTM and GRU are two popular choices for modeling sequential data with RNNs, and both can capture long-range dependencies. LSTM has more parameters and a separate memory cell, which may help on tasks with very long dependencies, while GRU is simpler and cheaper to train. The choice between LSTM and GRU ultimately depends on the specific requirements of the task at hand and the trade-offs between complexity, performance, and efficiency, so it is often worth evaluating both on the target dataset.
