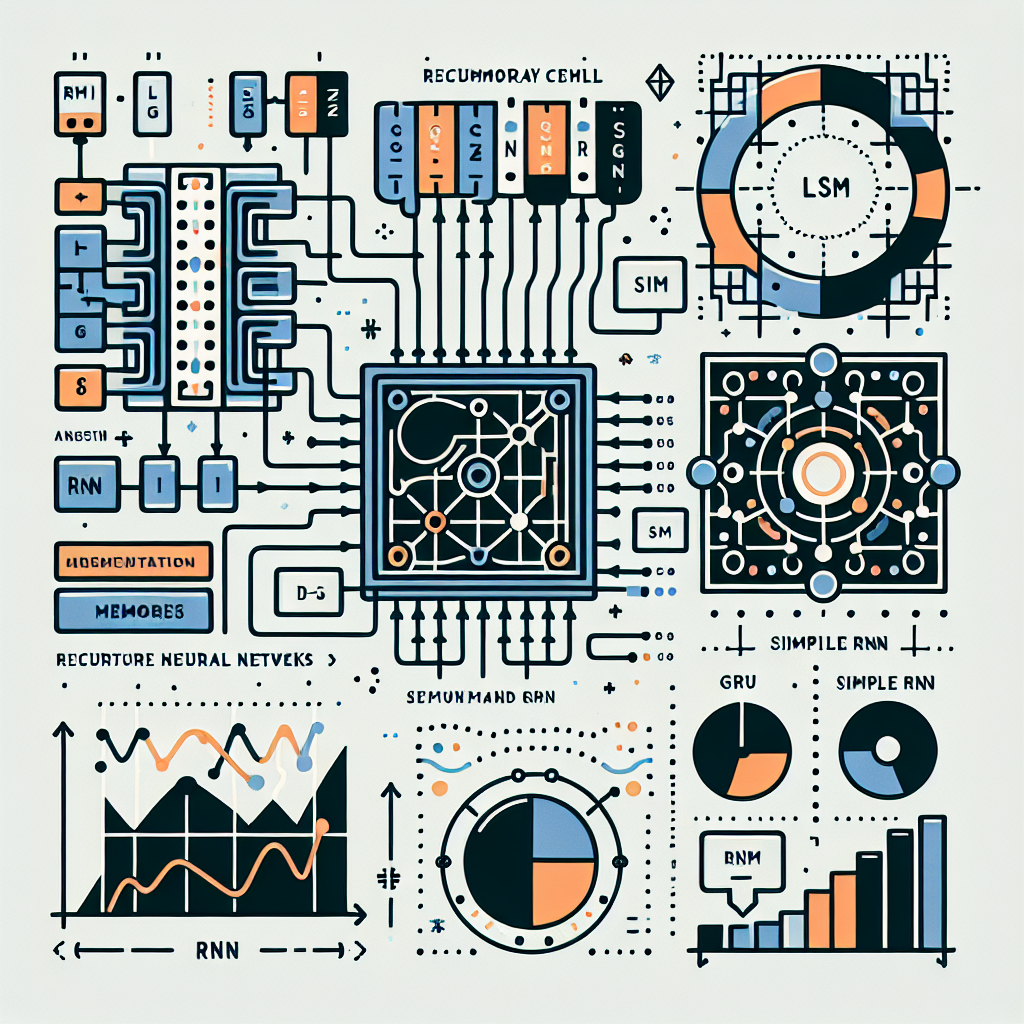

LSTM vs. Other Recurrent Neural Networks: A Comparative Analysis

Recurrent Neural Networks (RNNs) are widely used in applications such as natural language processing, speech recognition, and time series prediction. One of the most popular RNN variants is the Long Short-Term Memory (LSTM) network, known for its ability to capture long-term dependencies in sequential data. Other variants are also in common use, notably Gated Recurrent Units (GRUs) and Simple Recurrent Units (SRUs). In this article, we compare LSTM with these alternatives to understand their strengths and weaknesses.

LSTM:

LSTM is a type of RNN designed to mitigate the vanishing gradient problem that affects traditional RNNs. Alongside the hidden state, it maintains a separate cell state, and three gates (input, forget, and output) control what is written to, kept in, and read from that cell. Because the cell state is updated by gated addition rather than being repeatedly squashed through a nonlinearity, the network can retain important information over long sequences. LSTM has been shown to be effective at capturing long-term dependencies and is widely used in applications where such long-range dependencies are crucial.
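
To make the gating concrete, here is a minimal single-cell LSTM forward pass in plain Python. It is a sketch assuming the standard formulation c_t = f_t ⊙ c_{t-1} + i_t ⊙ g_t and h_t = o_t ⊙ tanh(c_t); the function names, the weight initialization, and the `forget_bias` parameter are illustrative choices, not part of any library.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, U, b, x, h):
    """Return W·x + U·h + b for one gate (weights stored as lists of rows)."""
    return [
        sum(w * xi for w, xi in zip(W[r], x))
        + sum(u * hi for u, hi in zip(U[r], h))
        + b[r]
        for r in range(len(b))
    ]


def init_lstm_params(n_in, n_hid, seed=0, forget_bias=1.0):
    """Small random weights; forget-gate bias set separately (hypothetical init)."""
    rng = random.Random(seed)
    params = {}
    for gate in ("i", "f", "o", "g"):  # input, forget, output gates + candidate
        W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hid)]
        U = [[rng.uniform(-0.1, 0.1) for _ in range(n_hid)] for _ in range(n_hid)]
        b = [forget_bias if gate == "f" else 0.0] * n_hid
        params[gate] = (W, U, b)
    return params


def lstm_step(params, x, h, c):
    """One LSTM time step: returns the new hidden state h and cell state c."""
    i = [sigmoid(z) for z in affine(*params["i"], x, h)]    # how much new content to write
    f = [sigmoid(z) for z in affine(*params["f"], x, h)]    # how much old cell state to keep
    o = [sigmoid(z) for z in affine(*params["o"], x, h)]    # how much of the cell to expose
    g = [math.tanh(z) for z in affine(*params["g"], x, h)]  # candidate content
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new


def run_lstm(params, xs, n_hid):
    """Feed a sequence of input vectors through the cell, starting from zeros."""
    h, c = [0.0] * n_hid, [0.0] * n_hid
    for x in xs:
        h, c = lstm_step(params, x, h, c)
    return h, c


params = init_lstm_params(n_in=3, n_hid=4, seed=0)
h, c = run_lstm(params, [[0.5, -0.2, 0.1]] * 10, n_hid=4)
print(h)
```

Raising `forget_bias` pushes f toward 1, so the cell state is carried forward almost unchanged from one step to the next; initializing the forget-gate bias to a positive value is a common practice for exactly this reason.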

GRU:

GRU is another type of RNN that is similar to LSTM but has a simpler architecture. It has two gates, an update gate and a reset gate, and it merges the cell state and hidden state into a single vector. With three sets of weights instead of LSTM's four, a GRU has fewer parameters for the same hidden size. GRUs have been shown to perform well in tasks such as speech recognition and machine translation.
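
A GRU step can be sketched the same way. This minimal version assumes the convention of Cho et al. (2014), in which the update gate z weights the previous state and the reset gate is applied to the previous state before the recurrent matrix product; some libraries swap the roles of z and 1 − z or apply the reset gate after the product, so treat the exact equations, the helper names, and the hypothetical `update_bias` parameter as assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]


def init_gru_params(n_in, n_hid, seed=0, update_bias=0.0):
    rng = random.Random(seed)
    params = {}
    for gate in ("z", "r", "n"):  # update gate, reset gate, candidate state
        W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hid)]
        U = [[rng.uniform(-0.1, 0.1) for _ in range(n_hid)] for _ in range(n_hid)]
        b = [update_bias if gate == "z" else 0.0] * n_hid
        params[gate] = (W, U, b)
    return params


def gru_step(params, x, h):
    """One GRU step: h_t = z * h_{t-1} + (1 - z) * candidate."""
    Wz, Uz, bz = params["z"]
    Wr, Ur, br = params["r"]
    Wn, Un, bn = params["n"]
    z = [sigmoid(a + b + c) for a, b, c in zip(matvec(Wz, x), matvec(Uz, h), bz)]
    r = [sigmoid(a + b + c) for a, b, c in zip(matvec(Wr, x), matvec(Ur, h), br)]
    # The reset gate decides how much of the previous state feeds the candidate.
    rh = [rj * hj for rj, hj in zip(r, h)]
    n = [math.tanh(a + b + c) for a, b, c in zip(matvec(Wn, x), matvec(Un, rh), bn)]
    # The update gate interpolates between the old state and the candidate;
    # there is no separate cell state as in LSTM.
    return [zj * hj + (1.0 - zj) * nj for zj, hj, nj in zip(z, h, n)]


params = init_gru_params(n_in=3, n_hid=4, seed=0)
h = [0.0] * 4
for x in [[0.5, -0.2, 0.1]] * 10:
    h = gru_step(params, x, h)
print(h)
```

Note that the update gate plays the combined role of LSTM's input and forget gates: when z is close to 1 the state is copied through nearly unchanged, which is how a GRU preserves information over many steps.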

SRU:

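SRU is simpler than GRU in a different sense. It still uses gates (a forget gate and a reset gate), but those gates are computed from the current input and, at most, element-wise from the previous cell state; no matrix multiplication involves the previous hidden state. The expensive matrix products can therefore be computed for all time steps in parallel, leaving only a cheap element-wise recurrence to run sequentially. SRU also adds a highway connection that passes the input directly to the output. SRUs are valued for their simplicity and speed but may not perform as well as LSTM or GRU in tasks that require capturing long-term dependencies.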
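
The split between parallel and sequential work is easiest to see in code. The sketch below assumes a simplified form of the SRU equations from Lei et al.: f_t = σ(W_f x_t + v_f ⊙ c_{t-1} + b_f), r_t defined analogously, c_t = f_t ⊙ c_{t-1} + (1 − f_t) ⊙ W x_t, and h_t = r_t ⊙ tanh(c_t) + (1 − r_t) ⊙ x_t, with equal input and hidden sizes. The function names and the `reset_bias` parameter are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]


def init_sru_params(d, seed=0, reset_bias=0.0):
    """Input and hidden size are both d, so the highway term can add x directly."""
    rng = random.Random(seed)

    def mat():
        return [[rng.uniform(-0.1, 0.1) for _ in range(d)] for _ in range(d)]

    def vec():
        return [rng.uniform(-0.1, 0.1) for _ in range(d)]

    return {
        "W": mat(), "Wf": mat(), "Wr": mat(),  # the only matrix weights
        "vf": vec(), "vr": vec(),              # element-wise weights on c_{t-1}
        "bf": [0.0] * d, "br": [reset_bias] * d,
    }


def sru_forward(p, xs):
    d = len(p["bf"])
    # 1) Every matrix product depends only on the inputs, so all time steps can
    #    be computed independently (a plain loop here; one batched matmul on a GPU).
    proj = [(matvec(p["W"], x), matvec(p["Wf"], x), matvec(p["Wr"], x)) for x in xs]
    # 2) The sequential part is purely element-wise.
    c = [0.0] * d
    hs = []
    for x, (xt, fx, rx) in zip(xs, proj):
        f = [sigmoid(fx[j] + p["vf"][j] * c[j] + p["bf"][j]) for j in range(d)]
        r = [sigmoid(rx[j] + p["vr"][j] * c[j] + p["br"][j]) for j in range(d)]
        c = [f[j] * c[j] + (1.0 - f[j]) * xt[j] for j in range(d)]
        # Highway connection: r mixes the transformed state with the raw input.
        hs.append([r[j] * math.tanh(c[j]) + (1.0 - r[j]) * x[j] for j in range(d)])
    return hs, c


params = init_sru_params(d=4, seed=0)
hs, c = sru_forward(params, [[0.5, -0.2, 0.1, 0.3]] * 10)
print(hs[-1])
```

Only step 2 has to run one time step after another, and it contains no matrix products, which is where SRU's speed advantage over LSTM and GRU comes from.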

Comparative Analysis:

When comparing LSTM with other RNNs, three factors matter: architectural complexity, the ability to capture long-term dependencies, and computational efficiency. LSTM is known for capturing long-term dependencies and is widely used where memory retention is crucial. However, its architecture is more complex than that of GRU or SRU: with four weight sets per layer versus GRU's three, an LSTM has about a third more parameters than a GRU of the same size, which can mean longer training times and higher computational costs.
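
The size difference is easy to quantify. This back-of-the-envelope sketch assumes a single layer and one bias vector per gate; real frameworks may count differently (PyTorch's `nn.LSTM` and `nn.GRU`, for instance, keep two bias vectors per gate), and the SRU count assumes a simplified formulation with equal input and hidden sizes. The helper names are illustrative.

```python
def lstm_params(n_in, n_hid):
    # 4 weight sets (input, forget, output gates + candidate), one bias each
    return 4 * (n_hid * (n_in + n_hid) + n_hid)


def gru_params(n_in, n_hid):
    # 3 weight sets (update gate, reset gate, candidate), one bias each
    return 3 * (n_hid * (n_in + n_hid) + n_hid)


def sru_params(d):
    # 3 input matrices (W, W_f, W_r) plus element-wise v_f, v_r and biases b_f, b_r;
    # assumes input size == hidden size so the highway term needs no projection
    return 3 * d * d + 4 * d


for name, count in [
    ("LSTM", lstm_params(128, 128)),
    ("GRU", gru_params(128, 128)),
    ("SRU", sru_params(128)),
]:
    print(f"{name}: {count:,} parameters")
# LSTM: 131,584 parameters
# GRU: 98,688 parameters
# SRU: 49,664 parameters
```

The 4:3 ratio between LSTM and GRU holds for any input and hidden sizes, since both pay the same cost per gate and differ only in the number of gates.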

GRU and SRU, on the other hand, are architecturally simpler and may be more efficient in certain applications. A GRU drops LSTM's separate cell state and one set of weights, and in several published comparisons it reaches accuracy similar to LSTM's. An SRU goes further by moving nearly all of its computation out of the sequential loop, at the possible cost of weaker performance on tasks that depend on long-range context.

In conclusion, LSTM remains a common default for tasks that require capturing long-term dependencies in sequential data. However, GRU and SRU can be more efficient alternatives in applications where simplicity and speed are key. Ultimately, the choice of RNN architecture depends on the specific requirements of the task at hand.
