Mastering the Art of Recurrent Neural Networks: From Simple Models to Gated Architectures

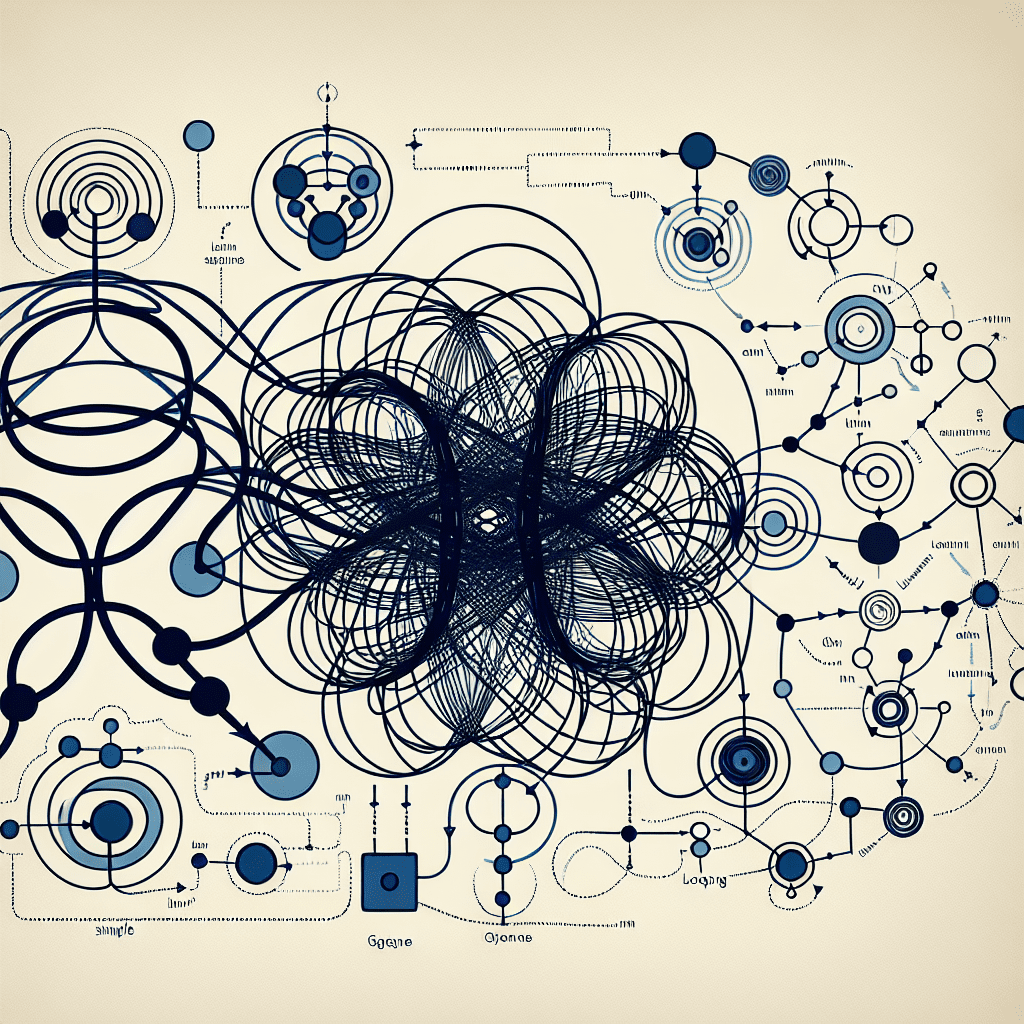

Recurrent Neural Networks (RNNs) are a class of artificial neural networks designed to handle sequential data. They have been widely used in natural language processing, speech recognition, and time series analysis. In this article, we will explore the fundamentals of RNNs and then turn to the gated architectures that made them practical for long sequences.

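At its core, an RNN is a neural network whose connections form a directed cycle: the hidden state computed at one time step is fed back into the network at the next step, which lets it maintain a memory of past information. This enables RNNs to process sequential data by taking into account the context of previous inputs. However, traditional RNNs suffer from the vanishing gradient problem. During backpropagation through time, the gradient is multiplied by a similar factor at every step, so over long sequences it tends to shrink toward zero and the network struggles to learn from distant inputs. The opposite failure, exploding gradients, can occur when that factor is larger than one.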
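The shrinking gradient described above can be illustrated with a deliberately tiny model. The sketch below assumes a scalar RNN with the recurrence h_t = tanh(w_h * h_{t-1} + w_x * x_t + b); the function names, weights, and inputs are illustrative choices, not taken from any particular library.

```python
import math

def rnn_forward(xs, w_h, w_x, b=0.0, h0=0.0):
    """Run a scalar vanilla RNN: h_t = tanh(w_h * h_{t-1} + w_x * x_t + b)."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w_h * hs[-1] + w_x * x + b))
    return hs  # hs[0] is the initial state, hs[t] the state after step t

def grad_last_wrt_first(hs, w_h):
    """d h_T / d h_0 by the chain rule: the product of w_h * (1 - h_t^2) over all steps."""
    grad = 1.0
    for h in hs[1:]:
        grad *= w_h * (1.0 - h * h)  # derivative of tanh(a) is 1 - tanh(a)^2
    return grad

xs = [0.5] * 50                                  # assumed toy input sequence
hs = rnn_forward(xs, w_h=0.9, w_x=1.0)
grad_5 = grad_last_wrt_first(hs[:6], w_h=0.9)    # sensitivity across 5 steps
grad_50 = grad_last_wrt_first(hs, w_h=0.9)       # sensitivity across 50 steps
print(f"5 steps: {grad_5:.3e}   50 steps: {grad_50:.3e}")
```

Because every factor in the product has magnitude below one here, the 50-step gradient is many orders of magnitude smaller than the 5-step one, which is the effect that makes long-range dependencies hard to learn.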

To address this issue, more advanced architectures such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) were introduced. These gated architectures use learned gates to decide how much of the existing state to keep and how much to overwrite at each step, allowing them to better capture long-range dependencies in data. An LSTM keeps a separate cell state controlled by input, forget, and output gates, while a GRU merges this into a single hidden state controlled by update and reset gates. LSTM, in particular, has been shown to be highly effective in tasks requiring long-term memory retention, such as machine translation and sentiment analysis.
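To make the gating idea concrete, here is a minimal sketch of one GRU step on scalar values. The parameter names in the dictionary are hypothetical, and the blending convention (an update gate near 0 keeps the old state) is one of two equivalent conventions in use; real implementations operate on vectors and weight matrices.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def gru_step(x, h_prev, p):
    """One scalar GRU step. p maps hypothetical parameter names to floats."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate
    # Candidate state: the reset gate scales how much of the old state it sees.
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # Convention assumed here: z near 0 keeps the old state, z near 1 overwrites it.
    return (1.0 - z) * h_prev + z * h_cand

params = dict(w_z=1.0, u_z=1.0, b_z=0.0,
              w_r=1.0, u_r=1.0, b_r=0.0,
              w_h=1.0, u_h=1.0, b_h=0.0)

# Force the update gate almost shut to show the state being carried forward.
keep = dict(params, b_z=-20.0)
h_kept = 0.8
for x in [0.3, -0.5, 0.9]:
    h_kept = gru_step(x, h_kept, keep)

# With the default parameters the state is partly overwritten at each step.
h_mixed = 0.8
for x in [0.3, -0.5, 0.9]:
    h_mixed = gru_step(x, h_mixed, params)

print(h_kept, h_mixed)
```

With the gate nearly closed, the state passes through three steps almost unchanged. That additive carry-over path is what lets gradients survive across many time steps.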

To master the art of RNNs, it is crucial to understand the inner workings of these architectures and how to effectively train and optimize them. This involves tuning hyperparameters, selecting appropriate activation functions, and implementing regularization techniques to prevent overfitting. Additionally, practitioners must be familiar with gradient clipping, which caps the size of the gradient to guard against exploding gradients, and teacher forcing, which feeds the ground-truth previous output to the model during training instead of its own prediction. Both help stabilize training and improve convergence.
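The two training techniques named above can be sketched in a few lines. This is a simplified illustration under stated assumptions: gradients are a flat list of floats, and `step_fn` is a hypothetical stand-in for one decoder step that maps the previous token to a predicted token.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale gradient values so their combined L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]

def decode(step_fn, start_token, targets, teacher_forcing):
    """Run a decoder for len(targets) steps.

    With teacher forcing, the next input is the ground-truth token;
    otherwise it is the model's own previous prediction.
    """
    prev, outputs = start_token, []
    for target in targets:
        pred = step_fn(prev)
        outputs.append(pred)
        prev = target if teacher_forcing else pred
    return outputs

def toy_step(token):
    """Toy 'model' (assumption for illustration): always predicts token + 1."""
    return token + 1

clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)  # norm 5 is rescaled to norm 1
forced = decode(toy_step, 0, targets=[10, 20, 30], teacher_forcing=True)
free = decode(toy_step, 0, targets=[10, 20, 30], teacher_forcing=False)
print(clipped, forced, free)
```

In the forced run each prediction is conditioned on the correct previous token, so early mistakes do not compound during training. In the free run the model consumes its own outputs, which is how it behaves at inference time.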

Moreover, the use of pre-trained word embeddings and attention mechanisms can further enhance the performance of RNN models in tasks involving natural language processing. Pre-trained embeddings bring in knowledge learned from large external corpora, and attention lets the model focus on the most relevant parts of the input sequence at each output step. Together these can substantially improve RNN results in tasks such as machine translation, text generation, and sentiment analysis.
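As an illustration of how attention focuses on relevant parts of the input, the sketch below implements plain dot-product attention over a list of encoder states. It is one common scoring variant among several, and the example vectors are made up for the demonstration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_attention(query, keys, values):
    """Score each key against the query, then return a weighted sum of the values."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context

# Assumed toy data: three encoder hidden states and one decoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [0.0, 5.0]

weights, context = dot_attention(decoder_state, encoder_states, encoder_states)
print(weights, context)
```

The decoder state aligns with the second and third encoder states, so they receive nearly all of the weight and dominate the context vector that would be passed on to the next decoder step.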

In conclusion, mastering the art of recurrent neural networks requires a deep understanding of both the basic concepts and advanced architectures that underlie these models. By combining theoretical knowledge with practical experience in training and optimizing RNNs, practitioners can unlock the full potential of these powerful tools in solving complex sequential data tasks. With continuous advancements in the field of deep learning, RNNs are poised to remain a cornerstone in the development of intelligent systems for years to come.
