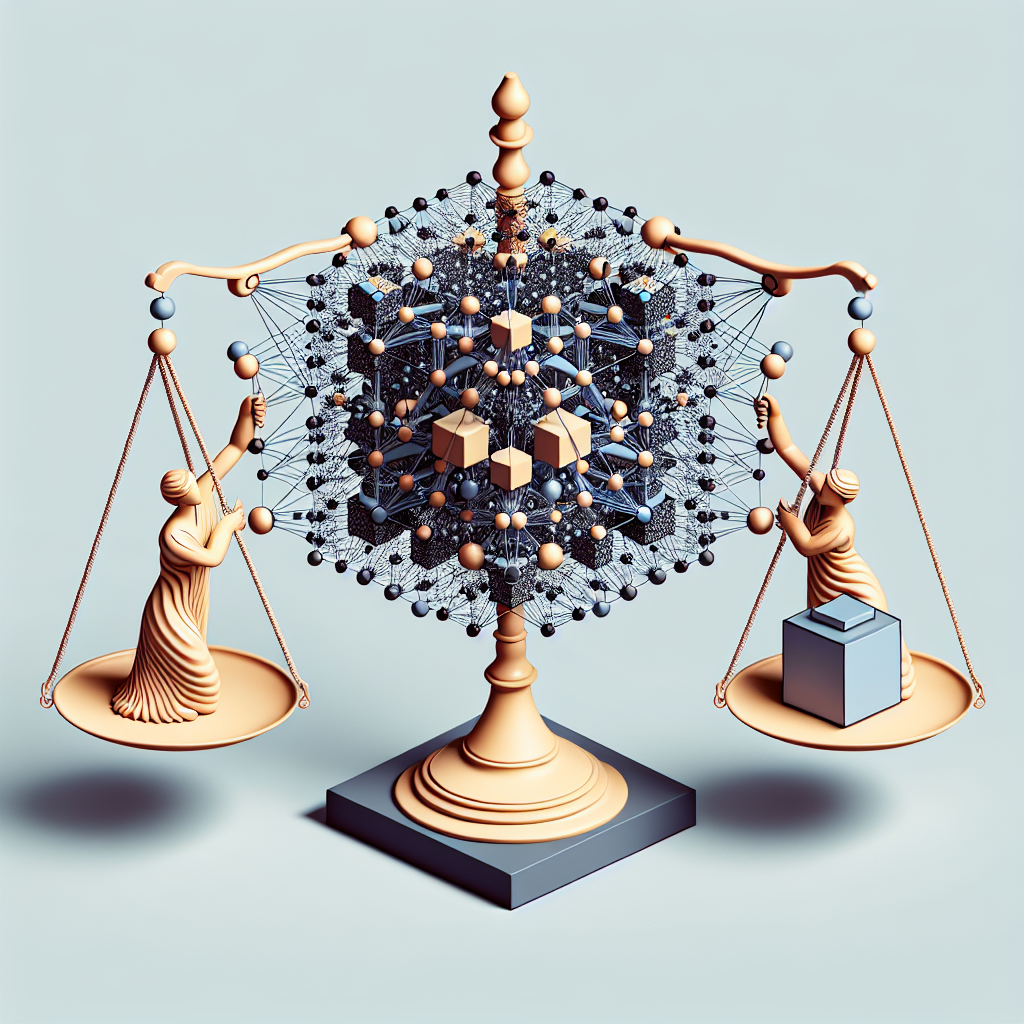

Deep neural networks have driven major advances in artificial intelligence and machine learning, with strong results in tasks such as image recognition, natural language processing, and speech recognition. A persistent challenge for the researchers and practitioners who work with them, however, is bias and fairness.

Bias in deep neural networks refers to systematic errors in a model’s predictions that stem from the training data, the algorithm used, or the assumptions made during model development. Such bias can lead to unfair outcomes, such as discriminatory decisions in hiring, loan approvals, or criminal justice.
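One common way to make "unfair outcomes" measurable is to compare how often each group receives a positive prediction. The sketch below computes that gap (often called the demographic parity difference) in plain Python. The function names, the group labels, and the loan decisions are made up for illustration, and this is only one of several fairness metrics that could be used.

```python
from collections import defaultdict


def positive_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions (1 = approved) for two made-up groups.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = positive_rates(preds, groups)        # {"a": 0.75, "b": 0.25}
gap = demographic_parity_gap(preds, groups)  # 0.5
```

A gap of 0 would mean every group is approved at the same rate. How large a gap is acceptable depends on the application.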

To address bias and fairness issues in deep neural networks, researchers have been exploring various techniques and approaches. One key strategy is to make the training data diverse, representative, and as free of known biases as possible. This can be done by collecting data from a wide range of sources, balancing the representation of different groups in the dataset, and carefully curating the data to remove biased or discriminatory information.
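When collecting more data for an under-represented group is not possible, one simple way to balance representation is to reweight the examples you already have. The sketch below assigns each example an inverse-frequency weight so that every group contributes the same total weight. The function name and group labels are hypothetical; in practice the weights would be passed to whatever per-sample weighting your training framework supports.

```python
from collections import Counter


def balancing_weights(groups):
    """Weight each example by n / (k * group_count).

    With n examples and k groups, every group's weights then sum to n / k,
    and the weights over the whole dataset still sum to n.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]


# Hypothetical dataset: group "a" has three times as many examples as "b".
groups = ["a"] * 6 + ["b"] * 2
weights = balancing_weights(groups)

weight_a = sum(w for w, g in zip(weights, groups) if g == "a")
weight_b = sum(w for w, g in zip(weights, groups) if g == "b")
```

Reweighting only corrects for how often a group appears. It does not fix labels that are themselves biased.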

Another approach to reducing bias in deep neural networks is to use fairness-aware algorithms that build fairness constraints directly into the model optimization process. These algorithms aim to minimize discrimination by penalizing the model for making biased predictions or by adjusting the decision boundaries so that different groups are treated comparably.
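As a rough illustration of "penalizing the model for making biased predictions", the sketch below adds a fairness term to an ordinary log loss: the squared gap between the groups' average predicted scores, scaled by a strength parameter. The names `fair_loss` and `lam` and the example data are assumptions made for this sketch, and the penalty shown is just one of many possible fairness constraints.

```python
import math


def log_loss(probs, labels):
    """Average binary cross-entropy of predicted probabilities."""
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for p, y in zip(probs, labels):
        total += y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return -total / len(labels)


def mean_score_gap(probs, groups):
    """Difference between the highest and lowest group-average score."""
    by_group = {}
    for p, g in zip(probs, groups):
        by_group.setdefault(g, []).append(p)
    means = [sum(v) / len(v) for v in by_group.values()]
    return max(means) - min(means)


def fair_loss(probs, labels, groups, lam=1.0):
    """Log loss plus lam times the squared score gap between groups."""
    return log_loss(probs, labels) + lam * mean_score_gap(probs, groups) ** 2


# Hypothetical predictions for four examples in two made-up groups.
probs = [0.9, 0.8, 0.3, 0.2]
labels = [1, 1, 0, 0]
groups = ["a", "a", "b", "b"]

plain = log_loss(probs, labels)
penalized = fair_loss(probs, labels, groups, lam=2.0)  # plain + 2.0 * 0.6 ** 2
```

Setting `lam` to 0 recovers the plain loss. Larger values push the optimizer to close the gap between groups, usually at some cost in raw accuracy.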

In addition to data collection and algorithmic approaches, researchers are also exploring interpretability techniques to understand and mitigate bias in deep neural networks. By analyzing the model’s predictions and identifying the factors that contribute to biased outcomes, researchers can make informed decisions about how to improve the model’s fairness and reduce the effect of bias on its final predictions.
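One simple way to identify which factors contribute to a biased outcome is feature ablation: replace one input feature with its dataset average and see how much the gap between groups shrinks. The sketch below does this for a hand-written linear scorer that stands in for a trained network. The scorer, the feature names (`income`, `zip_risk`), and the data are all hypothetical.

```python
def score(row):
    """Hypothetical stand-in for a trained model: a fixed linear score."""
    return 0.5 * row["income"] + 0.5 * row["zip_risk"]


def group_gap(rows, scorer):
    """Difference between the highest and lowest group-average score."""
    by_group = {}
    for row in rows:
        by_group.setdefault(row["group"], []).append(scorer(row))
    means = [sum(v) / len(v) for v in by_group.values()]
    return max(means) - min(means)


def ablation_effect(rows, scorer, feature):
    """How much the group gap shrinks when `feature` is set to its mean."""
    mean_value = sum(r[feature] for r in rows) / len(rows)
    neutral = [{**r, feature: mean_value} for r in rows]
    return group_gap(rows, scorer) - group_gap(neutral, scorer)


# Made-up data: income is distributed identically in both groups,
# while zip_risk differs sharply between them.
rows = [
    {"group": "a", "income": 0.6, "zip_risk": 0.9},
    {"group": "a", "income": 0.4, "zip_risk": 0.7},
    {"group": "b", "income": 0.6, "zip_risk": 0.1},
    {"group": "b", "income": 0.4, "zip_risk": 0.3},
]

effects = {f: ablation_effect(rows, score, f) for f in ("income", "zip_risk")}
```

Here neutralizing `zip_risk` removes the whole gap while neutralizing `income` changes nothing, which points to `zip_risk` as a proxy for group membership in this toy data. Real networks are nonlinear and their features interact, so an ablation like this is a starting point for investigation rather than a full explanation.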

Overall, overcoming bias and fairness issues in deep neural networks is a complex task that requires a multi-faceted approach. By addressing bias at every stage of model development, from data collection to algorithm design to model interpretation, researchers can build fairer, less biased deep neural networks that can be deployed across a wide range of applications with greater confidence.
