ATLANTA — Texas senior kicker Bert Auburn, who has an additional year of eligibility, told Horns247 on Monday he plans to return to Texas next season.

And Texas special teams coordinator Jeff Banks said despite signing senior Utah transfer Jack Bouwmeester, he wants freshman Michael Kern to stay at Texas and continue to develop.

“I think adding [Bouwmeester], a senior punter, coming in with Mike [Kern’s] development, we’re going to have a great, solidified punter position for the next four years, which I’m excited about,” Banks said. “I think Mike has had his two best games the last two games, so that’s been good.”

Texas coaches and players had Media Day Monday leading up to their College Football Playoff quarterfinal against Arizona State in the Peach Bowl on Jan. 1 at 12 pm CT (on ESPN).

Auburn told Horns247 he plans to return to Texas next season.

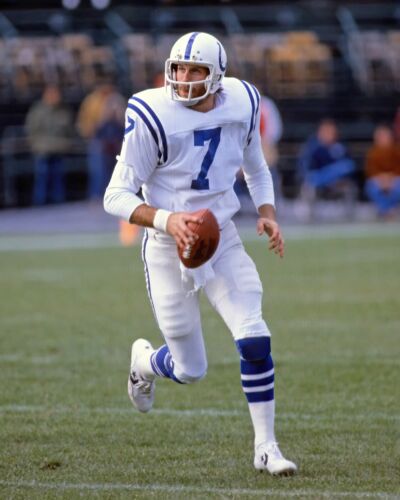

After hitting 80.8% of his field goals in 2022 (21-of-26) and 82.9% in 2023 (29-of-35), Auburn has hit just 68.2% this season (15-of-22).

Auburn is 9-of-9 on field goals of 20-39 yards, but only 6-of-11 on attempts of 40-49 yards and 0-of-2 on attempts of 50 or more yards. His misses this season have been from 43 (Michigan), 44 (OU), 51 (Florida), 47 (Kentucky), 48 (A&M), 42 and 51 yards (both in the SEC title game).

“I think it’s pretty clear as far as my game I can speak on, I mean, it hasn’t gone the way I’ve wanted it to,” Auburn said of his season so far. “It hasn’t been the best year, but, I mean, it’s all in the past. That doesn’t mean I can’t have the next couple of games going forward that I want to have. So just looking forward to what’s next.”

The good news is Auburn’s 37-yard field goal with 18 seconds left, forcing overtime against Georgia in the SEC Championship game, made Auburn 5-of-5 on field-goal attempts to tie or take the lead in the final two minutes of a game during his career. The others: a 48-yarder as time expired at Texas Tech in 2022, forcing OT; a 49-yarder with 1:27 left, giving Texas a 19-17 lead against Alabama in 2022; a 47-yarder against Oklahoma in 2023 with 1:17 left, giving Texas a 30-27 lead; and a 42-yarder in OT in Texas’ 33-30 win over Kansas State in 2023.

“It’s definitely reassuring to think about [his successful pressure kicks],” Auburn said. “But like I said earlier, that’s the past. It doesn’t guarantee the next one. So really, just focusing in on everything you’ve practiced prepares you for those situations.”

Auburn said the thing he loves most about this year’s team is the culture coach Steve Sarkisian has established.

“I got here the first year Sark was here. We were five and seven, and now just being a part of the whole culture change has been unreal, just what Sark and all the seniors here, and, you know, people that have left to have put into this team. As far as culture-wise, I don’t think anybody in the country can beat it,” Auburn said.

Punter Michael Kern has struggled through freshman growing pains, averaging 40.53 yards per punt.

Most freshman punters struggle. Texas’ Michael Dickson, who won the Ray Guy Award in 2017, averaged 41.3 yards per punt as a freshman in 2015 before averaging 47.4 yards per punt in 2016 and 2017.

Banks’ plan is for Kern to stay at Texas and develop into a great punter. Bouwmeester, who averaged 45.5 yards per punt in 2023 with 19 punts of 50 or more yards and 20 downed inside opponents’ 20-yard line, provides veteran insurance as Kern grows on the job.

