Tag: Concept

The Evolution of NVIDIA Virtual Reality: From Concept to Consumer Favorite

Virtual reality (VR) technology has come a long way in recent years, and one of the companies at the forefront of this evolution is NVIDIA. As VR has grown from a niche concept into a consumer favorite, NVIDIA has played a key role in shaping the VR landscape.

The concept of virtual reality has been around for decades, but it was not until the consumer Oculus Rift and HTC Vive headsets arrived in 2016 that the technology began to gain mainstream popularity. NVIDIA, a leading manufacturer of graphics processing units (GPUs), recognized the potential of VR early on and began investing heavily in VR technology.

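One of NVIDIA’s first forays into VR came with its GeForce GTX 970 and 980 graphics cards. They were general-purpose gaming cards rather than VR-specific designs, but NVIDIA launched them alongside its VR Direct technologies, and the GTX 970 was the NVIDIA card named in the recommended specifications for both the Oculus Rift and the HTC Vive. Their high-performance graphics made them a practical foundation for immersive VR experiences.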

As VR technology continued to advance, NVIDIA expanded its offerings to include the GeForce GTX 1080 and 1080 Ti, which further improved performance and visual quality in VR applications. These cards were particularly well-received by consumers and quickly became favorites among VR enthusiasts.

In addition to its hardware offerings, NVIDIA also developed software solutions to enhance the VR experience. One of the company’s most notable contributions to VR technology is its VRWorks SDK, which provides developers with tools and resources to optimize their VR applications for NVIDIA GPUs.

NVIDIA’s commitment to VR technology has not gone unnoticed, with the company receiving accolades for its contributions to the industry. In 2017, NVIDIA was awarded the Best VR Hardware award at the prestigious VR Awards for its GeForce GTX 1080 Ti graphics card.

Today, NVIDIA continues to push the boundaries of VR technology with its latest offerings, including the GeForce RTX series of graphics cards. These cards feature real-time ray tracing technology, which further enhances the visual quality of VR applications.

Overall, the evolution of NVIDIA’s virtual reality technology has been nothing short of impressive. From its early investments in VR hardware to its ongoing commitment to innovation, NVIDIA has established itself as a key player in the VR industry. As VR technology continues to evolve, it’s safe to say that NVIDIA will be at the forefront, shaping the future of virtual reality for years to come.

From Concept to Production: Developing Serverless Applications on Google Cloud Run

Serverless computing has become an increasingly popular choice for developing and deploying applications in the cloud. With serverless architecture, developers can focus on writing code without the need to manage servers, scaling, or infrastructure. Google Cloud Run is a serverless platform that allows developers to build and deploy containerized applications easily.

From concept to production, developing serverless applications on Google Cloud Run involves a few key steps. Let’s break down the process:

1. Conceptualizing the Application: Before getting started with developing a serverless application on Google Cloud Run, it’s essential to have a clear understanding of the application’s requirements and goals. This includes defining the application’s functionality, user experience, and any third-party integrations that may be needed.

2. Choosing the Technology Stack: Once the application concept is defined, the next step is to choose the technology stack that will be used to develop the application. Because Cloud Run runs standard containers, any language, framework, or tool that can serve HTTP requests from inside a container can be used, so developers are free to work with their preferred stack.

3. Developing the Application: With the technology stack in place, developers can start writing code to build the application. Google Cloud Run allows developers to package their code into containers using Docker, making it easy to deploy and manage applications in a serverless environment.

4. Testing and Debugging: Testing is a critical part of the development process to ensure that the application functions as expected. Because a Cloud Run service is an ordinary container, it can be run and tested locally before deployment, and once deployed, its log output is collected by Cloud Logging for debugging.

5. Deploying the Application: Once the application has been tested and debugged, it’s time to deploy it on Google Cloud Run. A containerized application can be deployed with a single gcloud run deploy command, and Cloud Run automatically scales the number of instances up and down with incoming traffic, including down to zero when the service is idle.

6. Monitoring and Maintenance: After the application is deployed, it’s important to monitor its performance and make any necessary updates or maintenance. Google Cloud Run provides monitoring tools and dashboards to track the application’s performance and make informed decisions about scaling and optimization.
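
The steps above can be sketched with a minimal service written in Python using only the standard library. This is an illustrative assumption, not code from Google: it reads its listening port from the PORT environment variable, which Cloud Run sets for each container, and writes one JSON object per log line, which Cloud Logging can parse into structured entries with severity and message fields. The names HelloHandler and make_server are hypothetical.

```python
import json
import os
import sys
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a small JSON body."""

    def do_GET(self):
        body = json.dumps({"message": "Hello from Cloud Run", "path": self.path}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Write one JSON object per line to stdout instead of the default
        # plain-text stderr log, so Cloud Logging can parse it as a
        # structured entry with "severity" and "message" fields.
        entry = {"severity": "INFO", "message": format % args}
        print(json.dumps(entry), file=sys.stdout, flush=True)


def make_server(port=None):
    """Build the HTTP server, taking the port from $PORT when none is given."""
    if port is None:
        # Cloud Run sets PORT for each container; 8080 is a local fallback.
        port = int(os.environ.get("PORT", "8080"))
    # Listen on all interfaces so traffic from outside the container arrives.
    return HTTPServer(("0.0.0.0", port), HelloHandler)
```

Starting the service is a single call, make_server().serve_forever(), which the container’s entry point would run. Locally, the same call lets you test the service at http://localhost:8080/ before building the container image.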

In conclusion, developing serverless applications on Google Cloud Run offers a streamlined and efficient way to build and deploy applications in the cloud. By following the steps outlined above, developers can take their application from concept to production with ease. With Google Cloud Run’s flexibility and scalability, developers can focus on writing code and delivering value to their users without the hassle of managing servers or infrastructure.

From Concept to Deployment: Building Real-World Services with Google Cloud Run

Google Cloud Run is a serverless platform that allows developers to build, deploy, and scale containerized applications quickly and easily. In this article, we will discuss how to take a concept for a real-world service and deploy it using Google Cloud Run.

The first step in building a real-world service with Google Cloud Run is to define the concept for the service. This could be a web application, API, or any other type of service that you want to deploy. Once you have a clear idea of what you want to build, the next step is to create a containerized application that implements this concept.

To create a containerized application, you can use Docker to package your application and its dependencies into a single container image. After you push this image to a container registry such as Artifact Registry, it can be deployed to Google Cloud Run, which will automatically manage the scaling and infrastructure for your application.

Once you have created your container image, you can deploy it to Google Cloud Run using the gcloud command-line tool or the Cloud Console. Google Cloud Run will automatically create a fully managed and serverless environment for your application, handling all of the infrastructure and scaling for you.
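
Because Cloud Run starts and stops instances on its own, a service should be ready to shut down cleanly. Cloud Run’s documentation describes sending SIGTERM to a container shortly before an instance is stopped; the sketch below assumes that behavior and stops a standard-library Python server when the signal arrives. OkHandler, make_sigterm_handler, and run are hypothetical names chosen for illustration.

```python
import signal
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class OkHandler(BaseHTTPRequestHandler):
    """Minimal stateless handler: every GET returns "ok"."""

    def do_GET(self):
        self.send_response(200)
        self.send_header("Content-Length", "2")
        self.end_headers()
        self.wfile.write(b"ok")


def make_sigterm_handler(server):
    """Return a signal handler that asks `server` to stop serving."""

    def handle_sigterm(signum, frame):
        # server.shutdown() blocks until serve_forever() has returned.
        # Python runs signal handlers on the main thread, which is where
        # run() calls serve_forever(), so shutdown() is called from a
        # helper thread to avoid deadlocking.
        threading.Thread(target=server.shutdown, daemon=True).start()

    return handle_sigterm


def run(port=8080):
    """Serve until SIGTERM arrives, then close the listening socket."""
    server = HTTPServer(("0.0.0.0", port), OkHandler)
    signal.signal(signal.SIGTERM, make_sigterm_handler(server))
    server.serve_forever()  # returns after shutdown() has been requested
    server.server_close()
```

Keeping the handler stateless, as here, also means any instance Cloud Run starts can serve any request, which is what lets the platform add and remove instances freely.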

After deploying your application to Google Cloud Run, you can access it using a unique URL that the platform provides. By default, Cloud Run requires callers to be authenticated; if you allow unauthenticated invocations, this URL can be used to reach your service from anywhere in the world, making it easy to share your application with users.

Overall, Google Cloud Run provides an easy and efficient way to build, deploy, and scale real-world services. By following the steps outlined in this article, you can take your concept for a service and quickly deploy it to Google Cloud Run, allowing you to focus on building your application rather than managing infrastructure.

From Concept to Reality: The Journey of Amazon Nova

The story of Amazon, the company behind the Amazon Nova family of AI foundation models, is a testament to the power of innovation and determination. What started as a simple idea in the mind of its founder, Jeff Bezos, has become one of the largest and most successful companies in the world. Its journey from concept to reality is a fascinating one, filled with ups and downs, twists and turns, and, ultimately, triumph.

It all began in the early 1990s, when Jeff Bezos was working on Wall Street and noticed the rapid growth of the internet. He saw an opportunity to revolutionize the way people shopped and decided to create an online bookstore. In 1994, Bezos left his job and founded Amazon in the garage of his rented house in Bellevue, Washington.

The early days of Amazon were challenging. Bezos faced skepticism from investors and competitors, as well as technical difficulties and logistical challenges. But he was determined to succeed and worked tirelessly to make his vision a reality. He focused on providing customers with a wide selection of books at competitive prices, as well as excellent customer service and fast delivery.

As Amazon grew, Bezos expanded its product offerings to include electronics, clothing, and household goods. The company also launched new products and services such as the Amazon Prime subscription service and the Kindle e-reader. These initiatives helped Amazon attract millions of customers and become a household name.

Over the years, Amazon has faced criticism and controversy, including accusations of unfair labor practices, antitrust violations, and the impact of its dominance on small businesses. But the company has continued to thrive, adapting to changing market conditions and evolving consumer preferences.

Today, Amazon is a global behemoth, serving customers in more than 100 countries, with annual revenue in the hundreds of billions of dollars. The company has diversified into cloud computing through Amazon Web Services, streaming services, and artificial intelligence, including its Amazon Nova models, and continues to push the boundaries of technology and commerce.

The journey of Amazon from concept to reality is a remarkable one, demonstrating the power of innovation, perseverance, and a relentless focus on customer satisfaction. As Jeff Bezos once said, “If you never want to be criticized, don’t do anything new.” Amazon has certainly done something new and has forever changed the way we shop and interact with the world.

The Evolution of Super Micro: From Concept to Reality

Super Micro is a technology company that has been making waves in the industry with its innovative products and solutions. The company’s journey from concept to reality is a fascinating one, showcasing the evolution of technology and the power of innovation.

Super Micro was founded in 1993 in San Jose, California, by Charles Liang and his co-founders, out of the desire to create high-performance, energy-efficient servers and storage solutions that could meet the growing demands of businesses and organizations. The founders recognized the need for more efficient and reliable hardware that could support the increasing data processing and storage requirements of modern enterprises.

With this vision in mind, Super Micro set out to develop cutting-edge technology that would revolutionize the industry. The company invested heavily in research and development, hiring top engineers and designers to bring their ideas to life. Through years of hard work and dedication, Super Micro was able to create a range of products that were not only powerful and reliable but also cost-effective and environmentally friendly.

One of the key factors in the success of Super Micro was its focus on innovation. The company was quick to adopt new technologies and trends, continuously pushing the boundaries of what was possible in the world of hardware. This commitment to innovation allowed Super Micro to stay ahead of the competition and remain a leader in the industry.

Today, Super Micro is known for its state-of-the-art servers, storage solutions, and networking equipment that are used by some of the world’s largest companies and organizations. The company’s products are renowned for their performance, reliability, and efficiency, making them a popular choice for businesses looking to optimize their IT infrastructure.

The evolution of Super Micro is a testament to the power of innovation and the impact that technology can have on the world. By constantly pushing the boundaries of what is possible, Super Micro has been able to create products that are not only cutting-edge but also practical and cost-effective. As the company continues to grow and expand its offerings, it is clear that the future of technology is in good hands with Super Micro leading the way.

Beyond the Limits of Our Universe: Exploring the Concept of 8 Space

The concept of 8 space is a mind-bending idea that pushes the boundaries of our understanding of the universe. It proposes that beyond the limits of our known universe may lie a realm of further dimensions and possibilities that we cannot directly comprehend. This concept challenges our perception of reality and forces us to question the nature of existence itself.

Imagine a universe that exists beyond the three dimensions of space and one dimension of time that we are familiar with. In this realm of 8 space, there would be additional dimensions that we cannot perceive directly. These dimensions could be curled up into loops far too small to detect, as some string-theory models propose, or they could be vast and expansive, stretching out into infinity.

One of the most intriguing ideas associated with 8 space is that of parallel universes. In some versions of this idea, an infinite number of universes exist alongside our own, each with its own set of physical laws and constants. These parallel universes could be vastly different from our own, with different forms of matter, energy, and even life forms.

The concept of 8 space also raises questions about the nature of reality and consciousness. If there are other dimensions beyond our own, could there be beings that exist in those dimensions that are aware of our existence? Could they be influencing our world in ways that we cannot perceive or understand?

Exploring the concept of 8 space opens up a world of possibilities and challenges our understanding of the universe. It forces us to think beyond the limits of our known reality and consider the existence of other realms that are beyond our comprehension. While the idea of 8 space may seem far-fetched and speculative, it is a fascinating concept that pushes the boundaries of our understanding of the universe and the nature of reality itself.

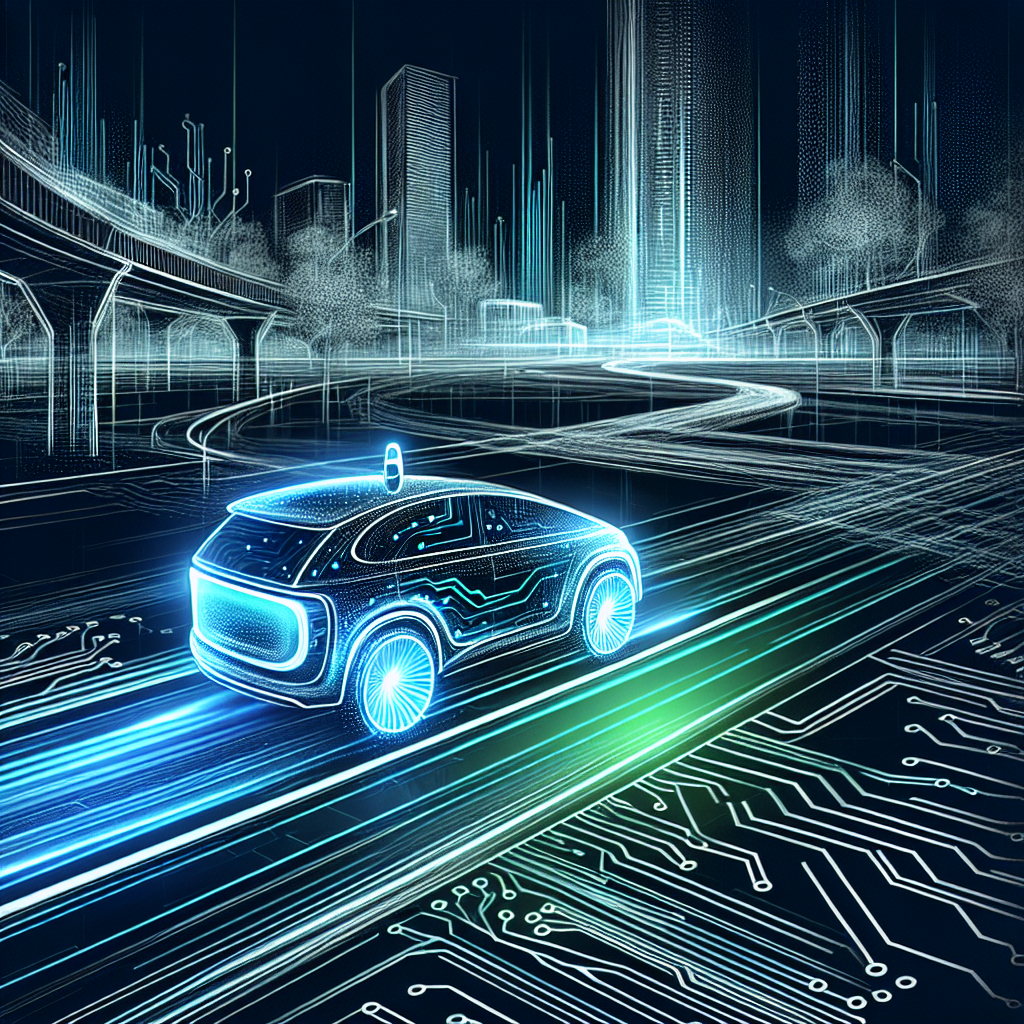

From Concept to Reality: NVIDIA’s Journey in Developing Autonomous Vehicles

Autonomous vehicles have long been a dream of the future, promising to revolutionize the way we travel and drastically reduce accidents on the road. One company that has been at the forefront of developing this technology is NVIDIA, a leading provider of graphics processing units (GPUs) for gaming and professional markets.

NVIDIA’s journey in developing autonomous vehicles began in 2015 when they announced the Drive PX platform, designed to provide the computing power necessary for self-driving cars. The platform combined two NVIDIA Tegra X1 processors with deep learning software, enabling cars to perceive and understand their surroundings in real time.

Since then, NVIDIA has made significant strides in advancing autonomous vehicle technology. Its long-running collaboration with Audi, for example, reached production in the 2018 Audi A8, whose zFAS central driver-assistance controller included an NVIDIA Tegra processor. Audi designed the car’s Traffic Jam Pilot feature for Level 3 automation, in which the car performs the driving task under specific conditions but the driver must be ready to take over when the system requests it.

In 2018, NVIDIA announced the Drive AGX platform, a scalable AI computing platform for autonomous vehicles. The platform is designed to handle the complex computing tasks required for self-driving cars, such as sensor fusion, perception, and path planning. It also supports NVIDIA’s TensorRT software, which optimizes trained deep learning models for real-time inference.
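
Sensor fusion, one of the tasks listed above, can be illustrated with a deliberately simplified sketch. It does not describe how NVIDIA DRIVE implements fusion; it uses inverse-variance weighting, a standard way to combine two independent, unbiased measurements of the same quantity, which gives the same result as a one-step scalar Kalman filter update (treating one estimate as the prior and the other as the measurement). The radar and camera readings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # e.g. distance to the car ahead, in metres
    variance: float  # uncertainty of that value, in metres squared


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Combine two independent Gaussian estimates of the same quantity.

    Each input is weighted by the inverse of its variance, so the more
    certain sensor dominates, and the fused variance is smaller than
    either input's variance.
    """
    wa = 1.0 / a.variance
    wb = 1.0 / b.variance
    value = (wa * a.value + wb * b.value) / (wa + wb)
    return Estimate(value=value, variance=1.0 / (wa + wb))


radar = Estimate(value=25.0, variance=0.25)  # hypothetical radar reading
camera = Estimate(value=26.0, variance=1.0)  # hypothetical camera reading
fused = fuse(radar, camera)
print(fused)  # Estimate(value=25.2, variance=0.2)
```

The fused distance sits closer to the radar reading because its variance is four times smaller, and the fused variance (0.2) is lower than that of either sensor on its own.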

One of the key innovations that NVIDIA has brought to autonomous vehicle development is their use of deep learning technology. Deep learning allows computers to learn from data and make decisions without being explicitly programmed. NVIDIA’s GPUs are well-suited for deep learning tasks, making them a natural choice for autonomous vehicle development.

NVIDIA’s commitment to autonomous vehicles is evident in their partnerships with leading automakers such as Mercedes-Benz, Volvo, and Toyota. These partnerships have allowed NVIDIA to integrate their technology into a wide range of vehicles, from luxury sedans to commercial trucks.

As we look towards the future of autonomous vehicles, NVIDIA’s role in shaping this technology is hard to overstate. Their expertise in GPU technology and deep learning has propelled them to the forefront of the industry, making them a key player in the development of self-driving cars.

In conclusion, NVIDIA’s journey in developing autonomous vehicles is a testament to their commitment to innovation and technological advancement. With their cutting-edge technology and partnerships with leading automakers, NVIDIA is poised to revolutionize the way we travel in the years to come. From concept to reality, NVIDIA is paving the way for a future where self-driving cars are a common sight on the roads.

The Evolution of QOM2: From Concept to Reality

The concept of Quality of Manufacturing 2.0 (QOM2) has been gaining traction in the manufacturing industry over the past few years. It represents a shift in thinking from traditional quality control methods to a more proactive and data-driven approach to improving manufacturing processes. But how did this concept evolve from just an idea to a reality?

The evolution of QOM2 can be traced back to the growing demand for more efficient and higher quality manufacturing processes. With advancements in technology such as the Internet of Things (IoT) and big data analytics, manufacturers began to see the potential for using real-time data to monitor and optimize their production lines. This shift towards data-driven decision making paved the way for the development of QOM2.

One of the key components of QOM2 is the use of advanced analytics and machine learning algorithms to analyze data collected from sensors and other sources on the factory floor. By tracking key performance indicators in real-time, manufacturers can identify trends and patterns that may indicate potential issues or areas for improvement. This proactive approach allows for quicker problem solving and ultimately leads to higher quality products.
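
As a much simpler stand-in for the machine learning models described above, a classic statistical process control (SPC) chart shows the basic idea of flagging real-time readings that drift away from normal behavior. The sketch below is an illustrative assumption, not part of any QOM2 definition: it sets control limits at three standard deviations around a baseline mean and reports live readings that fall outside them. The measurements are hypothetical.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Shewhart-style limits: baseline mean plus or minus three standard deviations."""
    centre = mean(baseline)
    sigma = stdev(baseline)
    return centre - 3 * sigma, centre + 3 * sigma


def out_of_control(readings, limits):
    """Return (index, value) for each live reading outside the control limits."""
    low, high = limits
    return [(i, x) for i, x in enumerate(readings) if x < low or x > high]


# Hypothetical shaft diameters (mm) recorded while the process was stable.
baseline = [10.01, 9.99, 10.00, 10.02, 9.98, 10.00, 10.01, 9.99]
limits = control_limits(baseline)

live = [10.00, 10.01, 10.09, 9.99]
print(out_of_control(live, limits))  # [(2, 10.09)]
```

Only the third live reading is flagged, because it lies well above the upper limit of roughly 10.04 mm, so the issue can be investigated while production is still running.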

Another important aspect of QOM2 is the integration of quality control processes directly into the manufacturing line. This means that instead of waiting until the end of the production process to inspect products for defects, quality checks are performed at various stages of production. This not only reduces the likelihood of defects slipping through the cracks but also helps to identify and address issues earlier in the process.
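
The difference between end-of-line inspection and in-line checks can be sketched as a pipeline of stage gates. In this hypothetical example, each stage has its own check, and a part is rejected at the first stage that finds a problem, so the result names where the defect appeared. The stage names, tolerances, and the run_line function are invented for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

Part = Dict[str, float]
# A check returns an error message for a defective part, or None if it passes.
Check = Callable[[Part], Optional[str]]


def run_line(part: Part, stages: List[Tuple[str, Check]]) -> Tuple[bool, str]:
    """Pass a part through each stage's check, stopping at the first failure."""
    for stage_name, check in stages:
        problem = check(part)
        if problem is not None:
            # Rejecting here saves the later process steps and records the
            # stage at which the defect was detected.
            return False, f"rejected at {stage_name}: {problem}"
    return True, "passed all stages"


def check_diameter(part: Part) -> Optional[str]:
    return None if abs(part["diameter_mm"] - 10.0) <= 0.05 else "diameter out of tolerance"


def check_coating(part: Part) -> Optional[str]:
    return None if part["coating_um"] >= 20 else "coating too thin"


def check_torque(part: Part) -> Optional[str]:
    return None if part["torque_nm"] <= 12 else "over-torqued"


# Hypothetical stages and tolerances, for illustration only.
STAGES = [("machining", check_diameter), ("coating", check_coating), ("assembly", check_torque)]

print(run_line({"diameter_mm": 10.02, "coating_um": 15, "torque_nm": 10}, STAGES))
# (False, 'rejected at coating: coating too thin')
```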

The evolution of QOM2 has also been driven by the increasing demand for customization and personalization in manufacturing. With consumers expecting more personalized products, manufacturers need to be able to quickly adapt their production processes to meet these changing demands. By implementing QOM2 principles, manufacturers can more easily adjust their production lines to accommodate different product configurations and variations.

Overall, the evolution of QOM2 has been a gradual process, driven by the need for more efficient and higher quality manufacturing processes. As technology continues to advance and the demand for personalized products grows, we can expect to see even more innovations in the field of quality manufacturing. By embracing the principles of QOM2, manufacturers can stay ahead of the curve and continue to deliver high-quality products to their customers.

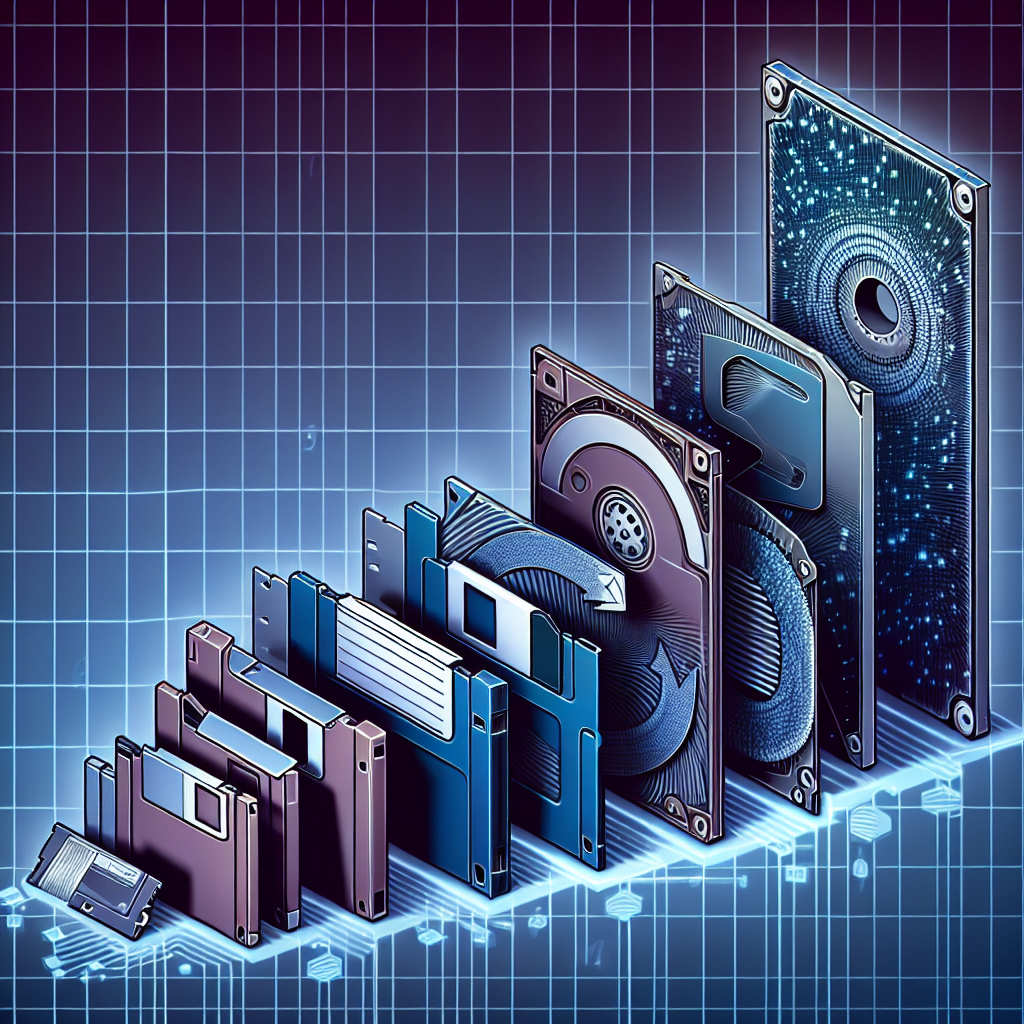

Exploring the Evolution of NVMe: From Concept to Mainstream Adoption

Non-Volatile Memory Express (NVMe) has been making waves in the world of data storage and processing, revolutionizing the way we think about storage technology. But how did NVMe come to be, and how has it evolved from a concept to mainstream adoption?

NVMe was first introduced in 2011 as a new interface specification designed to take advantage of the speed and efficiency of solid-state drives (SSDs). Traditional storage interfaces, such as Serial ATA (SATA) with its AHCI host controller interface, were designed for spinning hard disks and were not optimized for the high-speed, low-latency capabilities of SSDs, leading to bottlenecks in data transfer and processing.

By developing a new interface specifically tailored to the needs of SSDs, NVMe was able to unlock the full potential of these storage devices, allowing for faster data transfer speeds, reduced latency, and improved performance. This marked the beginning of a new era in storage technology.
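
One concrete reason NVMe suits SSDs better than SATA is its command queuing. The AHCI interface used by SATA drives offers a single queue of up to 32 commands, while the NVMe specification allows up to 65,535 I/O queues with up to 65,536 commands each, so many CPU cores can submit requests in parallel. The arithmetic below compares the theoretical maximum number of outstanding commands; the constant and function names are chosen for illustration, and real drives support far fewer queues than this limit.

```python
# Queue limits of the two host interfaces, per the AHCI (SATA) and NVMe specifications.
AHCI_QUEUES = 1
AHCI_COMMANDS_PER_QUEUE = 32
NVME_MAX_IO_QUEUES = 65_535
NVME_MAX_COMMANDS_PER_QUEUE = 65_536


def max_outstanding(queues: int, commands_per_queue: int) -> int:
    """Theoretical ceiling on commands a host can have in flight at once."""
    return queues * commands_per_queue


ahci = max_outstanding(AHCI_QUEUES, AHCI_COMMANDS_PER_QUEUE)
nvme = max_outstanding(NVME_MAX_IO_QUEUES, NVME_MAX_COMMANDS_PER_QUEUE)
print(ahci)  # 32
print(nvme)  # 4294901760
```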

In the years following its introduction, NVMe continued to evolve and improve, with new versions of the specification being released to address the changing needs of the industry. These updates included features such as improved power management, enhanced security capabilities, and support for new types of storage media.

As NVMe continued to gain traction in the market, more and more companies began to adopt the technology in their products. Today, NVMe has become the standard interface for high-performance storage devices, with a wide range of products available from a variety of manufacturers.

One of the key factors driving the adoption of NVMe has been the increasing demand for faster and more efficient storage solutions. With the rise of big data, artificial intelligence, and other data-intensive applications, the need for high-speed, low-latency storage has never been greater. NVMe provides a solution to these challenges, allowing for faster data access and processing, improved system performance, and reduced energy consumption.

In addition to its performance benefits, NVMe has also proven to be cost-effective for many organizations. While the initial cost of NVMe storage devices may be higher than traditional HDDs or SATA SSDs, the increased speed and efficiency of NVMe can lead to significant cost savings in the long run. This has made NVMe an attractive option for a wide range of applications, from enterprise data centers to consumer electronics.

Looking to the future, the evolution of NVMe is likely to continue, with new technologies and innovations driving further improvements in speed, efficiency, and performance. As the demand for high-speed storage solutions continues to grow, NVMe is poised to play an increasingly important role in shaping the future of data storage and processing.

In conclusion, NVMe has come a long way since its inception, evolving from a concept to mainstream adoption in a relatively short period of time. With its ability to deliver high-speed, low-latency storage solutions, NVMe is set to remain a key player in the world of data storage for years to come.