Generative Adversarial Networks (GANs) have become popular in computer vision for their ability to generate realistic images. Their potential in Natural Language Processing (NLP), however, has not yet been fully explored. In this article, we look at how GANs can be applied to NLP tasks and the challenges that come with doing so.

GANs are a type of neural network architecture that consists of two networks: a generator and a discriminator. The generator produces samples, while the discriminator tries to distinguish real samples from generated ones. The two networks are trained against each other: as the discriminator gets better at spotting generated samples, the generator learns to produce more realistic samples that can fool it.
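The two competing objectives can be made concrete with a small sketch. The code below is an illustration rather than a full training loop: it assumes the discriminator outputs a probability that a sample is real, uses the standard binary cross-entropy loss for the discriminator, and uses the common non-saturating loss for the generator. The function names and the probability values are hypothetical and chosen only for the example.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: push D(fake) toward 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (probability that a sample is real).
real_probs = [0.9, 0.8]
weak_fakes = [0.1, 0.2]    # discriminator easily rejects these
strong_fakes = [0.7, 0.6]  # discriminator is mostly fooled by these

print("D loss, weak fakes:  ", discriminator_loss(real_probs, weak_fakes))
print("D loss, strong fakes:", discriminator_loss(real_probs, strong_fakes))
print("G loss, weak fakes:  ", generator_loss(weak_fakes))
print("G loss, strong fakes:", generator_loss(strong_fakes))
```

As the generated samples become more convincing, the generator's loss falls and the discriminator's loss rises, which is the adversarial dynamic described above.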

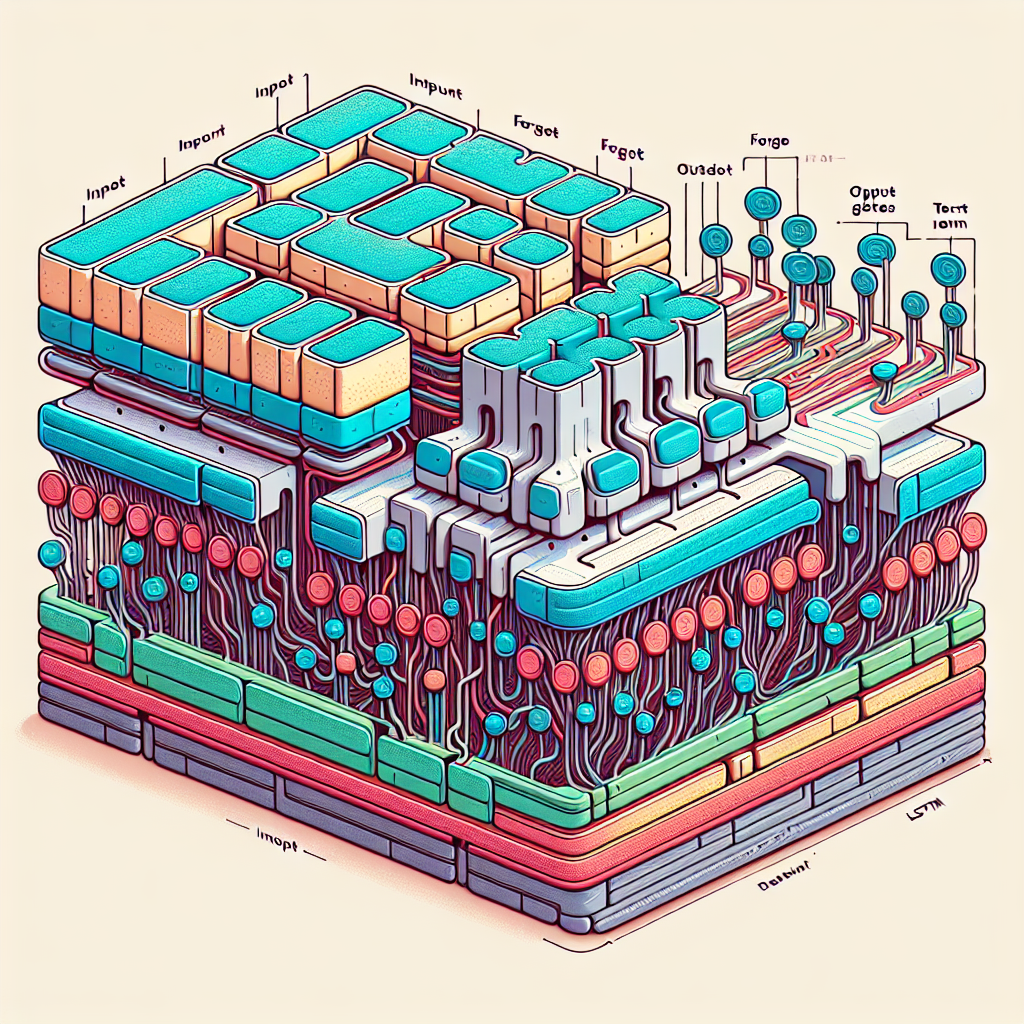

In the context of NLP, GANs can be used for tasks such as text generation, text summarization, and machine translation. A commonly cited motivation for using GANs in NLP is the prospect of generating diverse, high-quality text samples. Language models such as LSTMs and Transformers, when trained with maximum likelihood, can produce repetitive or generic text, particularly with greedy decoding. Adversarial training is one proposed way to address this: the generator is rewarded for producing samples that the discriminator cannot tell apart from real text, rather than only for predicting the next token.

Another advantage of using GANs for NLP is their ability to learn from unlabeled data. GANs can be trained on a large amount of unlabeled text data to learn the underlying structure of the data and generate realistic text samples. This can be particularly useful in scenarios where labeled data is scarce or expensive to obtain.

However, there are several challenges that come with using GANs for NLP tasks. One of the major challenges is the evaluation of generated text. Images can often be judged for realism at a glance, but the quality of generated text is more subjective and usually requires human judgment. Automated metrics such as BLEU and perplexity exist, but they capture only part of what makes text good, and developing better automated metrics for text generation remains an ongoing research problem.
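As one example of an automated proxy, the sketch below computes distinct-n, the ratio of unique n-grams to total n-grams across a set of generated samples. It is shown here only as an illustration of a diversity measure; it says nothing about fluency or meaning, and the whitespace tokenization and sample sentences are simplifying assumptions.

```python
def distinct_n(texts, n=2):
    """Return the fraction of n-grams across all texts that are unique."""
    total = 0
    unique = set()
    for text in texts:
        tokens = text.lower().split()  # naive whitespace tokenization (assumption)
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


# Hypothetical generated samples.
repetitive = ["the cat sat", "the cat sat"]
varied = ["the cat sat", "a dog ran"]

print(distinct_n(repetitive))  # 0.5
print(distinct_n(varied))      # 1.0
```

A low score suggests that the generator keeps repeating the same phrases, while a score close to 1 indicates that most n-grams are different from one another.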

Another challenge is the training instability of GANs. GANs are notoriously difficult to train and often suffer from issues such as mode collapse, where the generator learns to produce only a narrow set of outputs instead of covering the variety in the training data. Researchers are exploring techniques such as Wasserstein GANs and self-attention mechanisms to improve the stability of GAN training for NLP tasks.
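To show how the Wasserstein formulation differs from the standard GAN loss, here is a minimal sketch of its two objectives. In this setup the discriminator is replaced by a critic that outputs unbounded scores rather than probabilities. The function names and score values are hypothetical, and the sketch leaves out the constraint on the critic (for example weight clipping or a gradient penalty) that the method also requires.

```python
def mean(values):
    return sum(values) / len(values)


def wgan_critic_loss(real_scores, fake_scores):
    """The critic tries to score real samples higher than generated ones."""
    return mean(fake_scores) - mean(real_scores)


def wgan_generator_loss(fake_scores):
    """The generator tries to raise the critic's score on its samples."""
    return -mean(fake_scores)


# Hypothetical critic scores (unbounded real numbers, not probabilities).
real_scores = [2.0, 4.0]
fake_scores = [1.0, 0.0]

print(wgan_critic_loss(real_scores, fake_scores))  # -2.5
print(wgan_generator_loss(fake_scores))            # -0.5
```

Because these losses are differences of average scores rather than logarithms of probabilities, they do not flatten out in the same way when the critic becomes very confident, which is one reason this formulation is often described as more stable.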

In conclusion, GANs have the potential to benefit NLP by enabling the generation of diverse and high-quality text samples. However, challenges in evaluation and training stability still need to be addressed before GANs can be widely adopted for NLP tasks. Researchers are actively developing new architectures and training techniques to address these problems, and as the field evolves we can expect to see more applications of GANs in NLP.
