Data center downtime has consequences that reach well beyond the immediate monetary loss. The financial impact is significant, but downtime also carries hidden costs that are easy to overlook and that can have a lasting effect on a business’s operations and reputation.

One of the most significant hidden costs of data center downtime is the damage to a company’s reputation. In today’s digital age, customers expect instant access to information and services. When a company’s website or online services are down, customers may become frustrated and lose trust in the company’s ability to deliver on its promises. The result can be lost customers and a damaged brand image that is difficult to repair.

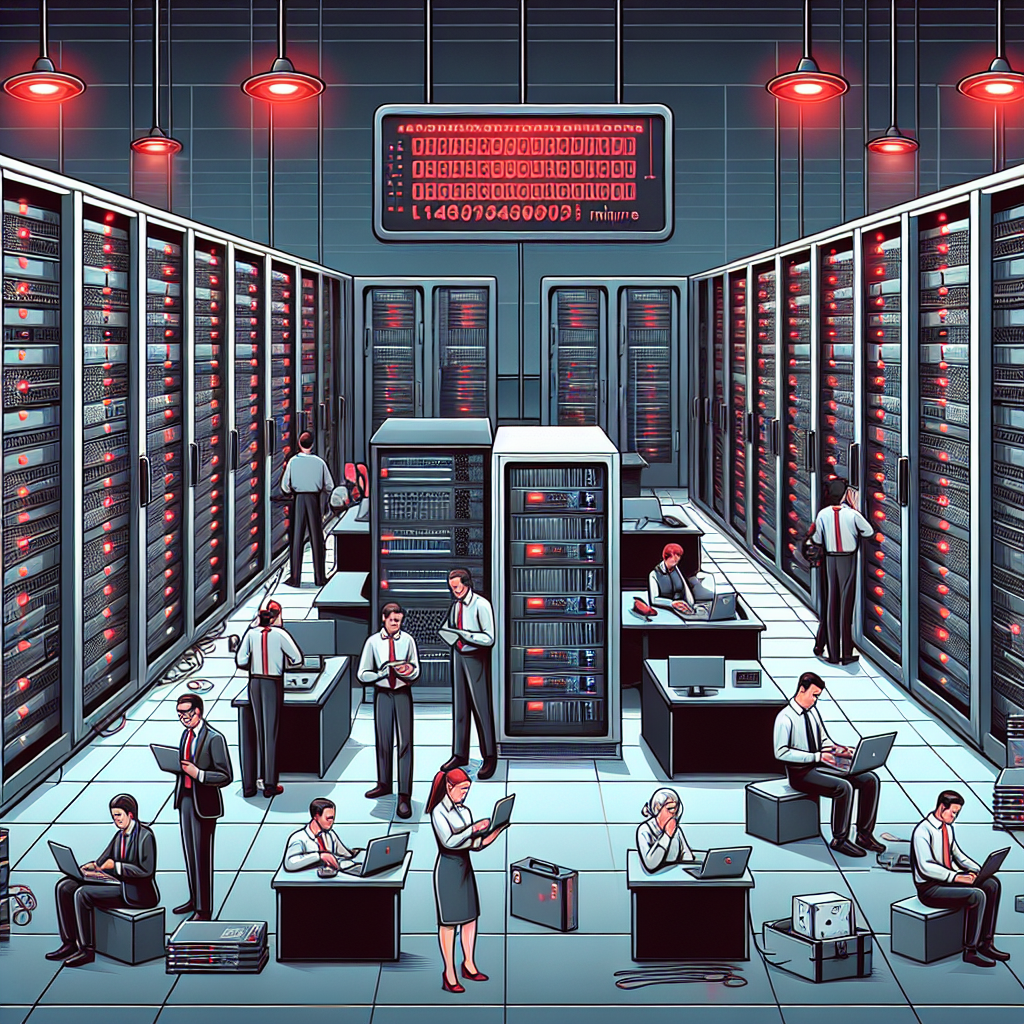

In addition to reputation damage, data center downtime can lead to lost productivity and missed opportunities. When employees cannot access critical data and applications, work comes to a standstill, which can mean missed deadlines and lost revenue. In some cases, a business may even lose potential deals because it could not respond to customer inquiries or complete transactions while its systems were down.

Data center downtime can also have legal and regulatory implications. Depending on the industry, businesses may be required to adhere to strict data protection regulations and guidelines. If an outage puts sensitive data at risk of being lost or compromised, the company may face legal repercussions and fines. Downtime can also prevent a company from meeting its service level agreements (SLAs) with customers, which can result in contract breaches and legal disputes.
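To make the SLA point concrete, the sketch below converts an availability target into a downtime budget and checks whether a given outage would exceed it. It is only an illustration: the function names are hypothetical, the 30-day measurement period is an assumption, and real SLAs define their own measurement windows, exclusions, and remedies.

```python
def allowed_downtime_minutes(availability_pct: float, period_days: float = 30.0) -> float:
    """Return the downtime budget, in minutes, implied by an availability target.

    availability_pct is the promised availability (for example 99.9).
    period_days is the measurement window; 30 days is an assumed default.
    """
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability_pct must be between 0 and 100")
    total_minutes = period_days * 24 * 60
    return total_minutes * (1.0 - availability_pct / 100.0)


def sla_breached(downtime_minutes: float, availability_pct: float,
                 period_days: float = 30.0) -> bool:
    """Return True if the observed downtime exceeds the budget for the period."""
    return downtime_minutes > allowed_downtime_minutes(availability_pct, period_days)


if __name__ == "__main__":
    # A 99.9% target over an assumed 30-day period leaves roughly 43.2 minutes.
    budget = allowed_downtime_minutes(99.9)
    print(f"Downtime budget: {budget:.1f} minutes")
    print("One-hour outage breaches the target:", sla_breached(60, 99.9))
```

Under these assumptions, a single one-hour outage is enough to miss a 99.9% monthly target, which is why even short incidents can carry contractual consequences.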

Another hidden cost of data center downtime is its effect on employee morale and job satisfaction. When employees are constantly dealing with technical issues and outages, frustration and burnout can follow. That can drive up employee turnover, which is costly for a company in recruitment and training expenses.

Overall, the hidden costs of data center downtime extend well past the direct financial hit. From reputation damage and missed opportunities to legal exposure and lower employee morale, downtime can have a lasting impact on a business’s operations and bottom line. Companies should invest in robust backup and disaster recovery solutions to reduce the risk of downtime and protect themselves from these hidden costs.