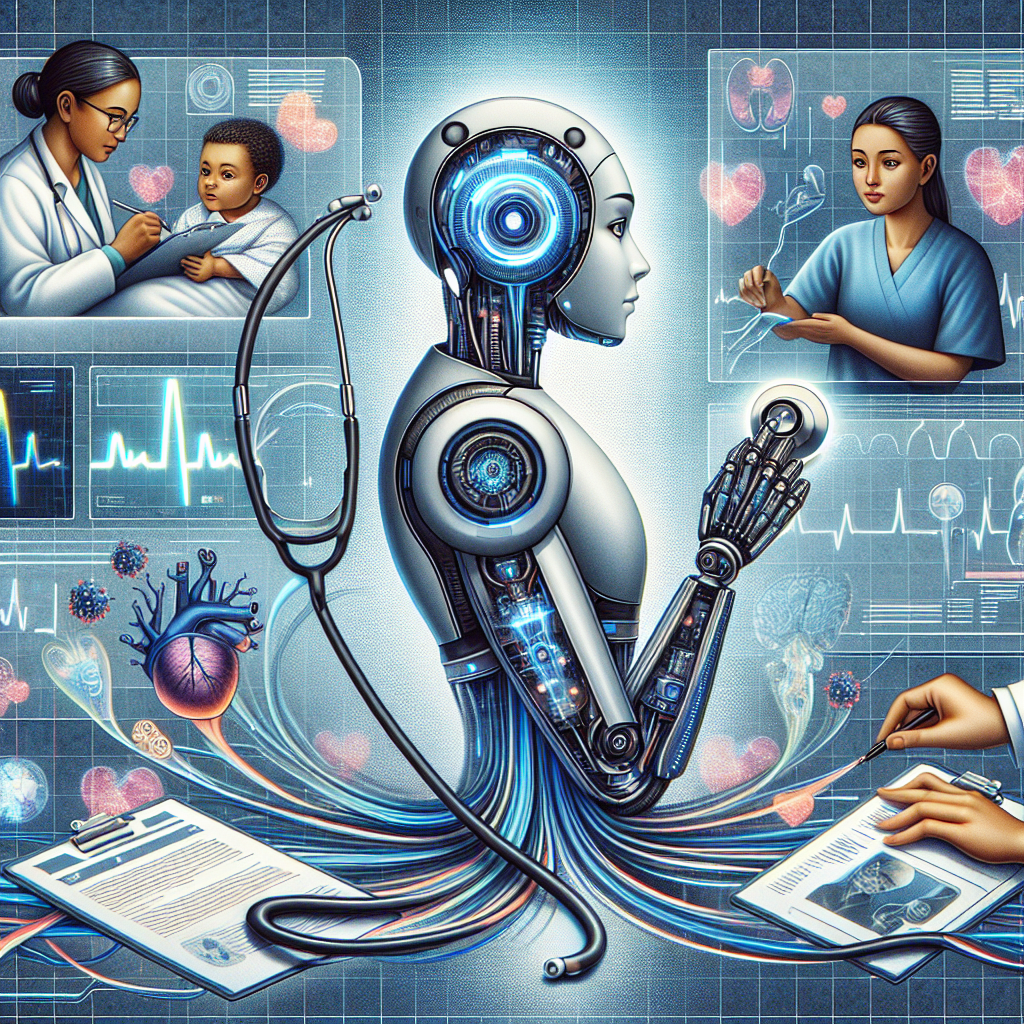

Artificial Intelligence (AI) is changing how healthcare is delivered. From diagnosing diseases to personalizing treatment plans, AI tools are giving clinicians new ways to improve patient care and outcomes.

One of AI's key strengths in healthcare is its ability to analyze large amounts of data quickly. This is particularly important in medical imaging, where algorithms can flag abnormalities for radiologists to review and support more accurate diagnoses. Identifying diseases such as cancer at an early stage can lead to earlier treatment and improved outcomes for patients.

AI also plays an important role in personalized medicine, where treatment plans are tailored to individual patients based on their genetic makeup and medical history. By analyzing genetic data alongside clinical information, AI can help doctors identify the treatment options most likely to work for each patient, which can reduce the risk of adverse reactions and improve outcomes.

Another area where AI is making a significant impact is predictive analytics. By analyzing a patient’s medical history and monitoring real-time data, AI can help identify patients at high risk of developing certain conditions, allowing healthcare providers to intervene early and prevent complications. This proactive approach can lead to better outcomes and lower healthcare costs in the long run.
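To make the idea of risk prediction more concrete, here is a minimal Python sketch of how a patient's history and current readings might be combined into a single risk score. Everything in it is an assumption made for illustration: the `PatientSnapshot` fields, the weights, the intercept, and the 0.5 threshold are hypothetical and not clinically derived. A real system would learn its parameters from validated data and be reviewed by clinicians.

```python
import math
from dataclasses import dataclass


@dataclass
class PatientSnapshot:
    """Hypothetical mix of medical history and a real-time reading."""
    age: int
    prior_admissions: int
    resting_heart_rate: float


# Illustrative weights only; NOT derived from clinical data.
WEIGHTS = {"age": 0.03, "prior_admissions": 0.6, "resting_heart_rate": 0.02}
INTERCEPT = -5.0


def risk_score(patient: PatientSnapshot) -> float:
    """Combine the features linearly, then squash to a 0..1 score (logistic function)."""
    z = (
        INTERCEPT
        + WEIGHTS["age"] * patient.age
        + WEIGHTS["prior_admissions"] * patient.prior_admissions
        + WEIGHTS["resting_heart_rate"] * patient.resting_heart_rate
    )
    return 1.0 / (1.0 + math.exp(-z))


def flag_high_risk(patients: dict[str, PatientSnapshot], threshold: float = 0.5) -> list[str]:
    """Return the IDs of patients whose score meets or exceeds the threshold."""
    return [pid for pid, p in patients.items() if risk_score(p) >= threshold]
```

Calling `flag_high_risk` on a dictionary of patient IDs and snapshots returns the IDs a care team might review first. The threshold controls the trade-off: a lower value flags more patients for early intervention, at the cost of more false alarms.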

AI is also being used to make healthcare delivery more efficient by automating routine tasks and streamlining administrative processes. Freeing healthcare providers to focus on patient care can help reduce wait times, improve communication between healthcare professionals, and enhance the overall patient experience.

AI's potential in healthcare comes with challenges that need to be addressed. These include concerns about data privacy and security, as well as the need to train healthcare providers to use AI tools effectively.

Overall, the role of AI in healthcare is evolving rapidly, and its potential to enhance patient care is significant. As AI is applied to analyzing data, personalizing treatment plans, and improving healthcare delivery, we can expect continued advances in patient care and outcomes in the years to come.