Enhancing NLP with Generative Adversarial Networks (GANs): A Review

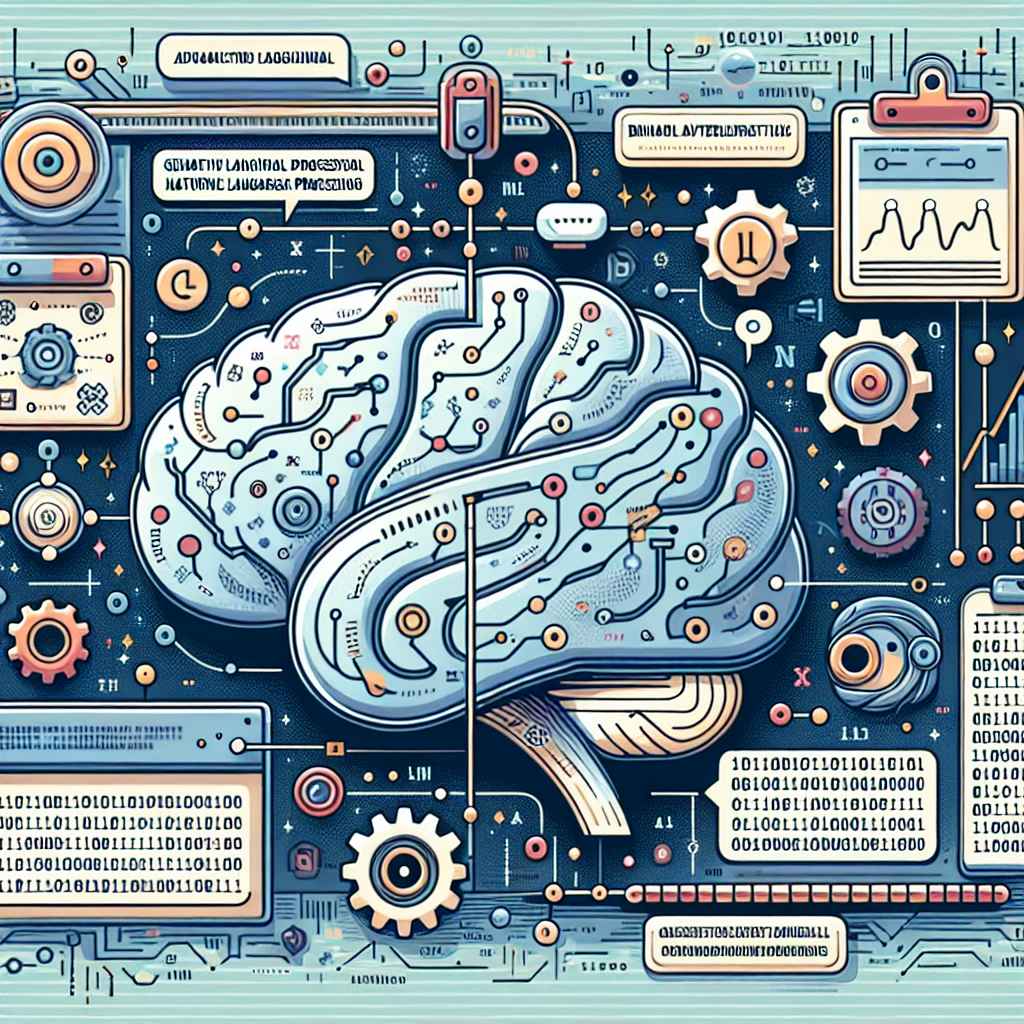

Natural Language Processing (NLP) has advanced rapidly in recent years, largely thanks to deep learning. One approach that has drawn attention for improving NLP systems is the Generative Adversarial Network (GAN). GANs have been applied in a variety of domains, most prominently computer vision and also speech processing, while their potential in NLP is still being explored.

GANs are a type of neural network architecture made up of two components: a generator and a discriminator. The generator creates new data samples, while the discriminator tries to distinguish real data from generated data. The two are trained against each other: the generator improves by learning to fool the discriminator, and the discriminator improves by learning to catch it. When training goes well, this adversarial process yields realistic and diverse samples.
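The opposing goals of the two components can be made concrete with a small sketch. It is illustrative only and assumes the common binary cross-entropy formulation with the non-saturating generator loss. The function names `discriminator_loss` and `generator_loss` are hypothetical, and the inputs stand in for the discriminator's output probabilities rather than coming from a real model.

```python
import math

EPS = 1e-12  # avoids log(0); an assumption made for numerical safety


def discriminator_loss(d_real, d_fake):
    """Cross-entropy loss for the discriminator.

    d_real: probabilities the discriminator assigns to real samples being real.
    d_fake: probabilities it assigns to generated samples being real.
    The loss is low when d_real is near 1 and d_fake is near 0.
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss.

    The generator is rewarded when the discriminator assigns a high
    "real" probability to generated samples.
    """
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # A discriminator that separates real from generated samples well.
    print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))
    # The generator's loss falls as it fools the discriminator more often.
    print(generator_loss([0.1, 0.2]), generator_loss([0.8, 0.9]))
```

In a full training loop, the two losses would be minimized in alternation, each with respect to its own network's parameters.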

In the context of NLP, GANs have been applied to text generation tasks such as language modeling, machine translation, and dialog systems. By training a GAN on a large text corpus, researchers have in some settings reported more coherent and fluent samples than those produced by traditional language models.

One of the key advantages of using GANs in NLP is their ability to capture the underlying structure of language and generate text that is more contextually relevant. This can be particularly useful in tasks such as paraphrasing and text summarization, where generating diverse and coherent outputs is crucial.

In addition to text generation, GANs have been used to support other NLP tasks, such as sentiment analysis and named entity recognition. In these settings, researchers have reported improvements in accuracy and robustness, leading to more reliable results.

Despite their potential, GANs come with challenges and limitations in NLP. Training can be expensive and time-consuming, requiring large amounts of data and compute. Text is also discrete, so the discriminator's gradient cannot flow directly through sampled tokens back to the generator, which makes training harder than it is for images. Additionally, GANs are prone to mode collapse, where the generator produces only a narrow set of outputs, so the generated text lacks variability.
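One simple way to spot a lack of variability in generated text is to measure how many distinct n-grams a batch of samples contains. The sketch below computes a distinct-n ratio. This metric is swapped in here purely for illustration and is not taken from any specific GAN paper discussed above. The function name `distinct_n`, the whitespace tokenization, and the example sentences are all assumptions.

```python
def distinct_n(samples, n=2):
    """Ratio of unique n-grams to total n-grams across a batch of texts.

    Values near 1.0 suggest varied output; values near 0.0 suggest the
    generator keeps repeating itself, a possible symptom of mode collapse.
    Tokenization is a plain whitespace split (an assumption).
    """
    total = 0
    unique = set()
    for text in samples:
        tokens = text.split()
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    return len(unique) / total if total else 0.0


if __name__ == "__main__":
    collapsed = ["the cat sat"] * 4
    diverse = ["the cat sat", "a dog ran", "birds fly south", "we read books"]
    print(distinct_n(collapsed))  # same sentence repeated, low ratio
    print(distinct_n(diverse))    # no repeated bigrams, high ratio
```

A low score on its own does not prove mode collapse, since some tasks have naturally repetitive outputs, but tracking it during training can flag a generator that is drifting toward a few stock sentences.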

Overall, GANs hold promise for improving text generation and other NLP tasks, provided their training difficulties can be managed. As researchers continue to explore GANs in NLP, further applications are likely to emerge.
