Tag: Gated

Comparing Simple and Gated Architectures in Recurrent Neural Networks: Which is Better?

Recurrent Neural Networks (RNNs) are a type of artificial neural network designed to model sequential data, making them well-suited for tasks such as speech recognition, language modeling, and time series prediction. One important architectural decision when designing an RNN is whether to use a simple architecture or a gated architecture.

Simple RNNs, also known as vanilla RNNs, are the most basic type of RNN. They consist of a single layer of neurons that process input sequences one element at a time, updating their hidden state at each time step. While simple RNNs are easy to implement and train, they suffer from the vanishing gradient problem, which can make it difficult for them to learn long-range dependencies in the data.
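To make the hidden-state update concrete, here is a minimal sketch of a vanilla RNN in plain Python. The weight names (`W_xh`, `W_hh`, `b_h`), the tanh activation, and the toy values are assumptions chosen for illustration; they are a common textbook formulation, not something the article specifies.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One vanilla RNN update: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

def rnn_forward(xs, h0, W_xh, W_hh, b_h):
    """Process a sequence one element at a time, reusing the same weights."""
    h = h0
    states = []
    for x in xs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Hypothetical toy weights: 2 input features, 2 hidden units.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b_h = [0.0, 0.0]
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
states = rnn_forward(sequence, [0.0, 0.0], W_xh, W_hh, b_h)
```

Each entry of `states` is the hidden state after one more input has been read, so the last entry summarizes the whole sequence.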

Gated architectures, on the other hand, address the vanishing gradient problem by introducing additional mechanisms to control the flow of information within the network. The most popular gated architecture is the Long Short-Term Memory (LSTM) network, which includes three types of gates – input, forget, and output – that regulate the flow of information through the network. LSTMs have been shown to be highly effective for tasks requiring modeling long-range dependencies, such as machine translation and speech recognition.

So, which architecture is better for RNNs – simple or gated? The answer depends on the specific task at hand. Simple RNNs are often sufficient for tasks with short-term dependencies, such as simple language modeling or short-horizon time series prediction. Because they have fewer parameters per unit, they are also faster to train and may require fewer computational resources than gated architectures.

However, for tasks with long-range dependencies or complex temporal patterns, gated architectures like LSTMs are generally preferred. LSTMs have been shown to outperform simple RNNs on a wide range of tasks, thanks to their ability to learn and remember long-term dependencies in the data.

In conclusion, the choice between simple and gated architectures in RNNs depends on the specific requirements of the task. While simple RNNs may be sufficient for tasks with short-term dependencies, gated architectures like LSTMs are better suited for tasks with long-range dependencies or complex temporal patterns. Experimenting with different architectures and evaluating their performance on the specific task at hand is the best way to determine which architecture is better for a given application.


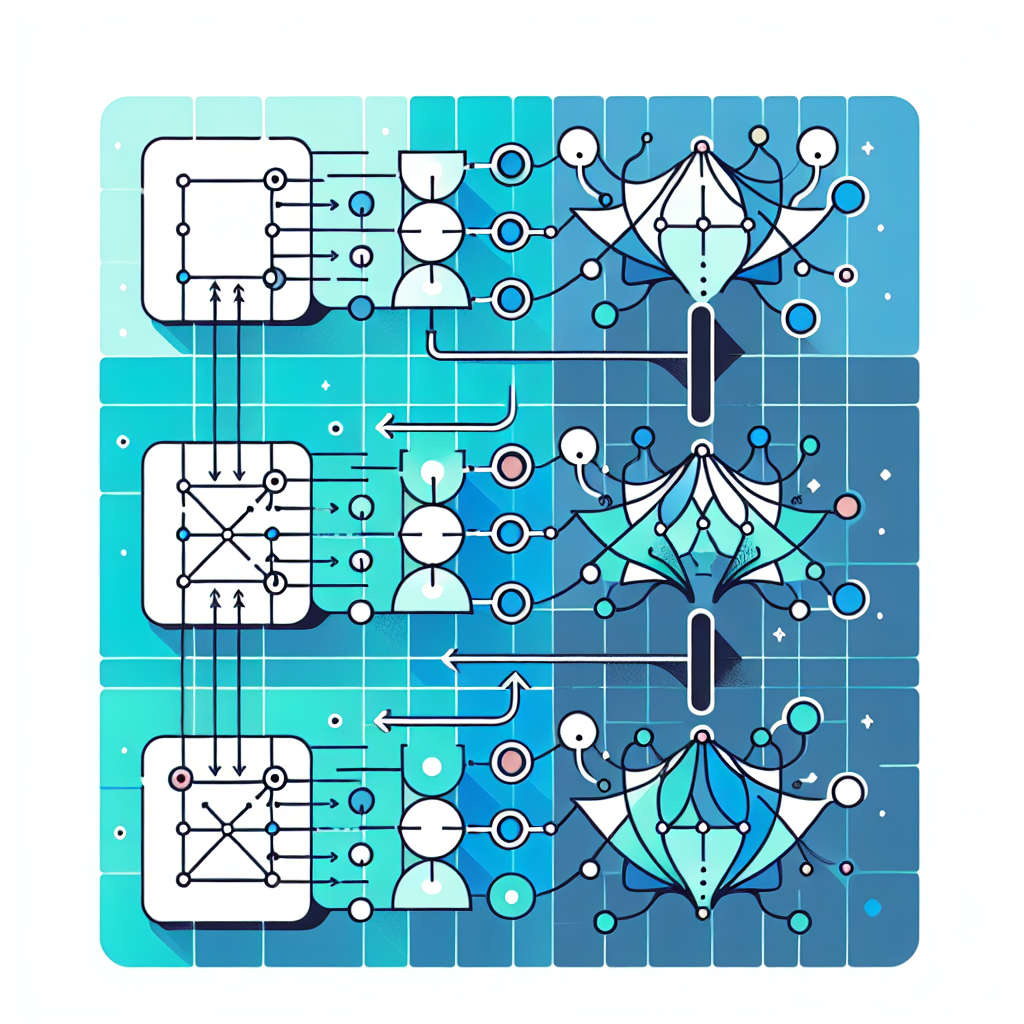

A Deep Dive into the Inner Workings of Recurrent Neural Networks: From Simple to Gated Architectures

Recurrent Neural Networks (RNNs) are a type of artificial neural network designed to handle sequential data. They are widely used in natural language processing, speech recognition, and time series analysis, among other applications. In this article, we will dive deep into the inner workings of RNNs, from simple architectures to more advanced gated architectures like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU).

At its core, an RNN processes sequences of data by maintaining a hidden state that is updated at each time step. This hidden state acts as a memory that captures information from previous time steps and influences the network’s predictions at the current time step. The basic architecture of an RNN consists of a single layer of recurrent units, each of which has a set of weights that are shared across all time steps.

One of the key challenges with simple RNN architectures is the vanishing gradient problem, where gradients become very small as they are backpropagated through time. This can lead to difficulties in learning long-range dependencies in the data. To address this issue, more advanced gated architectures like LSTM and GRU were introduced.
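The shrinking of gradients can be seen numerically in a one-unit example. The sketch below assumes a scalar RNN of the form h_t = tanh(w * h_{t-1} + x_t) with a hypothetical recurrent weight of 0.9; by the chain rule, the gradient of the final state with respect to the initial state is a product of one factor per time step, and every factor here has magnitude below 1.

```python
import math

def gradient_through_time(w, xs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # chain rule: local derivative at this step
    return grad

inputs = [0.5] * 50  # hypothetical constant input sequence
short = gradient_through_time(0.9, inputs[:5])   # 5 time steps
long = gradient_through_time(0.9, inputs)        # 50 time steps
```

Comparing `short` and `long` shows the gradient decaying roughly geometrically with sequence length, which is why early inputs have almost no influence on the weight updates.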

LSTM networks introduce additional gating mechanisms that control the flow of information through the network. Alongside the hidden state, an LSTM maintains a separate cell state that serves as its long-term memory. The input gate regulates how much new information is written to the cell state, the forget gate regulates how much of the existing cell state is kept, and the output gate regulates how much of the cell state is exposed as the hidden state. By selectively updating the cell state using these gates, LSTM networks are able to learn long-range dependencies more effectively than simple RNNs.
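For reference, one widely used formulation of the LSTM update is written out below. The symbol names are assumptions chosen for this sketch (the article does not fix a notation): $x_t$ is the input, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, and $\odot$ element-wise multiplication.

$$
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{(candidate values)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

The additive form of the $c_t$ update is the important part: when $f_t$ is close to 1, the previous cell state passes through almost unchanged.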

GRU networks, on the other hand, simplify the architecture of LSTM by combining the forget and input gates into a single update gate. This reduces the number of parameters in the network and makes training more efficient. GRU networks have been shown to perform comparably to LSTM networks in many tasks, while being simpler and faster to train.
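The parameter savings can be checked with simple arithmetic. The sketch below assumes each gate or candidate block consists of one input weight matrix, one recurrent weight matrix, and one bias vector; some libraries store two bias vectors per block, so their reported counts will be slightly higher. The layer sizes are hypothetical.

```python
def recurrent_params(input_size, hidden_size, num_blocks):
    """Each block has an input matrix, a recurrent matrix, and one bias vector."""
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return num_blocks * per_block

d, n = 128, 256  # hypothetical input and hidden sizes
counts = {
    "simple RNN": recurrent_params(d, n, 1),  # one transformation
    "GRU": recurrent_params(d, n, 3),         # update gate, reset gate, candidate
    "LSTM": recurrent_params(d, n, 4),        # three gates plus candidate
}
```

Under these assumptions a GRU layer has three quarters of the parameters of an LSTM layer of the same size.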

In conclusion, recurrent neural networks are a powerful tool for processing sequential data. From simple architectures to more advanced gated architectures like LSTM and GRU, RNNs have revolutionized the field of deep learning and are widely used in a variety of applications. By understanding the inner workings of these networks, we can better leverage their capabilities and build more effective models for a wide range of tasks.


Unveiling the Magic of Gated Recurrent Neural Networks: A Comprehensive Overview

Gated Recurrent Neural Networks (GRNNs) have become a popular choice for many machine learning tasks due to their ability to effectively handle sequential data. In this article, we will unveil the magic of GRNNs and provide a comprehensive overview of this powerful neural network architecture.

At their core, GRNNs are a type of recurrent neural network (RNN) that incorporates gating mechanisms to control the flow of information through the network. This gating mechanism allows GRNNs to better capture long-range dependencies in sequential data, making them well-suited for tasks such as natural language processing, speech recognition, and time series analysis.

One of the key components of a GRNN is the gate: a small layer with learnable parameters, typically followed by a sigmoid, whose output between 0 and 1 scales how much information passes through. The most common gated unit is the Long Short-Term Memory (LSTM) cell, which has three gates: the input gate, the forget gate, and the output gate. These gates work together to selectively update and output information from the network, allowing GRNNs to effectively model complex sequential patterns.

Another important component of GRNNs is the recurrent connection, which allows information to persist through time. This recurrent connection enables GRNNs to capture dependencies between elements in a sequence, making them well-suited for tasks that involve analyzing temporal data.

In addition, GRNNs can be run bidirectionally: one network reads the sequence forward and a second reads it backward, so each prediction can draw on both past and future context. Bidirectionality is a wrapper that can be applied to any recurrent cell rather than a property of gating itself, and it requires the full sequence to be available up front.
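Using both past and future context can be sketched as two independent recurrent passes whose states are paired position by position. The scalar `run_rnn` below is a hypothetical stand-in for any recurrent cell (simple, LSTM, or GRU), and the weights are toy values chosen for illustration.

```python
import math

def run_rnn(xs, w_x, w_h):
    """Scalar recurrent pass; stands in for any cell (simple, LSTM, or GRU)."""
    h, states = 0.0, []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

def bidirectional(xs, fwd_weights, bwd_weights):
    """Pair each position's forward state with its backward state."""
    forward = run_rnn(xs, *fwd_weights)
    # Read the sequence right to left, then flip the states back into order.
    backward = run_rnn(xs[::-1], *bwd_weights)[::-1]
    return list(zip(forward, backward))

xs = [1.0, 0.0, 0.0, -1.0]
pairs = bidirectional(xs, (1.0, 0.5), (1.0, 0.5))
```

At the first position the forward state has seen only the first input, while the backward state has already seen the entire rest of the sequence.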

Overall, GRNNs are a powerful tool for modeling sequential data and have been shown to outperform traditional RNNs in many tasks. By incorporating gating mechanisms and recurrent connections, GRNNs are able to effectively capture long-range dependencies in sequential data and make more accurate predictions.

In conclusion, GRNNs are a versatile and powerful neural network architecture that is well-suited for a wide range of machine learning tasks. By unveiling the magic of GRNNs and understanding their key components, researchers and practitioners can leverage this advanced neural network architecture to tackle complex sequential data analysis tasks with ease.


Harnessing the Power of Gated Architectures: A Closer Look at Recurrent Neural Networks

In recent years, recurrent neural networks (RNNs) have emerged as a powerful tool in the field of artificial intelligence, particularly in tasks involving sequential data such as natural language processing, speech recognition, and time series prediction. One key aspect of RNNs that has contributed to their success is the use of gated architectures, which allow the network to selectively update and forget information as it processes each input.

Gated architectures were first introduced in the form of long short-term memory (LSTM) units, which were designed to address the vanishing gradient problem that can occur in traditional RNNs. The vanishing gradient problem occurs when gradients become extremely small as they are backpropagated through many time steps, making it difficult for the network to learn long-range dependencies in sequential data. LSTM units use a combination of gating mechanisms to control the flow of information through the network, allowing it to retain relevant information over long time periods.

One of the key components of LSTM units is the forget gate, which determines how much of the previous cell state should be retained and how much should be forgotten. In the standard formulation, the forget gate takes the previous hidden state and the current input as input, and outputs a value between 0 and 1 for each element of the cell state: 1 keeps that element entirely and 0 erases it. This allows the network to hold on to information for as long as it remains useful, enabling it to learn long-range dependencies more effectively.

Another important component of LSTM units is the input gate, which determines how much new information should be added to the cell state. The input gate takes the current input and the previous hidden state as input, and outputs a value between 0 and 1 that scales a set of candidate values computed from the same inputs before they are added to the cell state. This allows the network to write to its memory selectively, enabling it to adapt to changing input patterns.
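A small numerical experiment illustrates why a forget gate near 1 preserves memory. The sketch below holds the gate values fixed for simplicity, which is an assumption made for illustration: in a real LSTM both gates are recomputed from the input and hidden state at every step.

```python
def cell_state_trace(c0, forget, write, candidates):
    """Apply c_t = forget * c_{t-1} + write * candidate_t with fixed gate values."""
    c = c0
    trace = []
    for g in candidates:
        c = forget * c + write * g
        trace.append(c)
    return trace

steps = 100
# Forget gate close to 1, input gate closed: the initial memory persists.
remember = cell_state_trace(1.0, forget=0.99, write=0.0, candidates=[0.0] * steps)
# A forget gate of 0.5 halves the memory at every step.
fade = cell_state_trace(1.0, forget=0.5, write=0.0, candidates=[0.0] * steps)
```

After 100 steps `remember[-1]` still holds roughly a third of the original value, while `fade[-1]` is effectively zero. The same multiplicative factor governs how gradients flow backward through the cell state.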

By using gated architectures like LSTM units, RNNs are able to effectively capture long-range dependencies in sequential data, making them well-suited for tasks such as language modeling, machine translation, and speech recognition. Gated recurrent units (GRUs), introduced in 2014, offer a simpler gated design with fewer parameters than LSTM units while still being able to capture long-range dependencies.

Overall, the use of gated architectures has been instrumental in harnessing the power of RNNs for a wide range of applications. By allowing the network to selectively update and forget information as it processes each input, gated architectures enable RNNs to effectively capture long-range dependencies in sequential data, making them a valuable tool for solving complex AI tasks.


A Comprehensive Review of Gated Architectures in Recurrent Neural Networks

Recurrent Neural Networks (RNNs) have been widely used in various applications such as natural language processing, speech recognition, and time series analysis due to their ability to capture temporal dependencies in data. However, traditional RNNs suffer from the vanishing gradient problem, which limits their ability to capture long-range dependencies in sequential data.

To address this issue, gated RNN architectures such as Long Short-Term Memory (LSTM) cells and Gated Recurrent Units (GRUs) were introduced. These architectures incorporate gating mechanisms that allow the network to selectively update and forget information based on the input data, enabling them to better capture long-range dependencies.

In this article, we will provide a comprehensive review of gated architectures in RNNs, focusing on the GRU and LSTM cells. We will discuss how these architectures work, their advantages and disadvantages, and their applications in various domains.

Gated Recurrent Units (GRUs) are a simplified version of LSTM cells that have been shown to perform comparably to LSTMs in many tasks. GRUs have two gating mechanisms – an update gate and a reset gate – that control the flow of information through the network. The update gate determines how much of the previous hidden state is retained versus replaced by a newly computed candidate state, while the reset gate determines how much of the previous hidden state is used when computing that candidate.
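One common way to write the GRU update is shown below. The notation is an assumption made for this sketch: $x_t$ is the input, $h_t$ the hidden state, $\sigma$ the logistic sigmoid, and $\odot$ element-wise multiplication. Conventions differ on whether $z_t$ or $1 - z_t$ multiplies the previous state; the two forms are equivalent up to relabeling the gate.

$$
\begin{aligned}
z_t &= \sigma(W_z x_t + U_z h_{t-1} + b_z) && \text{(update gate)} \\
r_t &= \sigma(W_r x_t + U_r h_{t-1} + b_r) && \text{(reset gate)} \\
\tilde{h}_t &= \tanh\bigl(W_h x_t + U_h (r_t \odot h_{t-1}) + b_h\bigr) && \text{(candidate state)} \\
h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

Unlike the LSTM, the GRU has no separate cell state and no output gate: the hidden state itself serves as the memory.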

One of the advantages of GRUs is that they are computationally more efficient than LSTMs, as they have fewer parameters and require fewer computations. This makes them ideal for applications where computational resources are limited.

On the other hand, Long Short-Term Memory (LSTM) cells are more complex than GRUs: they maintain a separate cell state alongside the hidden state and have three gating mechanisms – an input gate, a forget gate, and an output gate. The input gate controls how much new information is written to the cell state, the forget gate determines how much of the previous cell state should be retained, and the output gate determines how much of the current cell state is exposed as the hidden state.

LSTMs have been shown to excel in tasks that require capturing long-range dependencies, such as machine translation and speech recognition. However, they are also more computationally expensive than GRUs due to their higher number of parameters and computations.

In conclusion, gated architectures such as GRUs and LSTMs have revolutionized the field of recurrent neural networks by addressing the vanishing gradient problem and enabling the networks to capture long-range dependencies in sequential data. While both architectures have their own strengths and weaknesses, they have been successfully applied in a wide range of applications and continue to be a topic of active research in the deep learning community.


Enhancing Sequence Learning with Gated Recurrent Neural Networks

Sequence learning is a fundamental task in machine learning that involves predicting the next element in a sequence given the previous elements. One popular approach to sequence learning is using Recurrent Neural Networks (RNNs), which are designed to handle sequential data by maintaining a hidden state that captures information about the past elements in the sequence.

However, traditional RNNs can struggle to capture long-range dependencies in sequences, leading to difficulties in learning complex patterns. To address this issue, researchers have developed Gated Recurrent Neural Networks (GRNNs), which incorporate gating mechanisms to better regulate the flow of information through the network.

One of the key advantages of GRNNs is their ability to learn long-range dependencies more effectively than traditional RNNs. This is achieved through the use of gating units, such as the Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), which control the flow of information by selectively updating and forgetting information in the hidden state.

The gating mechanisms in GRNNs enable the network to better retain important information over longer periods of time, making them well-suited for tasks that require modeling complex temporal dependencies, such as speech recognition, language translation, and music generation.

In addition to improving the learning of long-range dependencies, GRNNs help address the vanishing gradient problem that can occur in traditional RNNs. Because the gates can keep a nearly direct path open for information to pass from one time step to the next, gradients shrink far more slowly, leading to more stable training. Exploding gradients are a separate issue that gating does not eliminate; in practice they are usually handled with gradient clipping.
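Exploding gradients are commonly handled with gradient clipping, which is applied during training regardless of whether the network is gated. The following is a minimal sketch of clipping by global norm, assuming the gradients have already been flattened into a plain list of floats; the function name and threshold are hypothetical.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient values so their L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)  # already small enough: leave unchanged
    scale = max_norm / norm
    return [g * scale for g in grads]

# Hypothetical oversized gradient with L2 norm 50, clipped to norm 5.
clipped = clip_by_global_norm([30.0, -40.0], max_norm=5.0)
```

Clipping preserves the direction of the gradient and only limits its length, so a single unstable step cannot throw the weights far off course.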

Furthermore, GRNNs have been shown to outperform traditional RNNs on a wide range of sequence learning tasks, including language modeling, machine translation, and speech recognition. Their ability to capture long-range dependencies and better regulate the flow of information through the network makes them a powerful tool for modeling sequential data.

In conclusion, Gated Recurrent Neural Networks offer a significant improvement over traditional RNNs for sequence learning tasks. Their gating mechanisms enable them to better capture long-range dependencies, mitigate gradient-related issues, and outperform traditional RNNs on a variety of sequence learning tasks. As the field of machine learning continues to advance, GRNNs are likely to play an increasingly important role in modeling sequential data and advancing the state-of-the-art in various applications.


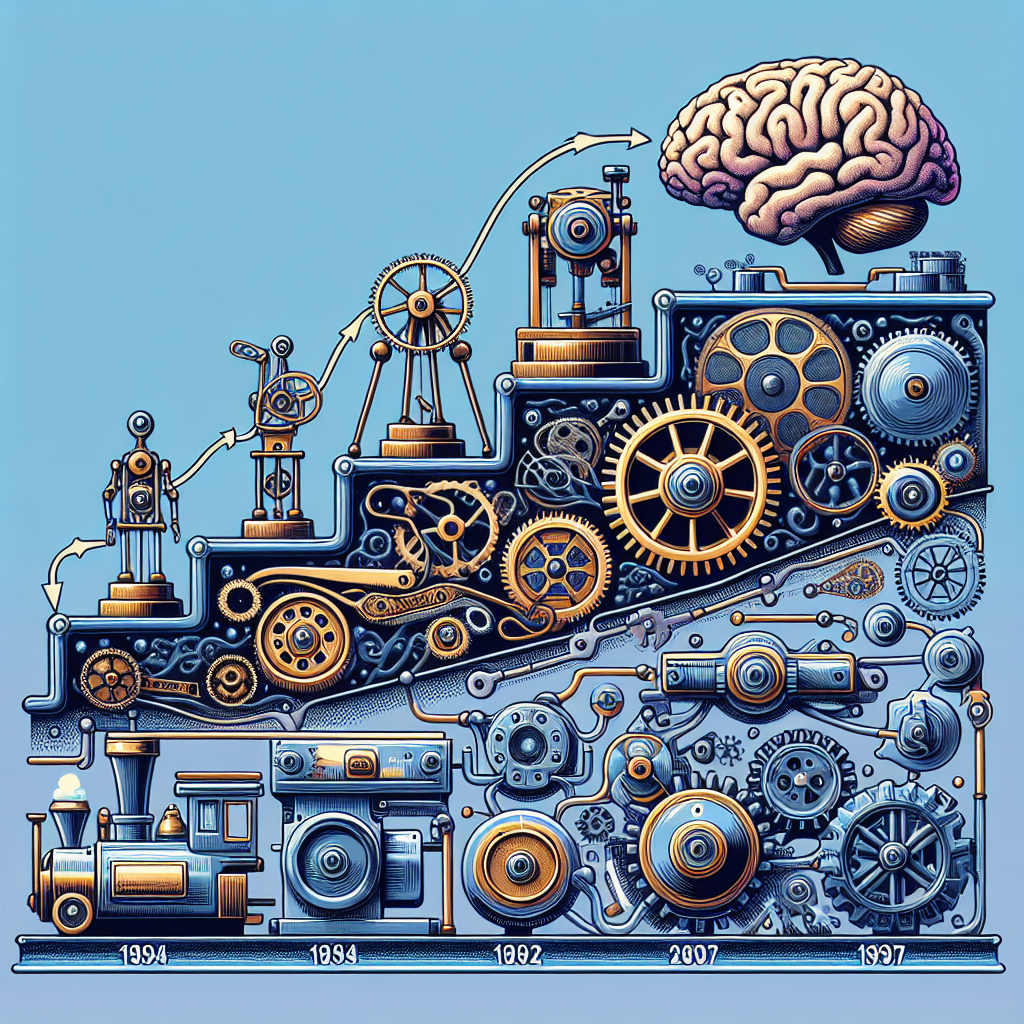

The Evolution of Recurrent Neural Networks: From Simple RNNs to Advanced Gated Models

Recurrent Neural Networks (RNNs) have become a powerful tool in the field of artificial intelligence and machine learning, particularly for tasks involving sequential data such as time series analysis, natural language processing, and speech recognition. The evolution of RNNs has seen the development of more advanced models, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, which address some of the limitations of traditional RNNs.

The concept of RNNs dates back to the 1980s, with the development of simple RNN models that were designed to process sequential data by maintaining a hidden state that captures information from previous time steps. However, these simple RNNs were found to suffer from the problem of vanishing gradients, where the gradients of the loss function with respect to the parameters of the network become very small, making it difficult to train the model effectively.

In order to address this issue, more advanced RNN models were developed, such as LSTM networks, which incorporate a mechanism to selectively retain or forget information in the hidden state. LSTM networks have a more complex architecture with additional gating mechanisms that allow them to learn long-range dependencies in sequential data, making them more effective for tasks that require capturing long-term dependencies.

Another advanced RNN model that has gained popularity in recent years is the GRU network, which is similar to LSTM networks but has a simpler architecture with fewer gating mechanisms. GRU networks have been found to be as effective as LSTM networks for many tasks while being computationally more efficient.

The development of these advanced gated models has significantly improved the performance of RNNs for a wide range of applications. For example, in natural language processing tasks such as language translation and sentiment analysis, LSTM and GRU networks have been shown to outperform simple RNN models by better capturing the context and semantics of the text.

Overall, the evolution of RNNs from simple models to advanced gated models has revolutionized the field of sequential data processing, enabling more accurate and efficient modeling of complex relationships in sequential data. As research in this field continues to advance, we can expect to see even more sophisticated RNN architectures that further enhance the capabilities of these powerful neural networks.


Long Short-Term Memory (LSTM) Networks: A Deep Dive into Gated Architectures

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) that are specifically designed to address the vanishing gradient problem in traditional RNNs. LSTM networks are equipped with specialized memory cells that allow them to learn long-term dependencies in sequential data, making them particularly well-suited for tasks such as natural language processing, speech recognition, and time series forecasting.

At the heart of LSTM networks are gated architectures, which enable the network to selectively update and retain information in its memory cells. The key components of an LSTM network include the input gate, forget gate, output gate, and memory cell, each of which plays a critical role in determining how information is processed and stored.

The input gate controls the flow of information into the memory cell, while the forget gate determines what information should be discarded from the cell. The output gate then regulates how much of the memory cell's content is exposed as the hidden state, which is both the output at the current time step and an input to the next. By selectively gating the flow of information, LSTM networks are able to maintain long-term dependencies in the data while largely mitigating the vanishing gradient problem that plagues traditional RNNs.
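The interaction of the three gates and the memory cell can be sketched in plain Python. This is a minimal illustration of the standard LSTM formulation, not a production implementation; the `params` layout and the helper names `affine` and `lstm_step` are assumptions made for this example.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def affine(W, U, b, x, h):
    """Compute W x + U h + b as a list, one entry per hidden unit."""
    return [
        sum(wi * xi for wi, xi in zip(W[k], x))
        + sum(ui * hi for ui, hi in zip(U[k], h))
        + b[k]
        for k in range(len(b))
    ]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step; params maps 'i', 'f', 'o', 'g' to (W, U, b) triples."""
    i = [sigmoid(v) for v in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(v) for v in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(v) for v in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(v) for v in affine(*params["g"], x, h_prev)]  # candidate
    # Memory cell: keep part of the old content, add part of the candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # Hidden state: the output gate decides how much of the cell is exposed.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

# Hypothetical all-zero weights: every gate outputs 0.5, the candidate is 0.
zero = ([[0.0]], [[0.0]], [0.0])
params = {"i": zero, "f": zero, "o": zero, "g": zero}
h, c = lstm_step([1.0], [0.0], [2.0], params)
```

With every gate at 0.5 and a zero candidate, the cell state is simply halved, which makes the role of the forget gate easy to see in isolation.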

One of the key advantages of LSTM networks is their ability to learn and remember patterns over long sequences of data. This makes them well-suited for tasks such as speech recognition, where the network needs to retain information about phonemes and words that occur at different points in a sentence. Additionally, LSTM networks are able to capture complex patterns in sequential data, such as the relationships between words in a sentence or the trends in a time series.

In recent years, LSTM networks have become increasingly popular in the field of deep learning, with applications ranging from language translation to stock market prediction. Researchers continue to explore new variations and improvements to the basic LSTM architecture, such as the addition of attention mechanisms or the use of stacked LSTM layers, in order to further enhance the performance of these powerful networks.

In conclusion, LSTM networks represent a powerful tool for modeling sequential data and capturing long-term dependencies. By leveraging gated architectures and specialized memory cells, LSTM networks are able to learn complex patterns in sequential data and maintain information over long sequences. As deep learning continues to advance, LSTM networks are likely to play an increasingly important role in a wide range of applications across various industries.


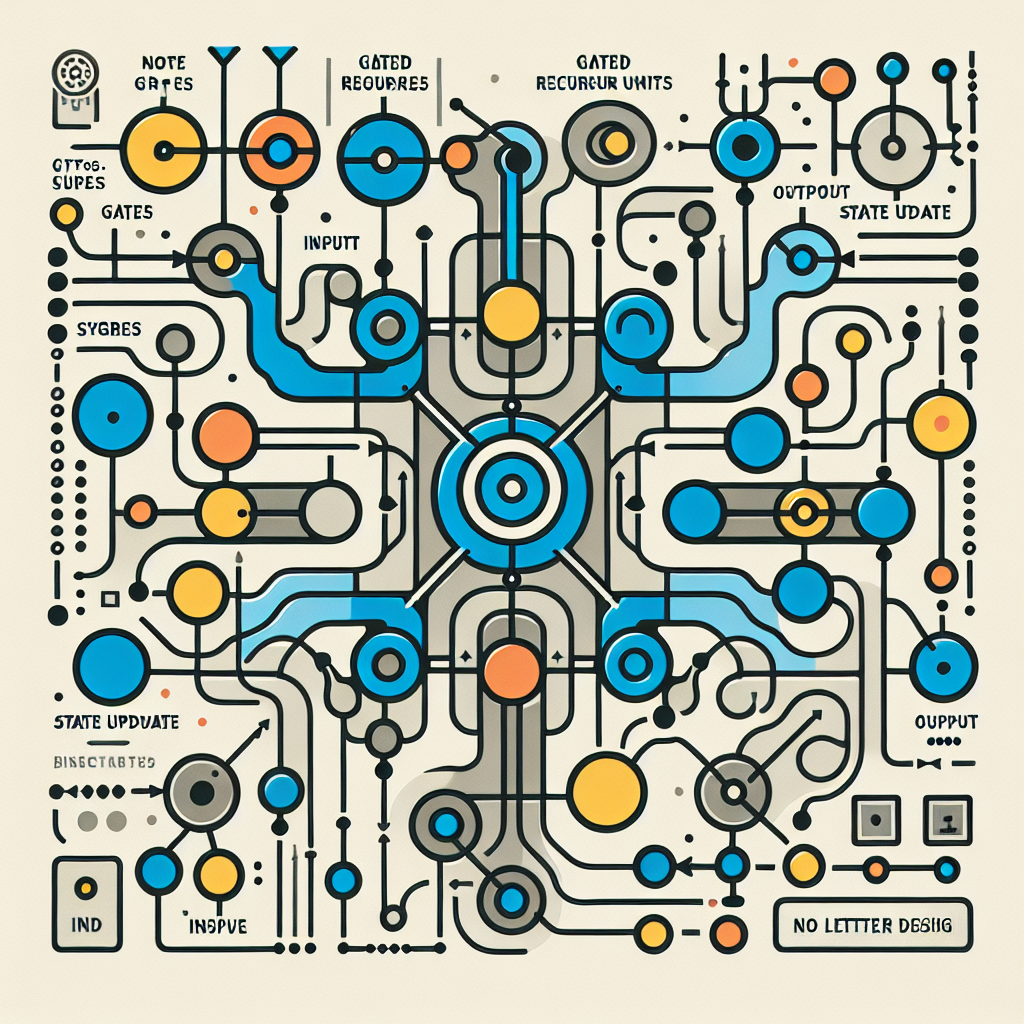

Unveiling the Inner Workings of Gated Recurrent Units (GRUs)

Gated Recurrent Units (GRUs) are a type of neural network architecture that has gained popularity in recent years for its effectiveness in handling sequential data. In this article, we will delve into the inner workings of GRUs and explore how they work.

GRUs are a type of recurrent neural network (RNN) that are designed to address the vanishing gradient problem that can occur in traditional RNNs. The vanishing gradient problem occurs when the gradients during training become very small, making it difficult for the network to learn long-term dependencies in the data. GRUs address this issue by using gating mechanisms to control the flow of information through the network.

The key components of a GRU are the reset gate and the update gate. The reset gate determines how much of the previous hidden state is used when computing a candidate for the new hidden state, while the update gate determines how the final hidden state is blended from the previous hidden state and that candidate. These gates allow the GRU to selectively update its hidden state based on the input data, enabling it to learn long-term dependencies more effectively.
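The effect of the two gates can be sketched with a single-unit GRU. The weight names in the dictionary `p` are hypothetical, and the sketch uses the convention in which an update gate near 0 keeps the previous state and an update gate near 1 replaces it with the candidate; some references use the opposite convention.

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def gru_step(x, h_prev, p):
    """One scalar GRU step; p holds hypothetical weights for z, r, and the candidate."""
    z = sigmoid(p["w_z"] * x + p["u_z"] * h_prev + p["b_z"])  # update gate
    r = sigmoid(p["w_r"] * x + p["u_r"] * h_prev + p["b_r"])  # reset gate
    # The reset gate scales the previous state inside the candidate computation.
    h_cand = math.tanh(p["w_h"] * x + p["u_h"] * (r * h_prev) + p["b_h"])
    # The update gate interpolates between the old state and the candidate.
    return (1.0 - z) * h_prev + z * h_cand

base = {"w_z": 0.0, "u_z": 0.0, "w_r": 0.0, "u_r": 0.0, "b_r": 0.0,
        "w_h": 1.0, "u_h": 1.0, "b_h": 0.0}
keep = gru_step(1.0, 0.8, {**base, "b_z": -20.0})      # update gate near 0
overwrite = gru_step(1.0, 0.8, {**base, "b_z": 20.0})  # update gate near 1
```

Here `keep` stays essentially at the previous state of 0.8, while `overwrite` jumps to the candidate value, showing how a single gate switches between remembering and rewriting.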

One of the advantages of GRUs over traditional RNNs is their ability to capture long-term dependencies in the data while avoiding the vanishing gradient problem. This makes them well-suited for tasks such as language modeling, speech recognition, and machine translation, where understanding sequential patterns is crucial.

In addition to their effectiveness in handling sequential data, GRUs are also computationally efficient compared to other types of RNNs such as Long Short-Term Memory (LSTM) networks. This makes them a popular choice for researchers and practitioners working with sequential data.

Overall, GRUs are a powerful tool for modeling sequential data and have become an essential component of many state-of-the-art neural network architectures. By understanding the inner workings of GRUs and how they address the challenges of traditional RNNs, researchers and practitioners can leverage their capabilities to build more effective and efficient models for a wide range of applications.


From Simple to Complex: A Guide to Gated Architectures in Recurrent Neural Networks

Recurrent Neural Networks (RNNs) have become a popular choice for tasks involving sequential data, such as natural language processing, time series analysis, and speech recognition. What sets RNNs apart from traditional feedforward neural networks is a hidden state that carries information from one time step to the next. Modeling long-range dependencies effectively, however, usually requires gated architectures, which allow the network to selectively update and forget information over time.

In this article, we will explore the evolution of gated architectures in RNNs, starting from the basic ungated RNN cell and moving to more sophisticated variants. We will discuss the motivations behind the development of these architectures and how they have revolutionized the field of deep learning.

The starting point is the basic RNN cell, which has no gating at all: it consists of a single recurrent layer that updates its hidden state at each time step. While this architecture is effective for modeling short-range dependencies, it struggles to capture long-term dependencies due to the vanishing gradient problem. This issue arises when gradients become extremely small as they are backpropagated through time, leading to difficulties in training the network effectively.

To address this problem, researchers introduced the Long Short-Term Memory (LSTM) architecture, which incorporates multiple gating mechanisms to control the flow of information within the network. The key components of an LSTM cell include the input gate, forget gate, output gate, and cell state, which work together to preserve relevant information over long sequences while discarding irrelevant information. This allows the network to learn long-range dependencies more effectively and avoid the vanishing gradient problem.

Building upon the success of LSTM, researchers developed the Gated Recurrent Unit (GRU) architecture, which simplifies the structure of LSTM by merging the forget and input gates into a single update gate and dropping the separate cell state. This reduces the computational complexity of the model while still maintaining strong performance on sequential tasks. Because it has fewer parameters, GRU is often more efficient than LSTM in terms of training time and memory consumption, making it a popular choice for many applications.

In recent years, sequence modeling has also moved beyond gated recurrence. The Transformer model drops recurrence altogether and uses self-attention mechanisms to capture long-range dependencies in a parallelizable manner. Transformers have achieved state-of-the-art performance on a wide range of natural language processing tasks, demonstrating the power of attention-based mechanisms in sequence modeling.
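The core of self-attention can be sketched in a few lines. The example below implements scaled dot-product attention in plain Python and, as a simplifying assumption, uses the raw inputs as queries, keys, and values; a real Transformer first applies learned projections and uses multiple attention heads.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(Q, K, V):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(K[0])
    outputs = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, V))
            for j in range(len(V[0]))
        ])
    return outputs

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 3-token sequence
out = self_attention(X, X, X)
```

Every position attends to every other position directly, and each query's output is computed independently of the others, which is what makes the computation parallelizable across the sequence.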

In conclusion, gated architectures have played a crucial role in advancing the capabilities of RNNs in modeling sequential data. From the basic RNN cell to LSTM and GRU, and on to attention-based successors such as the Transformer, these architectures have enabled deep learning models to learn complex patterns and dependencies in data more effectively. By understanding the evolution of gated architectures in RNNs, researchers and practitioners can leverage these advancements to build more powerful and efficient neural network models for a variety of applications.
