Tag: Gated

The Evolution of Gated Recurrent Units (GRUs) in Neural Networks

Gated Recurrent Units (GRUs) are a recurrent neural network architecture widely used for sequence modeling tasks such as natural language processing and speech recognition. They were introduced in 2014 by Kyunghyun Cho et al. as a simpler and cheaper alternative to Long Short-Term Memory (LSTM) units.

The main idea behind GRUs is to mitigate the vanishing gradient problem that affects traditional recurrent neural networks (RNNs). The problem arises when gradients shrink as they are backpropagated through many time steps, which makes it difficult for the network to learn long-term dependencies in sequential data. GRUs address this with gating mechanisms that control how information flows from one time step to the next.

The key components of a GRU are the reset gate and the update gate. The reset gate determines how much of the previous hidden state is used when computing the new candidate state, while the update gate determines how much of that candidate replaces the previous state. Because both gates are recomputed from the current input and the previous state at every time step, GRUs can capture long-term dependencies more effectively than traditional RNNs.
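The gate computations can be made concrete with a small sketch. The code below is a minimal pure-Python GRU step, not a reference implementation: the parameter names (`Wz`, `Uz`, `bz` and so on), the dictionary layout, and the uniform initialization are all assumptions made for illustration. It also assumes the convention in which the update gate weights the candidate state; some papers and libraries swap the roles of `z` and `1 - z`.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def affine(W, x, U, h, b):
    """Compute W x + U h + b element by element."""
    return [wx + uh + bias for wx, uh, bias in zip(matvec(W, x), matvec(U, h), b)]


def gru_step(x, h_prev, p):
    """One GRU time step; p maps hypothetical names such as "Wz" to weights."""
    # Update gate z and reset gate r, each in (0, 1).
    z = [sigmoid(a) for a in affine(p["Wz"], x, p["Uz"], h_prev, p["bz"])]
    r = [sigmoid(a) for a in affine(p["Wr"], x, p["Ur"], h_prev, p["br"])]
    # The reset gate scales the previous state before it enters the candidate.
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]
    h_cand = [math.tanh(a) for a in affine(p["Wh"], x, p["Uh"], reset_h, p["bh"])]
    # The update gate interpolates between the old state and the candidate.
    return [(1.0 - zi) * hp + zi * hc for zi, hp, hc in zip(z, h_prev, h_cand)]


def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (illustrative initialization only)."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    params = {}
    for gate in "zrh":
        params["W" + gate] = matrix(hidden_size, input_size)
        params["U" + gate] = matrix(hidden_size, hidden_size)
        params["b" + gate] = [0.0] * hidden_size
    return params


params = init_params(input_size=3, hidden_size=4)
h = [0.0] * 4
for x in [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]:
    h = gru_step(x, h, params)
print(h)
```

Setting the update-gate bias to a large negative value drives `z` toward 0, in which case the step returns the previous state almost unchanged. That is the mechanism that lets a GRU carry information across many time steps.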

One of the main advantages of GRUs over LSTMs is their simplicity. A GRU has three sets of weights (two gates plus the candidate state) where an LSTM has four, so for the same input and hidden sizes it has roughly 25% fewer parameters and does less computation per time step. This often makes GRUs faster to train, which has made them a popular choice for researchers and practitioners working on sequence modeling tasks.
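The parameter difference is easy to check with arithmetic. The helper below is a sketch that assumes one input weight matrix, one recurrent weight matrix, and one bias vector per block; the function name and the example sizes (128 inputs, 256 hidden units) are made up for illustration, and some frameworks store two bias vectors per block, which shifts the totals slightly.

```python
def recurrent_layer_params(input_size, hidden_size, num_blocks):
    """Count parameters for a recurrent layer built from `num_blocks` weight sets.

    Each block has input weights, recurrent weights, and one bias vector.
    """
    per_block = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return num_blocks * per_block


input_size, hidden_size = 128, 256
vanilla_params = recurrent_layer_params(input_size, hidden_size, num_blocks=1)
gru_params = recurrent_layer_params(input_size, hidden_size, num_blocks=3)
lstm_params = recurrent_layer_params(input_size, hidden_size, num_blocks=4)

print(vanilla_params, gru_params, lstm_params)
print(gru_params / lstm_params)  # ratio of GRU to LSTM parameter counts
```

Under these assumptions the GRU-to-LSTM ratio is 3/4 regardless of the layer sizes, while both gated layers are several times larger than a vanilla RNN layer.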

Since their introduction, GRUs have undergone several improvements and variations. For example, researchers have proposed different activation functions for the gates, as well as modifications to the gating mechanisms to improve performance on specific tasks. Some studies have also explored incorporating attention mechanisms into GRUs to further enhance their ability to capture long-term dependencies in the data.

Overall, the evolution of GRUs in neural networks has been driven by the need for more effective and efficient models for sequence modeling tasks. As researchers continue to explore new architectures and techniques for improving the performance of GRUs, we can expect to see even more advancements in this area in the future.

Mastering Gated Architectures: Tips and Tricks for Optimizing RNN Performance

Recurrent Neural Networks (RNNs) are a powerful tool for modeling sequential data in tasks such as natural language processing, speech recognition, and time series forecasting. However, training RNNs can be challenging due to issues like vanishing and exploding gradients, which can hinder learning and degrade performance. One popular response to these problems is to use gated architectures, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, which are designed to better capture long-range dependencies in the data.

Using gated architectures well is an important part of optimizing RNN performance, as they can significantly improve what the network is able to learn. In this article, we will discuss some tips and tricks for using gated architectures effectively.

1. Use LSTM or GRU networks: When working with RNNs, it is advisable to use LSTM or GRU networks instead of traditional RNNs, as they are better equipped to handle long-range dependencies in the data. LSTM networks have a more complex structure with additional gates that control the flow of information, while GRU networks are simpler and more computationally efficient. Both architectures have been shown to outperform traditional RNNs in many tasks.

2. Initialize the network properly: Proper initialization of the network weights is crucial for training RNNs effectively. Xavier and He initialization are commonly used techniques that keep activations and gradients at a reasonable scale at the start of training, which reduces the risk of vanishing and exploding gradients. Orthogonal initialization is another common choice for the recurrent weights. Initializing the weights too small or too large can lead to poor performance, so it is worth experimenting with different initialization strategies to find the best one for your specific task.

3. Regularize the network: Regularization techniques, such as dropout or weight decay, can help prevent overfitting and improve the generalization capabilities of the network. Dropout randomly sets a fraction of the neurons to zero during training, which acts as a form of regularization and helps prevent the network from memorizing the training data. Weight decay penalizes large weights in the network, encouraging the model to learn simpler representations of the data.

4. Optimize the learning rate: Choosing the right learning rate is crucial for training RNNs effectively. A learning rate that is too small slows down training, while one that is too large can lead to unstable training and poor performance. A common approach is to start from a moderate value and adjust it over time with a learning rate schedule (for example, warmup followed by decay), or to use an adaptive optimizer such as Adam.

5. Monitor and analyze the training process: Monitoring the training process is important for identifying potential issues and making adjustments to improve performance. Keep track of metrics like loss function value, accuracy, and validation performance to assess the progress of the training. Analyze the gradients, activations, and weights of the network to gain insights into how the model is learning and identify potential problems.
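Several of the tips above can be sketched in a few lines of plain Python. The helpers below are illustrative only: the function names and default values are assumptions, and a real project would normally rely on the equivalents built into its deep learning framework. They show Xavier (Glorot) uniform initialization, inverted dropout, an SGD update with weight decay, and a step-decay learning rate schedule.

```python
import math
import random


def xavier_uniform(fan_in, fan_out, rng):
    """Sample a fan_out x fan_in matrix from U(-limit, limit), limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]


def inverted_dropout(values, drop_prob, rng, training=True):
    """Zero each value with probability drop_prob and rescale the survivors.

    Rescaling by 1 / keep_prob keeps the expected activation unchanged,
    so nothing needs to be adjusted at inference time.
    """
    if not training or drop_prob == 0.0:
        return list(values)
    keep_prob = 1.0 - drop_prob
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]


def sgd_update(weight, grad, lr, weight_decay=0.0):
    """Plain SGD step with an L2 penalty folded into the gradient."""
    return weight - lr * (grad + weight_decay * weight)


def step_decay(base_lr, epoch, drop_every=10, factor=0.5):
    """Multiply the learning rate by `factor` every `drop_every` epochs."""
    return base_lr * factor ** (epoch // drop_every)


rng = random.Random(0)
W = xavier_uniform(fan_in=4, fan_out=3, rng=rng)
hidden = inverted_dropout([1.0] * 8, drop_prob=0.5, rng=rng)
lr_at_epoch_25 = step_decay(0.1, epoch=25)
print(W[0], hidden, lr_at_epoch_25)
```

Passing an explicit `random.Random` instance keeps the sketch reproducible, which also helps when comparing initialization or dropout settings across runs.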

By following these tips and tricks, you can effectively master gated architectures and optimize the performance of your RNNs. Experiment with different techniques, monitor the training process, and continuously improve your model to achieve the best results in your specific task. With the right approach, gated architectures can unlock the full potential of RNNs and help you tackle complex sequential data with ease.

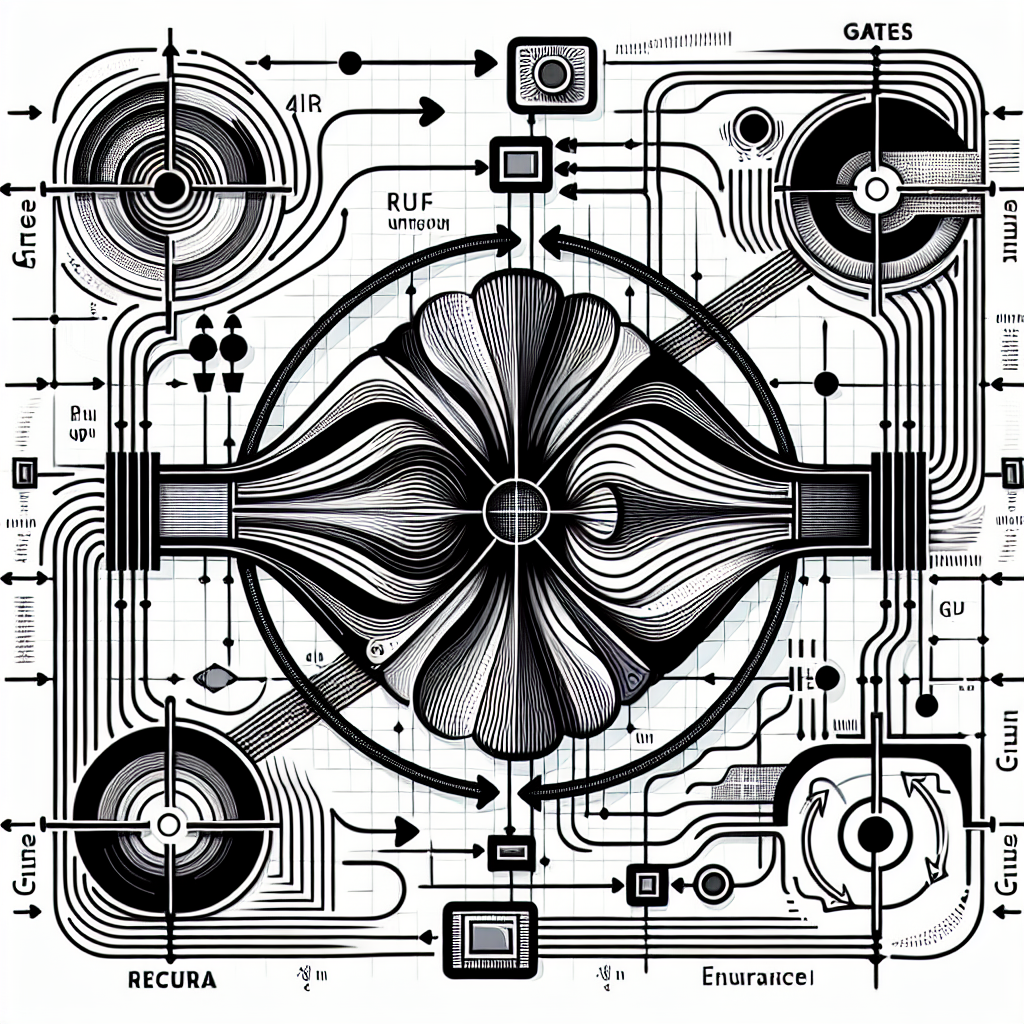

The Role of Gated Architectures in Overcoming the Vanishing Gradient Problem in RNNs

Recurrent Neural Networks (RNNs) are a powerful type of artificial neural network commonly used in natural language processing, speech recognition, and other sequential data analysis tasks. However, RNNs are prone to the vanishing gradient problem, which can hinder their ability to learn long-term dependencies in data.

The vanishing gradient problem occurs when the gradients of the loss function with respect to the network's parameters become very small as they are backpropagated through time. The weights themselves do not vanish, but the updates driven by distant time steps become negligible, so the network learns very little from information far in the past.
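A toy example makes the shrinkage visible. The sketch below assumes a one-unit RNN with the recurrence `h_t = tanh(w * h_{t-1} + x_t)`; the function name and the choice of weight and inputs are made up for illustration. By the chain rule, the sensitivity of the final state to the initial state is a product of per-step factors `w * (1 - h_t^2)`, and the code tracks the magnitude of that running product.

```python
import math


def gradient_magnitudes(w, inputs, h0=0.0):
    """Return |d h_t / d h_0| after each step of a one-unit tanh RNN."""
    h = h0
    grad = 1.0
    history = []
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2), since tanh'(a) = 1 - tanh(a)^2.
        grad *= w * (1.0 - h * h)
        history.append(abs(grad))
    return history


history = gradient_magnitudes(w=0.9, inputs=[0.5] * 50)
print(history[0], history[9], history[-1])
```

Because every factor has magnitude at most `|w|`, a recurrent weight below 1 forces the product to shrink at least geometrically with sequence length. With a weight well above 1 and a unit that stays away from saturation, the same product can grow instead, which is the exploding-gradient side of the problem.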

One approach to overcoming the vanishing gradient problem in RNNs is the use of gated architectures, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks. These architectures incorporate specialized gating mechanisms that control the flow of information through the network, allowing them to better capture long-term dependencies in data.

In LSTM networks, for example, each cell has three gates (the input gate, the forget gate, and the output gate) that regulate the flow of information. The input gate controls how much new information is written into the cell state, the forget gate determines how much of the existing cell state is discarded, and the output gate decides how much of the cell state is exposed as the hidden state that is passed to the next time step and to the next layer.
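The three gates can be written out as a short sketch. The code below is a minimal pure-Python LSTM step under assumed names: the parameter keys (`Wi`, `Uf`, `bo`, and so on), the `g` label for the candidate values, and the initialization are illustrative choices, not a particular library's layout.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    """Multiply a list-of-rows matrix by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step; returns the new hidden state and cell state."""

    def pre(name):
        # Pre-activation W x + U h_prev + b for the block called `name`.
        return [a + b + c for a, b, c in
                zip(matvec(p["W" + name], x), matvec(p["U" + name], h_prev), p["b" + name])]

    i = [sigmoid(a) for a in pre("i")]    # input gate
    f = [sigmoid(a) for a in pre("f")]    # forget gate
    o = [sigmoid(a) for a in pre("o")]    # output gate
    g = [math.tanh(a) for a in pre("g")]  # candidate values
    # Forget part of the old cell state, then write gated candidate values.
    c = [fi * cp + ii * gi for fi, cp, ii, gi in zip(f, c_prev, i, g)]
    # The output gate decides how much of the cell state becomes the hidden state.
    h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
    return h, c


def init_lstm_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (illustrative initialization only)."""
    rng = random.Random(seed)
    params = {}
    for name in "ifog":
        params["W" + name] = [[rng.uniform(-0.5, 0.5) for _ in range(input_size)]
                              for _ in range(hidden_size)]
        params["U" + name] = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
                              for _ in range(hidden_size)]
        params["b" + name] = [0.0] * hidden_size
    return params


params = init_lstm_params(input_size=2, hidden_size=3)
h, c = lstm_step([0.1, -0.2], [0.0] * 3, [0.5, -0.5, 0.25], params)
print(h, c)
```

When the forget gate is close to 1 and the input gate close to 0, the cell state passes through the step almost unchanged. This additive path is what gives gradients a route across many time steps without repeated squashing.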

Similarly, GRU networks have two gates – the reset gate and update gate – that control the flow of information in a similar manner. The reset gate determines how much of the past information to forget, while the update gate decides how much of the new information to incorporate.

By incorporating these gating mechanisms, LSTM and GRU networks are able to maintain a more stable gradient flow during training, allowing them to effectively learn long-term dependencies in data. This makes them well-suited for tasks that require capturing complex sequential patterns, such as machine translation and speech recognition.

In conclusion, gated architectures play a crucial role in overcoming the vanishing gradient problem in RNNs. By incorporating specialized gating mechanisms, such as those found in LSTM and GRU networks, RNNs are able to effectively capture long-term dependencies in data and achieve superior performance in various sequential data analysis tasks.

Harnessing the Power of Gated Architectures in RNNs: A Practical Guide

Recurrent Neural Networks (RNNs) have proven to be powerful tools for sequential data processing tasks such as language modelling, speech recognition, and time series prediction. However, training RNNs can be challenging due to the vanishing and exploding gradient problem, which can lead to difficulties in learning long-term dependencies in the data.

One way to address this issue is by using gated architectures, which are designed to control the flow of information within the network. Gated architectures, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), have been shown to be effective in capturing long-term dependencies in sequential data.

In this article, we will explore how gated architectures can be harnessed to improve the performance of RNNs in practice. We will discuss the key concepts behind gated architectures and provide a practical guide on how to implement them in your RNN models.

Gated architectures, such as LSTM and GRU, include mechanisms called gates that regulate the flow of information within the network. Each gate is a sigmoid-activated layer whose outputs lie between 0 and 1 and scale other values element by element, while tanh is used to produce the candidate values that the gates let through. By selectively updating and forgetting information at each time step, gated architectures are able to capture long-term dependencies in the data and mitigate the vanishing gradient problem. Exploding gradients are a separate issue and are usually handled with gradient clipping.

To implement gated architectures in your RNN models, you can use popular deep learning frameworks such as TensorFlow or PyTorch, which provide built-in implementations of LSTM and GRU cells. Simply replace the standard RNN cell in your model with an LSTM or GRU cell to take advantage of the benefits of gated architectures.

When training RNNs with gated architectures, it is important to pay attention to hyperparameters such as the number of hidden units in the LSTM or GRU cell, the learning rate, and the batch size. Experiment with different hyperparameter settings to find the optimal configuration for your specific task.
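One more setting that is often tuned alongside these is the gradient clipping threshold, since clipping is a common way to keep exploding gradients in check. The sketch below rescales a set of gradients so that their combined L2 norm does not exceed a maximum value; the function name and the list-of-lists gradient layout are assumptions made for illustration, and deep learning frameworks ship their own clipping utilities.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Rescale gradients so their combined L2 norm is at most max_norm.

    `grads` is a list of flat gradient vectors, one per parameter tensor.
    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for vec in grads for g in vec))
    if total_norm <= max_norm:
        return [list(vec) for vec in grads], total_norm
    scale = max_norm / total_norm
    return [[g * scale for g in vec] for vec in grads], total_norm


clipped, norm_before = clip_by_global_norm([[3.0], [4.0]], max_norm=1.0)
print(norm_before, clipped)
```

Returning the norm before clipping is convenient for monitoring: a norm that is clipped on nearly every step suggests the learning rate or the threshold needs another look.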

In conclusion, gated architectures offer an effective way to mitigate the vanishing gradient problem in RNNs, allowing for better capture of long-term dependencies in sequential data. By using gated architectures in your RNN models, you can improve their performance on a wide range of tasks. Experiment with different hyperparameter settings and implementation strategies to find the best approach for your specific application.

From Vanilla RNNs to Gated Architectures: Evolution of Recurrent Neural Networks

Recurrent Neural Networks (RNNs) have been a fundamental tool in the field of artificial intelligence and machine learning for quite some time. Initially, vanilla RNNs were the go-to model for tasks that required sequential data processing, such as natural language processing, speech recognition, and time series forecasting. However, as researchers delved deeper into the capabilities and limitations of vanilla RNNs, they began to explore more sophisticated architectures that could better handle long-range dependencies and mitigate the vanishing gradient problem.

One of the major drawbacks of vanilla RNNs is the vanishing gradient problem, where gradients become extremely small as they are propagated back through time. This results in the model being unable to effectively learn long-term dependencies in the data. To address this issue, researchers introduced Gated Recurrent Units (GRUs) and Long Short-Term Memory (LSTM) units, which are two types of gated architectures that have become immensely popular in the deep learning community.

GRUs and LSTMs are equipped with gating mechanisms that allow them to selectively retain or discard information at each time step, enabling them to better capture long-term dependencies in the data. The key difference lies in their internal structure: an LSTM keeps a separate cell state alongside its hidden state and uses three gates, while a GRU keeps a single hidden state and uses two. Both architectures have proven to be highly effective in a wide range of tasks, including language modeling, machine translation, and image captioning.

Another important development in the evolution of RNNs is the introduction of bidirectional RNNs, which process the input sequence in both forward and backward directions. This allows the model to capture information from both past and future contexts, making it particularly useful in tasks that require a holistic understanding of the input sequence.
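The two-direction idea can be sketched with a toy one-unit RNN. In the code below, the function names and the weight values are made up for illustration. One pass reads the sequence left to right, a second pass with its own weights reads it right to left, and the two states for each position are paired up.

```python
import math


def rnn_pass(inputs, w_in, w_rec):
    """Run a one-unit tanh RNN over the inputs and return the state at each step."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_in * x + w_rec * h)
        states.append(h)
    return states


def bidirectional_states(inputs, forward_weights=(0.8, 0.5), backward_weights=(0.6, 0.4)):
    """Pair each position's forward state with its backward state."""
    forward = rnn_pass(inputs, *forward_weights)
    # Process the reversed sequence, then flip the states back into input order.
    backward = rnn_pass(inputs[::-1], *backward_weights)[::-1]
    return list(zip(forward, backward))


states = bidirectional_states([1.0, 0.0, -1.0])
for position, (fwd, bwd) in enumerate(states):
    print(position, fwd, bwd)
```

At each position the forward state summarizes everything up to and including that input, and the backward state summarizes everything from that input to the end. This is why the approach needs the whole sequence up front and does not suit strictly online prediction.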

In recent years, researchers have also explored attention mechanisms in RNNs, which enable the model to focus on specific parts of the input sequence that are most relevant to the task at hand. This has led to significant improvements in the performance of RNNs in tasks such as machine translation, where the model needs to attend to different parts of the input sequence at different time steps.
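A minimal sketch of the attention idea follows. It assumes dot-product scoring between a query vector (for example, the current decoder state) and a list of encoder states; this is one scoring option among several, and the function names are hypothetical. Each encoder state gets a score, the scores are turned into weights with a softmax, and the context vector is the weighted average of the states.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query, states):
    """Return attention weights over `states` and the resulting context vector."""
    scores = [sum(q * s for q, s in zip(query, state)) for state in states]
    weights = softmax(scores)
    dim = len(states[0])
    context = [sum(w * state[d] for w, state in zip(weights, states)) for d in range(dim)]
    return weights, context


encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, context = dot_product_attention([2.0, 0.0], encoder_states)
print(weights, context)
```

In this example the first and third states align equally well with the query and receive equal weight, while the second state, which is orthogonal to the query, receives less.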

Overall, the evolution of RNNs from vanilla models to gated architectures and attention mechanisms has significantly advanced the capabilities of these models in handling sequential data. As researchers continue to explore new architectures and optimization techniques, we can expect further advancements in the field of recurrent neural networks, leading to even more powerful and versatile models for a wide range of applications.

A Comprehensive Overview of Different Gated Architectures in RNNs

Recurrent Neural Networks (RNNs) have gained immense popularity in recent years for their ability to handle sequential data. However, traditional RNNs suffer from the vanishing gradient problem, which limits their ability to capture long-term dependencies in sequences. To address this issue, various gated architectures have been proposed, each offering unique advantages and applications. In this article, we will provide a comprehensive overview of different gated architectures in RNNs.

1. Long Short-Term Memory (LSTM):

LSTM is one of the most widely used gated architectures in RNNs. It consists of three gates – input gate, forget gate, and output gate – that control the flow of information through the network. The input gate regulates how much new information should be stored in the cell state, the forget gate determines what information should be discarded from the cell state, and the output gate decides what information should be passed to the next time step. LSTM is particularly effective in capturing long-term dependencies in sequences and has been successfully applied in various tasks such as language modeling, speech recognition, and machine translation.

2. Gated Recurrent Unit (GRU):

GRU is a simplified version of LSTM that combines the forget and input gates into a single update gate. It also has a reset gate that controls how much of the previous hidden state feeds into the new candidate state. GRU is computationally cheaper than LSTM and has fewer parameters, which can help when training data is limited. Despite its simplicity, GRU has been shown to perform on par with LSTM in many tasks and is a popular choice for researchers and practitioners.

3. Clockwork RNN:

Clockwork RNN is not gated in the LSTM sense. Instead, it divides the hidden units into separate modules, each updated at its own clock rate. Slow modules track long time scales while fast modules follow short-term changes, so the network can capture both short-term and long-term dependencies with fewer parameters than a fully connected RNN of the same size. The original paper reported that it outperformed both standard RNNs and LSTMs on audio signal generation and spoken word classification.

4. Independent Gating Mechanism (IGM):

IGM is a novel gated architecture that introduces independent gating mechanisms for each input dimension in the sequence. This allows the network to selectively attend to different dimensions of the input at each time step, enabling more fine-grained control over the information flow. IGM has shown promising results in tasks involving high-dimensional inputs, such as image captioning and video generation.
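The clock-rate scheduling behind the Clockwork RNN in item 3 can be illustrated with a few lines of code. The sketch assumes exponentially spaced periods (1, 2, 4, 8) and the rule that a module is updated at time step `t` only when `t` is divisible by its period; the function name is hypothetical, and the weight structure of the full model is left out.

```python
def active_modules(t, periods):
    """Return the indices of the modules that update at time step t."""
    return [index for index, period in enumerate(periods) if t % period == 0]


periods = [1, 2, 4, 8]
for t in range(8):
    print(t, active_modules(t, periods))
```

The module with period 1 updates on every step, while the module with period 8 updates only once in this window and otherwise carries its state forward unchanged. That is how the slow modules retain information over long spans.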

In conclusion, gated architectures play a crucial role in enhancing the capabilities of RNNs for handling sequential data. Each architecture offers unique advantages and trade-offs, depending on the task at hand. Researchers continue to explore new variations and combinations of gated mechanisms to further improve the performance of RNNs in various applications. Understanding the strengths and weaknesses of different gated architectures is essential for selecting the most suitable model for a given task and achieving optimal results.

Gated Recurrent Units: Enhancing RNN Performance with GRU Architectures

Recurrent Neural Networks (RNNs) have been widely used in natural language processing, speech recognition, and time series analysis due to their ability to handle sequential data. However, traditional RNNs struggle to capture long-range dependencies because gradients can vanish or explode during training.

To address these limitations, researchers developed a type of RNN architecture called the Gated Recurrent Unit (GRU). GRUs are a variation of traditional RNNs that incorporate gating mechanisms to control the flow of information within the network. This allows GRUs to better capture long-range dependencies in sequential data and mitigates the vanishing gradient problem.

One of the key features of GRUs is their ability to selectively update and reset hidden states based on the input data. This gating mechanism allows GRUs to adaptively learn how much information to retain from previous time steps and how much new information to incorporate from the current time step. As a result, GRUs are better equipped to capture complex patterns in sequential data and make more accurate predictions.
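A small numeric sketch shows what retaining information means in practice. It assumes a scalar state, a constant update gate, a candidate state fixed at zero, and the convention `h_t = (1 - z) * h_{t-1} + z * candidate`; real GRUs compute the gate from the data at every step, so this is only an illustration, and the function name is made up.

```python
def retained_fraction(update_gate, steps):
    """Fraction of an initial state of 1.0 that survives `steps` GRU-style updates."""
    h = 1.0
    candidate = 0.0  # assume the new candidate carries none of the old signal
    for _ in range(steps):
        h = (1.0 - update_gate) * h + update_gate * candidate
    return h


slow = retained_fraction(update_gate=0.01, steps=100)
fast = retained_fraction(update_gate=0.5, steps=100)
print(slow, fast)
```

With a small update gate, a sizable share of the original state is still present after 100 steps, whereas a gate of 0.5 erases it almost completely. Learning where to keep the gate small is how a GRU holds on to information from distant time steps.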

In addition to their improved ability to capture long-range dependencies, GRUs are cheaper to compute than LSTMs. They are not cheaper than traditional RNNs: the two gates add weights and computation on top of the basic recurrent update. The extra cost buys the ability to learn which information is important to retain and which can be discarded.

Overall, GRUs have been shown to enhance the performance of RNNs in a variety of tasks, including language modeling, machine translation, and speech recognition. By incorporating gating mechanisms to control the flow of information within the network, GRUs are able to better capture long-range dependencies in sequential data and make more accurate predictions.

In conclusion, Gated Recurrent Units (GRUs) are a powerful extension of traditional RNN architectures that can significantly improve the performance of RNNs in handling sequential data. With their ability to selectively update and reset hidden states based on input data, GRUs are better equipped to capture complex patterns and make more accurate predictions. As the field of deep learning continues to advance, GRUs are likely to play an increasingly important role in a wide range of applications.

The Future of Recurrent Neural Networks: Advancements in Gated Architectures

Recurrent Neural Networks (RNNs) have proven to be a powerful tool in the field of deep learning, particularly in tasks that involve sequential data such as speech recognition, language modeling, and machine translation. However, traditional RNNs have limitations in capturing long-term dependencies in sequences, due to the vanishing or exploding gradient problem.

To address these issues, researchers have developed various gated architectures that improve the capabilities of RNNs in capturing long-range dependencies. One of the most popular gated architectures is the Long Short-Term Memory (LSTM) network, which includes gating mechanisms to control the flow of information through the network, allowing it to selectively remember or forget information at each time step.

Another popular gated architecture is the Gated Recurrent Unit (GRU), which simplifies the LSTM architecture by combining the forget and input gates into a single update gate, making it computationally more efficient while still maintaining similar performance.
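The relationship between the two update rules can be written side by side. The notation below is an assumption made for illustration: $f_t$, $i_t$, and $z_t$ denote the forget, input, and update gates, a tilde marks a candidate value, and $\odot$ is element-wise multiplication. Some papers and libraries swap the roles of $z_t$ and $1 - z_t$.

```latex
% LSTM cell-state update: two independent gates
c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t

% GRU hidden-state update: one gate and its complement
h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
```

Read this way, the GRU behaves like an LSTM whose forget and input gates are tied together ($f_t = 1 - z_t$, $i_t = z_t$), which is where the saving in parameters comes from.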

More recently, research on sequence models has moved beyond gated recurrence. The Transformer model, for example, drops recurrence altogether and relies on self-attention to capture long-range dependencies in sequences. It has achieved state-of-the-art performance in various natural language processing tasks.

Another notable advancement is the Neural Turing Machine (NTM) and its variants, which pair a neural network controller (often a recurrent network) with an external memory that it can read from and write to. This allows the model to learn simple algorithmic tasks that require memory access, such as copying, sorting, and associative recall.

In addition, researchers have also explored the use of attention mechanisms in RNNs, which allow the network to focus on different parts of the input sequence at each time step, improving the model’s ability to learn complex patterns in data.

Overall, the future of recurrent neural networks looks promising, with advancements in gated architectures and attention mechanisms pushing the boundaries of what RNNs can achieve. These developments are expected to lead to further improvements in performance across a wide range of tasks, making RNNs even more versatile and powerful tools in the field of deep learning.

Breaking Down the Differences Between Simple and Gated Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are a type of artificial neural network that is designed to handle sequential data. They are widely used in natural language processing, speech recognition, and time series analysis, among other applications. Within the realm of RNNs, there are two main types: simple recurrent neural networks and gated recurrent neural networks. In this article, we will break down the differences between these two types of RNNs.

Simple Recurrent Neural Networks (SRNNs) are the most basic form of RNNs. They work by passing information from one time step to the next, creating a feedback loop that allows them to capture dependencies in sequential data. However, SRNNs have a major limitation known as the vanishing gradient problem. This occurs when the gradients become extremely small as they are backpropagated through time, making it difficult for the network to learn long-term dependencies.

Gated Recurrent Neural Networks (GRNNs) were developed to address the vanishing gradient problem present in SRNNs. The most popular type of GRNN is the Long Short-Term Memory (LSTM) network, which includes gated units called “memory cells” that control the flow of information within the network. These memory cells have three gates – input, forget, and output – that regulate the flow of information by deciding what to store, discard, or output at each time step.

One of the key differences between SRNNs and GRNNs lies in their ability to capture long-term dependencies. While SRNNs struggle with this due to the vanishing gradient problem, GRNNs, particularly LSTMs, excel at learning and remembering long sequences of data. This makes them well-suited for tasks that involve processing and generating sequential data, such as language modeling and speech recognition.

Another difference between SRNNs and GRNNs is their computational complexity. GRNNs, especially LSTMs, are more complex and have more parameters than SRNNs, which can make them slower to train and more resource-intensive. However, this increased complexity allows GRNNs to learn more intricate patterns in the data and achieve better performance on tasks that require capturing long-term dependencies.

In conclusion, while simple recurrent neural networks are a good starting point for working with sequential data, gated recurrent neural networks, particularly LSTMs, offer a more powerful and sophisticated approach for handling long sequences and capturing complex dependencies. By understanding the differences between these two types of RNNs, researchers and practitioners can choose the most appropriate model for their specific tasks and achieve better results in their applications.

Harnessing the Power of Gated Recurrent Units in Neural Networks

Neural networks have revolutionized the field of artificial intelligence, enabling machines to perform tasks that were once thought to be exclusive to human intelligence. One of the key components of neural networks is the recurrent neural network (RNN), which is designed to handle sequential data by maintaining a memory of past inputs.

One popular variant of RNNs is the gated recurrent unit (GRU), which was introduced by Kyunghyun Cho and his colleagues in 2014. GRUs are designed to address some of the limitations of traditional RNNs, such as the vanishing gradient problem and the difficulty of learning long-range dependencies.

The key innovation of GRUs is the inclusion of gating mechanisms that control the flow of information within the network. These gates, which consist of a reset gate and an update gate, enable the GRU to selectively remember or forget information from previous time steps, allowing it to capture long-range dependencies more effectively.

By harnessing the power of gated recurrent units, neural networks can achieve better performance on tasks such as natural language processing, speech recognition, and sequence prediction. GRUs have been shown to outperform traditional RNNs on a variety of benchmarks, demonstrating their effectiveness in handling sequential data.

In addition to outperforming traditional RNNs, GRUs are computationally cheaper than LSTMs, which makes them well-suited for large-scale applications. The saving comes from a simpler structure: a GRU has two gates and no separate cell state, so it has fewer parameters to learn during training than an LSTM with the same hidden size.

Overall, the incorporation of gated recurrent units in neural networks represents a significant advancement in the field of artificial intelligence. By enabling networks to better capture long-range dependencies and improve performance on sequential tasks, GRUs are helping to push the boundaries of what machines can accomplish. As researchers continue to refine and optimize these models, we can expect to see even greater advancements in the field of neural networks in the years to come.
