Deep learning has revolutionized the field of artificial intelligence, enabling machines to learn complex patterns and make predictions based on vast amounts of data. Recurrent Neural Networks (RNNs) are a popular type of deep learning model that can process sequences of data, making them well-suited for tasks such as speech recognition, natural language processing, and time series prediction. Gated Recurrent Units (GRUs) are a gated variant of RNNs that make it easier to learn long-range dependencies than a plain RNN does.

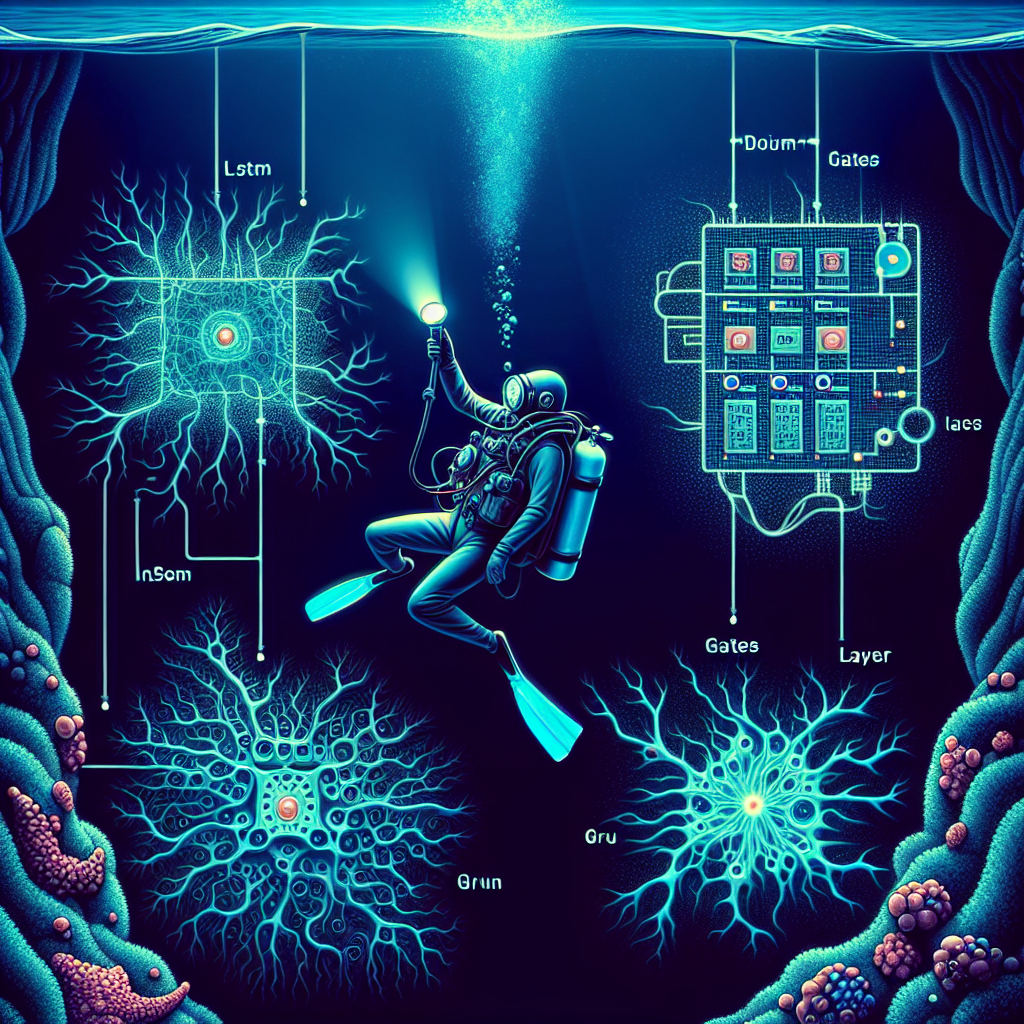

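GRUs were introduced in a 2014 paper by Kyunghyun Cho et al. as a simpler and more computationally efficient alternative to Long Short-Term Memory (LSTM) units, another gated RNN architecture. Like LSTMs, GRUs are designed to address the vanishing gradient problem, in which gradients shrink as they are propagated back through many time steps, making long sequences hard to learn from. The key innovation of GRUs is a pair of gating mechanisms, an update gate and a reset gate, that control the flow of information through the network. The update gate decides how much of the previous hidden state to carry forward, and the reset gate decides how much of it to use when computing the new candidate state. Together they allow the network to capture long-term dependencies in the data.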
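To make the gating idea concrete, here is a minimal sketch of a single GRU time step in plain Python. It is an illustration under stated assumptions, not a reference implementation: the parameter names (`Wz`, `Uz`, `bz`, and so on), the `init_params` helper, and the small random initialization are all hypothetical choices made for this example. It uses one common convention for the update gate, where `z` weights the previous state; some references swap the roles of `z` and `1 - z`.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def init_params(input_size, hidden_size, seed=0):
    """Hypothetical helper: small random weights and zero biases."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    params = {}
    for gate in ("z", "r", "h"):  # update gate, reset gate, candidate state
        params["W" + gate] = mat(hidden_size, input_size)   # input weights
        params["U" + gate] = mat(hidden_size, hidden_size)  # recurrent weights
        params["b" + gate] = [0.0] * hidden_size            # bias
    return params


def gru_step(x, h_prev, p):
    """Compute the next hidden state from input x and previous state h_prev."""
    # Update gate: how much of the previous state to keep.
    z = [sigmoid(a + b + c)
         for a, b, c in zip(matvec(p["Wz"], x), matvec(p["Uz"], h_prev), p["bz"])]
    # Reset gate: how much of the previous state feeds the candidate.
    r = [sigmoid(a + b + c)
         for a, b, c in zip(matvec(p["Wr"], x), matvec(p["Ur"], h_prev), p["br"])]
    # Candidate state, computed from the input and the reset-scaled previous state.
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]
    h_cand = [math.tanh(a + b + c)
              for a, b, c in zip(matvec(p["Wh"], x), matvec(p["Uh"], reset_h), p["bh"])]
    # Blend the previous state and the candidate (z close to 1 keeps the old state).
    return [zi * hp + (1.0 - zi) * hc for zi, hp, hc in zip(z, h_prev, h_cand)]


# Run the cell over a short two-step sequence of 3-dimensional inputs.
params = init_params(input_size=3, hidden_size=4)
h = [0.0] * 4
for x in [[1.0, 0.5, -0.5], [0.0, 0.2, 0.1]]:
    h = gru_step(x, h, params)
```

Because the new state is a blend of the previous state and a `tanh` output, a gate value near 1 passes the old state through almost unchanged, which is what lets information survive across many time steps.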

One of the main advantages of GRUs is their ability to learn complex patterns in sequential data while requiring fewer parameters than LSTMs of the same hidden size. This generally makes them faster to train and more memory-efficient, which suits applications with limited computational resources. Additionally, GRUs have been reported to match or outperform LSTMs on certain tasks, such as language modeling and machine translation, although results vary by task and dataset and neither architecture is consistently better.
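The parameter saving can be estimated with a quick calculation. A GRU layer has three weight blocks (update gate, reset gate, candidate state), while an LSTM layer has four (input, forget, and output gates plus the cell candidate). The sketch below assumes one bias vector per block; the function names are hypothetical, and frameworks that keep two bias vectors per block (PyTorch, for example) will report slightly higher totals.

```python
def recurrent_layer_params(input_size, hidden_size, num_blocks):
    """Parameter count for one recurrent layer, assuming one bias per block."""
    per_block = (
        hidden_size * input_size     # input-to-hidden weights
        + hidden_size * hidden_size  # hidden-to-hidden weights
        + hidden_size                # bias
    )
    return num_blocks * per_block


def gru_params(input_size, hidden_size):
    return recurrent_layer_params(input_size, hidden_size, num_blocks=3)


def lstm_params(input_size, hidden_size):
    return recurrent_layer_params(input_size, hidden_size, num_blocks=4)


print(gru_params(128, 256))   # 295680
print(lstm_params(128, 256))  # 394240
```

Under these assumptions a GRU layer has exactly three quarters of the parameters of an LSTM layer with the same input and hidden sizes.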

Researchers have explored the capabilities of GRUs in a variety of applications, from speech recognition to music generation. In a recent study, researchers at Google Brain demonstrated that GRUs can effectively model the dynamics of music sequences, producing more realistic and coherent compositions than traditional RNNs. Other studies have shown that GRUs can improve on plain RNNs in tasks such as sentiment analysis, question answering, and image captioning.

Despite their advantages, GRUs are not a one-size-fits-all solution for every deep learning problem. Like any neural network architecture, the performance of GRUs can vary depending on the specific task and dataset. Researchers continue to investigate ways to further optimize and enhance the capabilities of GRUs, such as incorporating attention mechanisms or combining them with other types of neural networks.

In conclusion, Gated Recurrent Units (GRUs) have emerged as a powerful tool for modeling sequential data in deep learning. Their ability to capture long-term dependencies and learn complex patterns makes them well-suited for a wide range of applications, from natural language processing to music generation. As researchers continue to explore the capabilities of GRUs, we can expect to see further advancements in the field of deep learning and artificial intelligence.
