Fix today. Protect forever.

Secure your devices with the #1 malware removal and protection software

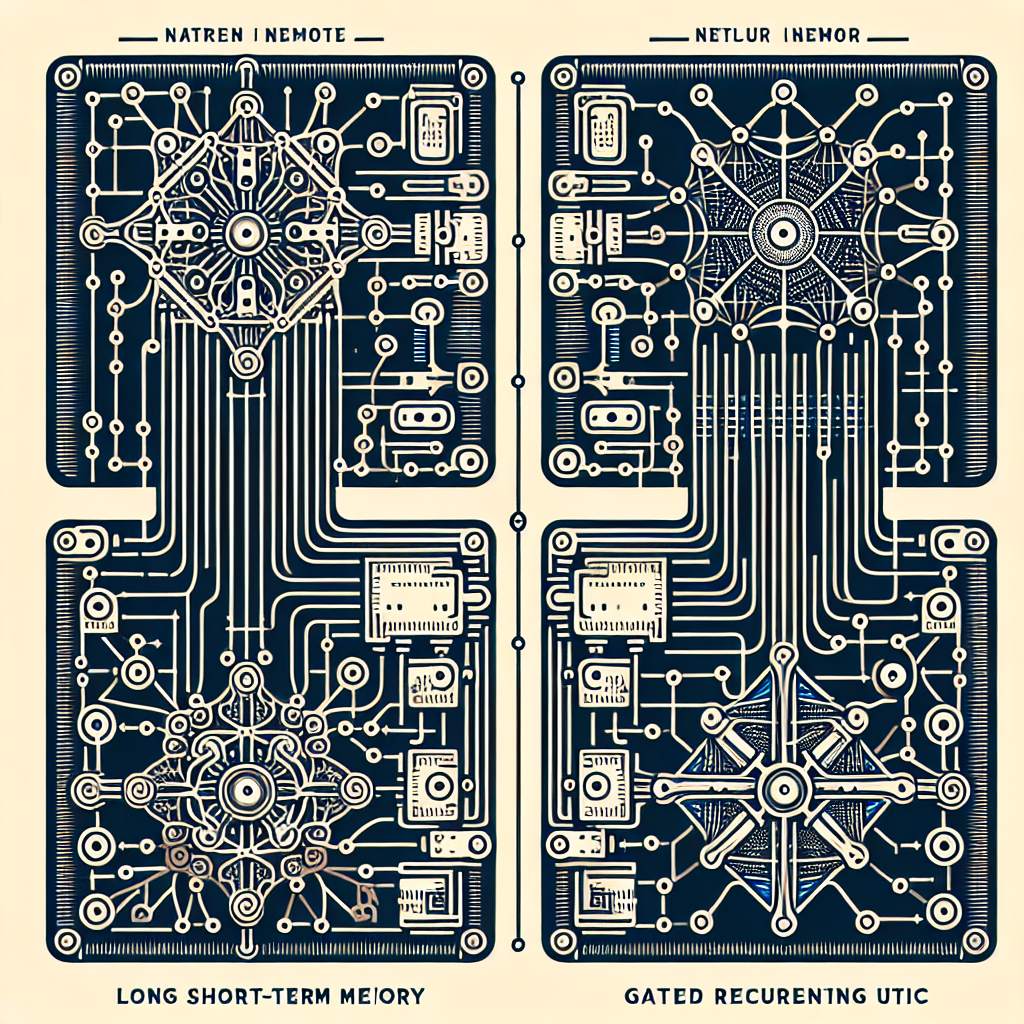

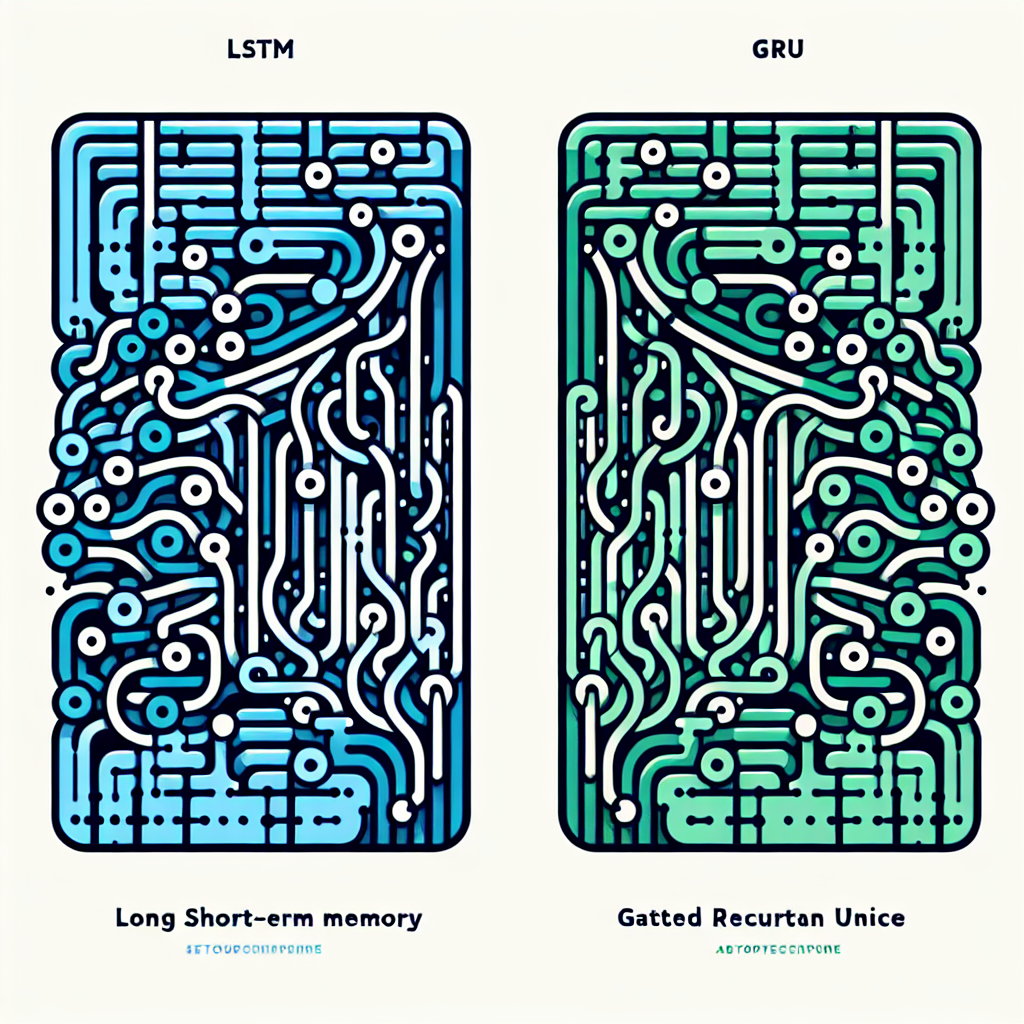

Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) are two popular architectures for recurrent neural networks (RNNs). Both are designed to capture and learn long-term dependencies in sequential data, which makes them well suited to tasks such as natural language processing, time series analysis, and speech recognition.

LSTMs were introduced in 1997 by Hochreiter and Schmidhuber to address the vanishing gradient problem that makes traditional RNNs hard to train on long sequences. An LSTM uses a system of gates to control the flow of information through a memory cell. In the now-standard form these are the input, forget, and output gates (the forget gate was added by Gers, Schmidhuber, and Cummins in 2000). The gates let the network decide what to keep and what to discard over long sequences. The architecture has been successful across many applications and has become a standard building block in deep learning.
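
To make the gating concrete, here is a minimal sketch of a single LSTM time step in plain Python. It assumes the common modern formulation (input, forget, and output gates plus a tanh candidate, each with input weights `W`, recurrent weights `U`, and a bias `b`). The helper names `affine`, `make_params`, and `lstm_step` are hypothetical, chosen for illustration, and the lists-of-lists arithmetic trades speed for readability.

```python
import math
import random
from typing import Dict, Iterable, List, Optional, Tuple

Vector = List[float]
Matrix = List[List[float]]
GateParams = Tuple[Matrix, Matrix, Vector]  # (W: input weights, U: recurrent weights, b: bias)


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def affine(W: Matrix, U: Matrix, b: Vector, x: Vector, h: Vector) -> Vector:
    """Pre-activation W @ x + U @ h + b for one gate, using plain lists."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(W, U, b)
    ]


def make_params(input_size: int, hidden_size: int, names: Iterable[str],
                rng: Optional[random.Random] = None,
                scale: float = 0.1) -> Dict[str, GateParams]:
    """Hypothetical initializer: small uniform weights, or all zeros if rng is None."""
    def mat(rows: int, cols: int) -> Matrix:
        return [[rng.uniform(-scale, scale) if rng is not None else 0.0
                 for _ in range(cols)] for _ in range(rows)]
    return {n: (mat(hidden_size, input_size), mat(hidden_size, hidden_size),
                [0.0] * hidden_size)
            for n in names}


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, GateParams]) -> Tuple[Vector, Vector]:
    """One LSTM time step; returns (h_t, c_t)."""
    i = [sigmoid(v) for v in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(v) for v in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(v) for v in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(v) for v in affine(*params["g"], x, h_prev)]  # candidate cell content
    # Cell state: keep part of the old memory, add part of the new candidate.
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    # Hidden state: a gated, squashed view of the cell state.
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


# Usage: run a 3-step sequence of 3-dimensional inputs through a 4-unit cell.
rng = random.Random(0)
params = make_params(3, 4, ("i", "f", "o", "g"), rng)
h, c = [0.0] * 4, [0.0] * 4
for x in ([0.5, -1.0, 0.2], [0.1, 0.3, -0.4], [-0.2, 0.8, 0.0]):
    h, c = lstm_step(x, h, c, params)
print("h:", [round(v, 4) for v in h])
print("c:", [round(v, 4) for v in c])
```

With all-zero weights every gate outputs 0.5 and the candidate is 0, so the cell state is simply halved at each step; that makes a convenient sanity check when experimenting with the sketch.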

GRUs, on the other hand, were introduced in 2014 by Cho et al. as a simpler and more computationally efficient alternative to LSTMs. A GRU also uses gates, an update gate and a reset gate, to control the flow of information, but it has fewer parameters and does less computation per time step than an LSTM of the same hidden size. As a result, GRUs are often faster to train and can be less prone to overfitting on smaller datasets, which has made them a popular choice for researchers and practitioners alike.
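
A matching sketch of one GRU time step follows, in the same plain-Python style. It assumes the formulation in which the reset gate scales the previous hidden state before the candidate is computed, and the new state is $h_t = (1 - z_t) h_{t-1} + z_t \tilde{h}_t$; some references and libraries swap $z_t$ and $1 - z_t$, or apply the reset gate after the recurrent matrix product. The helper names are again hypothetical.

```python
import math
import random
from typing import Dict, Iterable, List, Optional, Tuple

Vector = List[float]
Matrix = List[List[float]]
GateParams = Tuple[Matrix, Matrix, Vector]  # (W: input weights, U: recurrent weights, b: bias)


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def affine(W: Matrix, U: Matrix, b: Vector, x: Vector, h: Vector) -> Vector:
    """Pre-activation W @ x + U @ h + b for one gate, using plain lists."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + bias
        for w_row, u_row, bias in zip(W, U, b)
    ]


def make_params(input_size: int, hidden_size: int, names: Iterable[str],
                rng: Optional[random.Random] = None,
                scale: float = 0.1) -> Dict[str, GateParams]:
    """Hypothetical initializer: small uniform weights, or all zeros if rng is None."""
    def mat(rows: int, cols: int) -> Matrix:
        return [[rng.uniform(-scale, scale) if rng is not None else 0.0
                 for _ in range(cols)] for _ in range(rows)]
    return {n: (mat(hidden_size, input_size), mat(hidden_size, hidden_size),
                [0.0] * hidden_size)
            for n in names}


def gru_step(x: Vector, h_prev: Vector, params: Dict[str, GateParams]) -> Vector:
    """One GRU time step; returns h_t (there is no separate cell state)."""
    z = [sigmoid(v) for v in affine(*params["z"], x, h_prev)]  # update gate
    r = [sigmoid(v) for v in affine(*params["r"], x, h_prev)]  # reset gate
    # Candidate state: the reset gate controls how much of h_prev feeds into it.
    reset_h = [rj * hj for rj, hj in zip(r, h_prev)]
    n = [math.tanh(v) for v in affine(*params["n"], x, reset_h)]
    # Interpolate between the previous state and the candidate.
    return [(1.0 - zj) * hj + zj * nj for zj, hj, nj in zip(z, h_prev, n)]


# Usage: same toy sequence as an LSTM would see, but only one state vector.
rng = random.Random(0)
params = make_params(3, 4, ("z", "r", "n"), rng)
h = [0.0] * 4
for x in ([0.5, -1.0, 0.2], [0.1, 0.3, -0.4], [-0.2, 0.8, 0.0]):
    h = gru_step(x, h, params)
print("h:", [round(v, 4) for v in h])
```

Compared with the LSTM step, there are three weight blocks instead of four and one state vector instead of two, which is where the savings in parameters and per-step computation come from.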

When comparing LSTM and GRU, a few key differences stand out. The first is the number of gates: LSTMs have three (input, forget, and output), while GRUs have two (update and reset). Counting the candidate-state computation as well, an LSTM layer has four sets of weights and a GRU layer three, so at the same hidden size an LSTM has about a third more parameters. The extra gating may let LSTMs capture more complex patterns in the data, but it also makes each time step more expensive and training slower than with a GRU.
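
The size difference is easy to check by hand. A rough sketch, assuming a single layer with input size d and hidden size h, where every gate and the candidate state has an input weight matrix (h × d), a recurrent weight matrix (h × h), and a bias vector of length h. The function names are hypothetical; `biases_per_block=2` mimics frameworks such as PyTorch, which keep separate input and recurrent bias vectors.

```python
def block_size(input_size: int, hidden_size: int, biases_per_block: int = 1) -> int:
    """Parameters in one gate/candidate block: W (h x d) + U (h x h) + bias(es)."""
    return (hidden_size * input_size
            + hidden_size * hidden_size
            + biases_per_block * hidden_size)


def lstm_param_count(input_size: int, hidden_size: int, biases_per_block: int = 1) -> int:
    """One LSTM layer: input, forget, output gates + candidate cell = 4 blocks."""
    return 4 * block_size(input_size, hidden_size, biases_per_block)


def gru_param_count(input_size: int, hidden_size: int, biases_per_block: int = 1) -> int:
    """One GRU layer: update, reset gates + candidate state = 3 blocks."""
    return 3 * block_size(input_size, hidden_size, biases_per_block)


lstm, gru = lstm_param_count(100, 128), gru_param_count(100, 128)
print(f"LSTM {lstm:,} params, GRU {gru:,} params ({gru / lstm:.0%} of LSTM)")
# prints: LSTM 117,248 params, GRU 87,936 params (75% of LSTM)
```

Because every block has the same shape, the GRU always comes out at exactly three quarters of the LSTM's size under these assumptions, whatever the input and hidden sizes.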

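Another important difference is how the two architectures carry information from one time step to the next. An LSTM maintains a separate cell state alongside its hidden state. The cell state is updated additively (the old contents are scaled by the forget gate and new content is admitted by the input gate) rather than being passed through a squashing nonlinearity at every step, which lets information and gradients flow across many time steps with little attenuation. A GRU, on the other hand, has no separate cell state: it merges the two into a single hidden state, which it updates by interpolating between the previous state and a new candidate under the control of the update gate. This simpler design is cheaper to compute, although without an output gate the GRU exposes its entire state at every step.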
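
The contrast is easiest to see in the state-update equations. In one common notation (assumed here), the gate vectors $f_t, i_t, o_t, z_t$ take values in $(0, 1)$, $\tilde{c}_t$ and $\tilde{h}_t$ are the candidate vectors, and $\odot$ denotes elementwise multiplication:

$$
\begin{aligned}
\text{LSTM:}\quad c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t, \qquad h_t = o_t \odot \tanh(c_t) \\
\text{GRU:}\quad h_t &= (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

In both cases the previous state reaches the new one through an elementwise, gate-weighted sum rather than only through a matrix multiplication followed by a nonlinearity, and this direct path is widely credited with helping gradients survive over long spans. Some papers and libraries write the GRU update with $z_t$ and $1 - z_t$ swapped.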

In terms of performance, both LSTM and GRU have proven effective across a wide range of tasks, and empirical comparisons have generally not found either one to be consistently better; results depend on the dataset and on tuning. LSTMs are often preferred where long-range context matters, as in machine translation and sentiment analysis, while GRUs are often chosen where faster training and a smaller model matter, and they have been applied to tasks such as speech recognition and image captioning.

In conclusion, LSTM and GRU are two popular recurrent neural network architectures, each with its own strengths and weaknesses. LSTMs are known for their ability to capture long-term dependencies and complex patterns in data, while GRUs offer a simpler and more computationally efficient alternative. The choice between them ultimately depends on the task at hand and on the trade-off between performance and efficiency.
