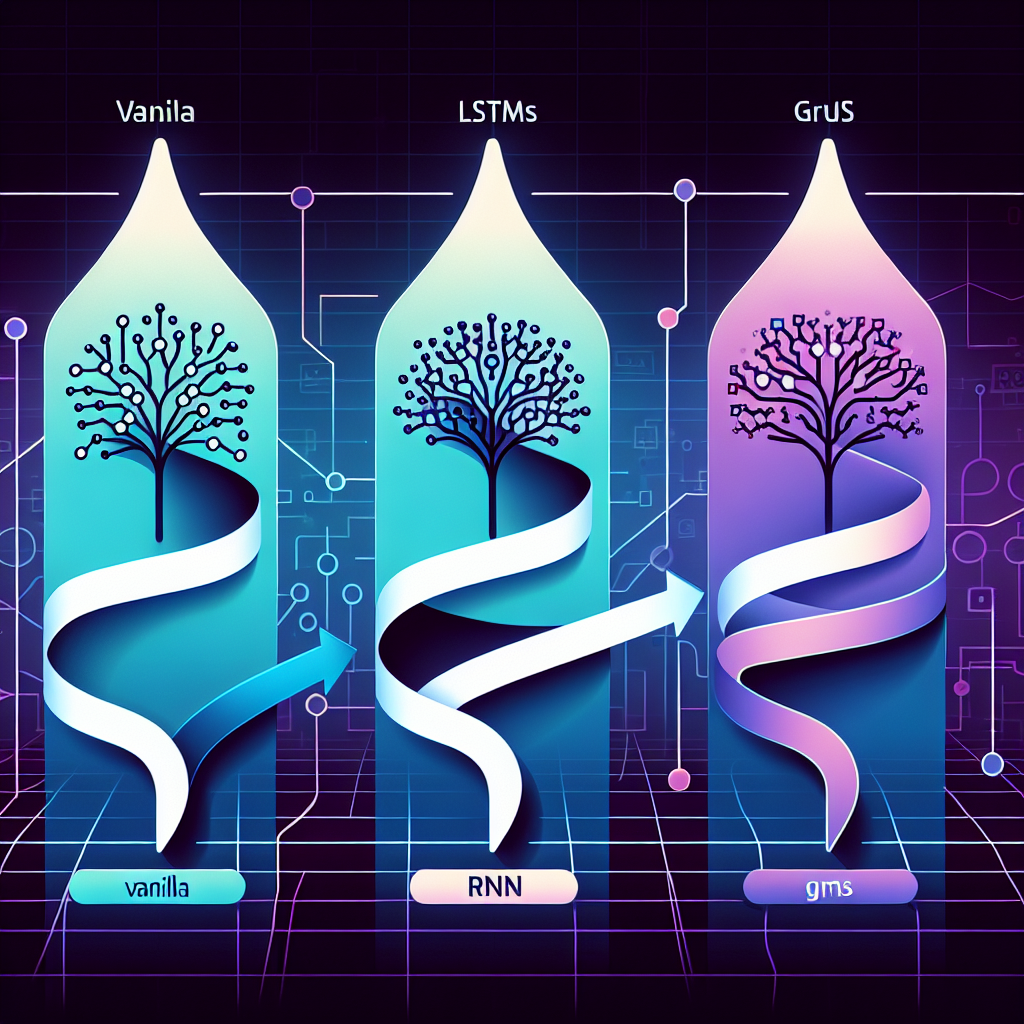

Sequential data analysis plays a crucial role in various fields such as natural language processing, speech recognition, and time series forecasting. Recurrent Neural Networks (RNNs) are commonly used for analyzing sequential data due to their ability to capture dependencies over time. However, traditional RNNs suffer from the vanishing gradient problem, which makes it difficult for them to learn long-range dependencies in sequential data.
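The vanishing gradient effect can be illustrated numerically. The sketch below is a toy, not a full network: it assumes a one-unit recurrent network `h_t = tanh(w * h_{t-1})` with no input, and the name `gradient_through_time` is made up for this example. It multiplies the per-step derivatives to show how quickly the gradient with respect to the initial state shrinks as the sequence gets longer.

```python
import math

def gradient_through_time(w, h0, steps):
    """Return d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h, grad = h0, 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d tanh(w * h_prev) / d h_prev = w * (1 - tanh(w * h_prev)^2)
        grad *= w * (1.0 - h * h)
    return grad

if __name__ == "__main__":
    for steps in (5, 20, 50):
        print(steps, gradient_through_time(w=0.9, h0=0.5, steps=steps))
```

Each factor in the product is at most `|w|`, so with `|w| < 1` the gradient decays at least geometrically with the number of time steps. This is why early inputs have almost no influence on learning in a plain RNN.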

To address this issue, Cho et al. introduced Gated Recurrent Units (GRUs) in 2014, a variant of RNNs that uses gating mechanisms to better capture long-term dependencies in sequential data. GRUs often outperform traditional RNNs on tasks that involve long sequences, which has made them a popular choice for sequential data analysis.

One of the key advantages of GRUs is their ability to selectively update and forget information at each time step. This is achieved through two gates: an update gate and a reset gate. The update gate controls how much of the previous hidden state is carried over to the current time step and how much is replaced by a newly computed candidate state. The reset gate determines how much of the previous hidden state is used when that candidate state is computed from the current input. Because the update gate can keep the hidden state almost unchanged across many steps, information and gradients can survive over long spans, which helps GRUs capture long-term dependencies in sequential data.
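The gating described above can be written out as a single time step. The following is a minimal sketch in plain Python, not a reference implementation: the helper names (`init_params`, `gru_step`), the parameter names (`W_*`, `U_*`, `b_*`), and the random initialization are all assumptions made for illustration. It also assumes the convention in which an update gate value near 1 keeps the previous hidden state; some papers and libraries swap the roles of `z` and `1 - z`.

```python
import math
import random

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def init_params(input_size, hidden_size, seed=0):
    """Hypothetical helper: small random weights and zero biases for z, r, h."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]
    p = {}
    for g in ("z", "r", "h"):
        p["W_" + g] = mat(hidden_size, input_size)   # input weights
        p["U_" + g] = mat(hidden_size, hidden_size)  # recurrent weights
        p["b_" + g] = [0.0] * hidden_size            # bias
    return p

def gru_step(x, h_prev, p):
    """One GRU time step: returns the new hidden state."""
    def pre(g, h):
        # W_g x + U_g h + b_g
        return [a + b + c for a, b, c in
                zip(matvec(p["W_" + g], x), matvec(p["U_" + g], h), p["b_" + g])]
    z = [sigmoid(a) for a in pre("z", h_prev)]       # update gate
    r = [sigmoid(a) for a in pre("r", h_prev)]       # reset gate
    # The reset gate scales the previous state before it meets the input.
    rh = [ri * hi for ri, hi in zip(r, h_prev)]
    h_cand = [math.tanh(a) for a in pre("h", rh)]    # candidate state
    # The update gate interpolates between old state and candidate.
    return [zi * hp + (1.0 - zi) * hc for zi, hp, hc in zip(z, h_prev, h_cand)]

if __name__ == "__main__":
    params = init_params(input_size=3, hidden_size=4)
    h = [0.0] * 4
    for x in ([0.1, 0.2, 0.3], [0.0, -0.1, 0.5]):
        h = gru_step(x, h, params)
    print(h)
```

Setting the update-gate bias `b_z` to a large positive value drives `z` toward 1, and the step then returns the previous hidden state almost unchanged. That is the mechanism that lets a GRU hold information over many time steps.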

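Another advantage of GRUs is their computational efficiency compared to other gated RNN variants such as Long Short-Term Memory (LSTM) units. A GRU has two gates and no separate cell state, while an LSTM has three gates plus a cell state, so for the same input and hidden sizes a GRU has roughly three quarters of the parameters. This generally makes GRUs faster to train and better suited to applications with limited computational resources.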
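The size difference between a GRU and an LSTM layer can be made concrete with a quick count. The sketch below assumes the textbook formulation with one input weight matrix, one recurrent weight matrix, and one bias vector per gate or candidate block; the function names are invented for this example. Some frameworks count differently (PyTorch, for instance, keeps two bias vectors per block), so treat the numbers as illustrative.

```python
def recurrent_params(input_size, hidden_size, num_blocks):
    """Parameters for num_blocks blocks, each with W (h x i), U (h x h), and b (h)."""
    per_block = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return num_blocks * per_block

def gru_params(input_size, hidden_size):
    # Update gate, reset gate, candidate state
    return recurrent_params(input_size, hidden_size, 3)

def lstm_params(input_size, hidden_size):
    # Input gate, forget gate, output gate, candidate cell state
    return recurrent_params(input_size, hidden_size, 4)

if __name__ == "__main__":
    i, h = 128, 256
    print("GRU: ", gru_params(i, h))    # 295680
    print("LSTM:", lstm_params(i, h))   # 394240
    print("ratio:", gru_params(i, h) / lstm_params(i, h))  # 0.75
```

Under this formulation the ratio is always 3 to 4, whatever the layer sizes.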

In recent years, researchers have further enhanced the performance of GRUs by introducing various modifications and extensions. For example, the use of stacked GRUs, where multiple layers of GRUs are stacked on top of each other, has been shown to improve the model’s ability to capture complex dependencies in sequential data. Additionally, techniques such as attention mechanisms and residual connections have been integrated with GRUs to further enhance their performance in sequential data analysis tasks.
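Stacking can be sketched in a few lines: at every time step, each layer's new hidden state becomes the input to the layer above it. The code below is a self-contained illustration in plain Python under assumed names (`make_layer`, `gru_step`, `stacked_gru`) with random, untrained weights; it leaves out attention and residual connections. It uses the convention in which an update gate near 1 keeps the previous state.

```python
import math
import random

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def affine(W, U, b, x, h):
    """Compute W x + U h + b, one output row at a time."""
    return [sum(w * xi for w, xi in zip(w_row, x)) +
            sum(u * hi for u, hi in zip(u_row, h)) + bi
            for w_row, u_row, bi in zip(W, U, b)]

def make_layer(input_size, hidden_size, rng):
    """Hypothetical helper: (W, U, b) triples for the z, r, and h blocks."""
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {name: (mat(hidden_size, input_size),
                   mat(hidden_size, hidden_size),
                   [0.0] * hidden_size)
            for name in ("z", "r", "h")}

def gru_step(layer, x, h_prev):
    z = [sigmoid(a) for a in affine(*layer["z"], x, h_prev)]   # update gate
    r = [sigmoid(a) for a in affine(*layer["r"], x, h_prev)]   # reset gate
    rh = [ri * hi for ri, hi in zip(r, h_prev)]
    cand = [math.tanh(a) for a in affine(*layer["h"], x, rh)]  # candidate state
    return [zi * hp + (1.0 - zi) * c for zi, hp, c in zip(z, h_prev, cand)]

def stacked_gru(layers, sequence):
    """Run a sequence through stacked layers; return top-layer outputs and final states."""
    states = [[0.0] * len(layer["z"][2]) for layer in layers]
    outputs = []
    for x in sequence:
        layer_input = x
        for i, layer in enumerate(layers):
            states[i] = gru_step(layer, layer_input, states[i])
            layer_input = states[i]      # feed this layer's state to the next layer
        outputs.append(layer_input)
    return outputs, states

if __name__ == "__main__":
    rng = random.Random(0)
    # Two layers: 3 input features -> 4 hidden units -> 4 hidden units
    layers = [make_layer(3, 4, rng), make_layer(4, 4, rng)]
    sequence = [[0.1, 0.2, 0.3], [0.0, -0.1, 0.5], [0.4, 0.4, -0.2]]
    outputs, final_states = stacked_gru(layers, sequence)
    print(len(outputs), len(outputs[0]), len(final_states))
```

Note that the input size of every layer after the first must equal the hidden size of the layer below it. In practice, deep learning libraries expose stacking as a layer-count option rather than requiring a hand-written loop.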

Overall, Gated Recurrent Units (GRUs) have proven to be a powerful tool for sequential data analysis. Their ability to capture long-term dependencies, their computational efficiency, and the ease with which they can be extended make them a popular choice for a wide range of applications. As research in this field continues, we can expect further improvements in how GRUs are used to analyze sequential data.
