Tag: LSTM

Genaue Vorhersage von Aktienkursen mit Hilfe von neuronalen LSTM- und GRU-Netzen: Ein Deep-Learning-Ansatz für die Vorhersage von Aktienkurs-Zeitreihen-Daten in Gruppen (German Edition)

Price: $47.00

(as of Dec 27, 2024 07:14:24 UTC)

Publisher : Verlag Unser Wissen (August 11, 2021)

Language : German

Paperback : 52 pages

ISBN-10 : 6204005618

ISBN-13 : 978-6204005614

Item Weight : 3.39 ounces

Dimensions : 5.91 x 0.12 x 8.66 inches

In this post, we present an innovative approach to making precise stock price predictions with LSTM and GRU neural networks. These deep learning models have proven very effective at analyzing complex time-series data and producing precise predictions. We will discuss how these models work and how they can be applied to stock price time-series data to make accurate predictions. We will also look at how these models can be applied to groups of stocks to achieve even more precise predictions.

If you are interested in learning more about this innovative approach to stock price prediction, read on and discover how LSTM and GRU neural networks can help you make better investment decisions.

#Genaue #Vorhersage #von #Aktienkursen #mit #Hilfe #von #neuronalen #LSTM #und #GRUNetzen #Ein #DeepLearningAnsatz #für #die #Vorhersage #von #AktienkursZeitreihenDaten #Gruppen #German #Edition

LSTM Networks : Exploring the Evolution and Impact of Long Short-Term Memory Networks in Machine Learning

Price: $28.97

(as of Dec 26, 2024 18:53:23 UTC)

ASIN : B0C53JB435

Publication date : May 11, 2023

Language : English

File size : 1159 KB

Simultaneous device usage : Unlimited

Text-to-Speech : Enabled

Screen Reader : Supported

Enhanced typesetting : Enabled

X-Ray : Not Enabled

Word Wise : Not Enabled

Print length : 42 pages

LSTM Networks: Exploring the Evolution and Impact of Long Short-Term Memory Networks in Machine Learning

Long Short-Term Memory (LSTM) networks have emerged as a powerful tool in the field of machine learning, enabling the development of sophisticated models capable of capturing long-term dependencies in data sequences. In this post, we will delve into the evolution of LSTM networks, from their inception to their current state-of-the-art performance, and explore the impact they have had on various applications in machine learning.

LSTM networks were first introduced in 1997 by Hochreiter and Schmidhuber as a solution to the vanishing gradient problem that plagued traditional recurrent neural networks (RNNs). By incorporating a memory cell and gating mechanisms, LSTM networks are able to learn and store information over long periods of time, making them well-suited for tasks such as speech recognition, language modeling, and time series prediction.
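For reference, one step of a standard LSTM cell can be written as follows, with input $x_t$, hidden state $h_t$, cell state $c_t$, logistic sigmoid $\sigma$, and element-wise product $\odot$. This is the widely used form that includes a forget gate $f_t$, which Gers, Schmidhuber, and Cummins added shortly after the original 1997 design; the weight names $W$, $U$, $b$ are our notation.

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

The additive update of $c_t$ is what eases the vanishing gradient problem: when $f_t$ is close to 1, information and gradients can pass through many time steps largely unchanged.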

Over the years, researchers have refined LSTM networks, for example by adding a forget gate and peephole connections, and have combined them with related ideas such as attention mechanisms; LSTM-style gating also inspired related architectures such as highway networks. These advances helped LSTM-based models achieve state-of-the-art results in a wide range of applications, including natural language processing, image captioning, and sentiment analysis.
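In the peephole variant, the gates can also read the cell state. A sketch of the modified gate equations, assuming input $x_t$, previous hidden state $h_{t-1}$, cell state $c_t$, logistic sigmoid $\sigma$, and peephole weight vectors $p_f, p_i, p_o$ applied element-wise with $\odot$ (our notation); the candidate and state updates are unchanged from the standard cell.

```latex
\begin{aligned}
f_t &= \sigma(W_f x_t + U_f h_{t-1} + p_f \odot c_{t-1} + b_f) \\
i_t &= \sigma(W_i x_t + U_i h_{t-1} + p_i \odot c_{t-1} + b_i) \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + p_o \odot c_t + b_o)
\end{aligned}
```

Note that the output gate looks at the updated cell state $c_t$, whereas the forget and input gates look at the previous state $c_{t-1}$.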

The impact of LSTM networks on machine learning has been profound, revolutionizing the way we approach sequential data analysis and paving the way for the development of more complex and accurate models. Their ability to capture long-term dependencies has opened up new possibilities in fields such as healthcare, finance, and autonomous driving, where accurate predictions and decision-making are crucial.

As we continue to push the boundaries of what is possible with LSTM networks, it is clear that their evolution and impact on machine learning will only continue to grow. By staying at the forefront of research and innovation in this area, we can unlock new opportunities and drive advancements in artificial intelligence that have the potential to transform industries and improve lives.

#LSTM #Networks #Exploring #Evolution #Impact #Long #ShortTerm #Memory #Networks #Machine #Learning

LSTM Recurrent Neural Networks for Signature Verification (Paperback or Softback)

Price: 70.28 – 58.57

LSTM Recurrent Neural Networks for Signature Verification (Paperback)

In this comprehensive guide, readers will learn about the cutting-edge technology of LSTM recurrent neural networks and how they can be applied to the field of signature verification.

With the rise of digital signatures and the need for secure authentication methods, signature verification has become a crucial area of research. LSTM recurrent neural networks offer a powerful tool for analyzing and recognizing patterns in sequential data, making them ideal for use in signature verification systems.

This book covers the fundamentals of LSTM networks, the basics of signature verification, and provides step-by-step instructions for implementing an LSTM-based signature verification system. Readers will also learn about the latest research and advancements in the field, as well as practical applications and case studies.

Whether you are a student, researcher, or industry professional looking to expand your knowledge in the field of signature verification, this book is a must-read. Get your copy in paperback today and stay ahead of the curve in this exciting and rapidly evolving field.

#LSTM #Recurrent #Neural #Networks #Signature #Verification #Paperback #Softback

LSTM Recurrent Neural Networks for Signature Verification: A Novel Approach

Price: $64.00

(as of Dec 24, 2024 20:44:21 UTC)

ASIN : 3846589942

Publisher : LAP LAMBERT Academic Publishing (February 6, 2012)

Language : English

Paperback : 104 pages

ISBN-10 : 3846589942

ISBN-13 : 978-3846589946

Item Weight : 5.8 ounces

Dimensions : 5.91 x 0.24 x 8.66 inches

In recent years, there has been a growing interest in using deep learning techniques for signature verification tasks. One popular approach is the use of Long Short-Term Memory (LSTM) recurrent neural networks, which have shown promising results in various sequence modeling tasks.

In this post, we will discuss a novel approach to using LSTM recurrent neural networks for signature verification. Unlike traditional methods that rely on handcrafted features and heuristic rules, LSTM networks have the ability to learn complex patterns and dependencies from raw data, making them well-suited for signature verification tasks.

One key advantage of using LSTM networks for signature verification is their ability to capture long-term dependencies in sequential data. Online signatures, recorded as a time-ordered sequence of pen positions, are inherently sequential: the order, direction, and speed of the strokes form a writer-specific pattern that is difficult to model with traditional methods. LSTM networks can capture these temporal dependencies, which helps them distinguish genuine signatures from forgeries.
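Because an LSTM consumes a sequence of per-step features, online signatures are typically converted into such a sequence before training. A minimal preprocessing sketch, assuming the input is a list of (x, y) pen samples; converting to displacements and scaling by the bounding-box height are our illustrative choices, not the book's method.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def to_delta_sequence(points: List[Point]) -> List[Tuple[float, float]]:
    """Convert absolute pen positions into per-step displacements (dx, dy).

    Displacements make the sequence independent of where the signature was
    written; dividing by the bounding-box height (an illustrative choice)
    makes it independent of overall size while keeping the aspect ratio.
    """
    if len(points) < 2:
        raise ValueError("need at least two pen samples")
    ys = [y for _, y in points]
    height = (max(ys) - min(ys)) or 1.0  # avoid division by zero for flat strokes
    return [
        ((x1 - x0) / height, (y1 - y0) / height)
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    ]


stroke = [(0, 0), (2, 1), (4, 4)]
print(to_delta_sequence(stroke))  # [(0.5, 0.25), (0.5, 0.75)]
```

Each (dx, dy) pair would then be one time step of LSTM input.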

Another frequently cited benefit concerns limited data. Signature verification tasks often suffer from a lack of labeled training data, especially forgeries, which makes it challenging to train accurate models. LSTM networks are not inherently data-efficient, but with regularization, data augmentation, or an encoder trained across many writers, they can generalize reasonably well even when only a few samples per writer are available.
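One common way to cope with few genuine samples per writer is to use an LSTM as an encoder shared across writers and to verify a new signature by its distance to that writer's few enrolled references. A minimal sketch of the decision step only, assuming the embedding vectors have already been produced by such an encoder; the function names and the threshold value are hypothetical, and a real threshold would be tuned on validation data.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def verify(query: Sequence[float], references: List[Sequence[float]], threshold: float) -> bool:
    """Accept the query as genuine if its mean distance to the enrolled
    reference embeddings is below the (hypothetical) threshold."""
    if not references:
        raise ValueError("at least one reference embedding is required")
    mean_dist = sum(euclidean(query, r) for r in references) / len(references)
    return mean_dist < threshold


enrolled = [[0.0, 0.0], [0.0, 2.0]]      # hypothetical embeddings of genuine samples
print(verify([0.0, 1.0], enrolled, 1.5))  # True: mean distance 1.0
print(verify([3.0, 4.0], enrolled, 1.5))  # False: mean distance about 4.3
```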

In conclusion, LSTM recurrent neural networks offer a promising approach to signature verification. Their ability to capture long-term dependencies in pen trajectories, combined with techniques that help them generalize from limited data, makes them a powerful tool for verifying the authenticity of signatures. By leveraging the power of deep learning, researchers and practitioners can develop more accurate and reliable signature verification systems.

#LSTM #Recurrent #Neural #Networks #Signature #Verification #Approach

Accurately Forecasting Stock Prices using LSTM and GRU Neural Networks: A Deep Learning approach for forecasting stock price time-series data in groups

Price: $47.00

(as of Dec 24, 2024 19:24:10 UTC)

Publisher : LAP LAMBERT Academic Publishing (July 30, 2021)

Language : English

Paperback : 52 pages

ISBN-10 : 620419092X

ISBN-13 : 978-6204190921

Item Weight : 3.39 ounces

Dimensions : 5.91 x 0.12 x 8.66 inches

In today’s fast-paced and volatile stock market, accurate stock price forecasts are valuable for making informed investment decisions. Traditional forecasting methods often fail to capture the complex, nonlinear patterns in stock price time-series data. Deep learning techniques such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) neural networks offer a way to model these patterns, although stock prices remain noisy and difficult to predict.

LSTM and GRU neural networks are designed to handle sequential data and are well suited to time-series forecasting. Their gated memory lets them capture long-term dependencies and patterns in stock price data, which makes them strong candidates for forecasting future stock prices.

In this post, we will explore how LSTM and GRU neural networks can be used to accurately forecast stock prices in groups. By grouping stocks based on similar characteristics or industry sectors, we can improve the forecasting accuracy by capturing common trends and patterns within each group.
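A minimal sketch of the grouping step, assuming each ticker carries a sector label; the tickers, sectors, and prices below are hypothetical placeholders. The output is one bucket of series per sector, on which a separate LSTM or GRU model could then be trained.

```python
from collections import defaultdict
from typing import Dict, List


def group_series(
    series: Dict[str, List[float]], sector_of: Dict[str, str]
) -> Dict[str, Dict[str, List[float]]]:
    """Bucket each ticker's price series under its sector label."""
    groups: Dict[str, Dict[str, List[float]]] = defaultdict(dict)
    for ticker, prices in series.items():
        groups[sector_of[ticker]][ticker] = prices
    return dict(groups)


# Hypothetical example data
series = {"AAA": [10.0, 10.5, 11.0], "BBB": [20.0, 19.5, 19.8], "CCC": [5.0, 5.1, 5.3]}
sector_of = {"AAA": "tech", "BBB": "energy", "CCC": "tech"}
groups = group_series(series, sector_of)
print({sector: sorted(members) for sector, members in groups.items()})
```

Pooling the series of a group gives each group model more training sequences than any single stock provides.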

To start, we will preprocess and normalize the stock price time-series data for each group. We will then train LSTM and GRU neural networks on historical stock price data to learn the underlying patterns and trends. By fine-tuning the network parameters and optimizing the model architecture, we can improve the forecasting accuracy and reduce prediction errors.
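A minimal sketch of the preprocessing step, assuming min-max scaling and fixed-length input windows (the text does not specify either). The scaling statistics are computed on the training portion only, so information from the test period does not leak into training; the prices and split point are hypothetical.

```python
from typing import List, Tuple


def fit_min_max(train: List[float]) -> Tuple[float, float]:
    """Return (min, max) computed on the training portion only."""
    return min(train), max(train)


def scale(values: List[float], lo: float, hi: float) -> List[float]:
    """Min-max scale with training statistics; test values may fall outside [0, 1]."""
    span = (hi - lo) or 1.0  # guard against a constant series
    return [(v - lo) / span for v in values]


def make_windows(values: List[float], lookback: int) -> List[Tuple[List[float], float]]:
    """Turn a series into (input window, next value) pairs for supervised training."""
    return [(values[i:i + lookback], values[i + lookback]) for i in range(len(values) - lookback)]


prices = [10.0, 11.0, 12.0, 14.0, 13.0, 15.0]  # hypothetical daily closes
split = 4                                      # first 4 points are training data
lo, hi = fit_min_max(prices[:split])           # (10.0, 14.0)
scaled = scale(prices, lo, hi)                 # [0.0, 0.25, 0.5, 1.0, 0.75, 1.25]
train_pairs = make_windows(scaled[:split], lookback=2)
print(train_pairs)  # [([0.0, 0.25], 0.5), ([0.25, 0.5], 1.0)]
```

Each window becomes one input sequence for the LSTM or GRU, and the value that follows it is the training target.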

Once the models are trained and validated, we can use them to forecast future stock prices for each group. By comparing the predicted prices with the actual prices, we can evaluate the accuracy of the forecasting models and make adjustments as needed.
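A minimal sketch of the evaluation step using mean absolute error (MAE) and root mean squared error (RMSE), two common metrics the text does not name. It also includes a naive persistence baseline (predict the previous day's price), which is not part of the original description but is a useful reference point: a model that cannot beat it adds little. The prices and predictions are hypothetical.

```python
import math
from typing import List


def _check(actual: List[float], predicted: List[float]) -> None:
    if not actual or len(actual) != len(predicted):
        raise ValueError("need equal-length, non-empty sequences")


def mae(actual: List[float], predicted: List[float]) -> float:
    """Mean absolute error."""
    _check(actual, predicted)
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual: List[float], predicted: List[float]) -> float:
    """Root mean squared error; penalizes large misses more than MAE."""
    _check(actual, predicted)
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def naive_forecast(last_observed: float, actual: List[float]) -> List[float]:
    """Persistence baseline: each day's forecast is the previous day's actual price."""
    return [last_observed] + actual[:-1]


actual = [10.0, 12.0, 11.0]       # hypothetical test-period prices
model_pred = [11.0, 11.0, 11.0]   # hypothetical model output
baseline = naive_forecast(9.0, actual)  # [9.0, 10.0, 12.0]
print(mae(actual, model_pred), rmse(actual, model_pred), mae(actual, baseline))
```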

Overall, using LSTM and GRU neural networks to forecast stock prices in groups is a promising approach to modeling the complex dynamics of the stock market. By leveraging the memory capabilities of these networks, we can aim to improve the accuracy and reliability of stock price forecasts and help investors make more informed decisions.

#Accurately #Forecasting #Stock #Prices #LSTM #GRU #Neural #Networks #Deep #Learning #approach #forecasting #stock #price #timeseries #data #groups

Deep Learning: Recurrent Neural Networks in Python: LSTM, GRU, and more RNN machine learning architectures in Python and Theano (Machine Learning in Python)

Price: $2.99

(as of Dec 24, 2024 14:37:11 UTC)

ASIN : B01K31SQQA

Publication date : August 8, 2016

Language : English

File size : 402 KB

Simultaneous device usage : Unlimited

Text-to-Speech : Enabled

Screen Reader : Supported

Enhanced typesetting : Enabled

X-Ray : Not Enabled

Word Wise : Not Enabled

Print length : 87 pages

Deep Learning: Recurrent Neural Networks in Python: LSTM, GRU, and more RNN machine learning architectures in Python and Theano (Machine Learning in Python)

In this post, we will delve into the world of Recurrent Neural Networks (RNNs) in Python, exploring popular architectures such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU). RNNs are a powerful class of neural networks that are designed to handle sequential data, making them ideal for tasks such as time series forecasting, natural language processing, and speech recognition.

We will walk through the implementation of RNNs in Python using Theano, a deep learning library that was popular when the book was published (major development of Theano ended after its 1.0 release in 2017). We will cover the basics of RNNs, including how they work and why they are well suited for sequential data. We will then dive into the implementation of LSTM and GRU architectures, exploring their differences and advantages.
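To make the LSTM/GRU comparison concrete: a GRU keeps no separate cell state and uses two gates (update z and reset r) instead of the LSTM's three. The sketch below is plain Python rather than Theano so it runs without extra libraries; it follows one common formulation, and the weight names in `p` are hypothetical. Conventions differ across sources: PyTorch's `torch.nn.GRU`, for example, writes the update as h' = (1 - z) * n + z * h, so z plays the mirrored role, and it applies the reset gate after the recurrent matrix product.

```python
import math
from typing import Dict, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Matrix-vector product with plain lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x: Vector, h: Vector, p: Dict[str, list]) -> Vector:
    """One GRU step; p holds hypothetical weights W_*, U_* (matrices) and b_* (vectors)."""
    # Update gate: how much of the new candidate replaces the old state.
    z = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["W_z"], x), matvec(p["U_z"], h), p["b_z"])]
    # Reset gate: how much of the old state feeds into the candidate.
    r = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["W_r"], x), matvec(p["U_r"], h), p["b_r"])]
    rh = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(a + b + c) for a, b, c in zip(matvec(p["W_h"], x), matvec(p["U_h"], rh), p["b_h"])]
    # Interpolate between the previous state and the candidate.
    return [(1 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]


# Toy check with input size 1, hidden size 1, and all-zero weights:
# both gates equal sigmoid(0) = 0.5 and the candidate is tanh(0) = 0,
# so the new state is 0.5 * 0.5 + 0.5 * 0 = 0.25.
zeros: Dict[str, list] = {k: [[0.0]] for k in ("W_z", "U_z", "W_r", "U_r", "W_h", "U_h")}
zeros.update({k: [0.0] for k in ("b_z", "b_r", "b_h")})
h_next = gru_step([1.0], [0.5], zeros)
print(h_next)  # [0.25]
```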

By the end of this post, you will have a solid understanding of how to use RNNs in Python for a variety of machine learning tasks. Whether you are a beginner looking to learn more about deep learning or an experienced data scientist looking to expand your skill set, this post will provide you with the knowledge and tools you need to harness the power of RNNs in Python.

#Deep #Learning #Recurrent #Neural #Networks #Python #LSTM #GRU #RNN #machine #learning #architectures #Python #Theano #Machine #Learning #Python

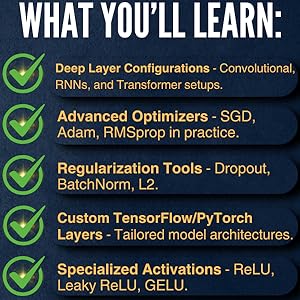

Understanding Deep Learning: Building Machine Learning Systems with PyTorch and TensorFlow: From Neural Networks (CNN, DNN, GNN, RNN, ANN, LSTM, GAN) to Natural Language Processing (NLP)

Price: $74.99 – $61.09

(as of Dec 24, 2024 01:19:52 UTC)

From the Publisher

Master the Fundamentals of Deep Learning with Ease

From Basics to Advanced Techniques, All in One Place

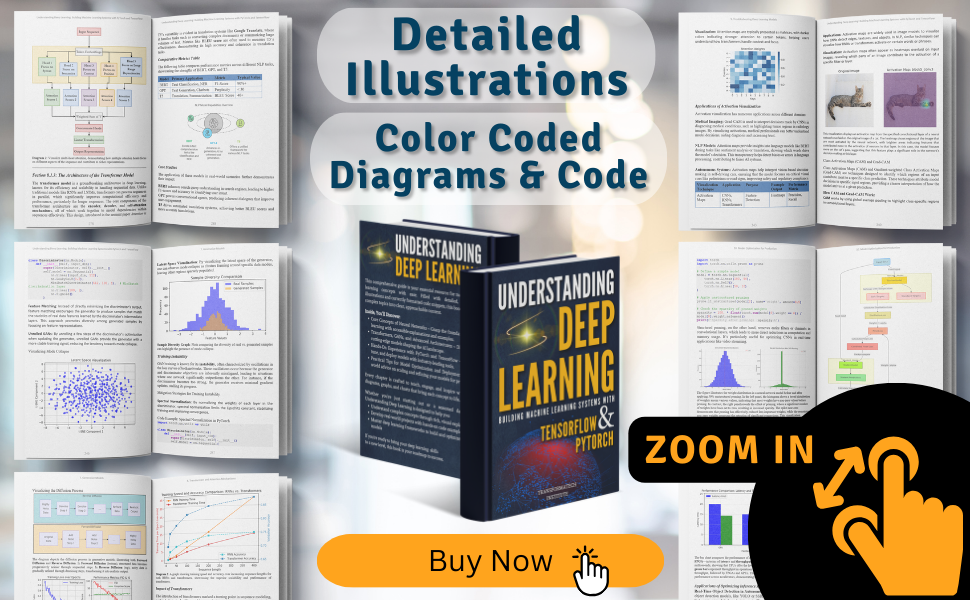

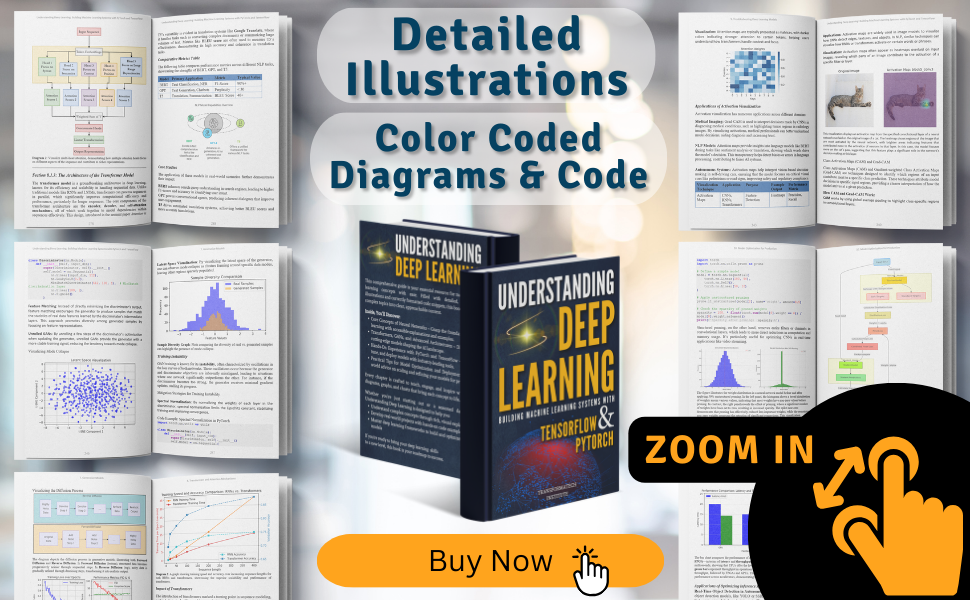

This book is your complete guide to deep learning. Dive into the concepts that power artificial intelligence, neural networks, and modern machine learning systems. Packed with clear, color-coded illustrations and hands-on exercises, this resource is designed to make complex ideas accessible and memorable.

Comprehensive and Practical

Whether you’re a student, professional, or tech enthusiast, this book bridges the gap between theory and real-world applications. Learn to implement cutting-edge models with frameworks like TensorFlow and PyTorch, develop a strong understanding of neural networks, and gain the skills to work with large datasets.

Why This Book Stands Out

Illustrated and Color-Coded: Complex topics made simple with diagrams and color-coded snippets.

Hands-On Approach: Practical exercises with TensorFlow and PyTorch.

For All Levels: Ideal for beginners, advanced learners, and professionals.

Theory Meets Practice: Covers foundational concepts and advanced models.

Expertly Written: Clear and comprehensive, created by industry professionals.

Who Should Read This Book?

Data scientists and AI/ML engineers, software developers, researchers and academics, tech enthusiasts, professionals seeking AI integration insights, and job seekers.

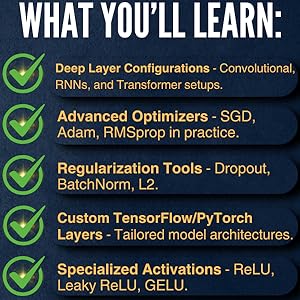

Gain Expertise in Model Architectures

Explore advanced network architectures that drive modern AI applications

In-depth Analysis of Neural Network Layers

Explore neural network layers, from fully connected to specialized ones like convolutional and recurrent. Learn how each layer contributes to feature extraction, sequence modeling, and data compression for various AI applications.

Optimization and Regularization Techniques

Master optimization methods like SGD, Adam, and RMSprop for effective loss minimization. Understand regularization strategies such as Dropout, Batch Normalization, and L2 Regularization to control overfitting and stabilize training.

Building and Training Custom Models with TensorFlow and PyTorch

Gain expertise in constructing and training custom models in TensorFlow and PyTorch. Define architectures, customize activation functions, and integrate complex layers to create models suited for specific industry needs.
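Adam, one of the optimizers named in this overview, keeps running averages of each parameter's gradient and squared gradient and uses bias-corrected versions of them to scale every step. A minimal plain-Python sketch of the update rule from Kingma and Ba (2015) with the usual default hyperparameters; in practice you would use `torch.optim.Adam` or `tf.keras.optimizers.Adam` rather than this illustrative class.

```python
import math
from typing import List


class Adam:
    """Minimal Adam optimizer over a flat list of scalar parameters."""

    def __init__(self, n_params: int, lr: float = 0.001, beta1: float = 0.9,
                 beta2: float = 0.999, eps: float = 1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # first-moment (mean) estimates
        self.v = [0.0] * n_params  # second-moment (uncentered variance) estimates
        self.t = 0                 # step counter used for bias correction

    def step(self, params: List[float], grads: List[float]) -> List[float]:
        """Return updated parameters given the current gradients."""
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # correct the bias toward 0
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated


# Minimize f(theta) = theta^2, whose gradient is 2 * theta.
opt = Adam(n_params=1, lr=0.1)
theta = [1.0]
theta = opt.step(theta, [2 * theta[0]])
print(theta)  # about [0.9]: the first step moves by roughly lr, whatever the gradient scale
```

That scale-invariance of the first steps is one reason Adam is often easier to tune than plain SGD.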

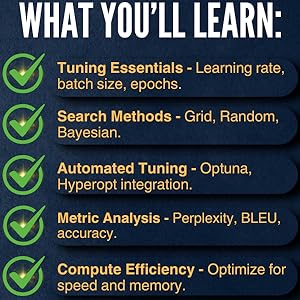

Fine-Tune for Maximum Efficiency

Advanced techniques for selecting hyperparameters that maximize your model’s accuracy and speed

Understanding the Impact of Hyperparameters on Model Performance

Explore hyperparameters like learning rate, batch size, and epochs. See how fine-tuning affects convergence, stability, and model accuracy on test data.

Techniques for Systematic Hyperparameter Tuning

Learn methods like Grid Search, Random Search, and Bayesian Optimization to tune hyperparameters. Understand how each approach suits different models, improving resource efficiency and iteration speed.

Automated Hyperparameter Optimization with Optuna and Hyperopt

Automate hyperparameter tuning with Optuna and Hyperopt. Use these tools to optimize models for peak performance without manual intervention.
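Tools such as Optuna and Hyperopt automate this search; the idea behind the simplest of the listed methods, random search, fits in a few lines. A minimal standard-library sketch, assuming a hypothetical `toy_validation_loss` as a stand-in for "train a model with these settings and return its validation loss". The learning rate is sampled on a log scale, a common practice because useful values span several orders of magnitude.

```python
import random
from typing import Callable, Dict, Tuple


def random_search(
    objective: Callable[[Dict[str, float]], float],
    space: Dict[str, Tuple[float, float]],
    n_trials: int,
    seed: int = 0,
) -> Tuple[Dict[str, float], float]:
    """Sample each hyperparameter uniformly from its (low, high) range and keep
    the setting with the lowest objective value."""
    rng = random.Random(seed)  # seeded for reproducibility
    best_params: Dict[str, float] = {}
    best_score = float("inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in space.items()}
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_loss(p: Dict[str, float]) -> float:
    """Hypothetical stand-in for a real training run; minimized at lr=1e-3, dropout=0.2."""
    return (p["log10_lr"] + 3) ** 2 + (p["dropout"] - 0.2) ** 2


space = {"log10_lr": (-5.0, -1.0), "dropout": (0.0, 0.5)}
best, score = random_search(toy_validation_loss, space, n_trials=50, seed=42)
print(best, score, "learning rate:", 10 ** best["log10_lr"])
```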

Adapt Pre-Trained Models for Custom Applications

Harness the power of transfer learning to adapt large models for your specific needs

Customizing Pre-Trained Models for Specialized Tasks

Adapt models like ResNet, VGG, and BERT for niche applications. Explore layer customization by freezing lower layers and modifying upper layers for feature extraction and tuning to specific tasks.

Fine-Tuning Techniques for Optimal Performance

Master fine-tuning techniques like unfreezing layers, adjusting learning rates, and recalibrating batch sizes to maximize performance, especially in limited data settings.

Managing Transfer Learning Challenges: Domain Shift & Overfitting

Gain strategies for domain adaptation and managing overfitting in transfer learning. Address distribution shifts, apply data augmentation, and perform domain-specific tuning for robust adaptation.
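Freezing lower layers simply means excluding their parameters from the update, and adjusting learning rates often means giving different layers different step sizes. A framework-free sketch of one SGD step under those two ideas; the layer names and values are hypothetical. In PyTorch the same effect is usually achieved by setting `requires_grad = False` on the frozen parameters and passing per-group learning rates to the optimizer.

```python
from typing import Dict, List, Set


def sgd_finetune_step(
    params: Dict[str, List[float]],
    grads: Dict[str, List[float]],
    frozen: Set[str],
    lr: Dict[str, float],
) -> Dict[str, List[float]]:
    """One SGD step that leaves frozen layers untouched and uses a per-layer learning rate."""
    updated: Dict[str, List[float]] = {}
    for name, weights in params.items():
        if name in frozen:
            updated[name] = list(weights)  # frozen: copied unchanged
        else:
            updated[name] = [w - lr[name] * g for w, g in zip(weights, grads[name])]
    return updated


params = {"backbone": [0.5, -0.2], "head": [0.1]}  # hypothetical pre-trained layer + new head
grads = {"backbone": [1.0, 1.0], "head": [2.0]}
new = sgd_finetune_step(params, grads, frozen={"backbone"}, lr={"head": 0.05})
print(new)  # backbone unchanged, head moved by 0.05 * 2.0
```

Unfreezing later in training amounts to removing a layer from `frozen` and giving it its own (typically smaller) learning rate.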

Deep Learning with Detailed, Color-Coded Visuals

Learn deep learning through clear, color-coded illustrations that simplify complex concepts. From neural network architectures to data processing techniques, every page is packed with visuals to support your learning. Code snippets are thoughtfully formatted, making it easy to follow along and implement real-world applications. Perfect for visual learners and professionals seeking practical insights.

ASIN : B0DMP1XC3P

Publisher : Independently published (November 10, 2024)

Language : English

Paperback : 397 pages

ISBN-13 : 979-8346172659

Item Weight : 1.89 pounds

Dimensions : 7 x 0.9 x 10 inches

Customers say

Customers find that the book’s comprehensive coverage of deep learning concepts and clear explanations make it an invaluable resource for both novices and experienced practitioners. The vibrant illustrations and full-color diagrams make complex topics easier to grasp. Readers describe the book as well worth their time and money and as a source of practical guidance for anyone interested in building AI models.

AI-generated from the text of customer reviews

Deep learning has revolutionized the field of artificial intelligence and machine learning in recent years, with its ability to solve complex problems and make predictions with remarkable accuracy. Understanding how deep learning works and how to build machine learning systems using popular frameworks like PyTorch and TensorFlow is essential for anyone looking to work in this rapidly growing field.

In this post, we will explore the fundamentals of deep learning, including neural networks such as Convolutional Neural Networks (CNN), Deep Neural Networks (DNN), Graph Neural Networks (GNN), Recurrent Neural Networks (RNN), Artificial Neural Networks (ANN), Long Short-Term Memory (LSTM), and Generative Adversarial Networks (GAN). We will also delve into the exciting world of Natural Language Processing (NLP) and how deep learning is used to analyze and understand human language.

PyTorch and TensorFlow are two of the most popular deep learning frameworks used by researchers and developers to build powerful machine learning models. These frameworks provide a wide range of tools and libraries for building and training neural networks, as well as pre-trained models that can be fine-tuned for specific tasks.

Whether you are a beginner looking to learn the basics of deep learning or an experienced data scientist looking to expand your skills, understanding how to build machine learning systems with PyTorch and TensorFlow is essential. Stay tuned for more in-depth discussions on each of these topics and how you can apply them to real-world problems in the field of artificial intelligence.

#Understanding #Deep #Learning #Building #Machine #Learning #Systems #PyTorch #TensorFlow #Neural #Networks #CNN #DNN #GNN #RNN #ANN #LSTM #GAN #Natural #Language #Processing #NLP

Deep Learning Crash Course for Beginners with Python: Theory and Applications of Artificial Neural Networks, CNN, RNN, LSTM and Autoencoders using … Learning & Data Science for Beginners)

Price: $24.99 – $20.50

(as of Dec 17, 2024 02:12:54 UTC)

Welcome to our Deep Learning Crash Course for Beginners with Python! In this post, we will cover the theory and applications of artificial neural networks, convolutional neural networks (CNN), recurrent neural networks (RNN), long short-term memory (LSTM) networks, and autoencoders using TensorFlow, Keras, and other popular libraries.

Deep learning is a subset of machine learning that focuses on learning representations of data through multiple layers of neural networks. It has revolutionized many industries, from healthcare to finance to autonomous driving, and is a powerful tool for pattern recognition, natural language processing, computer vision, and many other tasks.
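Learning representations through multiple layers can be made concrete with a forward pass: each layer computes a weighted sum plus bias of the previous layer's output, followed by a nonlinearity such as ReLU. A plain-Python sketch with hand-picked, hypothetical weights; in a trained network they would be learned by backpropagation, which is omitted here.

```python
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights, bias)


def dense(x: Sequence[float], w: List[List[float]], b: List[float]) -> List[float]:
    """Fully connected layer: one output per row of w."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def relu(v: List[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def mlp_forward(x: Sequence[float], layers: List[Layer]) -> List[float]:
    """Apply layers in order, with ReLU after every layer except the last."""
    out = list(x)
    for i, (w, b) in enumerate(layers):
        out = dense(out, w, b)
        if i < len(layers) - 1:
            out = relu(out)
    return out


layers: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # hidden layer: 2 inputs -> 2 units
    ([[1.0, 2.0]], [0.1]),                    # output layer: 2 units -> 1 output
]
out = mlp_forward([2.0, 1.0], layers)
print(out)  # hidden = [1.0, 1.5], output = 1.0 + 3.0 + 0.1, about 4.1
```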

In this crash course, we will start by introducing the basics of neural networks and how they work. We will then dive into more advanced topics such as CNNs for image classification, RNNs for sequential data, LSTMs for time series analysis, and autoencoders for unsupervised learning.
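The core operation of a CNN layer is sliding a small filter over the input image. A minimal plain-Python sketch of a stride-1, unpadded ("valid") 2D convolution; as in most deep learning libraries, it is technically a cross-correlation because the kernel is not flipped. The edge-detecting kernel is hand-picked for illustration, whereas a CNN learns its kernels from data.

```python
from typing import List

Grid = List[List[float]]


def conv2d_valid(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D cross-correlation with stride 1 and no padding."""
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(ow)
        ]
        for i in range(oh)
    ]


image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
edge = [[-1, 1]]  # responds where intensity increases from left to right
result = conv2d_valid(image, edge)
print(result)  # [[0, 1, 0], [0, 1, 0], [0, 1, 0]]
```

The output is large only along the vertical edge, which is the kind of local feature early CNN layers tend to detect.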

Throughout the course, we will use Python as our programming language of choice, along with popular libraries like TensorFlow and Keras. We will also cover the basics of data preprocessing, model training, evaluation, and deployment.

Whether you are a complete beginner or have some experience with machine learning, this crash course will provide you with a solid foundation in deep learning and data science. Stay tuned for our upcoming posts, where we will dive deeper into specific topics and applications of deep learning. Happy learning!

#Deep #Learning #Crash #Beginners #Python #Theory #Applications #Artificial #Neural #Networks #CNN #RNN #LSTM #Autoencoders #Learning #Data #Science #Beginners

Understanding Deep Learning: Building Machine Learning Systems with PyTorch and TensorFlow: From Neural Networks (CNN, DNN, GNN, RNN, ANN, LSTM, GAN) to Natural Language Processing (NLP)

Price: $2.99

(as of Nov 24, 2024 06:40:19 UTC)

ASIN : B0DLLM3W8T

Publication date : October 30, 2024

Language : English

File size : 11416 KB

Simultaneous device usage : Unlimited

Text-to-Speech : Enabled

Screen Reader : Supported

Enhanced typesetting : Enabled

X-Ray : Not Enabled

Word Wise : Not Enabled

Print length : 527 pages

Customers say

Customers find the book comprehensive, bridging theory and practice. They say it provides a balanced introduction to both PyTorch and TensorFlow. Readers also appreciate the vibrant illustrations and well-designed book.

AI-generated from the text of customer reviews

Deep learning has transformed artificial intelligence and machine learning, allowing machines to learn complex patterns from data and make decisions based on them. In this post, we will delve into the world of deep learning and explore how to build machine learning systems using popular frameworks like PyTorch and TensorFlow.

At the core of deep learning are neural networks, computational models loosely inspired by the structure and function of the human brain. Common types include Convolutional Neural Networks (CNN), Deep Neural Networks (DNN), Graph Neural Networks (GNN), Recurrent Neural Networks (RNN), Artificial Neural Networks (ANN), Long Short-Term Memory (LSTM) networks, and Generative Adversarial Networks (GAN).

CNNs are commonly used for image recognition, GNNs for graph-structured data, RNNs for sequential data, LSTMs (a type of RNN) for sequence prediction, and GANs for generating new data samples. ANN is the umbrella term for all of these models, and DNN refers to any ANN with many layers, often a deep fully connected network used for general-purpose tasks.

In addition to neural networks, deep learning is also widely used in Natural Language Processing (NLP), which focuses on the interaction between computers and human language. NLP tasks include sentiment analysis, machine translation, text generation, and more. PyTorch and TensorFlow provide powerful tools and libraries for building deep learning models for NLP tasks.
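Before text reaches an LSTM or other neural model for a task such as sentiment analysis, it is usually converted into fixed-length sequences of integer ids. A minimal sketch, assuming simple lowercase whitespace tokenization and reserved ids 0 (padding) and 1 (unknown word), both illustrative choices; production pipelines typically use their framework's tokenizers or subword tokenizers instead.

```python
from typing import Dict, List

PAD, UNK = 0, 1  # reserved ids (illustrative convention)


def build_vocab(texts: List[str]) -> Dict[str, int]:
    """Assign each new lowercase token the next free id, starting after the reserved ids."""
    vocab: Dict[str, int] = {}
    for text in texts:
        for tok in text.lower().split():
            if tok not in vocab:
                vocab[tok] = len(vocab) + 2
    return vocab


def encode(text: str, vocab: Dict[str, int], max_len: int) -> List[int]:
    """Map tokens to ids (UNK if unseen), then truncate or pad to max_len."""
    ids = [vocab.get(tok, UNK) for tok in text.lower().split()][:max_len]
    return ids + [PAD] * (max_len - len(ids))


vocab = build_vocab(["great movie", "terrible movie"])  # {'great': 2, 'movie': 3, 'terrible': 4}
print(encode("Great plot movie", vocab, max_len=5))      # [2, 1, 3, 0, 0]
```

An embedding layer would then map each id to a vector, and the resulting sequence would be fed to the LSTM.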

By understanding the fundamentals of deep learning and mastering frameworks like PyTorch and TensorFlow, you can unlock the potential of building intelligent machine learning systems that can learn from data and make informed decisions. Stay tuned for more in-depth articles on each type of neural network and NLP tasks in the upcoming posts.

#Understanding #Deep #Learning #Building #Machine #Learning #Systems #PyTorch #TensorFlow #Neural #Networks #CNN #DNN #GNN #RNN #ANN #LSTM #GAN #Natural #Language #Processing #NLP