Tag: LSTMs

From RNNs to LSTMs: A Comprehensive Guide to Sequential Data Processing

Recurrent Neural Networks (RNNs) have long been a popular choice for processing sequential data in machine learning applications. However, they struggle to learn long-term dependencies in sequences. Long Short-Term Memory (LSTM) networks were introduced to address this limitation and have since become a widely used model for sequential data processing.

In this guide, we discuss the transition from RNNs to LSTMs and explore the key differences between the two models.

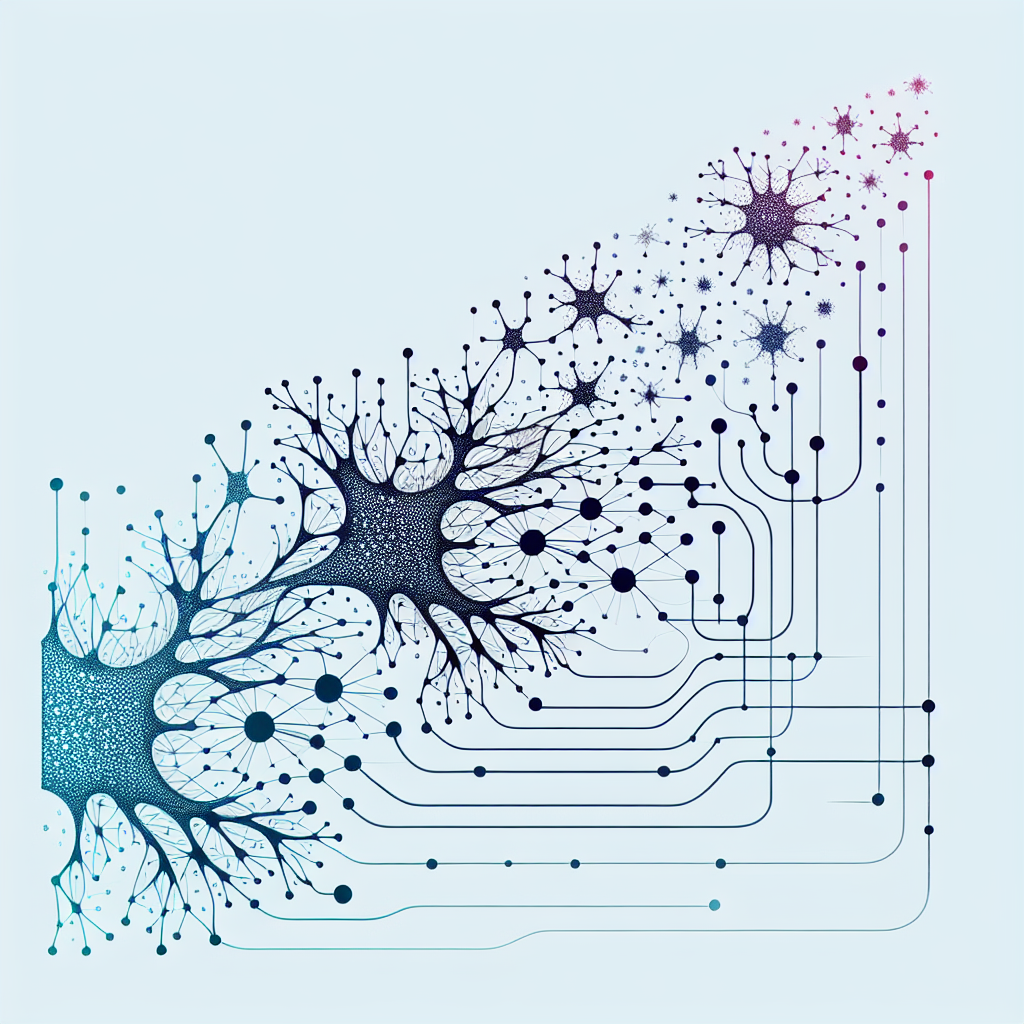

RNNs are designed to process sequential data by maintaining a hidden state that captures information from previous time steps. This hidden state is updated at each time step using the input at that time step and the previous hidden state. While RNNs are effective at capturing short-term dependencies in sequences, they struggle with long-term dependencies due to the vanishing gradient problem. The gradient of the error with respect to an early time step is a product with one factor per intervening step. When those factors are smaller than one, the product shrinks exponentially with the distance, so the parameters receive almost no learning signal from inputs far in the past.
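The hidden-state update described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the names `rnn_step`, `rnn_forward`, `W_xh`, `W_hh`, and `b_h`, the `tanh` activation, and the layer sizes are all assumptions chosen for the example.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One vanilla RNN update: h_t = tanh(W_xh x_t + W_hh h_prev + b_h)."""
    return np.tanh(W_xh @ x_t + W_hh @ h_prev + b_h)

def rnn_forward(xs, h0, W_xh, W_hh, b_h):
    """Apply rnn_step along a sequence and return every hidden state."""
    h = h0
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return np.stack(states)

# Illustrative sizes and random weights (assumptions, not trained values).
rng = np.random.default_rng(0)
input_size, hidden_size, seq_len = 3, 4, 5
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)
xs = rng.normal(size=(seq_len, input_size))

states = rnn_forward(xs, np.zeros(hidden_size), W_xh, W_hh, b_h)
print(states.shape)  # (5, 4): one hidden state per time step
```

The same weights are reused at every step, which is why the gradient with respect to an early step contains repeated factors of `W_hh`.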

LSTMs were introduced as a solution to the vanishing gradient problem in RNNs. LSTMs are themselves recurrent networks, but each unit has a more complex structure than a plain RNN unit, with additional gates that control the flow of information. These gates are the input gate, forget gate, and output gate, which regulate the flow of information into, within, and out of the LSTM cell. The key innovation of LSTMs is the cell state, a separate memory that is updated additively, which allows the network to maintain long-term dependencies by selectively updating and forgetting information.

The input gate controls how much of the new input should be added to the cell state, the forget gate determines which information from the previous cell state should be discarded, and the output gate decides how much of the cell state should be used to generate the output at the current time step. By carefully managing the flow of information through these gates, LSTMs can effectively capture long-term dependencies in sequences.
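To make the three gates concrete, here is a minimal NumPy sketch of a single LSTM step using the commonly cited formulation. The function name `lstm_step`, the stacked parameter layout (`W`, `U`, `b` holding the input gate, forget gate, output gate, and candidate blocks in that order), and the sizes are assumptions made for this example; libraries order and store these parameters differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM update with stacked parameters (assumed layout: i, f, o, g)."""
    n = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b        # pre-activations, shape (4 * n,)
    i = sigmoid(z[0 * n:1 * n])         # input gate: how much new content to write
    f = sigmoid(z[1 * n:2 * n])         # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * n:3 * n])         # output gate: how much cell state to expose
    g = np.tanh(z[3 * n:4 * n])         # candidate values to write
    c_t = f * c_prev + i * g            # additive cell-state update
    h_t = o * np.tanh(c_t)              # hidden state / output at this step
    return h_t, c_t

# Illustrative sizes and random weights (assumptions, not trained values).
rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4
W = rng.normal(scale=0.5, size=(4 * hidden_size, input_size))
U = rng.normal(scale=0.5, size=(4 * hidden_size, hidden_size))
b = np.zeros(4 * hidden_size)

h, c = np.zeros(hidden_size), np.zeros(hidden_size)
for x_t in rng.normal(size=(6, input_size)):
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (4,) (4,)
```

Note that the forget gate multiplies the previous cell state while the input gate scales what is added to it, which matches the division of labor described above.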

In practice, LSTMs have been shown to outperform plain RNNs on a wide range of sequential data processing tasks, including speech recognition, language modeling, and time series forecasting. The ability of LSTMs to capture long-term dependencies makes them particularly well-suited for tasks that involve processing sequences with complex temporal structures.

In conclusion, LSTMs advanced sequential data processing by addressing the main limitation of plain RNNs and making long-term dependencies learnable in practice. By understanding the key differences between RNNs and LSTMs, you can choose the right model for your specific application and achieve better performance in sequential data processing tasks.

The Evolution of Recurrent Neural Networks: From RNNs to LSTMs and GRUs

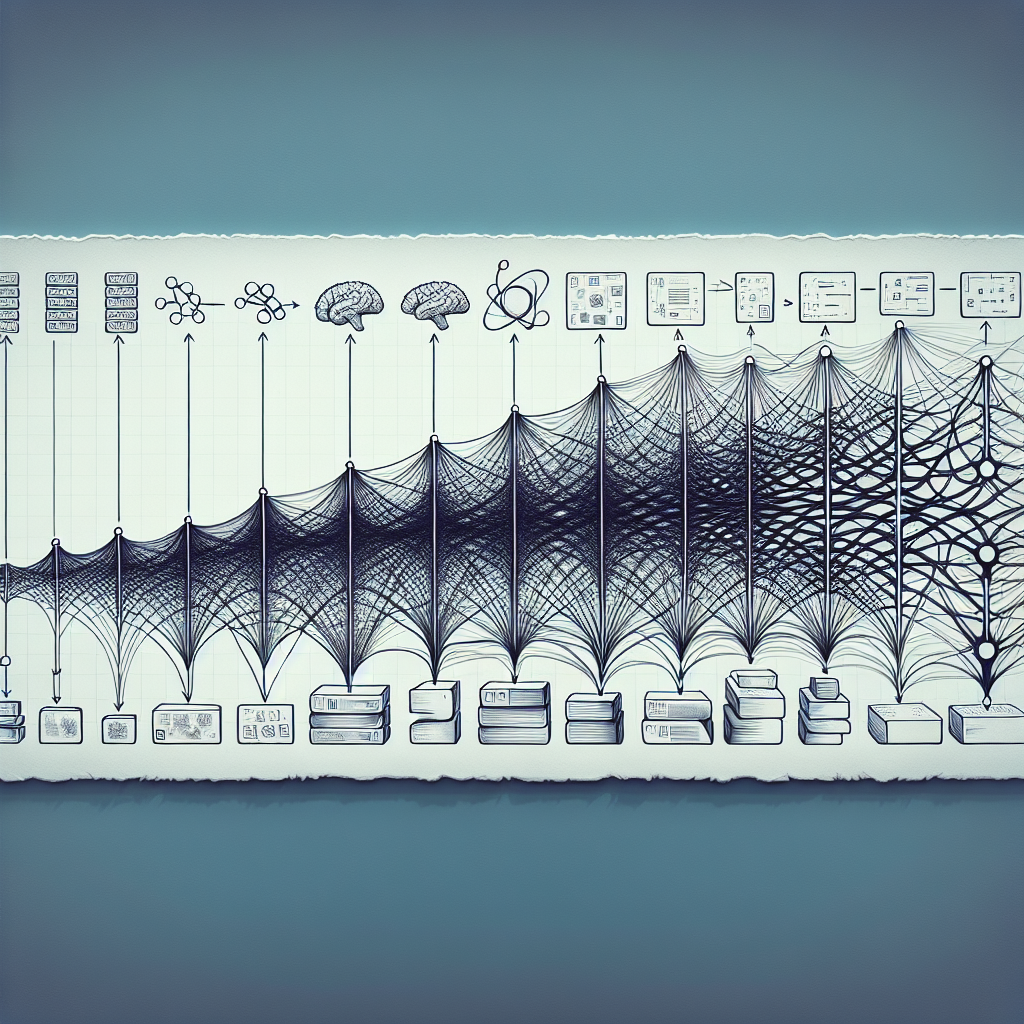

Recurrent Neural Networks (RNNs) have been a popular choice for sequential data processing tasks such as natural language processing, speech recognition, and time series prediction. However, traditional RNNs suffer from the vanishing gradient problem, where gradients diminish exponentially as they are backpropagated through time, leading to difficulties in learning long-term dependencies.

To address this issue, Long Short-Term Memory (LSTM) networks were introduced by Hochreiter and Schmidhuber in 1997. LSTMs are a type of RNN architecture that includes memory cells and gating mechanisms to better capture long-range dependencies in sequential data. The memory cells can store information for long periods of time, and the gating mechanisms control the flow of information in and out of the cells. This allows LSTMs to learn long-term dependencies more effectively than traditional RNNs.

Another variation of RNNs that has gained popularity is the Gated Recurrent Unit (GRU), introduced by Cho et al. in 2014. GRUs are similar to LSTMs in that they also include gating mechanisms, but they have a simpler architecture with fewer parameters: two gates and no separate cell state. This typically makes GRUs somewhat faster to train and cheaper to run than LSTMs of the same hidden size, while still being able to capture long-term dependencies in sequential data.
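The "fewer parameters" claim can be checked with simple arithmetic. The sketch below assumes each gate or candidate block has an input weight matrix, a recurrent weight matrix, and one bias vector, with four such blocks in an LSTM layer and three in a GRU layer. The helper name `gated_rnn_params` and the example sizes are hypothetical, and some frameworks store an extra bias per block, which changes the totals slightly.

```python
def gated_rnn_params(input_size, hidden_size, num_blocks):
    """Parameter count for a gated recurrent layer.

    Each block has hidden_size x input_size input weights,
    hidden_size x hidden_size recurrent weights, and hidden_size biases.
    """
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return num_blocks * per_block

input_size, hidden_size = 128, 256      # illustrative sizes
lstm_params = gated_rnn_params(input_size, hidden_size, num_blocks=4)
gru_params = gated_rnn_params(input_size, hidden_size, num_blocks=3)

print(lstm_params)               # 394240
print(gru_params)                # 295680
print(gru_params / lstm_params)  # 0.75
```

Under these assumptions a GRU layer has three quarters of the parameters of an LSTM layer with the same input and hidden sizes.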

Both LSTMs and GRUs have been shown to outperform traditional RNNs in a variety of tasks, including language modeling, machine translation, and speech recognition. Their ability to learn long-term dependencies has made them essential tools in the field of deep learning.

In conclusion, the evolution of recurrent neural networks from traditional RNNs to LSTMs and GRUs has significantly improved their ability to capture long-term dependencies in sequential data. These advancements have led to breakthroughs in a wide range of applications and have established LSTMs and GRUs as standard models for sequential data processing tasks.

From Vanilla RNNs to LSTMs: A Journey through Recurrent Neural Network Architectures

Recurrent Neural Networks (RNNs) have been widely used in natural language processing, speech recognition, and machine translation. However, vanilla RNNs have limitations in capturing long-term dependencies in sequential data due to the vanishing gradient problem. To address this issue, more advanced RNN architectures like Long Short-Term Memory (LSTM) networks have been developed.

The journey from vanilla RNNs to LSTMs represents a significant advancement in the field of deep learning. Vanilla RNNs are simple neural networks with a single hidden layer and a feedback loop that allows them to process sequential data. Their weakness is that gradients become very small as they are backpropagated through time, which makes long-term dependencies hard to learn.

LSTMs were introduced in 1997 by Sepp Hochreiter and Jürgen Schmidhuber as a solution to the vanishing gradient problem. LSTMs have a more complex architecture with additional components called “gates” that control the flow of information through the network. The original design had an input gate and an output gate; the forget gate was added shortly afterwards by Gers, Schmidhuber, and Cummins (2000). Together, the three gates allow the network to selectively remember or forget information at each time step.

The key innovation of LSTMs is the use of a cell state that runs through every time step and is updated additively. When the forget gate is close to one, information in the cell state passes from one time step to the next almost unchanged. This helps LSTMs to capture long-term dependencies in sequential data and makes them more effective in tasks like language modeling, speech recognition, and machine translation.
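A small numerical sketch shows how the forget gate determines what survives in the cell state. It isolates the cell-state recurrence `c_t = f_t * c_{t-1} + write_t`, where `write_t` stands in for the input-gate term. The function name `carry_cell_state`, the fixed gate values, and the 100-step horizon are assumptions for illustration; in a trained LSTM the gates vary per step and per unit.

```python
import numpy as np

def carry_cell_state(c0, forget_gates, writes):
    """Apply c_t = f_t * c_{t-1} + write_t for each time step."""
    c = c0
    for f_t, w_t in zip(forget_gates, writes):
        c = f_t * c + w_t
    return c

steps = 100
c0 = np.array([1.0, -2.0])
no_writes = np.zeros((steps, 2))   # input gate closed: nothing new is written

# Forget gate fully open: the initial content is carried through untouched.
kept = carry_cell_state(c0, np.full((steps, 2), 1.0), no_writes)

# Forget gate at 0.9: the initial content decays by a factor of 0.9 per step.
decayed = carry_cell_state(c0, np.full((steps, 2), 0.9), no_writes)

print(kept)
print(decayed)
```

With the gate at one the stored values are preserved over all 100 steps, while a gate of 0.9 shrinks them by `0.9 ** 100`, so how long information persists is something the network controls through its gates.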

Since then, related architectures have built on these ideas. Gated Recurrent Units (GRUs) simplify the gating scheme, and Bidirectional LSTMs process the sequence in both directions. Both have performed well in tasks like sentiment analysis, named entity recognition, and sequence prediction, although which variant works best depends on the task and the data.

Overall, the journey from vanilla RNNs to LSTMs represents a significant advancement in the field of deep learning, allowing researchers and practitioners to build more powerful and effective models for processing sequential data. As the field continues to evolve, we can expect to see even more innovative architectures and techniques that push the boundaries of what is possible with recurrent neural networks.

From RNNs to LSTMs: The Evolution of Recurrent Neural Networks

Recurrent Neural Networks (RNNs) have been a staple in the field of deep learning for many years, allowing for the processing of sequential data such as time series or natural language. However, as researchers and practitioners have delved deeper into the capabilities and limitations of RNNs, a new and improved model has emerged: Long Short-Term Memory networks (LSTMs).

RNNs are designed to handle sequential data by maintaining a hidden state that evolves over time as new inputs are fed into the network. This hidden state allows RNNs to capture dependencies between elements in the sequence, making them well-suited for tasks such as time series prediction, language modeling, and machine translation. However, RNNs suffer from the vanishing gradient problem, which hinders their ability to learn long-range dependencies in sequences.

LSTMs were introduced in 1997 by Hochreiter and Schmidhuber as a solution to the vanishing gradient problem in RNNs. The now-standard LSTM is a type of RNN architecture that includes a memory cell and three gates: the input gate, forget gate, and output gate. These gates control the flow of information in and out of the memory cell, allowing LSTMs to retain important information over long periods of time and to greatly reduce the vanishing gradient problem.

The practical benefit of this design is the ability to learn long-range dependencies in sequences, which is essential for tasks such as language modeling and machine translation. LSTMs have been successfully applied to a wide range of tasks, including speech recognition, handwriting recognition, and sentiment analysis.

LSTMs have also been shown to outperform traditional RNNs on a variety of benchmarks, making them a popular choice for researchers and practitioners working with sequential data.

In conclusion, the evolution of recurrent neural networks from RNNs to LSTMs represents a significant advancement in the field of deep learning. LSTMs have proven to be highly effective at capturing long-range dependencies in sequences, making them a powerful tool for a wide range of applications. As researchers continue to push the boundaries of deep learning, it is likely that we will see further advancements in the field of recurrent neural networks in the years to come.

From RNNs to LSTMs: An Evolution in Neural Networks

Neural networks for sequential data have come a long way, evolving from simple Recurrent Neural Networks (RNNs) to more sophisticated architectures like Long Short-Term Memory (LSTM) networks. This evolution has been driven by the need for better performance in tasks such as natural language processing and speech recognition.

RNNs were one of the earliest types of neural networks used for sequential data processing. They are designed to capture temporal dependencies in data by passing information from one time step to the next. While RNNs were effective in many tasks, they suffered from the vanishing gradient problem, which made it difficult for them to capture long-range dependencies in data.

To address this issue, researchers introduced LSTM networks, which are a type of RNN with a more complex architecture. LSTMs have a unique memory cell that allows them to retain information over long periods of time, making them better at capturing long-range dependencies in data. This makes them particularly well-suited for tasks like language modeling, where context from earlier in a sentence is crucial for understanding the meaning of later words.

One of the key innovations in LSTM networks is the introduction of gates, which control the flow of information in and out of the memory cell. These gates include the input gate, forget gate, and output gate, which regulate the amount of information that is stored or discarded at each time step. By carefully controlling the flow of information, LSTMs are able to learn complex patterns in data and make more accurate predictions.

In recent years, LSTMs have become the go-to architecture for many sequential data processing tasks. Their ability to capture long-range dependencies, coupled with their effectiveness in handling vanishing gradients, has made them a popular choice for tasks like machine translation, sentiment analysis, and speech recognition. Researchers continue to explore ways to improve the performance of LSTMs, with ongoing research focusing on optimizing the structure of the memory cell and introducing new types of gates.

Overall, the evolution from RNNs to LSTMs represents a major advancement in the field of neural networks. By addressing the limitations of earlier models and introducing new techniques for capturing long-range dependencies, LSTMs have paved the way for more advanced applications in artificial intelligence. As researchers continue to push the boundaries of neural network design, it is likely that even more sophisticated architectures will be developed in the future, further improving the performance of AI systems.

The Evolution of Recurrent Neural Networks: From Vanilla RNNs to LSTMs and GRUs

Recurrent Neural Networks (RNNs) have become a popular choice for tasks that involve sequential data, such as speech recognition, language modeling, and machine translation. The ability of RNNs to capture temporal dependencies makes them well-suited for these kinds of tasks. However, vanilla RNNs have some limitations that can hinder their performance on long sequences. To address these limitations, more sophisticated RNN architectures, such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), have been developed.

Vanilla RNNs suffer from the vanishing gradient problem, which occurs when the gradients become too small during backpropagation, making it difficult for the network to learn long-term dependencies. This problem arises because backpropagation through time multiplies the gradient by a similar factor (the recurrent weights scaled by the activation's derivative) at every time step. If that factor is consistently smaller than one the gradient vanishes, and if it is consistently larger than one the gradient explodes. As a result, vanilla RNNs struggle to capture long-range dependencies in the data.
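The repeated multiplication can be illustrated with the simplest possible case: a scalar linear recurrence `h_t = w * h_{t-1}`, for which the gradient of the last state with respect to the first is `w` raised to the number of steps. This is a deliberately simplified sketch; the function name `gradient_through_time`, the weights tried, and the 50-step horizon are assumptions, and a real RNN multiplies by a matrix and an activation derivative instead of a single scalar.

```python
def gradient_through_time(w, steps):
    """d h_T / d h_0 for the linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        grad *= w   # the chain rule contributes one factor of w per time step
    return grad

for w in (0.5, 1.0, 1.5):
    print(w, gradient_through_time(w, steps=50))
```

A weight of 0.5 drives the gradient toward zero over 50 steps, 1.5 makes it grow by many orders of magnitude, and only a factor of exactly one keeps it stable.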

LSTMs were introduced by Hochreiter and Schmidhuber in 1997 to address the vanishing gradient problem in vanilla RNNs. LSTMs have a more complex architecture with an additional memory cell and several gates that control the flow of information. The forget gate, a later addition to the 1997 design, allows the network to decide what information to discard from the memory cell, while the input gate decides what new information to store in the memory cell. The output gate then controls how much of the memory cell is exposed as the hidden state, which serves as the output at the current time step and is passed on to the next one. This gating mechanism enables LSTMs to learn long-term dependencies more effectively compared to vanilla RNNs.

GRUs, introduced by Cho et al. in 2014, are a simplified gated architecture that also aims to address the vanishing gradient problem. A GRU has no separate memory cell: the hidden state itself acts as the memory. A single update gate plays the role of the LSTM's forget and input gates, deciding how much of the previous hidden state to keep and how much to replace with new content, and a reset gate controls how much of the previous state is used when computing that new content. This simplification results in a more computationally efficient architecture compared to LSTMs, often with similar performance. GRUs have gained popularity due to their simplicity and effectiveness in capturing long-term dependencies in sequential data.
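As a rough illustration of the update gate, here is a minimal NumPy sketch of one GRU step. It follows the convention in which the update gate `z` weights the previous state (`h_t = z * h_prev + (1 - z) * h_cand`); some papers and libraries swap the roles of `z` and `1 - z`. The names `gru_step` and `init_gru`, the parameter tuple layout, and the sizes are assumptions made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, params):
    """One GRU update; params holds (W, U, b) for update, reset, candidate."""
    W_z, U_z, b_z, W_r, U_r, b_r, W_h, U_h, b_h = params
    z = sigmoid(W_z @ x_t + U_z @ h_prev + b_z)              # update gate
    r = sigmoid(W_r @ x_t + U_r @ h_prev + b_r)              # reset gate
    h_cand = np.tanh(W_h @ x_t + U_h @ (r * h_prev) + b_h)   # candidate state
    return z * h_prev + (1.0 - z) * h_cand                   # blend old and new

def init_gru(input_size, hidden_size, rng):
    """Random illustrative parameters (assumptions, not trained values)."""
    def block():
        return (rng.normal(scale=0.5, size=(hidden_size, input_size)),
                rng.normal(scale=0.5, size=(hidden_size, hidden_size)),
                np.zeros(hidden_size))
    return block() + block() + block()

rng = np.random.default_rng(0)
params = init_gru(input_size=3, hidden_size=4, rng=rng)
h = np.zeros(4)
for x_t in rng.normal(size=(6, 3)):
    h = gru_step(x_t, h, params)
print(h.shape)  # (4,)
```

Because one gate sets both how much is kept and how much is replaced, the GRU needs only three weight blocks where the LSTM needs four.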

In conclusion, the evolution of RNN architectures from vanilla RNNs to LSTMs and GRUs has significantly improved the ability of neural networks to model sequential data. These more sophisticated architectures have overcome the limitations of vanilla RNNs and are now widely used in various applications such as language modeling, speech recognition, and machine translation. With ongoing research and advancements in RNN architectures, we can expect further improvements in capturing long-term dependencies and enhancing the performance of sequential data tasks.

Advanced Forecasting with Python: With State-of-the-Art-Models Including LSTMs, Facebook’s Prophet, and Amazon’s DeepAR

In the world of forecasting, advanced predictive models are essential for making accurate predictions and staying ahead of the competition. In this post, we will explore some of the state-of-the-art forecasting models available in Python, including LSTMs, Facebook’s Prophet, and Amazon’s DeepAR.

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network that is well-suited for time series forecasting. LSTMs are capable of learning long-term dependencies in data, which helps when future values depend on patterns that span many past time steps. By training an LSTM model on windows of historical data, you can generate forecasts that take into account complex relationships and nonlinear trends.
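Before an LSTM can be trained on historical data, the series usually has to be cut into fixed-length input windows paired with the values that follow them. The sketch below shows one common way to do this with NumPy. The function name `make_windows`, the `lookback` and `horizon` parameters, and the toy series are assumptions for illustration and are not taken from the book.

```python
import numpy as np

def make_windows(series, lookback, horizon=1):
    """Split a 1-D series into (inputs, targets) pairs for supervised training.

    Each input holds `lookback` consecutive values and each target holds the
    `horizon` values that immediately follow it.
    """
    series = np.asarray(series, dtype=float)
    n = len(series) - lookback - horizon + 1
    if n <= 0:
        raise ValueError("series is too short for this lookback and horizon")
    X = np.stack([series[i:i + lookback] for i in range(n)])
    y = np.stack([series[i + lookback:i + lookback + horizon] for i in range(n)])
    # Add a trailing feature axis: (samples, time steps, features).
    return X[..., np.newaxis], y

series = np.arange(10)              # toy data: 0, 1, ..., 9
X, y = make_windows(series, lookback=3)
print(X.shape, y.shape)             # (7, 3, 1) (7, 1)
print(X[0].ravel(), y[0])           # [0. 1. 2.] [3.]
```

The three-dimensional input shape (samples, time steps, features) is the layout that recurrent layers in common deep learning libraries generally expect for batched sequences.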

Facebook’s Prophet is another forecasting tool that is popular among data scientists and analysts. Prophet fits an additive model that combines a trend with yearly, weekly, and daily seasonality and holiday effects, and it is designed for time series with strong seasonal patterns and shifting trends. This makes it a practical choice for forecasting tasks driven by calendar patterns, especially when several seasons of history are available.

Amazon’s DeepAR is a deep learning model specifically designed for time series forecasting. DeepAR uses an autoregressive recurrent network, typically built from LSTM cells, to capture complex patterns in the data. Instead of predicting a single value per time step, it predicts the parameters of a probability distribution and produces forecasts by sampling from it, allowing you to quantify the uncertainty in your predictions and make more informed decisions.
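The idea of probabilistic forecasting can be sketched without any forecasting library. The example below assumes a model has already produced a mean and standard deviation for each future step, scores them with a Gaussian negative log-likelihood, and turns samples into quantile bands. It is not DeepAR's actual API: the function names, the Gaussian choice, and the predicted values are hypothetical and used only to illustrate the concept.

```python
import numpy as np

def gaussian_nll(y, mu, sigma):
    """Average negative log-likelihood of y under N(mu, sigma^2)."""
    return np.mean(0.5 * np.log(2 * np.pi * sigma ** 2)
                   + (y - mu) ** 2 / (2 * sigma ** 2))

def forecast_quantiles(mu, sigma, quantiles=(0.1, 0.5, 0.9),
                       num_samples=2000, seed=0):
    """Sample from the predicted distributions and summarize as quantiles."""
    rng = np.random.default_rng(seed)
    samples = rng.normal(mu, sigma, size=(num_samples, len(mu)))
    return np.quantile(samples, quantiles, axis=0)

# Hypothetical model outputs for three future time steps.
mu = np.array([10.0, 11.0, 12.0])
sigma = np.array([1.0, 1.5, 2.0])

bands = forecast_quantiles(mu, sigma)
print(bands.shape)   # (3, 3): one row per quantile, one column per step
print(gaussian_nll(np.array([10.5, 10.0, 13.0]), mu, sigma))
```

The widening gap between the lower and upper quantiles reflects the growing `sigma`, which is how such a forecast communicates that uncertainty increases further into the future.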

By leveraging these advanced forecasting models in Python, you can take your predictive analytics to the next level and gain a competitive edge in your industry. Whether you are forecasting sales, demand, or any other time series data, these state-of-the-art models can help you make more accurate and reliable predictions. So why wait? Start exploring these advanced forecasting techniques today and unlock the power of predictive analytics in your business.

Generative AI with Python and TensorFlow 2: Create images, text, and music with VAEs, GANs, LSTMs, Transformer models

Publisher: Packt Publishing (April 30, 2021)

Language: English

Paperback: 488 pages

ISBN-10: 1800200889

ISBN-13: 978-1800200883

Item Weight: 1.87 pounds

Dimensions: 9.25 x 7.52 x 1.01 inches

In this post, we will explore the exciting world of generative artificial intelligence using Python and TensorFlow 2. We will dive into the capabilities of Variational Autoencoders (VAEs), Generative Adversarial Networks (GANs), Long Short-Term Memory networks (LSTMs), and Transformer models to create images, text, and music.

Generative AI has revolutionized the way we think about creating content, allowing us to generate new and unique outputs based on patterns learned from existing data. With the power of TensorFlow 2, we can harness the potential of these advanced AI models to unleash our creativity.

We will start by understanding the basics of each model and how they can be used for generative tasks. We will then delve into hands-on examples, showing you how to implement these models in Python using TensorFlow 2. From generating realistic images to composing music, the possibilities are endless with generative AI.

Whether you are a beginner or an experienced AI practitioner, this post will provide you with the knowledge and tools to start creating your own generative content. So grab your Python IDE, fire up TensorFlow 2, and let’s dive into the fascinating world of generative AI.