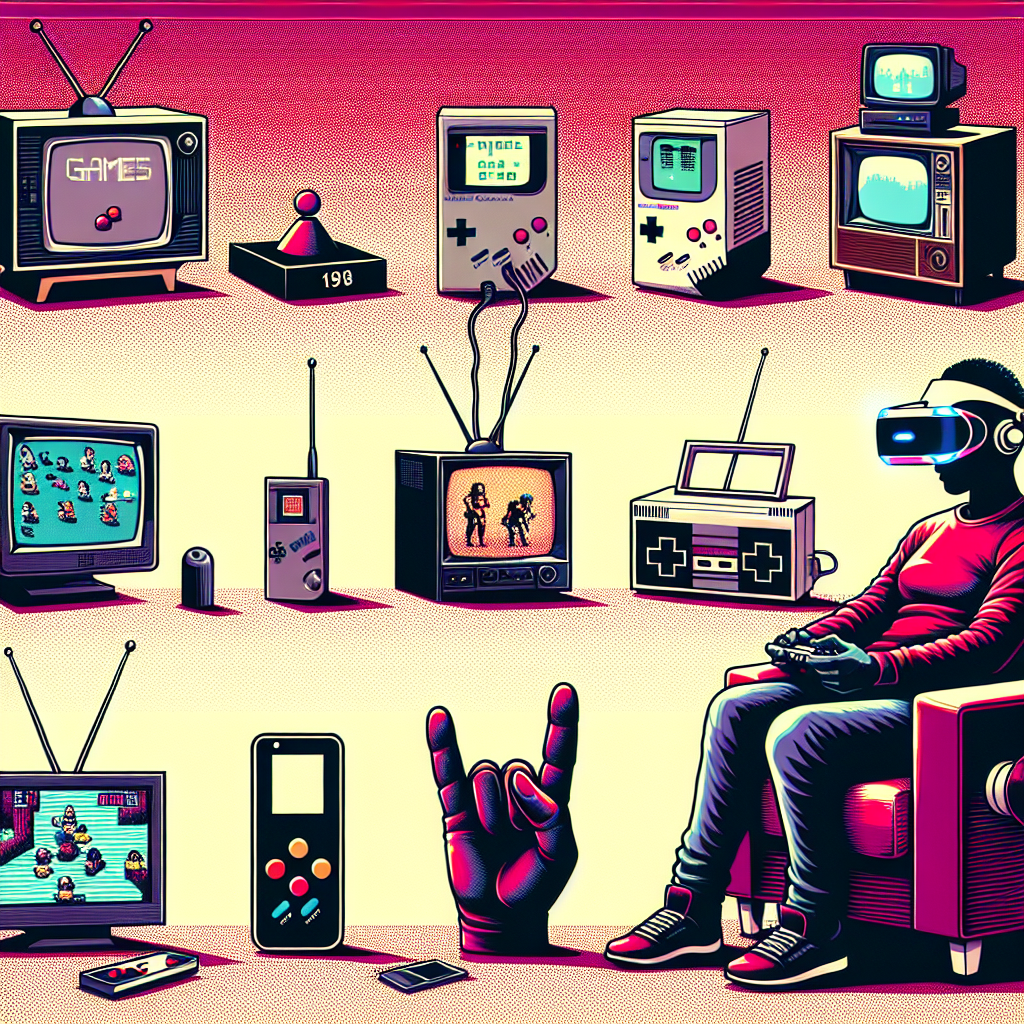

Gaming has come a long way since 1972, when Pong, widely regarded as the first commercially successful video game, was released. Over the past five decades, the industry has been transformed by advances in technology and design that have reshaped how we play and interact with games.

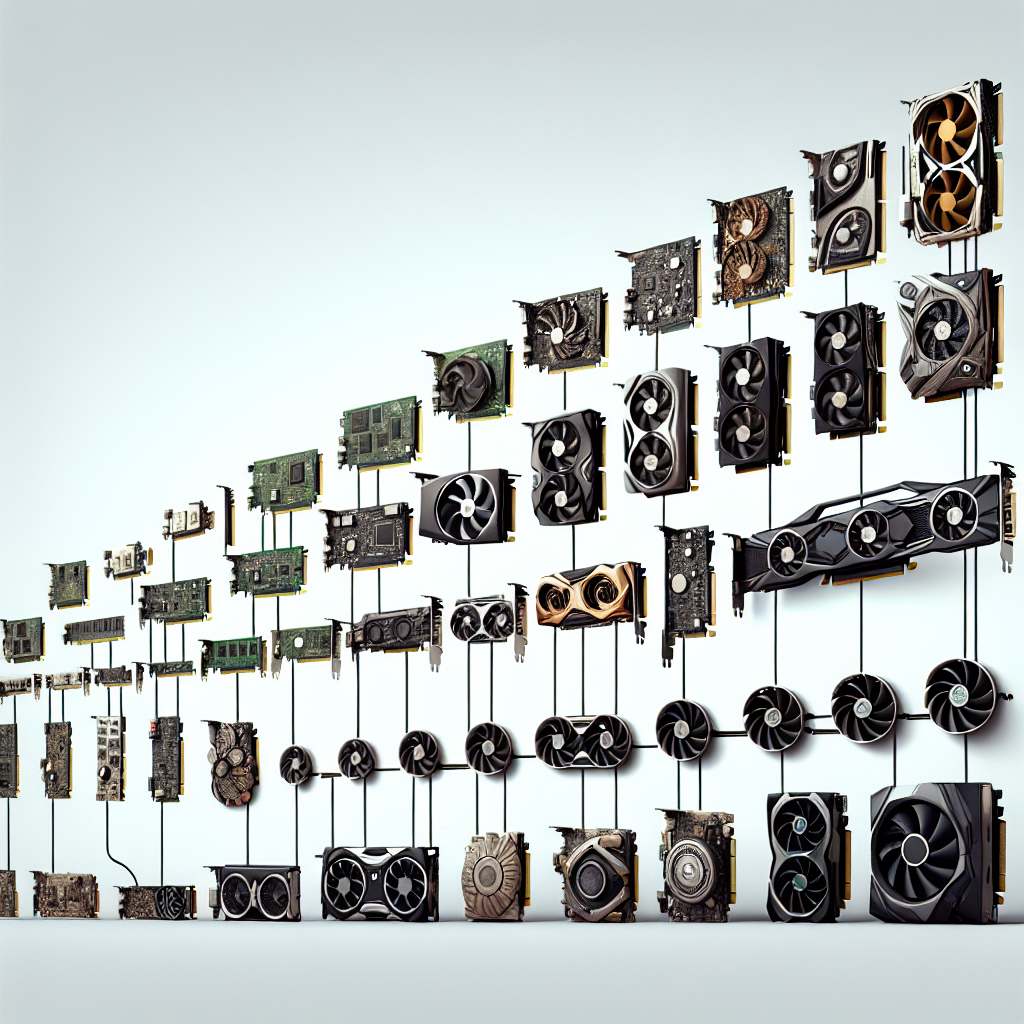

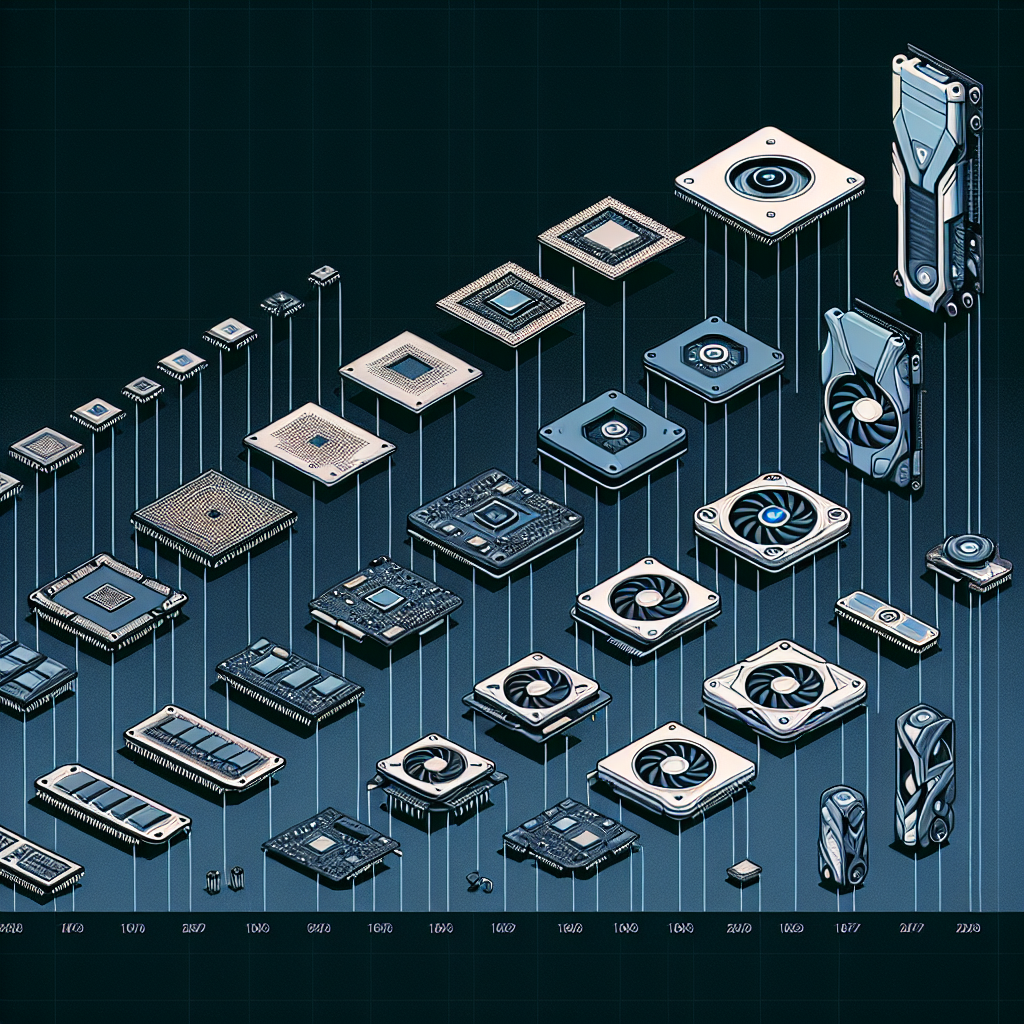

In the early days of gaming, players were limited to simple, pixelated graphics and basic gameplay mechanics. As technology progressed, so did the capabilities of video games. Home consoles such as the Atari 2600 (1977) and the Nintendo Entertainment System (released in North America in 1985) ushered in a new era, bringing more complex games and improved graphics into living rooms.

The 1990s saw the rise of 3D graphics and more immersive gameplay, with titles like Super Mario 64 and The Legend of Zelda: Ocarina of Time pushing the boundaries of what games could do. Meanwhile, the CD-ROM offered far more storage than the cartridges used by earlier systems, allowing for larger game worlds and more detailed graphics.

The early 2000s brought online gaming into the mainstream, with titles like World of Warcraft and Halo 2 changing the way people played. Online multiplayer let players connect with others around the world, building communities and rivalries on a scale that had not been possible before.

In recent years, virtual reality has opened a new frontier for gaming. Headsets such as the Oculus Rift and HTC Vive, both released to consumers in 2016, let players step inside virtual worlds rather than simply view them on a screen. This technology has given developers new ways to create immersive, interactive experiences.

Looking ahead, technologies such as augmented reality and artificial intelligence are poised to shape the next generation of games. With each new advance, we can expect even more innovative and exciting games that will continue to captivate players for years to come.

In conclusion, the evolution of gaming from Pong to virtual reality has been remarkable. Each leap in technology has expanded what games can be and created new experiences for players around the world. We can only imagine what innovations lie ahead.