Harnessing the Potential of the Nvidia Tesla V100 GPU Accelerator Card for Deep Learning and HPC Applications

Nvidia has been a pioneer in graphics processing units (GPUs) for many years, and its Tesla line of GPU accelerator cards has become a staple of high-performance computing (HPC) and deep learning. The Nvidia Tesla V100, launched in 2017, was one of the most powerful and advanced GPUs available at the time, and its capabilities make it a valuable tool for researchers, data scientists, and engineers working on demanding computational tasks.

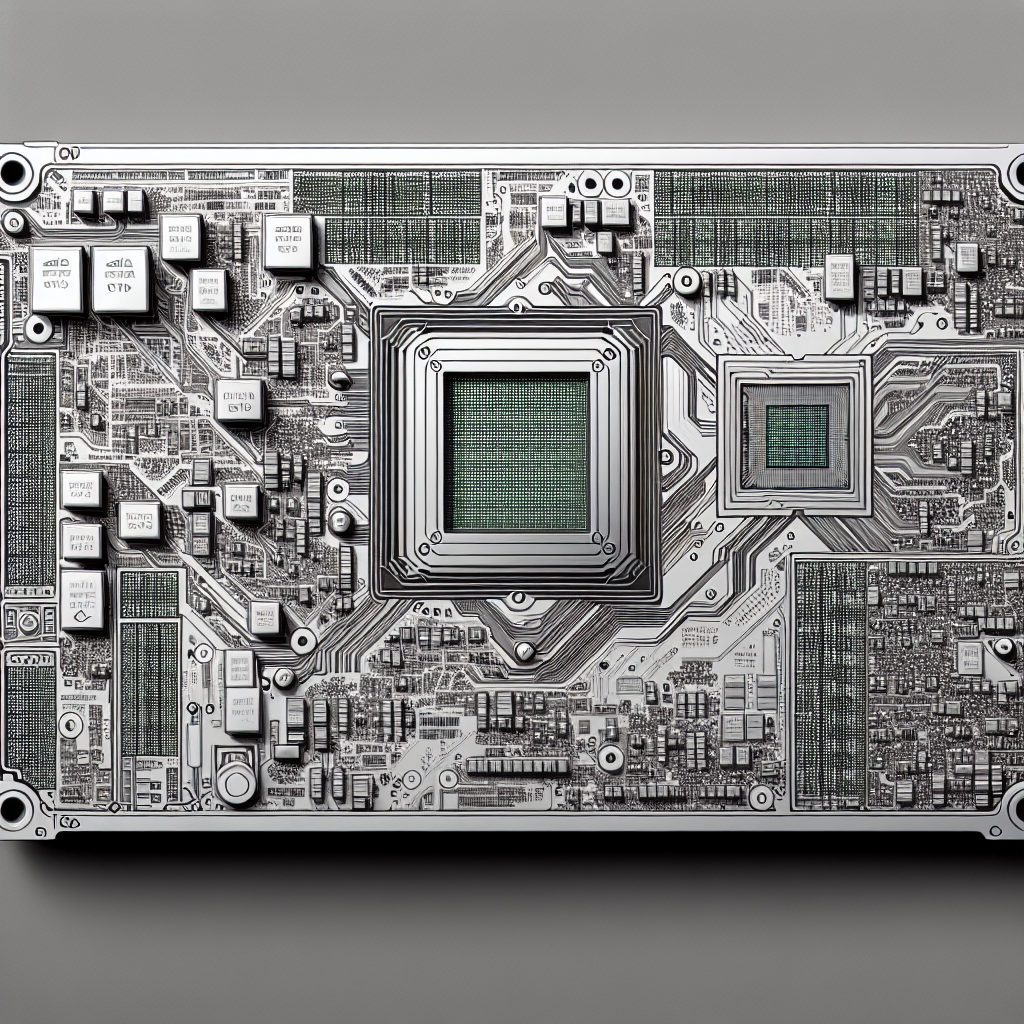

The Tesla V100 is based on Nvidia’s Volta architecture, which adds dedicated Tensor Cores for the matrix multiply-accumulate operations that dominate deep learning and artificial intelligence workloads. With 5,120 CUDA cores and 640 Tensor Cores, the V100 delivers up to 125 teraflops of theoretical peak mixed-precision Tensor Core performance for deep learning. This lets researchers train complex neural networks considerably faster than on the previous generation of GPUs.
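The 125-teraflop figure follows from simple arithmetic on the chip’s resources. The sketch below assumes NVIDIA’s published Volta figures for the SXM2 board: a 1,530 MHz boost clock, 64 fused multiply-adds (FMAs) per Tensor Core per clock, one FMA per CUDA core per clock, and 2,560 FP64 units. These are theoretical peaks, not measured throughput; the lower-clocked PCIe card lands correspondingly lower.

```python
# Theoretical peak-throughput arithmetic for a Tesla V100 (SXM2 variant).
# Assumed NVIDIA-published Volta figures (not measured here):
#   - 1530 MHz boost clock
#   - 64 fused multiply-adds (FMAs) per Tensor Core per clock
#   - 1 FMA per FP32 CUDA core per clock
#   - 2,560 FP64 units (half the FP32 core count)
BOOST_CLOCK_HZ = 1530e6
TENSOR_CORES = 640
CUDA_CORES = 5120
FP64_UNITS = 2560
FMA_PER_TENSOR_CORE_PER_CLOCK = 64
FLOPS_PER_FMA = 2  # one multiply plus one add


def peak_tflops(units: int, fma_per_clock: int, clock_hz: float) -> float:
    """Peak TFLOPS = units x FMAs per clock x 2 FLOPs per FMA x clock rate."""
    return units * fma_per_clock * FLOPS_PER_FMA * clock_hz / 1e12


tensor = peak_tflops(TENSOR_CORES, FMA_PER_TENSOR_CORE_PER_CLOCK, BOOST_CLOCK_HZ)
fp32 = peak_tflops(CUDA_CORES, 1, BOOST_CLOCK_HZ)
fp64 = peak_tflops(FP64_UNITS, 1, BOOST_CLOCK_HZ)

print(f"Tensor Core (mixed precision): {tensor:.1f} TFLOPS")  # 125.3
print(f"FP32 CUDA cores:               {fp32:.1f} TFLOPS")    # 15.7
print(f"FP64 units:                    {fp64:.1f} TFLOPS")    # 7.8
```

The roughly 8x gap between the Tensor Core and FP32 figures is why getting work onto the Tensor Cores matters so much on this card.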

One of the key features of the Tesla V100 is hardware support for mixed-precision computing. Its Tensor Cores multiply 16-bit (FP16) inputs and accumulate the results in 32-bit (FP32) precision, so models can use fast, compact FP16 arithmetic for most operations while keeping FP32 where precision matters. Combined with techniques such as loss scaling, mixed-precision training typically reaches accuracy comparable to full FP32 training in less time. This is particularly useful for researchers working with large datasets or complex neural network architectures.
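Why accumulate in FP32 at all? The sketch below emulates the two number formats on the CPU with Python’s `struct` module (format `"e"` is IEEE half precision, `"f"` is single precision) and compares a dot product whose running sum stays in FP16 with one that accumulates in FP32, as the Tensor Cores do. It also shows the idea behind loss scaling: a gradient too small for FP16 survives if it is scaled up before the cast. The helper names and the scale factor of 65,536 are illustrative choices, not part of any NVIDIA API.

```python
import struct
from typing import Sequence


def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x: float) -> float:
    """Round x to the nearest IEEE 754 single-precision (FP32) value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot_fp16_accumulate(a: Sequence[float], b: Sequence[float]) -> float:
    """FP16 inputs, FP16 products, and an FP16 running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp16(acc + to_fp16(to_fp16(x) * to_fp16(y)))
    return acc


def dot_fp32_accumulate(a: Sequence[float], b: Sequence[float]) -> float:
    """FP16 inputs, with products and the running sum kept in FP32."""
    acc = 0.0
    for x, y in zip(a, b):
        # The product of two FP16 values (11-bit significands) is exact in FP32.
        acc = to_fp32(acc + to_fp32(to_fp16(x) * to_fp16(y)))
    return acc


# Summing 4096 products of 1.0 * 1.0; the exact answer is 4096.
ones = [1.0] * 4096
print(dot_fp16_accumulate(ones, ones))  # 2048.0: FP16 cannot represent 2049, so the sum stalls
print(dot_fp32_accumulate(ones, ones))  # 4096.0

# Loss scaling: a tiny gradient underflows to zero when cast to FP16,
grad = 1e-8
print(to_fp16(grad))                    # 0.0
# but survives if it is scaled up before the cast and unscaled afterwards
# in higher precision (here, a Python float).
scale = 65536.0
print(to_fp16(grad * scale) / scale)    # close to 1e-8
```

Real frameworks apply the scale to the loss, so every gradient computed by backpropagation is scaled, and unscale in FP32 before the weight update; the arithmetic effect is the same as in this toy.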

In addition to its deep learning capabilities, the Tesla V100 is well suited to traditional HPC applications. It offers up to 7.8 teraflops of double-precision (FP64) performance and 16 GB or 32 GB of HBM2 memory with roughly 900 GB/s of bandwidth, which lets it take on a wide range of computational tasks, from running simulations and analyzing complex data sets to performing molecular modeling.
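Whether an HPC code benefits from the V100’s FP64 throughput or is held back by memory traffic depends on its arithmetic intensity, the number of floating-point operations performed per byte moved. A minimal roofline sketch, assuming NVIDIA’s published SXM2 figures of about 7.8 TFLOP/s FP64 and about 900 GB/s of HBM2 bandwidth, compares a bandwidth-bound DAXPY with a compute-bound DGEMM and sizes the largest square FP64 matrix that fits in 16 GiB (ignoring memory reserved by the driver and runtime). The DGEMM traffic estimate assumes each matrix element crosses the memory bus only once, which is an idealized lower bound.

```python
import math

# Assumed NVIDIA-published V100 SXM2 figures (not measured here).
PEAK_FP64_FLOPS = 7.8e12  # FP64 peak, FLOP/s
HBM2_BANDWIDTH = 900e9    # memory bandwidth, bytes/s
FP64_BYTES = 8


def attainable_flops(intensity: float) -> float:
    """Roofline model: performance is capped by compute or by memory traffic."""
    return min(PEAK_FP64_FLOPS, intensity * HBM2_BANDWIDTH)


def largest_square_fp64(capacity_bytes: int) -> int:
    """Largest n such that a single n x n FP64 matrix fits in capacity_bytes."""
    return math.isqrt(capacity_bytes // FP64_BYTES)


# Ridge point: the intensity (FLOP/byte) at which the two limits meet.
ridge = PEAK_FP64_FLOPS / HBM2_BANDWIDTH

# DAXPY (y = a*x + y): 2 FLOPs per element; reads x and y, writes y -> 24 bytes.
daxpy_intensity = 2 / (3 * FP64_BYTES)

# DGEMM on n x n matrices: 2n^3 FLOPs over (ideally) 3n^2 elements of traffic.
n = 8192
dgemm_intensity = (2 * n**3) / (3 * n**2 * FP64_BYTES)

print(f"ridge point: {ridge:.2f} FLOP/byte")
print(f"DAXPY: {daxpy_intensity:.3f} FLOP/byte -> "
      f"{attainable_flops(daxpy_intensity) / 1e9:.0f} GFLOP/s")
print(f"DGEMM: {dgemm_intensity:.1f} FLOP/byte -> "
      f"{attainable_flops(dgemm_intensity) / 1e12:.1f} TFLOP/s")
print(f"largest FP64 matrix in 16 GiB: {largest_square_fp64(16 * 2**30)}")
```

Under these assumptions DAXPY reaches only about 1% of the FP64 peak, while a large DGEMM is limited by compute, which is why dense linear algebra is the usual showcase for the card’s double-precision performance.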

To harness the full potential of the Tesla V100, researchers should take advantage of Nvidia’s software development tools and libraries. The CUDA parallel computing platform, the cuDNN deep learning library, and the TensorRT inference optimizer are a few of the tools that help researchers optimize their deep learning and HPC applications for the V100. By building on these libraries, researchers can run their models efficiently on the V100 and make fuller use of its computational power.
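One concrete way these libraries reward careful use: NVIDIA’s performance guidance for Volta recommends keeping matrix-multiply dimensions (and convolution channel counts) at multiples of 8 for FP16 data, so that cuBLAS and cuDNN can run the work efficiently on the Tensor Cores. Assuming that guidance, the sketch below pads a GEMM shape up to the next multiple of 8 and reports how much extra arithmetic the padding costs. The function names and the layer sizes in the usage example are hypothetical, chosen for illustration only.

```python
from typing import Tuple


def pad_to_multiple(n: int, multiple: int = 8) -> int:
    """Round n up to the nearest multiple of `multiple`."""
    if n <= 0 or multiple <= 0:
        raise ValueError("n and multiple must be positive")
    return -(-n // multiple) * multiple  # integer ceiling division


def pad_gemm_shape(m: int, n: int, k: int,
                   multiple: int = 8) -> Tuple[Tuple[int, int, int], float]:
    """Return the padded (M, N, K) and the extra arithmetic padding adds, in percent."""
    pm, pn, pk = (pad_to_multiple(d, multiple) for d in (m, n, k))
    extra = 100.0 * (pm * pn * pk - m * n * k) / (m * n * k)
    return (pm, pn, pk), extra


# Hypothetical output projection: 4096 tokens x 1024 hidden units
# mapped onto a 33,708-word vocabulary.
shape, extra_pct = pad_gemm_shape(4096, 33708, 1024)
print(shape)                # (4096, 33712, 1024)
print(f"{extra_pct:.3f}%")  # 0.012%
```

Padding adds a negligible amount of work here, which is usually a good trade when the unpadded shape would otherwise fall back to a slower code path.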

In conclusion, the Nvidia Tesla V100 GPU accelerator card is a powerful and versatile tool for researchers working on deep learning and HPC applications. Its Volta architecture, mixed-precision Tensor Cores, strong double-precision performance, and extensive software ecosystem let it handle demanding computational workloads efficiently. By harnessing the full potential of the Tesla V100, researchers can push the boundaries of what is possible in deep learning and high-performance computing.