Tag: Nvidia Tesla V100 GPU Accelerator Card 16GB PCI-e Machine Learning AI HPC Volta

Unleashing the Power of Nvidia Tesla V100 GPU Accelerator Card for Machine Learning and AI

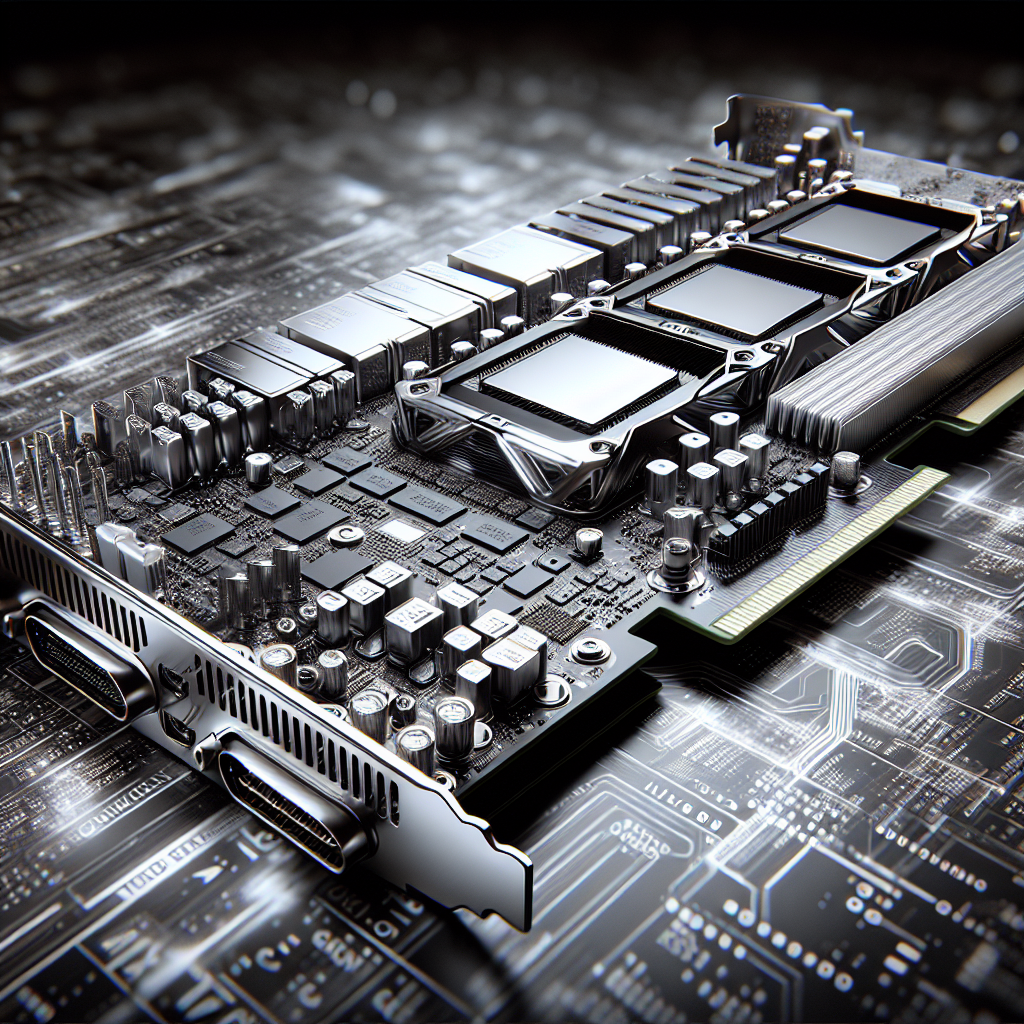

The Nvidia Tesla V100 GPU accelerator card is a powerful tool for machine learning and artificial intelligence applications. With its cutting-edge technology and impressive performance capabilities, this GPU accelerator card is changing the way data scientists and researchers approach complex problems in these fields.

The Nvidia Tesla V100 GPU accelerator card is built on the Volta architecture, which delivers large gains in performance and efficiency over earlier generations. With 640 Tensor Cores and 5,120 CUDA cores, the V100 is capable of delivering up to 125 teraflops of mixed-precision deep learning performance in its SXM2 form, and about 112 teraflops as a PCIe card. This makes it well suited to training deep neural networks and tackling large-scale machine learning tasks.
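The headline throughput figure can be reproduced from the core counts with some simple arithmetic. The sketch below is illustrative only: the boost clocks and the figure of 64 fused multiply-adds per Tensor Core per clock are assumptions not stated in this article, so treat the results as approximate peaks rather than measured performance.

```python
# Peak-throughput arithmetic for the V100 (a sketch under stated assumptions).
TENSOR_CORES = 640   # from the article
CUDA_CORES = 5120    # from the article

# Assumption: each Tensor Core performs one 4x4x4 matrix FMA per clock (64 FMAs).
FMA_PER_TENSOR_CORE_PER_CLOCK = 64
# A fused multiply-add counts as two floating-point operations.
FLOPS_PER_FMA = 2

# Assumption: approximate boost clocks in Hz for the two form factors.
BOOST_CLOCK_HZ = {"SXM2": 1.530e9, "PCIe": 1.380e9}


def peak_tensor_tflops(clock_hz):
    """Peak mixed-precision Tensor Core throughput in teraflops."""
    return TENSOR_CORES * FMA_PER_TENSOR_CORE_PER_CLOCK * FLOPS_PER_FMA * clock_hz / 1e12


def peak_fp32_tflops(clock_hz):
    """Peak single-precision CUDA core throughput in teraflops."""
    return CUDA_CORES * FLOPS_PER_FMA * clock_hz / 1e12


for form_factor, clock in BOOST_CLOCK_HZ.items():
    print(f"{form_factor}: {peak_tensor_tflops(clock):.1f} Tensor TFLOPS, "
          f"{peak_fp32_tflops(clock):.1f} FP32 TFLOPS")
```

Under these assumptions the SXM2 figure comes out at roughly 125 teraflops, which matches the number quoted above; the lower assumed PCIe clock gives a correspondingly lower peak.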

One of the key features of the Nvidia Tesla V100 GPU accelerator card is its support for mixed-precision computing. Here, most arithmetic runs in 16-bit floating point (FP16) on the Tensor Cores, while results are accumulated in 32-bit floating point (FP32) to preserve accuracy. By using mixed-precision computing, data scientists can significantly shorten their training times, typically without sacrificing accuracy.
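To see why mixed precision needs some care, the following sketch uses only the Python standard library to emulate FP16 rounding. It illustrates two common safeguards, loss scaling and a higher-precision master copy of the weights. The scale factor and the sample values are hypothetical, and Python's 64-bit floats stand in for the FP32 values a real framework would use.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


# 1) A very small gradient underflows to zero when stored directly in FP16.
grad = 1e-8
underflowed = to_fp16(grad)  # 0.0: below the smallest FP16 subnormal

# 2) Loss scaling: scale up before the FP16 cast, scale back down afterwards
#    in higher precision. LOSS_SCALE is a hypothetical power-of-two factor.
LOSS_SCALE = 65536.0
recovered = to_fp16(grad * LOSS_SCALE) / LOSS_SCALE

# 3) A small update vanishes when added to an FP16 weight, which is why
#    mixed-precision training keeps a higher-precision master copy.
weight, update = 1.0, 1e-4
fp16_result = to_fp16(to_fp16(weight) + to_fp16(update))
master_result = weight + update

print("direct FP16 gradient:   ", underflowed)
print("loss-scaled gradient:   ", recovered)
print("FP16 weight after step: ", fp16_result)
print("master weight after step:", master_result)
```

In practice, frameworks such as PyTorch and TensorFlow provide automatic mixed-precision tooling that handles these steps, so this sketch is only meant to show the underlying idea.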

The V100 also features 16GB of high-bandwidth memory (HBM2), enough to hold many models and their working data on the card. Together with the card’s memory bandwidth of 900GB/s, this helps keep the compute units fed and reduces memory bottlenecks when processing large amounts of data.
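A quick back-of-the-envelope check shows what 16GB and 900GB/s mean for training. The sketch below assumes mixed-precision training with an Adam-style optimizer at 16 bytes of state per parameter; that breakdown is an assumption for illustration, not a figure from this article, and activation memory is left as a caller-supplied estimate.

```python
GPU_MEMORY_BYTES = 16e9        # 16GB from the article, taken as 16 * 10**9 bytes
MEMORY_BANDWIDTH_BPS = 900e9   # 900GB/s from the article

# Assumed per-parameter training state (mixed precision, Adam-style optimizer).
BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 optimizer first moment": 4,
    "fp32 optimizer second moment": 4,
}


def training_state_bytes(num_params):
    """Bytes needed for weights, gradients and optimizer state."""
    return num_params * sum(BYTES_PER_PARAM.values())


def fits_on_card(num_params, activation_bytes=0.0):
    """True if the training state plus activations fit in GPU memory."""
    return training_state_bytes(num_params) + activation_bytes <= GPU_MEMORY_BYTES


def full_memory_sweep_seconds():
    """Time to read the entire memory once at peak bandwidth."""
    return GPU_MEMORY_BYTES / MEMORY_BANDWIDTH_BPS


for params in (350e6, 1.5e9):  # hypothetical model sizes
    gb = training_state_bytes(params) / 1e9
    print(f"{params / 1e6:.0f}M parameters: {gb:.1f} GB of state, fits: {fits_on_card(params)}")
print(f"One full sweep of memory: {full_memory_sweep_seconds() * 1e3:.1f} ms")
```

Under these assumptions, a model with a few hundred million parameters fits comfortably, while models beyond roughly a billion parameters need a smaller optimizer footprint or multiple GPUs.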

In addition to its impressive performance capabilities, the Nvidia Tesla V100 GPU accelerator card is also highly versatile and flexible. It supports popular machine learning frameworks such as TensorFlow, PyTorch, and MXNet, making it easy for researchers to integrate the card into their existing workflows. The card is also compatible with popular deep learning libraries such as cuDNN and NCCL, further enhancing its usability and ease of integration.

Overall, the Nvidia Tesla V100 GPU accelerator card is a game-changer for machine learning and artificial intelligence applications. Its unparalleled performance, efficiency, and versatility make it an indispensable tool for researchers and data scientists looking to push the boundaries of what is possible in these fields. By unleashing the power of the V100, researchers can accelerate their training times, tackle larger and more complex problems, and ultimately achieve breakthroughs that were previously out of reach.

Empowering Innovation: How the Nvidia Tesla V100 GPU Accelerator Card is Advancing AI Research and Development

Innovation is at the core of technological advancement, and one company that is leading the charge in pushing the boundaries of artificial intelligence (AI) research and development is Nvidia. With their Tesla V100 GPU accelerator card, Nvidia is empowering researchers and developers to make significant strides in the field of AI.

The Nvidia Tesla V100 GPU accelerator card is a powerhouse of computing power, specifically designed to handle the intense computational demands of AI applications. With its 5,120 CUDA cores and 16GB of high-bandwidth memory (a 32GB version is also available), the Tesla V100 is able to process massive amounts of data at high speed, making it an ideal tool for training complex AI models.

One of the key ways in which the Tesla V100 is advancing AI research and development is through its support for deep learning frameworks such as TensorFlow and PyTorch. These frameworks allow researchers to easily build and train AI models, and the Tesla V100’s accelerated computing capabilities enable them to do so faster and more efficiently than ever before.

In addition to its raw computing power, the Tesla V100 also features Nvidia’s Tensor Cores, which are specifically designed to handle the matrix operations that are common in deep learning algorithms. This specialized hardware accelerates the training of AI models even further, allowing researchers to iterate on their ideas and experiments more quickly.
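The matrix operation a Tensor Core accelerates can be sketched in plain Python. The example below emulates a fused multiply-add on small tiles, D = A x B + C, with FP16 inputs and a wider accumulator. The 4x4 tile size reflects how Volta Tensor Cores are commonly described, but it is an assumption here, and Python's 64-bit floats stand in for the FP32 accumulator.

```python
import struct

TILE = 4  # assumed tile size for one Tensor Core operation


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision (FP16) value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def tile_fma(a, b, c):
    """Compute D = A x B + C on TILE x TILE matrices.

    A and B are rounded to FP16 first; products are accumulated in a
    wider type, mimicking FP16 multiply with higher-precision accumulate.
    """
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    d = [[0.0] * TILE for _ in range(TILE)]
    for i in range(TILE):
        for j in range(TILE):
            acc = c[i][j]
            for k in range(TILE):
                acc += a16[i][k] * b16[k][j]
            d[i][j] = acc
    return d


identity = [[1.0 if i == j else 0.0 for j in range(TILE)] for i in range(TILE)]
a = [[float(TILE * i + j) for j in range(TILE)] for i in range(TILE)]
c = [[0.5] * TILE for _ in range(TILE)]

d = tile_fma(a, identity, c)  # multiplying by the identity leaves A unchanged
for row in d:
    print(row)
```

A large matrix multiplication is built from many such tile operations, which is why layers dominated by matrix multiplies benefit most from this hardware.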

The impact of the Nvidia Tesla V100 on AI research and development is already being felt across a wide range of industries. From healthcare to finance to transportation, researchers are using the Tesla V100 to push the boundaries of what is possible with AI, developing new applications and solutions that were previously out of reach.

In conclusion, the Nvidia Tesla V100 GPU accelerator card is a game-changer for AI research and development. By providing researchers and developers with unparalleled computing power and specialized hardware for deep learning, Nvidia is empowering them to innovate and create groundbreaking AI solutions. As we continue to push the boundaries of what is possible with artificial intelligence, the Tesla V100 will undoubtedly play a crucial role in shaping the future of technology.

The Nvidia Tesla V100 GPU Accelerator Card: A Game-Changer for Machine Learning and AI Applications

Nvidia has long been a powerhouse in the world of graphics processing units (GPUs), but their latest release, the Tesla V100 GPU accelerator card, is truly a game-changer for machine learning and artificial intelligence (AI) applications. This powerful card combines cutting-edge technology with unparalleled performance, making it a must-have for anyone working in these fields.

The Tesla V100 is built on Nvidia’s Volta architecture, which features a new Tensor Core technology that delivers up to 125 teraflops of deep learning performance. This means that the card is capable of processing massive amounts of data at lightning-fast speeds, making it ideal for training complex neural networks and running sophisticated AI algorithms.

One of the key features of the Tesla V100 is its high memory bandwidth, which allows it to handle large datasets with ease. With 16GB of HBM2 memory and a memory bandwidth of 900GB/s, the card is able to quickly access and manipulate the data it needs to perform its calculations. This makes it ideal for applications that require processing huge amounts of data, such as image and speech recognition, natural language processing, and autonomous driving.
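Whether a workload is limited by compute or by memory bandwidth can be estimated with a simple roofline model. The sketch below uses the 125 teraflops and 900GB/s figures quoted in this article as peaks; the matrix sizes are hypothetical, and real kernels will fall below these ceilings.

```python
PEAK_TENSOR_FLOPS = 125e12   # peak deep learning throughput quoted in the article
MEMORY_BANDWIDTH = 900e9     # bytes per second, from the article


def attainable_flops(arithmetic_intensity):
    """Roofline ceiling for a kernel with the given FLOPs-per-byte ratio."""
    return min(PEAK_TENSOR_FLOPS, arithmetic_intensity * MEMORY_BANDWIDTH)


def ridge_point():
    """Arithmetic intensity above which a kernel becomes compute-bound."""
    return PEAK_TENSOR_FLOPS / MEMORY_BANDWIDTH


def matmul_intensity(m, n, k, bytes_per_element=2):
    """FLOPs per byte for an (m x k) @ (k x n) matrix multiply.

    Assumes each matrix is read or written exactly once in FP16.
    """
    flops = 2 * m * n * k
    bytes_moved = bytes_per_element * (m * k + k * n + m * n)
    return flops / bytes_moved


# Element-wise FP16 add: 1 FLOP per 3 elements moved (two reads, one write).
elementwise_intensity = 1 / (3 * 2)

print(f"ridge point: {ridge_point():.1f} FLOPs/byte")
print(f"large matmul: {attainable_flops(matmul_intensity(4096, 4096, 4096)) / 1e12:.1f} TFLOPS ceiling")
print(f"element-wise add: {attainable_flops(elementwise_intensity) / 1e12:.2f} TFLOPS ceiling")
```

The gap between the two ceilings illustrates why large matrix multiplies can approach peak throughput while element-wise operations are bound by memory bandwidth.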

In addition to its impressive performance capabilities, the Tesla V100 also offers unmatched scalability and flexibility. The card is compatible with Nvidia’s CUDA programming platform, which allows developers to easily write and optimize code for the card. It also supports Nvidia’s NVLink technology, which enables multiple Tesla V100 cards to be connected together in a single system, providing even greater computational power.

Overall, the Nvidia Tesla V100 GPU accelerator card is a game-changer for machine learning and AI applications. Its performance, high memory bandwidth, and scalability make it an essential tool for anyone working in these fields. Whether you’re training neural networks, running complex algorithms, or processing large datasets, the Tesla V100 has the power and flexibility to handle whatever you throw at it.

Why the Nvidia Tesla V100 GPU Accelerator Card is the Ultimate Choice for Deep Learning and HPC Workloads

Deep learning and high-performance computing (HPC) workloads require immense computational power to process large amounts of data quickly and efficiently. One of the most powerful tools available for these tasks is the Nvidia Tesla V100 GPU Accelerator Card. This state-of-the-art graphics processing unit (GPU) is specifically designed for deep learning and HPC applications, making it the ultimate choice for researchers, data scientists, and developers.

The Nvidia Tesla V100 GPU Accelerator Card boasts an impressive array of features that set it apart from other GPUs on the market. With 640 Tensor Cores, 5,120 CUDA cores, and 16GB of high-bandwidth memory, this GPU is capable of delivering up to 125 teraflops of peak deep learning performance. This level of computational power allows users to train complex neural networks faster, enabling them to tackle more ambitious and data-intensive projects.

One of the key advantages of the Tesla V100 GPU is its support for Nvidia’s CUDA parallel computing platform. CUDA allows developers to harness the power of the GPU to accelerate their deep learning and HPC applications, significantly reducing processing times and improving overall performance. Additionally, the Tesla V100 GPU is compatible with popular deep learning frameworks such as TensorFlow, PyTorch, and MXNet, making it easy for users to integrate the card into their existing workflows.

In addition to its impressive computational capabilities, the Nvidia Tesla V100 GPU Accelerator Card also offers enhanced data processing and memory bandwidth. The card features NVLink technology, which allows multiple GPUs to communicate directly with each other at high speeds, enabling users to scale their deep learning and HPC applications across multiple GPUs for even greater performance gains. Furthermore, the GPU’s high-bandwidth memory ensures that data can be processed quickly and efficiently, reducing bottlenecks and improving overall system performance.
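The benefit of a faster GPU-to-GPU link can be estimated with the standard cost model for a ring all-reduce, the pattern commonly used to sum gradients across GPUs. The link speeds below are assumptions for illustration (roughly one NVLink 2.0 link and one PCIe 3.0 x16 slot, per direction), the gradient size is hypothetical, and latency is ignored.

```python
def ring_allreduce_seconds(payload_bytes, num_gpus, link_bytes_per_s):
    """Bandwidth-only estimate of ring all-reduce time.

    Each GPU sends and receives 2 * (N - 1) / N of the payload over
    its link to a neighbour; per-message latency is ignored.
    """
    if num_gpus < 2:
        return 0.0
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes / link_bytes_per_s


# Assumed per-direction link bandwidths in bytes per second.
ASSUMED_LINKS = {
    "single NVLink 2.0 link": 25e9,
    "PCIe 3.0 x16": 16e9,
}

# Hypothetical payload: FP16 gradients of a 100-million-parameter model.
gradient_bytes = 100e6 * 2

for name, bandwidth in ASSUMED_LINKS.items():
    t = ring_allreduce_seconds(gradient_bytes, num_gpus=8, link_bytes_per_s=bandwidth)
    print(f"{name}: {t * 1e3:.1f} ms per all-reduce")
```

Because the cost scales inversely with link bandwidth, using several NVLink links between neighbouring GPUs shortens the synchronization step further, which is where the scaling benefit described above comes from.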

Overall, the Nvidia Tesla V100 GPU Accelerator Card is the ultimate choice for deep learning and HPC workloads due to its unparalleled computational power, support for CUDA and popular deep learning frameworks, and advanced data processing capabilities. Whether you are a researcher looking to train complex neural networks or a developer working on data-intensive applications, the Tesla V100 GPU offers the performance and reliability you need to take your work to the next level.

From Volta to Victory: How the Nvidia Tesla V100 GPU Accelerator Card is Changing the Game for AI Development

Artificial intelligence (AI) has become a crucial tool in various industries, from healthcare to finance to transportation. As the demand for AI solutions continues to grow, the need for more powerful hardware to support these applications has also increased. One of the most powerful GPU accelerators currently available for AI development is the Nvidia Tesla V100.

The Tesla V100 is a flagship product in Nvidia’s GPU accelerator lineup, designed specifically for deep learning and AI workloads. It is powered by the Volta architecture, which includes features such as Tensor Cores for deep learning acceleration, NVLink for high-speed GPU-to-GPU communication, and improved memory bandwidth for faster data processing.

One of the key features of the Tesla V100 is its performance capabilities. With 5,120 CUDA cores and 640 Tensor Cores, the V100 is capable of delivering up to 125 teraflops of deep learning performance. This level of performance is crucial for training complex neural networks and processing large datasets efficiently.

In addition to its raw computational power, the Tesla V100 also offers a number of features that make it well-suited for AI development. These include support for mixed-precision computing, which allows developers to take advantage of the increased performance of lower-precision arithmetic for certain tasks. The V100 also includes support for NVIDIA’s CUDA programming model, as well as popular deep learning frameworks such as TensorFlow and PyTorch.

The Tesla V100 has already made a significant impact in the field of AI development. Companies and research institutions around the world are using the V100 to train advanced neural networks, process massive amounts of data, and accelerate their AI projects. For example, the V100 has been used in medical imaging applications to improve diagnostic accuracy, in autonomous driving systems to enhance safety and reliability, and in natural language processing tasks to enable more advanced language models.

Overall, the Nvidia Tesla V100 GPU accelerator card is changing the game for AI development by providing the performance, features, and support necessary to drive innovation in the field. As AI continues to play a larger role in our daily lives, the V100 is helping to push the boundaries of what is possible with artificial intelligence. With its powerful capabilities and cutting-edge technology, the Tesla V100 is sure to remain a key player in the world of AI development for years to come.

Maximizing Performance: Tips for Optimizing the Nvidia Tesla V100 GPU Accelerator Card for HPC Applications

High-performance computing (HPC) applications require powerful hardware to deliver fast and efficient results. The Nvidia Tesla V100 GPU accelerator card is one such piece of hardware that is known for its exceptional performance in HPC workloads. However, to truly maximize its potential, it is important to optimize its settings and configurations for specific applications. In this article, we will discuss some tips for optimizing the Nvidia Tesla V100 GPU accelerator card for HPC applications.

1. Use the latest drivers and firmware updates: Nvidia regularly releases updates for its drivers and firmware to improve performance and stability. Make sure to check for updates regularly and install them to ensure that your Tesla V100 card is running on the latest software.

2. Enable ECC memory: Error-correcting code (ECC) memory helps to detect and correct errors in memory, ensuring that your computations are accurate and reliable. ECC does not make the card faster, but keeping it enabled on your Tesla V100 card improves the reliability of long-running HPC applications.

3. Adjust power settings: The Tesla V100 card comes with power management features that allow you to optimize power usage and performance. Depending on your specific workload, you may need to adjust the power settings to balance performance and energy efficiency. Experiment with different power settings to find the optimal configuration for your applications.

4. Use CUDA and cuDNN libraries: The Nvidia CUDA and cuDNN libraries provide optimized algorithms and functions for deep learning and other HPC applications. Make sure to leverage these libraries in your code to take advantage of the performance benefits they offer for the Tesla V100 card.

5. Utilize Tensor Cores: The Tesla V100 card is equipped with Tensor Cores, which are specialized hardware units designed to accelerate matrix multiplication operations commonly used in deep learning workloads. Make sure to optimize your code to leverage Tensor Cores for faster and more efficient computations.

6. Optimize memory usage: The Tesla V100 card has a large memory capacity, but inefficient memory usage can lead to performance bottlenecks. Make sure to optimize your code to minimize memory transfers and maximize memory bandwidth utilization for improved performance.

7. Profile and tune your code: Use profiling tools to identify performance bottlenecks in your code and make targeted optimizations to improve performance on the Tesla V100 card. Experiment with different optimization techniques and configurations to find the best settings for your specific workload.
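The first three tips can be checked from a script before a job starts. The sketch below queries `nvidia-smi` for the driver version, ECC mode, power limit, and memory size, then parses the CSV output. The sample line is hypothetical output used only to exercise the parser, and `query_gpus()` assumes `nvidia-smi` is installed and on the `PATH`.

```python
import csv
import io
import subprocess

# Fields requested from nvidia-smi's --query-gpu option.
QUERY_FIELDS = ["name", "driver_version", "ecc.mode.current", "power.limit", "memory.total"]


def parse_gpu_report(csv_text):
    """Turn nvidia-smi CSV output (no header) into a list of dicts, one per GPU."""
    rows = csv.reader(io.StringIO(csv_text), skipinitialspace=True)
    return [
        dict(zip(QUERY_FIELDS, (cell.strip() for cell in row)))
        for row in rows
        if row
    ]


def query_gpus():
    """Run nvidia-smi and return the parsed report (requires an Nvidia driver)."""
    cmd = [
        "nvidia-smi",
        f"--query-gpu={','.join(QUERY_FIELDS)}",
        "--format=csv,noheader",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_gpu_report(result.stdout)


# Hypothetical output line, used here so the example runs without a GPU.
SAMPLE = "Tesla V100-PCIE-16GB, 450.80.02, Enabled, 250.00 W, 16160 MiB\n"

for gpu in parse_gpu_report(SAMPLE):
    if gpu["ecc.mode.current"] != "Enabled":
        print(f"warning: ECC is not enabled on {gpu['name']}")
    print(gpu)
```

On a machine with the card installed, replacing `parse_gpu_report(SAMPLE)` with `query_gpus()` reports the live settings, which makes it easy to log the configuration alongside each run.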

By following these tips for optimizing the Nvidia Tesla V100 GPU accelerator card, you can maximize its performance and get the most out of your HPC applications. With the right configurations and settings, you can achieve faster and more efficient computations, leading to better results and productivity in your HPC workloads.
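As one concrete way to apply the Tensor Core tip, a common guideline is to keep FP16 matrix dimensions at multiples of 8 so that libraries can select Tensor Core kernels. The helper below is a minimal sketch of that padding rule; the multiple of 8 is an assumption based on general guidance for FP16 on Volta, and the layer sizes are hypothetical.

```python
def round_up(value, multiple=8):
    """Round value up to the nearest multiple (8 is assumed for FP16)."""
    return ((value + multiple - 1) // multiple) * multiple


def tensor_core_friendly(m, n, k, multiple=8):
    """True if all three matrix-multiply dimensions are aligned."""
    return all(dim % multiple == 0 for dim in (m, n, k))


# Hypothetical layer: batch of 250, hidden size 1024, vocabulary of 33278.
batch, hidden, vocab = 250, 1024, 33278

print(tensor_core_friendly(batch, vocab, hidden))  # False: batch and vocab are unaligned
padded_batch, padded_vocab = round_up(batch), round_up(vocab)
print(padded_batch, padded_vocab)
print(tensor_core_friendly(padded_batch, padded_vocab, hidden))
```

Padding a vocabulary or batch size by a few entries costs little memory, and profiling before and after is the reliable way to confirm that it helps for a given workload.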

Unleashing the Potential of Machine Learning with the Nvidia Tesla V100 GPU Accelerator Card

Machine learning has become an essential tool for businesses looking to leverage data to make informed decisions and drive innovation. However, as the complexity and volume of data continue to grow, traditional computing systems are struggling to keep up with the demands of machine learning algorithms. This is where GPU accelerators like the Nvidia Tesla V100 come into play, offering businesses a powerful solution to unleash the full potential of machine learning.

The Nvidia Tesla V100 GPU accelerator card is designed to supercharge machine learning workloads by providing massive parallel computing power. With 5,120 CUDA cores and 640 Tensor Cores, the Tesla V100 can perform complex calculations and process vast amounts of data at lightning speed. This allows businesses to train deep learning models faster and more efficiently, leading to improved accuracy and faster insights.

One of the key advantages of using the Nvidia Tesla V100 GPU accelerator card for machine learning is its ability to handle large datasets with ease. Traditional CPU-based systems often struggle to process massive amounts of data in a timely manner, leading to bottlenecks and slower performance. The Tesla V100, on the other hand, can work through very large datasets by streaming them through its 16GB of memory in batches, enabling businesses to train complex models on large datasets in a fraction of the time it would take with traditional computing systems.

Another important feature of the Nvidia Tesla V100 is its support for mixed-precision computing. This allows businesses to train deep learning models faster, with accuracy comparable to full-precision training, leading to good results in less time. By leveraging mixed-precision computing, businesses can make the most of their machine learning workloads and drive innovation at a faster pace.

In addition to its raw computing power, the Nvidia Tesla V100 also offers businesses the flexibility to scale their machine learning workloads as needed. With support for multiple GPU configurations and high-speed interconnects like NVLink, businesses can easily scale their computing resources to handle even the most demanding machine learning tasks. This scalability is crucial for businesses looking to stay ahead of the competition and unlock new opportunities for growth.

Overall, the Nvidia Tesla V100 GPU accelerator card is a game-changer for businesses looking to unleash the full potential of machine learning. With its massive parallel computing power, support for large datasets, mixed-precision computing capabilities, and scalability, the Tesla V100 is the perfect solution for businesses looking to drive innovation and make the most of their machine learning workloads. By investing in the Nvidia Tesla V100, businesses can stay ahead of the curve and unlock new possibilities for growth and success.

Nvidia Tesla V100 16GB PCIE GPU Accelerator Card Machine Learning AI HPC /xjk

Price : 645.00

Introducing the Nvidia Tesla V100 16GB PCIE GPU Accelerator Card – the ultimate machine learning, AI, and HPC solution! With its powerful performance and advanced features, this GPU accelerator card is perfect for data scientists, researchers, and developers looking to take their projects to the next level.

The Nvidia Tesla V100 features 16GB of high-bandwidth memory, making it ideal for deep learning, neural network training, and other computationally intensive tasks. Its Volta architecture delivers up to 7 TFLOPS of double-precision and 14 TFLOPS of single-precision performance in this PCIe form (7.8 and 15.7 TFLOPS for the SXM2 version).

Whether you’re working on image recognition, natural language processing, or any other AI application, the Nvidia Tesla V100 has the power and versatility to handle it all. Plus, with support for popular frameworks like TensorFlow, PyTorch, and Caffe, you can get up and running quickly and easily.

Don’t settle for anything less than the best when it comes to machine learning and AI. Upgrade to the Nvidia Tesla V100 16GB PCIE GPU Accelerator Card today and experience the future of computing!

How the Nvidia Tesla V100 GPU Accelerator Card is Revolutionizing High Performance Computing and AI

Nvidia has long been at the forefront of cutting-edge technology, and their latest release, the Tesla V100 GPU Accelerator Card, is no exception. This powerful piece of hardware is revolutionizing the fields of high performance computing and artificial intelligence, offering unmatched performance and efficiency for data centers and research institutions around the world.

One of the key features of the Tesla V100 is its use of Nvidia’s innovative Volta architecture, which delivers unprecedented levels of performance for complex computing tasks. With 640 Tensor Cores and 5,120 CUDA cores, the V100 is capable of processing massive amounts of data at lightning-fast speeds, making it the ideal choice for AI applications such as deep learning and neural network training.

In addition to its impressive processing power, the Tesla V100 also boasts a staggering 16GB of high-bandwidth memory, allowing for seamless data transfer and reduced latency. This means that researchers and data scientists can analyze vast amounts of data in real-time, leading to faster insights and more accurate results.

The V100’s versatility is another major advantage, as it can be easily integrated into existing systems and workflows. Whether used as a standalone accelerator card or paired with multiple GPUs in a server cluster, the Tesla V100 offers unparalleled performance and scalability for a wide range of applications.

One area where the Tesla V100 is making a significant impact is in the field of healthcare, where it is being used to analyze medical imaging data and develop more accurate diagnostic tools. By leveraging the V100’s advanced capabilities, researchers are able to process images faster and more efficiently, leading to improved patient outcomes and reduced healthcare costs.

Overall, the Nvidia Tesla V100 GPU Accelerator Card is a game-changer for high performance computing and AI. Its unparalleled performance, efficiency, and versatility make it an essential tool for researchers, data scientists, and developers looking to push the boundaries of what is possible in their respective fields. As technology continues to evolve at a rapid pace, the V100 is sure to play a key role in shaping the future of computing and artificial intelligence.

A Closer Look at the Features and Performance of the Nvidia Tesla V100 GPU Accelerator Card

Nvidia has long been known for its cutting-edge graphics cards, but the company’s Tesla line of GPU accelerators is designed specifically for high-performance computing tasks. The Nvidia Tesla V100 is one of the latest additions to this lineup, boasting impressive features and performance that make it a popular choice for data centers and scientific research facilities.

One of the standout features of the Nvidia Tesla V100 is its use of the Volta architecture, which provides significant improvements in performance and efficiency compared to previous generations of Nvidia GPUs. The V100 is powered by the GV100 GPU, which features a whopping 21.1 billion transistors and 5,120 CUDA cores. This allows the card to deliver up to 125 teraflops of deep learning performance, making it ideal for AI and machine learning applications.

In terms of memory, the Tesla V100 comes equipped with 16GB of HBM2 memory, providing high bandwidth and low latency for demanding workloads. The card also features Nvidia’s NVLink interconnect technology, which allows multiple V100 cards to be linked together for even greater performance scaling.

When it comes to performance, the Nvidia Tesla V100 does not disappoint. The card is capable of delivering up to 7 teraflops of double-precision performance as a PCIe card (7.8 teraflops in the SXM2 version), making it suitable for a wide range of scientific and engineering simulations. In addition, the V100 excels in deep learning tasks, thanks to its Tensor Cores, which are specifically designed to accelerate matrix operations commonly used in neural networks.

Overall, the Nvidia Tesla V100 GPU accelerator card offers a winning combination of features and performance that make it an attractive option for data centers and research institutions looking to harness the power of GPU computing. With its Volta architecture, high memory bandwidth, and impressive deep learning capabilities, the V100 is well-suited for a variety of demanding workloads. Whether you’re working on complex simulations, training deep learning models, or running high-performance computing applications, the Tesla V100 is sure to deliver the performance you need.