Recurrent Neural Networks (RNNs) are a standard tool for processing sequential data. Among the various types of RNNs, Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) are two of the most commonly used architectures because they capture long-range dependencies far more reliably than a plain RNN.

LSTM and GRU architectures were designed to address the vanishing gradient problem that plagues traditional RNNs. During backpropagation through time, the gradient is multiplied by one factor per time step; when those factors are smaller than one, the gradient shrinks toward zero as it travels back through the sequence, so early inputs have almost no influence on the weight updates and long-term dependencies are hard to learn. LSTM and GRU architectures incorporate mechanisms that allow them to retain information over many time steps, making them well-suited for tasks such as natural language processing, speech recognition, and time series prediction.
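The shrinking gradient can be illustrated with a toy example. The sketch below assumes a scalar RNN of the form h_t = tanh(w * h_{t-1} + x_t); the function name `gradient_through_time` and the chosen weight and inputs are hypothetical and serve only to show the effect, not to model a real network.

```python
import math

def gradient_through_time(w, inputs, h0=0.0):
    """Track |d h_t / d h_0| for a scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h = h0
    grad = 1.0
    magnitudes = []
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
        magnitudes.append(abs(grad))
    return magnitudes

# Illustrative values: recurrent weight 0.5, constant input 0.1, 50 time steps.
mags = gradient_through_time(w=0.5, inputs=[0.1] * 50)
print(f"after 1 step:   {mags[0]:.3e}")
print(f"after 10 steps: {mags[9]:.3e}")
print(f"after 50 steps: {mags[49]:.3e}")
```

Because every per-step factor here is at most 0.5 in magnitude, the product decays at least geometrically, which is the behaviour the gated architectures are meant to counteract.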

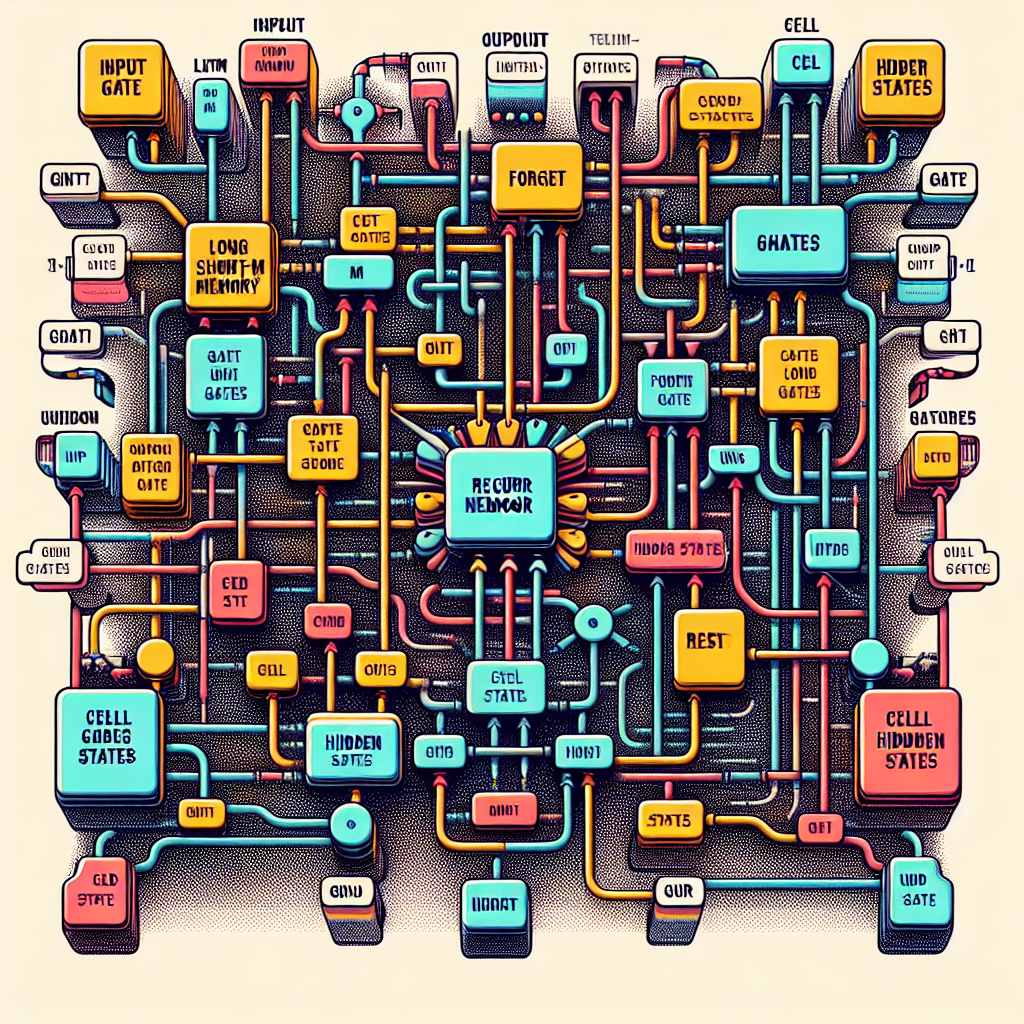

One of the key features of LSTM and GRU architectures is the use of gate mechanisms to control the flow of information within the network. An LSTM has three gates: the input gate decides how much new information is written to the memory cell, the forget gate decides how much of the existing cell contents is kept, and the output gate decides how much of the cell is exposed as the hidden state at each time step. Because the cell state is updated additively rather than being overwritten at every step, an LSTM can carry relevant information across long spans of the sequence.
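The three gates can be sketched as a single time step in NumPy. This is a minimal illustration of the standard LSTM equations, not a reference implementation: the function name `lstm_step`, the stacked weight layout (input, forget, output, candidate), and the random initialisation are all assumptions made for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    Assumed shapes: x (D,), h_prev and c_prev (H,),
    W (4H, D), U (4H, H), b (4H,), stacked as [input, forget, output, candidate].
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much of the old cell to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much of the cell to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate values for the cell
    c = f * c_prev + i * g       # additive cell update
    h = o * np.tanh(c)           # new hidden state
    return h, c

# Run a short random sequence through the cell (sizes chosen arbitrarily).
rng = np.random.default_rng(0)
D, H = 3, 4
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x in rng.normal(size=(5, D)):
    h, c = lstm_step(x, h, c, W, U, b)
print(h.shape, c.shape)
```

When the forget gate saturates near 1 and the input gate near 0, the cell state passes through a step almost unchanged, which is how the memory cell preserves information over time.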

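GRU, by contrast, simplifies the architecture. It merges the input and forget gates into a single update gate, adds a reset gate that controls how much of the previous state feeds into the new candidate, and drops the separate memory cell so that the hidden state is the only state carried between steps. With two gates instead of three, a GRU has fewer parameters than an LSTM of the same size and is cheaper to compute per step, while still being able to capture long-term dependencies in the data. The smaller parameter count can be beneficial when training on smaller datasets or with limited computational resources.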
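For comparison, a single GRU step might look like the following NumPy sketch. The function name `gru_step` and the stacked weight layout (update, reset, candidate) are assumptions for the example, and the sign convention for the update gate (z close to 1 keeps the old state) is one of two that appear in practice; some formulations swap the roles of z and 1 - z.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x, h_prev, W, U, b):
    """One GRU time step.

    Assumed shapes: x (D,), h_prev (H,),
    W (3H, D), U (3H, H), b (3H,), stacked as [update, reset, candidate].
    """
    H = h_prev.shape[0]
    zx = W @ x + b
    z = sigmoid(zx[0:H] + U[0:H] @ h_prev)            # update gate
    r = sigmoid(zx[H:2 * H] + U[H:2 * H] @ h_prev)    # reset gate
    n = np.tanh(zx[2 * H:] + U[2 * H:] @ (r * h_prev))  # candidate state
    # Convention used here: z near 1 keeps the old state, z near 0 takes the candidate.
    return z * h_prev + (1.0 - z) * n

# Run a short random sequence through the cell (sizes chosen arbitrarily).
rng = np.random.default_rng(0)
D, H = 3, 4
W = rng.normal(scale=0.1, size=(3 * H, D))
U = rng.normal(scale=0.1, size=(3 * H, H))
b = np.zeros(3 * H)
h = np.zeros(H)
for x in rng.normal(size=(5, D)):
    h = gru_step(x, h, W, U, b)
print(h.shape)
```

The single interpolation in the last line of `gru_step` does the work that the separate input and forget gates do in an LSTM: whatever fraction of the old state is kept, the remainder is filled by the candidate.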

Despite their differences, LSTM and GRU architectures have been shown to achieve comparable performance on a wide range of tasks. The choice between LSTM and GRU often depends on the specific requirements of the task at hand, such as the size of the dataset, computational resources available, and the complexity of the data being processed.
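To put a rough number on the resource side of that choice, the per-layer parameter counts can be compared directly. The sketch below assumes one input weight matrix, one recurrent weight matrix, and one bias vector per gate or candidate; the function names and layer sizes are hypothetical, and some frameworks keep additional bias terms, so their reported counts can be slightly higher.

```python
def lstm_param_count(input_size, hidden_size):
    # 4 blocks (input, forget, output gates + candidate), each with W, U, and b.
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return 4 * per_block

def gru_param_count(input_size, hidden_size):
    # 3 blocks (update, reset gates + candidate), each with W, U, and b.
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return 3 * per_block

# Illustrative layer size, not taken from any particular model.
D, H = 128, 256
lstm_n = lstm_param_count(D, H)
gru_n = gru_param_count(D, H)
print(lstm_n, gru_n, gru_n / lstm_n)
```

Under these assumptions a GRU layer always has three quarters of the parameters of an LSTM layer with the same input and hidden sizes.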

In conclusion, LSTM and GRU architectures made recurrent networks practical for long sequences by mitigating the vanishing gradient problem and enabling the modeling of long-term dependencies in sequential data. Understanding how their gates work, and where the two designs differ, is essential for building effective models for tasks such as natural language processing, speech recognition, and time series prediction.
