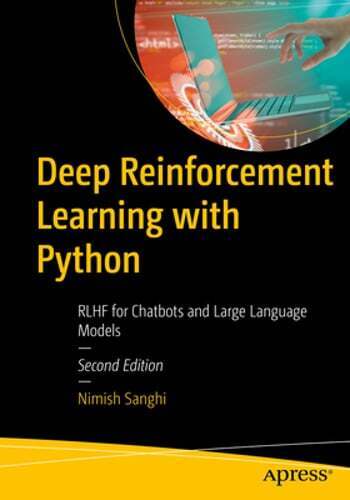

Reinforcement Learning from Human Feedback (RLHF) is a technique for training advanced chatbots and language models. Human raters score or rank model outputs, those judgments are turned into a reward signal, and the model is then fine-tuned with reinforcement learning to produce the responses people prefer. The result is typically more accurate and natural conversations.

In this article, we will explore how to implement RLHF for advanced chatbots and language models in Python. We will walk through the steps required to set up a training environment, collect human feedback, and train the model using RLHF.

Setting up the training environment

To begin implementing RLHF for advanced chatbots and language models in Python, we first need to set up a training environment. This involves installing the necessary libraries and setting up a Python environment.

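We will be using the OpenAI GPT-3 model for this tutorial, so we need the OpenAI Python package, along with pandas for loading the feedback data. The script in this tutorial uses the package's legacy `openai.Completion` interface, which was removed in version 1.0, so pin an earlier version when installing with pip:

```
pip install "openai<1.0" pandas
```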
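Before moving on, it can help to confirm that the packages are importable from the interpreter you plan to use. The following is a minimal sketch using only the standard library; the helper name `missing_packages` is made up for this example.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level packages from `names` that cannot be imported."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# An empty list means both packages used in this tutorial are available.
print(missing_packages(["openai", "pandas"]))
```

If the list is not empty, re-run the pip command inside the same virtual environment that runs your script.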

Collecting human feedback

The next step is to collect human feedback for training the model. This can be done through various channels such as surveys, interviews, or online platforms.

For this tutorial, let’s assume we have collected a dataset of human feedback in a CSV file. The dataset contains pairs of input text and corresponding human feedback on the quality of the response.
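As a rough illustration of what such a file might look like and how to sanity-check it, here is a minimal sketch using only the standard library. The column names `input_text` and `feedback` match the ones used later in this tutorial. Everything else is an assumption made for the example: the helper name `load_feedback`, the idea that feedback is a numeric quality score, and the sample rows themselves.

```python
import csv
import io


def load_feedback(csv_file):
    """Read feedback rows and check that the required columns are present."""
    reader = csv.DictReader(csv_file)
    required = {"input_text", "feedback"}
    missing = required - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")
    rows = []
    for row in reader:
        rows.append({
            "input_text": row["input_text"],
            # Assumption: feedback is stored as a numeric quality score.
            "feedback": float(row["feedback"]),
        })
    return rows


# Inline sample standing in for human_feedback.csv (contents are made up).
sample = io.StringIO(
    "input_text,feedback\n"
    "How do I reset my password?,4\n"
    "What is the capital of France?,5\n"
)
rows = load_feedback(sample)
print(len(rows), rows[0])
```

To read a real file, pass an open file handle instead of the `io.StringIO` sample, for example `open("human_feedback.csv", newline="")`.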

Training the model using RLHF

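With the training environment set up and human feedback collected, we can now prepare the data for RLHF. One caveat first: the `openai` package does not provide a `Feedback` class, and the completion endpoint used here only generates text. It does not update the model's weights. What we can do with the API is generate responses from the GPT-3 model and pair each one with its human feedback, which produces the dataset that the reward and policy training steps of RLHF consume.

Here is a simple Python script that builds that dataset:

```python
import openai  # legacy interface; requires openai<1.0
import pandas as pd

# Set up the OpenAI API key
openai.api_key = "your_openai_api_key"

# Load the human feedback dataset
data = pd.read_csv("human_feedback.csv")

# Generate a response for each prompt and pair it with the human feedback
records = []
for index, row in data.iterrows():
    input_text = row["input_text"]
    feedback = row["feedback"]

    response = openai.Completion.create(
        # Model name kept from the original tutorial; it may no longer be
        # available, so substitute a completion model your account can access.
        engine="text-davinci-002",
        prompt=input_text,
        max_tokens=100,
        temperature=0.5,
        top_p=1,
        n=1,
        stop=["\n"],
    )

    # The openai package has no Feedback endpoint, so store the
    # (prompt, response, feedback) triple locally instead.
    records.append({
        "input_text": input_text,
        "response": response["choices"][0]["text"].strip(),
        "feedback": feedback,
    })

# Save the triples for the later reward and policy training steps
pd.DataFrame(records).to_csv("rlhf_training_data.csv", index=False)
```

In this script, we first set up the OpenAI API key and load the human feedback dataset. We then iterate over the dataset, generate a response from the model for each input text, and store the prompt, the response, and the feedback together in `rlhf_training_data.csv`. Keep in mind that a score is only meaningful for the response it was given to, so in a real pipeline the rated response text should be kept in the dataset rather than regenerated.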
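To make the reinforcement learning step less abstract, here is a minimal, self-contained sketch of a policy update driven by feedback scores. It is a toy illustration under stated assumptions, not how production systems are trained: the "policy" is a softmax over a fixed list of hypothetical candidate responses, the human scores are used directly as rewards, and each update is an exact policy-gradient step. Real RLHF pipelines typically fit a separate reward model and optimize a neural language model with an algorithm such as PPO. The function names and the example scores are invented for this sketch.

```python
import math


def softmax(logits):
    """Convert a list of logits into probabilities."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(logits, rewards, learning_rate=0.5):
    """One exact policy-gradient ascent step on expected reward.

    For a softmax policy, d E[r] / d logit_i = p_i * (r_i - E[r]).
    """
    probs = softmax(logits)
    expected_reward = sum(p * r for p, r in zip(probs, rewards))
    return [
        logit + learning_rate * p * (r - expected_reward)
        for logit, p, r in zip(logits, probs, rewards)
    ]


# Hypothetical candidate responses to one prompt, with assumed human scores (1-5).
candidates = ["Sure, here is how...", "I don't know.", "Please rephrase."]
human_scores = [5.0, 1.0, 2.0]

logits = [0.0, 0.0, 0.0]  # start from a uniform policy
for _ in range(200):
    logits = policy_gradient_step(logits, human_scores)

probs = softmax(logits)
best = candidates[probs.index(max(probs))]
print(best, [round(p, 3) for p in probs])
```

Each step shifts probability toward responses that scored above the current expected reward and away from those that scored below it, which is the core idea behind the policy update in RLHF.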

Conclusion

Implementing RLHF for advanced chatbots and language models in Python can significantly improve the quality and naturalness of conversations. By leveraging human feedback, these models learn which responses people prefer, leading to more accurate and engaging interactions.

In this article, we have walked through the steps required to set up a training environment, collect human feedback, and train the model using RLHF. By following these steps and experimenting with different training techniques, you can create more advanced and intelligent chatbots and language models.
