Tag: ShortTerm

Raiders Eye Jameis Winston as Short-Term QB Solution in 2023

The Las Vegas Raiders, currently lacking a starting quarterback, are eyeing free agent Jameis Winston as a potential solution. With options like Aidan O’Connell and Gardner Minshew deemed insufficient as starters, the Raiders may need to consider stopgap solutions. Winston, the former No. 1 pick, brings both a dynamic presence and significant turnover risks, making him a possible fit for the Raiders’ rebuilding phase. Analysts suggest that his strong arm and leadership could help energize a team that has struggled in recent seasons.

By the Numbers

- Jameis Winston threw 13 touchdowns and 12 interceptions in 12 games with the Browns this season.

- He was the No. 1 overall pick in the 2015 NFL Draft and made the Pro Bowl as a rookie.

State of Play

- The Raiders are hiring Buccaneers assistant general manager John Spytek, who previously worked with Winston.

- Winston is viewed as a viable one-year option amid uncertainty around the Raiders’ potential draft plans.

What’s Next

If agreed upon, Winston’s signing could indicate that the Raiders are willing to prioritize a capable veteran while they rebuild, potentially leading to increased competition among their quarterbacks.

Bottom Line

Jameis Winston could provide a much-needed spark for the Raiders, but his turnover history may complicate his path to becoming a starting quarterback. Ultimately, he represents a pragmatic option during a pivotal rebuild.

The Las Vegas Raiders are reportedly eyeing Jameis Winston as a short-term quarterback solution for the 2023 season. With Derek Carr set to become a free agent after the 2022 season, the Raiders are exploring their options to fill the quarterback position.

Winston, who is currently a backup for the New Orleans Saints, has shown flashes of potential throughout his career. He has experience as a starting quarterback with the Tampa Bay Buccaneers, where he threw for over 5,000 yards in a single season.

The Raiders are looking for a proven quarterback who can step in and lead the team while they evaluate their long-term options. Winston could provide the stability and experience needed to keep the Raiders competitive in the tough AFC West division.

While nothing is set in stone, the Raiders are reportedly interested in Winston as a potential short-term solution. It will be interesting to see how this situation unfolds as the 2023 season approaches. Stay tuned for more updates on the Raiders’ quarterback situation.

Tags:

- Jameis Winston

- Raiders

- Short-term QB solution

- NFL

- Las Vegas Raiders

- 2023

- Quarterback

- Free agent

- Jameis Winston news

- NFL rumors

#Raiders #Eye #Jameis #Winston #ShortTerm #Solution

As Jimmy Butler trade rumors swirl, Mat Ishbia and Suns keep chasing short-term highs

Say this for the Phoenix Suns: They have one of the NBA’s most creative front offices when it comes to finding new and different ways in which to mortgage their future. Never mind that this strategy hit its peak two years ago and it has long since been time to turn this ship around; they’re still plowing full steam ahead and throwing lifejackets overboard as they go.

Sorry, I was in the midst of complimenting the Suns before I got sidetracked. In their way, Phoenix made a creative trade on Tuesday by sending an unprotected 2031 first-round pick to the Utah Jazz in return for three other first-round picks in 2025, 2027 and 2029. These picks aren’t likely to be nearly as valuable, and I’ll explain why in a minute. But in essence, the Suns broke a dollar bill into three quarters to improve their immediate trade flexibility, and The Athletic reported late Tuesday that there is rising optimism that Miami Heat star Jimmy Butler is closer to reaching his desired destination — Phoenix — as a result.

Of course, it’s that same impulsive habit under owner Mat Ishbia — chasing short-term sugar highs while burning the future to the ground — that motivates teams like Utah to enthusiastically participate in these deals.

In the last 18 months, the Brooklyn Nets, Houston Rockets, Memphis Grizzlies, Washington Wizards, Orlando Magic and Utah Jazz all have made bets of some size that the Suns will be terrible between 2026 and 2031. So far, so good: It’s early 2025, and Phoenix is an old, average team with zero cap flexibility and few draft assets.

The thing about having three quarters instead of a dollar bill, however, is that you can give one quarter to one team and one quarter to another team. The Suns essentially split the baby on their most valuable (not to mention only) remaining asset, that 2031 pick. The obvious way that might matter is if they are involved in a multi-team trade that requires them to send draft capital to two different teams.

In this particular case, it also allows the Suns to sidestep the Stepien Rule, named for former Cavaliers owner Ted Stepien, who had a penchant for trading all his draft picks and leaving the team high and dry for the future. I can’t think of any other recent examples of that.

The Stepien Rule prevents teams from trading first-round picks in consecutive years by requiring that they have at least one pick certain to convey in every two-year window. However, the loophole for Phoenix is that it doesn’t have to be a team’s own picks. (Side note: We’re definitely getting an “Ishbia Rule” at some point in the next two collective bargaining agreements.)

Thus, having already traded their firsts in 2025, 2027 and 2029, and pick swaps in 2026, 2028 and 2030, the Suns couldn’t trade any future firsts aside from that 2031 choice. The picks they got from Utah will likely be at the back end of the first round — the worst of Cleveland or Minnesota’s pick in 2025 (so, likely 29th or 30th) and the worst of Cleveland, Utah or Minnesota’s in 2027 and 2029.

Sidesteppin’ Stepien means everything is back on the table now. The Suns can trade one or more of their swapped picks in 2026, 2028 and 2030, or they can trade one or more of the new picks they got from Utah in 2025, 2027 and 2029. They still can’t move picks in consecutive years, but Phoenix could conceivably mix and match and, for example, trade its swapped pick in 2026 and the pick it received in the Utah trade in 2029.

I bring this up because it could matter for trades that don’t involve Butler. As in, the Suns could send out Jusuf Nurkić and a pick in one trade to get something back, and Grayson Allen and a pick in another trade to get something back.

It’s just hard to believe that’s the actual reason they’re doing this — for two reasons. First, no team, no matter how badly run, is going to make a trade like this and then just say, “Well, now maybe let’s see what we can do?”

They already know the answer. You’re not doing a trade like this on spec; you’re doing it to satisfy a particular need that has already been communicated by another trade partner.

Second, Phoenix probably wouldn’t do this unless it was doing something big, because this is the Suns’ last chip. I can’t emphasize this enough since the Suns keep coming up with deals to squeeze more out of their diminishing draft-pick stock: This is where it ends.

Suns owner Mat Ishbia poses for a photo with Devin Booker, Kevin Durant and Bradley Beal before the start of the 2023-24 season. (Mark J. Rebilas / USA Today Sports)

No, they can’t rinse, lather and repeat a year from now. Because of repeatedly going over the CBA’s second apron, the Suns’ 2032 pick will be frozen and they can’t trade it. Ditto for every pick after that until they get their payroll under control.

Sure, they’ll likely trade their 2032 second-rounder within minutes of gaining access to it, but it’s not going to bring back much. The same goes for trading “swaps of swaps” to get access to more seconds, especially now that they’ve already done this on three different picks.

At this point all roads lead to Butler, obviously, given that he is the one glittery, shiny object on the trade market, and the Ishbia-era Suns cannot resist shiny objects. The fact that Phoenix went through with this Utah trade is a sign we’re getting warm, and not necessarily on a two-team deal.

Most notably, a trade involving Bradley Beal going to Milwaukee, Butler going to Phoenix and at least one other team being involved besides Miami, seems highly plausible, based both on reporting by my intrepid colleagues at The Athletic and the common sense of looking at a cap sheet.

The logistics are hairy but not insurmountable: The Bucks have to send out at least $58 million in salary to take back Beal’s $50.2 million salary and stay below the second apron once they backfill the roster for all the empty slots on what is likely a four-for-one or five-for-one deal. Beal would also have to waive his no-trade clause; presumably the teams involved would ascertain whether this was a realistic possibility before marching headlong into a deal.

If we assume Giannis Antetokounmpo, Damian Lillard and Brook Lopez are off limits, getting to $58 million basically requires the inclusion of Khris Middleton, Bobby Portis, Pat Connaughton and two other low-salary players, possibly MarJon Beauchamp and Chris Livingston. Backfilling the roster with three minimum deals and keeping the roster at 14 the rest of the season would leave the Bucks about a half million dollars below the threshold.

This is where the deal likely takes some time to get to the finish line: All that salary flotsam has to go somewhere, and it’s not in particularly high demand. Multiple teams would likely get involved, and the Heat might end up with only one or two of those Bucks mentioned above. (One interesting sidebar, for instance: Could the Suns possibly stuff a Nurkić-for-Portis sidebar into it? Seems unlikely, but surely they would ask.) From Miami’s perspective, most notably, a deal that ends up with the Heat under the luxury tax is probably a lot more palatable given the fairly minimal draft compensation likely coming their way; a Butler-for-Middleton swap gets them there, but the other Bucks would have to go elsewhere.

And that, in turn, is likely why the Suns made their trade in the first place. A two-team deal with Miami wouldn’t require them to break their bill into coins like this; a multi-team trade, however, likely compensates Miami with one or two of the firsts and then sends the other(s) to compensate other teams for taking unwanted contracts.

Either way, we’ll end up where every Suns deal ends up: They’ll be slightly more competitive in the short term, but they’re Stepien even deeper into the abyss in the long term. I say “long term,” but that doom cycle is basically 24 months away even if everything breaks right, and very possibly more like four months.

Butler, or some other star, would help win a few more games this year, but it won’t change the Suns’ overarching reality: Their best player is 36, they have no draft picks, they have no good young players, and they can’t sign any free agents above the minimum.

Basically, the fields have been salted through 2031. All that’s left to do now is starve. No wonder everybody wants to trade for Phoenix’s picks.

(Top photo of Jimmy Butler, Bradley Beal and Kevin Durant: Megan Briggs / Getty Images)

The NBA world is abuzz with rumors of a potential trade involving star player Jimmy Butler, but for Mat Ishbia and the Phoenix Suns, the focus remains on chasing short-term highs.

As the Suns continue to push for a playoff spot and seek to build a competitive team for the future, Ishbia and the front office are keeping their eyes on the prize. While the allure of bringing in a player like Butler is tempting, the team is staying true to its long-term vision of building a sustainable and successful franchise.

With a solid core of young talent and a bright future ahead, the Suns are confident in their ability to compete at a high level without making any drastic moves. While trade rumors may swirl, Ishbia and the Suns are focused on staying the course and building a team that can contend for championships for years to come.

So as the speculation continues to mount, one thing is certain: Mat Ishbia and the Suns are committed to chasing short-term highs while keeping their eyes on the ultimate goal of sustained success.

Tags:

- Jimmy Butler trade rumors

- Mat Ishbia

- Phoenix Suns

- Short-term highs

- NBA trade rumors

- Basketball news

- NBA trades

- NBA rumors

- Jimmy Butler trade updates

- Mat Ishbia news

#Jimmy #Butler #trade #rumors #swirl #Mat #Ishbia #Suns #chasing #shortterm #highs

Jonjo Shelvey: Burnley sign former England midfielder on short-term deal

Burnley have signed former Newcastle United and England midfielder Jonjo Shelvey until the end of the season.

The 32-year-old joins following the expiration of his contract with Turkish Super Lig club Eyupspor.

An England international with five caps, Shelvey also played in Turkey with Caykur Rizespor and arrives at Turf Moor having been on trial with the Championship club this month.

“I like the club as a whole – every time I have played against Burnley at Turf Moor it’s been tough,” he said.

“I’m part of this team now and my aim is to do my best to help this club get back into the Premier League, where it belongs.”

Having come through Charlton’s academy, he went on to join Liverpool and made 65 appearances for the Reds before joining Swansea City.

He spent three years with the Swans and then signed for Newcastle United in 2016 where he helped them win promotion from the Championship in his first full season.

After seven years at Newcastle, Shelvey joined Nottingham Forest before leaving for Turkey in September 2023.

He joins a Burnley side third in the Championship table and two points off the automatic promotion places.

Burnley FC have made a surprise move in the transfer market by signing former England midfielder Jonjo Shelvey on a short-term deal. The 32-year-old, who was a free agent after his contract with Turkish club Eyupspor expired, has joined the Clarets until the end of the current campaign.

Shelvey, who has represented England at senior level, brings a wealth of Premier League experience to the Championship side. Known for his passing range and ability to dictate play from midfield, the midfielder will be looking to make an immediate impact at Turf Moor.

Burnley fans will be hoping that Shelvey can provide a creative spark in the middle of the park and help the team climb up the table in the second half of the season. With his vision and technique, the former Liverpool and Swansea City player could prove to be a shrewd signing for the Lancashire club.

It remains to be seen whether Shelvey will be able to rediscover his best form in a Burnley shirt, but one thing is for certain – his arrival has certainly added some excitement to the club’s January transfer window. Welcome to Turf Moor, Jonjo Shelvey! #BurnleyFC #JonjoShelvey #TransferNews

Tags:

Jonjo Shelvey, Burnley, former England midfielder, short-term deal, transfer news, Premier League, football signing, free agent, Sean Dyche, Turf Moor, EFL Championship, Jonjo Shelvey news.

#Jonjo #Shelvey #Burnley #sign #England #midfielder #shortterm #deal

Exploring the Power of Long Short-Term Memory (LSTM) in Neural Networks

In the realm of artificial intelligence and machine learning, neural networks have become a powerful tool for solving complex problems. One type of neural network that has gained significant attention in recent years is the Long Short-Term Memory (LSTM) network.

LSTM networks are a type of recurrent neural network (RNN) that is specifically designed to handle long-term dependencies in data sequences. Traditional RNNs have difficulty in learning long-range dependencies due to the vanishing gradient problem, where gradients become extremely small as they are propagated back through time. This makes it difficult for the network to remember information from earlier time steps.
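The shrinking effect can be seen in a minimal sketch. It assumes a toy scalar recurrence `h_t = tanh(w * h_{t-1})`, which is an illustration rather than a real network; the function name and the values of `w` and `h0` are hypothetical.

```python
import math

def gradient_through_time(w, h0, steps):
    """Return |d h_T / d h_0| for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return abs(grad)

short_range = gradient_through_time(w=0.9, h0=0.5, steps=5)
long_range = gradient_through_time(w=0.9, h0=0.5, steps=50)
print(short_range, long_range)
```

Each step multiplies the gradient by a factor smaller than one, so the signal from 50 steps back is far weaker than the signal from 5 steps back.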

LSTM networks address this issue by introducing a more complex architecture that includes memory cells, input and output gates, and forget gates. These components work together to selectively remember or forget information over time, allowing the network to capture long-range dependencies in data sequences.

One of the key advantages of LSTM networks is their ability to learn and remember patterns in sequential data, making them well-suited for tasks such as speech recognition, language translation, and time series forecasting. For example, LSTM networks have been used to achieve state-of-the-art performance in speech recognition systems, where the network must process audio signals over time to accurately transcribe spoken words.

In addition to their ability to capture long-term dependencies, LSTM networks also have the advantage of being able to handle variable-length sequences of data. This makes them highly flexible and applicable to a wide range of tasks where the length of input sequences may vary.
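In practice, sequences of different lengths are often batched by padding them to a common length and keeping a mask that marks the real entries. The sketch below shows one way this might look; `pad_sequences` is a hypothetical helper written for this example, not a call into any particular library.

```python
def pad_sequences(sequences, pad_value=0.0):
    """Pad each sequence to the longest length and return (padded, mask)."""
    max_len = max(len(seq) for seq in sequences)
    padded, mask = [], []
    for seq in sequences:
        n_pad = max_len - len(seq)
        padded.append(list(seq) + [pad_value] * n_pad)
        # 1 marks a real time step, 0 marks padding to be ignored
        mask.append([1] * len(seq) + [0] * n_pad)
    return padded, mask

batch = [[0.1, 0.2, 0.3], [0.5], [0.7, 0.8]]
padded, mask = pad_sequences(batch)
```

The mask lets a training loop skip the padded steps when computing the loss.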

Overall, LSTM networks have proven to be a powerful tool in the field of neural networks, enabling the development of sophisticated models that can learn from and make predictions on sequential data. As research in this area continues to evolve, we can expect to see even more advanced applications of LSTM networks in various domains, further showcasing their power and versatility in solving complex problems.

#Exploring #Power #Long #ShortTerm #Memory #LSTM #Neural #Networks,lstm

Understanding Long Short-Term Memory Networks (LSTMs): A Comprehensive Guide

In recent years, Long Short-Term Memory Networks (LSTMs) have become one of the most popular types of recurrent neural networks used in the field of deep learning. LSTMs are particularly effective in handling sequences of data, such as time series data or natural language data, where traditional neural networks struggle to capture long-term dependencies.

LSTMs were introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997 as a solution to the vanishing gradient problem that plagues traditional recurrent neural networks. The vanishing gradient problem occurs when gradients become too small during backpropagation, making it difficult for the network to learn long-range dependencies. LSTMs address this issue by introducing a more powerful mechanism to retain and selectively forget information over time.

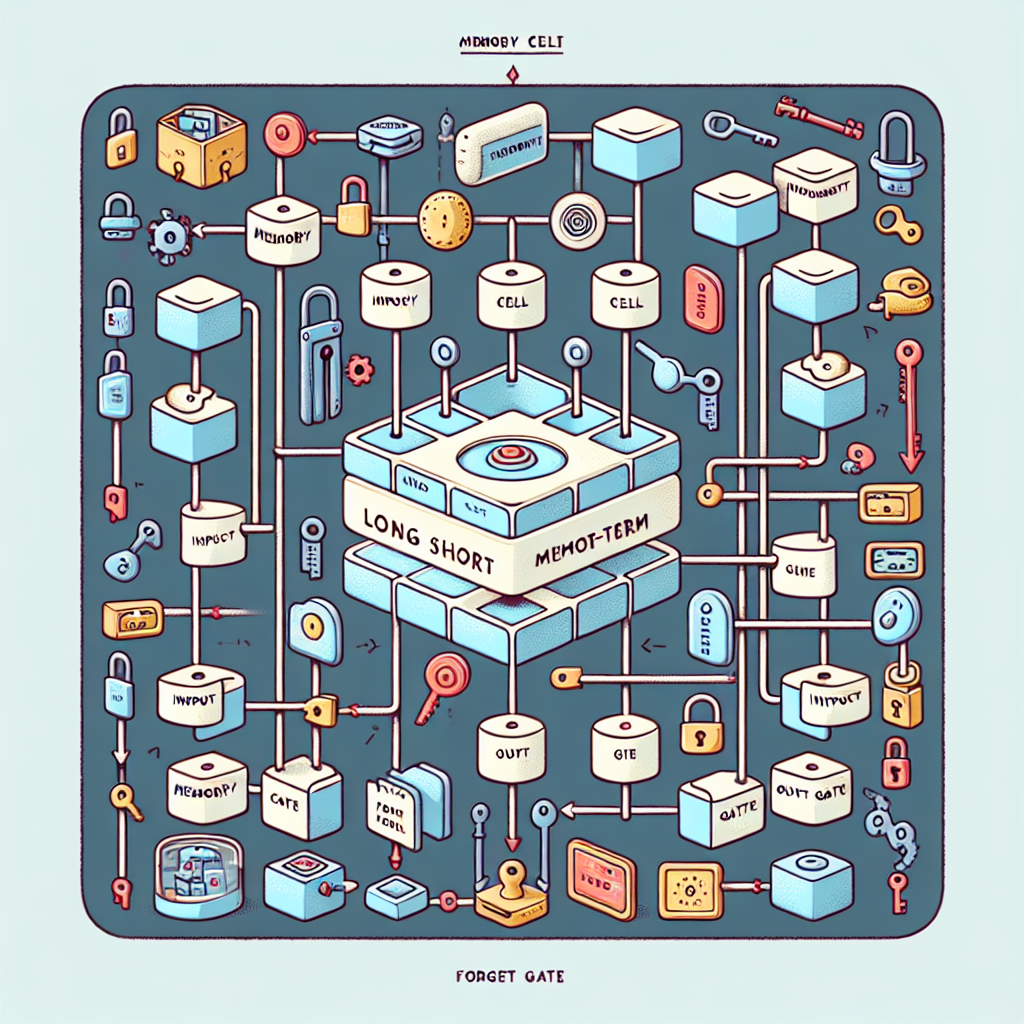

At the core of an LSTM network are memory cells, which are responsible for storing and updating information over time. Each memory cell contains three main components: an input gate, a forget gate, and an output gate. These gates control the flow of information into and out of the cell, allowing the network to selectively remember or forget information as needed.

The input gate determines how much new information should be added to the memory cell, based on the current input and the previous hidden state. The forget gate controls how much of the previous memory cell state should be retained or discarded. Finally, the output gate determines how much of the updated memory state is exposed as the cell's output, again based on the current input and the previous hidden state.
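The three gates can be sketched for a single scalar cell as follows. This is a minimal illustration under the commonly used LSTM formulation, not a library implementation; `lstm_step`, the `params` dictionary and its weight values are hypothetical names chosen for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell. `p` maps gate names to (w_x, w_h, b)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))         # how much new information to write
    f = sigmoid(pre("forget"))        # how much of the old cell state to keep
    o = sigmoid(pre("output"))        # how much of the cell state to expose
    g = math.tanh(pre("candidate"))   # candidate values to write
    c = f * c_prev + i * g            # updated cell state
    h = o * math.tanh(c)              # new hidden state (the cell's output)
    return h, c

# Hypothetical weights: every gate uses w_x = 0.5, w_h = 0.5, b = 0.0
params = {name: (0.5, 0.5, 0.0) for name in ("input", "forget", "output", "candidate")}
h, c = lstm_step(x=1.0, h_prev=0.0, c_prev=0.0, p=params)
```

Real layers use weight matrices and vectors instead of scalars, but the update follows the same pattern.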

One of the key advantages of LSTMs is their ability to capture long-term dependencies in sequential data. Because of their gated structure, LSTMs are able to learn when to remember or forget information over time, making them well-suited for tasks such as speech recognition, machine translation, and sentiment analysis.

Training an LSTM network involves optimizing the parameters of the network using backpropagation through time, a variant of the standard backpropagation algorithm that takes into account the sequential nature of the data. During training, the network learns to adjust the weights of the gates in order to minimize the error between the predicted output and the ground truth.

In conclusion, Long Short-Term Memory Networks (LSTMs) are a powerful tool for modeling sequential data and capturing long-term dependencies. By incorporating memory cells with gated structures, LSTMs are able to selectively retain and forget information over time, making them well-suited for a wide range of tasks in deep learning. Understanding the inner workings of LSTMs can help researchers and practitioners harness the full potential of these networks in their own projects.

#Understanding #Long #ShortTerm #Memory #Networks #LSTMs #Comprehensive #Guide,lstm

Challenges and Advances in Training Long Short-Term Memory Networks

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) that have been widely used in various applications such as speech recognition, natural language processing, and time series prediction. These networks are designed to capture long-term dependencies in sequential data by incorporating a memory cell that can maintain information over long periods of time.

Despite their effectiveness in capturing long-term dependencies, training LSTM networks can be challenging due to several factors. One of the main challenges is the vanishing gradient problem, where gradients become very small during backpropagation, leading to slow convergence or even preventing the network from learning long-term dependencies effectively. The LSTM's gated cell state reduces this problem but does not remove it, and the opposite failure, exploding gradients, can also occur. Techniques proposed to stabilize training include gradient clipping (which limits exploding rather than vanishing gradients), normalization methods such as batch normalization, and different activation functions like the rectified linear unit (ReLU).
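Gradient clipping, mentioned above, can be sketched in a few lines. It rescales the whole gradient vector when its norm exceeds a threshold, so it caps overly large updates rather than enlarging small ones. The function name and the threshold are assumptions made for this example.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale `grads` so that their combined L2 norm is at most `max_norm`."""
    total = math.sqrt(sum(g * g for g in grads))
    if total <= max_norm:
        return list(grads)
    scale = max_norm / total
    return [g * scale for g in grads]

# The norm of [3, 4] is 5, so both entries are scaled by 1/5
clipped = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
# Gradients already within the threshold pass through unchanged
unchanged = clip_by_global_norm([0.3, 0.4], max_norm=1.0)
```

The direction of the gradient is preserved; only its length changes.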

Another challenge in training LSTM networks is overfitting, where the model performs well on the training data but fails to generalize to unseen data. Regularization techniques such as dropout, L2 regularization, and early stopping can help prevent overfitting and improve the generalization performance of LSTM networks.
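As a rough sketch of early stopping, the function below scans a list of validation losses and reports the epoch at which training would halt. The `patience` parameter and the loss values are hypothetical; a real training loop would compute the losses as it goes.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch at which training would stop."""
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            bad_epochs = 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch
    return len(val_losses) - 1

# Validation loss improves for three epochs, then stalls
stop = early_stopping_epoch([0.9, 0.7, 0.6, 0.65, 0.64, 0.7], patience=2)
```

Here training stops after two consecutive epochs without a new best validation loss.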

In recent years, several advances have been made in training LSTM networks to address these challenges and improve their performance. One such advance is the use of attention mechanisms, which allow the network to focus on relevant parts of the input sequence while ignoring irrelevant information. This can help improve the network’s ability to capture long-term dependencies and make more accurate predictions.
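One simple form of attention, dot-product attention over a list of hidden states, can be sketched as below. This is an illustrative assumption about how the mechanism might be wired up; the function names, the query vector and the hidden states are all made up for the example.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, hidden_states):
    """Weight each hidden state by its dot-product similarity to the query."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in hidden_states]
    weights = softmax(scores)
    dim = len(query)
    context = [
        sum(w * state[d] for w, state in zip(weights, hidden_states))
        for d in range(dim)
    ]
    return context, weights

states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context, weights = attend([2.0, 0.0], states)
```

The states that align with the query receive larger weights, so they contribute more to the context vector.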

Another advance in training LSTM networks is the use of larger and deeper architectures, such as stacked LSTM layers or bidirectional LSTM networks. These architectures can capture more complex patterns in the data and improve the network’s performance on challenging tasks.

Furthermore, the use of more advanced optimization algorithms such as Adam, RMSprop, and Nadam can help accelerate the training process and improve the convergence of LSTM networks.
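To make the optimizer idea concrete, here is a minimal sketch of a single Adam update for one scalar parameter, using the default hyperparameters commonly quoted for Adam. The function `adam_step` and the toy objective `f(x) = x^2` are assumptions for illustration only.

```python
import math

def adam_step(param, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter; t is the 1-based step count."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

# Minimize f(x) = x^2 (gradient 2x) starting from x = 1.0
x, m, v = 1.0, 0.0, 0.0
for t in range(1, 501):
    x, m, v = adam_step(x, 2 * x, m, v, t, lr=0.01)
```

Because the step is normalized by the running gradient magnitude, early updates have a size close to the learning rate regardless of the gradient's scale.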

Overall, while training LSTM networks can be challenging due to issues such as the vanishing gradient problem and overfitting, recent advances in techniques and algorithms have helped improve the performance of these networks and make them more effective in capturing long-term dependencies in sequential data. By leveraging these advances, researchers and practitioners can continue to push the boundaries of what LSTM networks can achieve in various applications.

#Challenges #Advances #Training #Long #ShortTerm #Memory #Networks,rnn

A Beginner’s Guide to Long Short-Term Memory (LSTM) Networks

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network that is well-suited for sequence prediction problems, such as speech recognition, language modeling, and time series forecasting. LSTMs are designed to capture long-range dependencies in sequences by maintaining an internal memory state that can be updated and manipulated over time.

If you’re new to the world of deep learning and want to learn more about LSTM networks, this beginner’s guide is for you. In this article, we’ll cover the basics of LSTM networks, how they work, and how you can implement them in your own projects.

Understanding LSTM Networks

At their core, LSTM networks are composed of a series of LSTM cells that are connected in a chain-like fashion. Each LSTM cell has three main components: an input gate, a forget gate, and an output gate. These gates control the flow of information into and out of the cell, allowing the network to learn and remember important patterns in the input data.

The input gate determines how much of the new input data should be stored in the cell’s memory, while the forget gate decides which information to discard from the memory state. Finally, the output gate regulates how much of the memory state should be passed on to the next cell or output layer.
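For readers who prefer equations, the gates are commonly written as below. The notation is an assumption on my part rather than something fixed by this guide: $x_t$ is the input, $h_{t-1}$ the previous hidden state, $c_t$ the memory state, $\sigma$ the logistic sigmoid, $\odot$ element-wise multiplication, and $W$, $U$, $b$ learned weights and biases.

```latex
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{(input gate)} \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{(forget gate)} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{(output gate)} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{(candidate memory)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(memory update)} \\
h_t &= o_t \odot \tanh(c_t) && \text{(output)}
\end{aligned}
```

The memory update is additive: old content is scaled by the forget gate and new content is added on top, which is what lets information persist across many steps.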

Training an LSTM Network

To train an LSTM network, you need to provide it with a sequence of input data and the corresponding target output sequence. The network then learns to predict the next element in the sequence based on the patterns it has observed in the training data.

During training, the network adjusts the weights of the gates and the memory state to minimize the difference between its predictions and the ground truth labels. This process is known as backpropagation through time, where the network’s error is propagated back through the sequence to update the parameters of the LSTM cells.
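Backpropagation through time can be sketched on a toy model. To keep the example short it uses a scalar vanilla recurrence `h_t = tanh(w * h_{t-1} + x_t)` rather than a full LSTM, with a squared-error loss on the final state; all names and values are hypothetical. The result is compared against a finite-difference estimate as a sanity check.

```python
import math

def forward(w, xs, h0=0.0):
    """Run the recurrence and return all hidden states, including h0."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w * hs[-1] + x))
    return hs

def loss(w, xs, y):
    return 0.5 * (forward(w, xs)[-1] - y) ** 2

def bptt_grad(w, xs, y):
    """Gradient of the loss with respect to the shared weight w."""
    hs = forward(w, xs)
    dh = hs[-1] - y                       # dL/dh_T
    dw = 0.0
    for t in range(len(xs), 0, -1):
        da = dh * (1.0 - hs[t] ** 2)      # back through tanh
        dw += da * hs[t - 1]              # w is shared across all time steps
        dh = da * w                       # pass the error back to h_{t-1}
    return dw

xs, y, w = [0.5, -0.3, 0.8], 0.2, 0.7
analytic = bptt_grad(w, xs, y)
eps = 1e-6
numeric = (loss(w + eps, xs, y) - loss(w - eps, xs, y)) / (2 * eps)
```

The key point is that the same weight appears at every time step, so its gradient is the sum of the contributions from each step.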

Implementing LSTM Networks

Implementing an LSTM network in code is relatively straightforward using deep learning libraries such as TensorFlow or PyTorch. These libraries provide high-level APIs that allow you to define the architecture of your network, train it on your data, and make predictions using the trained model.

To get started with LSTM networks, you can follow online tutorials and documentation provided by the deep learning community. These resources typically include example code snippets and datasets that you can use to practice building and training your own LSTM models.

In conclusion, Long Short-Term Memory (LSTM) networks are a powerful tool for sequence prediction tasks in deep learning. By understanding the basics of how LSTM networks work and how to implement them in code, you can start experimenting with your own projects and explore the capabilities of this advanced neural network architecture.

#Beginners #Guide #Long #ShortTerm #Memory #LSTM #Networks,lstm

The Role of Long Short-Term Memory (LSTM) in Enhancing Recurrent Neural Networks

Recurrent Neural Networks (RNNs) have been widely used in various applications such as language modeling, speech recognition, and time series prediction. However, traditional RNNs suffer from the vanishing gradient problem, which makes it difficult for them to capture long-range dependencies in sequential data. This limitation has led to the development of Long Short-Term Memory (LSTM) networks, which have been shown to significantly enhance the performance of RNNs in handling sequential data.

LSTM networks were first introduced by Hochreiter and Schmidhuber in 1997 as a solution to the vanishing gradient problem in RNNs. The key innovation of LSTM networks is the introduction of a memory cell that can store information over long periods of time. This memory cell is controlled by three gates: the input gate, the forget gate, and the output gate. The input gate regulates the flow of new information into the cell, the forget gate controls the retention of information in the cell, and the output gate determines the output of the cell.

By using these gates, LSTM networks are able to selectively remember or forget information over long sequences, allowing them to capture long-range dependencies in sequential data. This makes LSTM networks particularly effective in tasks such as speech recognition, where the context of previous words is crucial for understanding the current word.

In addition to handling long-range dependencies, LSTM networks also have the ability to learn complex patterns in sequential data. This is because the memory cell in LSTM networks can store different types of information separately, allowing the network to learn multiple levels of abstraction in the data.

Furthermore, LSTM networks can be trained to make predictions from incomplete sequences, for example by continuing a sequence from a partial prefix. Training for this commonly uses a technique called teacher forcing, where the network predicts the next element in a sequence while being fed the true previous elements as input, rather than its own earlier predictions. This keeps early mistakes from compounding during training; at prediction time the network instead consumes its own outputs to extend the sequence.
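Teacher forcing can be sketched with a toy predictor. In the example below, `model` is a hypothetical and deliberately imperfect function standing in for a trained network; the point is only to contrast feeding the true previous element with feeding the model's own previous prediction.

```python
def teacher_forcing_pairs(sequence):
    """Inputs are the true elements 0..n-2; targets are the true elements 1..n-1."""
    return list(zip(sequence[:-1], sequence[1:]))

def rollout(model, first, steps, targets=None):
    """Generate `steps` predictions.

    With `targets`, the ground truth is fed back in (teacher forcing);
    without it, the model's own predictions are fed back in (free running).
    """
    preds, x = [], first
    for t in range(steps):
        y = model(x)
        preds.append(y)
        x = targets[t] if targets is not None else y
    return preds

def model(x):
    # Hypothetical predictor that overshoots the true "+1" pattern by 0.5
    return x + 1.5

seq = [0, 1, 2, 3]
pairs = teacher_forcing_pairs(seq)
forced = rollout(model, seq[0], 3, targets=seq[1:])
free = rollout(model, seq[0], 3)
```

With teacher forcing the error stays at 0.5 on every step, while in the free-running rollout it grows as each prediction builds on the previous one.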

Overall, LSTM networks play a crucial role in enhancing the performance of RNNs in handling sequential data. By addressing the vanishing gradient problem and enabling the capture of long-range dependencies, LSTM networks have significantly improved the capabilities of RNNs in various applications. Their ability to learn complex patterns and make predictions based on incomplete data further demonstrates their effectiveness in handling sequential data. With their unique architecture and capabilities, LSTM networks are expected to continue to play a key role in advancing the field of deep learning and artificial intelligence.

#Role #Long #ShortTerm #Memory #LSTM #Enhancing #Recurrent #Neural #Networks,rnn

A Beginner’s Guide to LSTM: Understanding Long Short-Term Memory Networks

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) that are designed to overcome the limitations of traditional RNNs in capturing long-term dependencies in sequential data. LSTM networks have gained popularity in recent years due to their ability to effectively model and predict sequences in various domains such as language modeling, speech recognition, and time series forecasting.

If you are new to LSTM networks and want to understand how they work, this beginner’s guide will provide you with a comprehensive overview of LSTM networks and their key components.

1. What is an LSTM Network?

LSTM networks are a special type of RNN that are able to learn long-term dependencies in sequential data by maintaining a memory of past inputs and selectively updating and forgetting information over time. This memory mechanism allows LSTM networks to effectively capture patterns and relationships in sequences that traditional RNNs struggle to learn.

2. Key Components of an LSTM Network

An LSTM network consists of several key components, including:

– Cell State: The cell state is the memory of the LSTM network and is responsible for storing and passing information across time steps. Information can be added to or removed from the cell state through a series of gates that control the flow of information.

– Input Gate: The input gate regulates the flow of new information into the cell state by controlling how much of the new input should be added to the cell state.

– Forget Gate: The forget gate controls which information in the cell state should be discarded or forgotten. This gate helps the LSTM network to selectively remember or forget past inputs based on their relevance.

– Output Gate: The output gate determines which information in the cell state should be passed on to the next time step as the output of the LSTM network.
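How these components let the cell state hold a value over many time steps can be illustrated with a small sketch. It applies only the cell-state update `c_t = f_t * c_{t-1} + i_t * g_t` with hand-picked gate values; in a trained network the gates would be computed from the input, so the numbers here are purely hypothetical.

```python
def run_cell_state(c0, gates):
    """Apply c_t = f_t * c_{t-1} + i_t * g_t for a list of (f, i, g) triples."""
    c = c0
    history = [c]
    for f, i, g in gates:
        c = f * c + i * g
        history.append(c)
    return history

# First write 0.8 into the cell, then hold it for 20 steps:
# a forget gate of 1 keeps the old state, an input gate of 0 blocks new input.
write = [(0.0, 1.0, 0.8)]
hold = [(1.0, 0.0, 0.5)] * 20
kept = run_cell_state(0.0, write + hold)[-1]

# For contrast, a forget gate of 0.5 on every step erases the stored value.
decayed = run_cell_state(0.0, write + [(0.5, 0.0, 0.5)] * 20)[-1]
```

With the forget gate open and the input gate closed, the stored value survives unchanged; with a half-open forget gate it fades to almost nothing.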

3. Training and Tuning an LSTM Network

Training an LSTM network involves optimizing the network’s parameters (weights and biases) to minimize a loss function that measures the difference between the predicted output and the ground truth. This process typically involves backpropagation through time (BPTT) to update the network’s parameters based on the gradient of the loss function.

To improve the performance of an LSTM network, hyperparameters such as the number of hidden units, learning rate, and sequence length can be tuned through experimentation and validation on a separate validation set. Regularization techniques such as dropout and batch normalization can also be used to prevent overfitting and improve generalization.

4. Applications of LSTM Networks

LSTM networks have been successfully applied to a wide range of tasks, including:

– Language Modeling: LSTM networks are commonly used to generate text, translate languages, and perform sentiment analysis.

– Speech Recognition: LSTM networks are used in speech recognition systems to transcribe spoken words into text.

– Time Series Forecasting: LSTM networks are effective in predicting future values of time series data such as stock prices, weather patterns, and sales figures.
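For the forecasting case, a common preparation step is to slice a series into fixed-length input windows paired with the value that follows each window. The helper below is a hypothetical sketch of that step, and the sales figures are made up.

```python
def make_windows(series, window):
    """Split a series into (input window, next value) training pairs."""
    pairs = []
    for start in range(len(series) - window):
        inputs = series[start:start + window]
        target = series[start + window]
        pairs.append((inputs, target))
    return pairs

sales = [10, 12, 13, 15, 18]
pairs = make_windows(sales, window=3)
```

Each pair gives the network three past values as input and the next value as the target to predict.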

In conclusion, LSTM networks are a powerful tool for modeling and predicting sequential data with long-term dependencies. By understanding the key components and training principles of LSTM networks, beginners can start to explore the potential applications of this advanced neural network architecture in various domains.

#Beginners #Guide #LSTM #Understanding #Long #ShortTerm #Memory #Networks,lstm

Understanding Long Short-Term Memory (LSTM) Networks in RNNs

Recurrent Neural Networks (RNNs) are a type of artificial neural network that is designed to handle sequential data, making them ideal for tasks like speech recognition, language translation, and time series prediction. One widely used RNN architecture is the Long Short-Term Memory (LSTM) network, which is specifically designed to address the vanishing gradient problem that can occur in traditional RNNs.

The vanishing gradient problem refers to the issue of gradients becoming increasingly small as they are backpropagated through the network, which can prevent the network from learning long-range dependencies in sequential data. This can be particularly problematic in tasks that require the model to remember information from earlier time steps, such as predicting the next word in a sentence or forecasting future stock prices.

LSTM networks were proposed in 1997 by Sepp Hochreiter and Jürgen Schmidhuber as a solution to the vanishing gradient problem. The key innovation of LSTM networks is the addition of a memory cell that can store information over long periods of time. The memory cell is controlled by three gates: the input gate, the forget gate, and the output gate.

The input gate controls how much new information is stored in the memory cell, the forget gate controls how much old information is discarded, and the output gate controls how much information is passed on to the next time step. By carefully regulating the flow of information through these gates, LSTM networks are able to learn long-range dependencies in sequential data more effectively than traditional RNNs.

In a traditional RNN, the basic unit is the simple neuron, which has a state that is updated at each time step based on the input and the previous state. In contrast, the basic unit in an LSTM network is the memory cell, which has a more complex structure that allows it to store and update information over time.
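The simple recurrent unit can be sketched in a few lines. This is a scalar illustration with hypothetical weights, not a trained model; it feeds a single non-zero input followed by zeros to show what happens to the state.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """Simple recurrent unit: the whole state is recomputed at every step."""
    return math.tanh(w_x * x + w_h * h_prev + b)

h = 0.0
states = []
for x in [1.0, 0.0, 0.0, 0.0]:
    h = rnn_step(x, h, w_x=1.0, w_h=0.5, b=0.0)
    states.append(h)
```

With these weights the trace of the first input shrinks at every later step, because the unit has no gate that can protect its state from being overwritten.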

LSTM networks have been widely used in a variety of applications, including speech recognition, natural language processing, and time series forecasting. They have been shown to outperform traditional RNNs on tasks that require learning long-range dependencies, making them a valuable tool for researchers and practitioners working with sequential data.

In conclusion, understanding Long Short-Term Memory (LSTM) networks is essential for anyone working with RNNs and sequential data. By incorporating a memory cell with input, forget, and output gates, LSTM networks are able to overcome the vanishing gradient problem and learn long-range dependencies more effectively. As a result, LSTM networks have become a popular choice for tasks that require modeling sequential data, and are likely to remain an important tool in the field of deep learning for years to come.

#Understanding #Long #ShortTerm #Memory #LSTM #Networks #RNNs,rnn