Understanding Data Center Cabling: A Comprehensive Guide for IT Professionals

In today’s digital age, data centers play a crucial role in storing, processing, and managing vast amounts of information for businesses and organizations. The cabling infrastructure in these data centers is a critical component that ensures data is transmitted efficiently and reliably. Understanding data center cabling is essential for IT professionals to optimize performance and maintain uptime.

Data center cabling refers to the network of cables and connectors that connect servers, storage devices, networking equipment, and other hardware within a data center. The cabling infrastructure is responsible for carrying data between devices, ensuring communication between different components, and, in some cases such as Power over Ethernet, delivering power to devices.

There are several key factors to consider when designing and implementing data center cabling. These include the type of cables and connectors used, cable management, cable routing, and cable labeling. IT professionals must also consider factors such as data transmission speeds, compatibility with existing equipment, and future scalability when choosing cabling solutions.

Several types of cables and connectors are available for data center cabling. Some of the most common include:

– Copper cables: Copper cables are widely used in data centers for their affordability and reliability. They are best suited to short-distance connections. Twisted-pair copper such as Cat6a supports 10 Gbps at up to 100 meters, while Cat8 supports 25 or 40 Gbps over runs of up to about 30 meters.

– Fiber optic cables: Fiber optic cables are ideal for long-distance connections and high-speed data transmission. They are more expensive than copper cables but offer greater bandwidth and faster data transfer speeds.

– Ethernet cables: Twisted-pair Ethernet cables are a form of copper cabling commonly used for connecting networking equipment within a data center. They come in various categories, such as Cat5e, Cat6, Cat6a, and Cat7, each offering different data transmission speeds and performance.

Proper cable management is crucial in data centers to ensure cables are organized, secure, and easily accessible for maintenance and troubleshooting. IT professionals should use cable trays, racks, and cable ties to keep cables organized and prevent tangling. Proper cable routing is also essential to ensure cables are not bent beyond their minimum bend radius or crushed, which can degrade the signal and cause data loss.

In addition to cable management and routing, IT professionals should also label cables to easily identify connections and troubleshoot issues. Labeling cables with unique identifiers can save time and effort when tracing cables and making changes to the cabling infrastructure.
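One way to make labels unique and easy to trace is to encode both ends of a cable in its identifier. The sketch below assumes a hypothetical scheme built from rack, rack unit, and port at each end; the field names and the label format are illustrative choices, not a standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """One end of a cable (hypothetical fields: rack name, rack unit, port)."""
    rack: str
    unit: int
    port: int

    def label(self) -> str:
        # Zero-pad unit and port so labels sort and read consistently.
        return f"{self.rack}-U{self.unit:02d}-P{self.port:02d}"


def cable_label(a: Endpoint, b: Endpoint) -> str:
    """Build one label for a cable, identical no matter which end is read first."""
    first, second = sorted([a, b], key=lambda e: (e.rack, e.unit, e.port))
    return f"{first.label()}/{second.label()}"


server_end = Endpoint(rack="A01", unit=12, port=3)
switch_end = Endpoint(rack="B07", unit=40, port=24)
print(cable_label(switch_end, server_end))  # A01-U12-P03/B07-U40-P24
```

Sorting the two endpoints before joining them means the same cable gets the same label from either side, which helps when tracing a connection from an unknown end.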

Understanding data center cabling is essential for IT professionals to ensure optimal performance, reliability, and scalability of data center infrastructure. By choosing the right cables and connectors, implementing proper cable management and routing practices, and labeling cables effectively, IT professionals can build a robust cabling infrastructure that meets the demands of today’s data centers.

Understanding the Role of Cooling in Data Center Efficiency

In today’s digital age, data centers play a crucial role in storing and processing vast amounts of information. As the demand for data storage continues to grow, so does the need for efficient cooling systems in data centers. Understanding the role of cooling in data center efficiency is essential in ensuring that these facilities operate smoothly and effectively.

Cooling systems are a critical component of data center infrastructure, as they help to maintain the optimal operating temperature of the servers and other equipment housed within the facility. Without proper cooling, the heat generated by the servers can quickly build up, leading to overheating and potential equipment failure. This can result in costly downtime and data loss, making it imperative for data center operators to invest in efficient cooling solutions.

There are several factors that contribute to the efficiency of cooling systems in data centers. One key consideration is the design of the facility itself. Data centers are typically housed in large, purpose-built buildings that are equipped with sophisticated cooling systems, such as precision air conditioning units and containment solutions. These systems are designed to regulate the temperature and humidity levels within the facility, ensuring that the servers operate at their optimum performance levels.

Another important factor in data center cooling efficiency is the layout of the servers and other equipment within the facility. By arranging the servers in a way that promotes efficient airflow, data center operators can help to minimize hot spots and improve overall cooling efficiency. This can be achieved through the use of hot and cold aisle containment systems, as well as strategic placement of cooling units and air vents.

In addition to the physical layout of the data center, the type of cooling system used also plays a significant role in determining efficiency. There are several different types of cooling systems available for data centers, including air-based systems, water-based systems, and hybrid systems that combine both air and water cooling. Each of these systems has its own advantages and disadvantages, and the choice of system will depend on factors such as the size of the data center, the types of equipment being used, and the local climate.

It is also important for data center operators to regularly monitor and maintain their cooling systems to ensure optimal performance. This includes conducting regular inspections of the cooling equipment, cleaning air filters, and checking for any signs of wear or damage. By staying proactive in their maintenance efforts, data center operators can help to prevent costly downtime and ensure that their cooling systems continue to operate efficiently.
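As a rough illustration of routine monitoring, the sketch below flags sensor locations whose inlet temperature is above a limit. The sensor names and readings are made up, and the 27 °C default is an assumption taken from the upper end of the commonly cited ASHRAE recommended inlet range; the right limit depends on the equipment in use.

```python
# Assumed limit: upper end of the commonly cited ASHRAE recommended inlet range.
MAX_INLET_C = 27.0


def find_hot_spots(readings: dict[str, float], limit_c: float = MAX_INLET_C) -> list[str]:
    """Return sensor locations whose inlet temperature exceeds limit_c, hottest first."""
    hot = [(temp, location) for location, temp in readings.items() if temp > limit_c]
    return [location for temp, location in sorted(hot, reverse=True)]


# Hypothetical readings in degrees Celsius, keyed by sensor location.
readings = {"rack-A01": 24.5, "rack-A02": 29.1, "rack-B01": 27.0, "rack-B02": 31.4}
print(find_hot_spots(readings))  # ['rack-B02', 'rack-A02']
```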

In conclusion, understanding the role of cooling in data center efficiency is essential for ensuring the smooth and reliable operation of these critical facilities. By investing in efficient cooling systems, optimizing the layout of servers, and maintaining their equipment properly, data center operators can help to minimize downtime, reduce energy costs, and improve overall performance. By prioritizing cooling efficiency, data center operators can ensure that their facilities are able to meet the growing demands of the digital age.

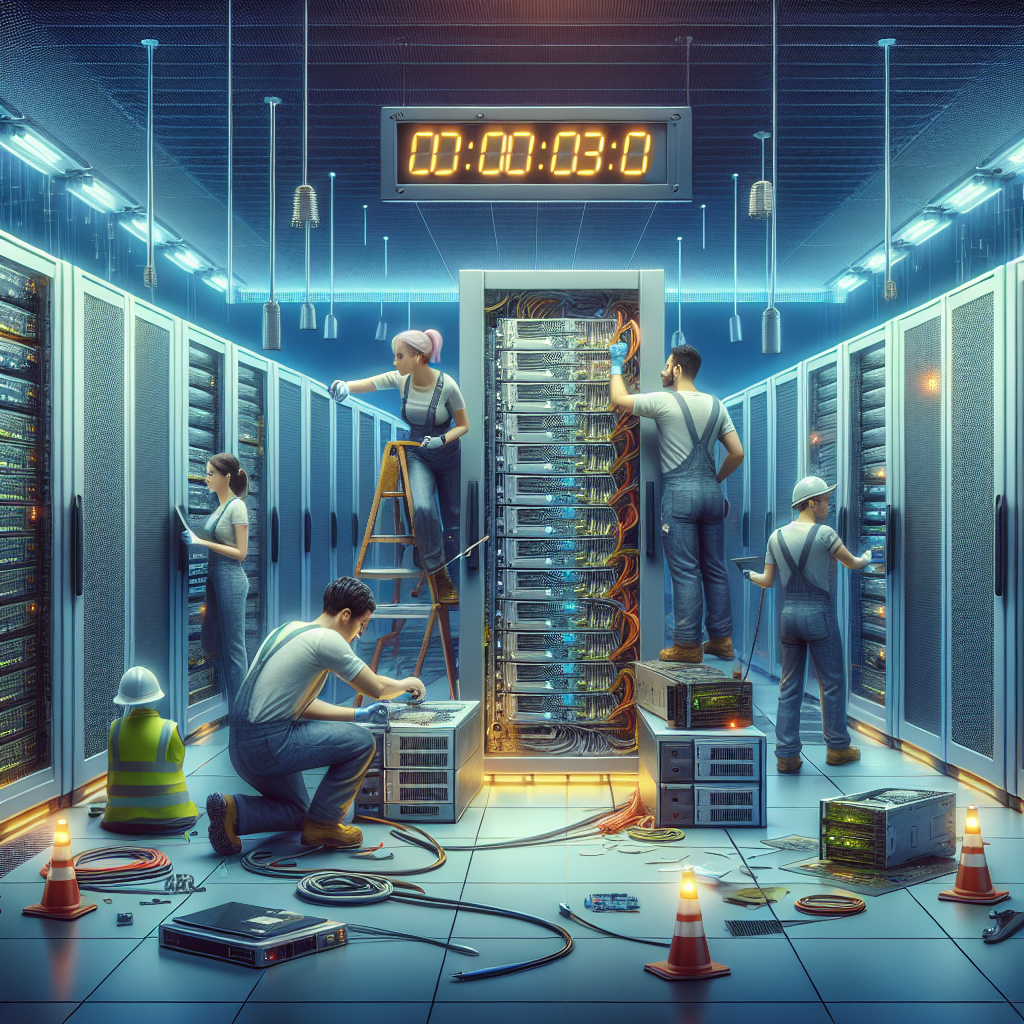

Understanding the Benefits of Data Center Servicing: How it Impacts Business Operations

In today’s digital age, data centers have become an essential component of business operations. These facilities house the critical infrastructure that supports the storage, processing, and distribution of data for organizations of all sizes. As such, ensuring that data center equipment is properly serviced and maintained is crucial to the overall success of a business.

Data center servicing involves regular inspections, maintenance, and repairs to ensure that all equipment is functioning properly and efficiently. This includes servers, storage devices, networking equipment, and cooling systems, among others. By investing in data center servicing, businesses can reap a number of benefits that directly impact their operations.

One of the key benefits of data center servicing is improved reliability and uptime. Downtime can be extremely costly for businesses, resulting in lost productivity, revenue, and customer trust. By regularly servicing data center equipment, businesses can minimize the risk of unexpected outages and ensure that their systems are running smoothly at all times.

In addition to improving reliability, data center servicing can also help businesses optimize their infrastructure for better performance. By identifying and addressing potential bottlenecks, inefficiencies, and other issues, businesses can ensure that their data center is operating at peak efficiency. This can lead to faster processing speeds, reduced latency, and improved overall performance for users.

Furthermore, data center servicing can also help businesses extend the lifespan of their equipment. By performing regular maintenance and repairs, businesses can prevent costly failures and extend the life of their hardware. This can help businesses save money in the long run by avoiding the need for premature equipment replacements.

Lastly, data center servicing can also help businesses stay compliant with industry regulations and standards. Many industries, such as healthcare and finance, have strict requirements for data storage and security. By maintaining a well-serviced data center, businesses can ensure that they are meeting these requirements and avoid potential fines or penalties.

In conclusion, understanding the benefits of data center servicing is crucial for businesses looking to optimize their operations and ensure the reliability and performance of their infrastructure. By investing in regular maintenance and repairs, businesses can improve uptime, performance, and reliability, while also saving money and staying compliant with industry regulations. Overall, data center servicing is a critical component of a successful business operation in today’s digital world.

Understanding the Role of Electrical Infrastructure in Data Center Operations

Data centers play a crucial role in today’s digital world, serving as the backbone of the internet and powering the technology that we rely on every day. However, behind the scenes of these massive facilities lies a complex network of electrical infrastructure that is essential for their operation.

Understanding the role of electrical infrastructure in data center operations is key to ensuring the reliable and efficient performance of these facilities. From powering servers and cooling systems to ensuring uninterrupted operation during power outages, electrical infrastructure is the lifeblood of data centers.

One of the primary functions of electrical infrastructure in data centers is to provide power to the servers and networking equipment that store and process data. This requires a robust electrical system capable of delivering high levels of power to support the demanding requirements of modern data centers. This includes redundant power supplies, backup generators, and uninterruptible power supply (UPS) systems to ensure continuous operation in the event of a power outage.

In addition to providing power to the equipment, electrical infrastructure also plays a crucial role in maintaining the optimal operating conditions within the data center. This includes powering the cooling systems that regulate the temperature and humidity levels to prevent overheating and ensure the reliability of the equipment. Proper ventilation and air conditioning systems are essential to keeping the servers running smoothly and preventing downtime due to overheating.

Furthermore, the electrical infrastructure in data centers must be designed to accommodate the growing demand for power as data center operations continue to expand. This requires careful planning and design to ensure that the electrical system can support the increasing power requirements of new servers and networking equipment.
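A simple capacity check can make this kind of planning concrete. The sketch below is only an illustration: the function name, the rack loads, and the 20% safety margin are assumptions, and a real design would follow the facility's own electrical ratings and redundancy scheme.

```python
def power_headroom_kw(capacity_kw: float, rack_loads_kw: list[float],
                      safety_margin: float = 0.2) -> float:
    """Return remaining power (kW) after reserving a safety margin.

    A negative result means the planned load exceeds the usable capacity.
    """
    if not 0 <= safety_margin < 1:
        raise ValueError("safety_margin must be in the range [0, 1)")
    usable_kw = capacity_kw * (1 - safety_margin)
    return usable_kw - sum(rack_loads_kw)


# Hypothetical figures: a 500 kW feed and three rows of racks.
headroom = power_headroom_kw(500, [120, 150, 90])
print(f"Headroom: {headroom:.1f} kW")
if headroom < 0:
    print("Planned load exceeds usable capacity")
```

Running the same check with the loads expected after a planned expansion shows whether the existing electrical system can absorb the growth or needs an upgrade first.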

Another important aspect of electrical infrastructure in data centers is the implementation of energy-efficient technologies to reduce power consumption and minimize the environmental impact of these facilities. This includes the use of energy-efficient servers, cooling systems, and lighting, as well as the implementation of power management systems to optimize energy usage and reduce operating costs.

In conclusion, the role of electrical infrastructure in data center operations cannot be overstated. From providing power to the equipment and maintaining optimal operating conditions to accommodating the growing demand for power and implementing energy-efficient technologies, electrical infrastructure is essential for the reliable and efficient operation of data centers. By understanding the importance of electrical infrastructure in data centers, organizations can ensure the performance and reliability of their facilities and meet the ever-increasing demands of the digital world.

Understanding Data Center MTTR: How to Minimize Downtime

In today’s digital age, data centers play a crucial role in storing and processing vast amounts of information for businesses and organizations. With the increasing reliance on data centers, minimizing downtime has never been more critical. Mean Time to Repair (MTTR) is a key metric used to measure the efficiency of data center operations and the time it takes to fix any issues that may arise.

MTTR measures the average time it takes to repair a system or component after a failure has occurred. It is an important indicator of how quickly a data center can recover from an outage and resume normal operations. The lower the MTTR, the better the data center’s ability to minimize downtime and ensure continuous availability of services.
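As a small worked example, MTTR can be computed as the total repair time divided by the number of repairs. The function name and the repair durations below are made up for illustration.

```python
def mttr_hours(repair_hours: list[float]) -> float:
    """Mean Time to Repair: total repair time divided by the number of repairs."""
    if not repair_hours:
        raise ValueError("need at least one repair to compute MTTR")
    return sum(repair_hours) / len(repair_hours)


# Three hypothetical incidents that took 1.5, 0.5, and 4 hours to repair.
print(mttr_hours([1.5, 0.5, 4.0]))  # 2.0
```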

There are several ways to minimize downtime and improve MTTR in a data center:

1. Implement proactive monitoring: One of the most effective ways to minimize downtime is to proactively monitor the health and performance of the data center infrastructure. By using monitoring tools and analytics, IT teams can identify potential issues before they escalate into full-blown outages. This allows for timely intervention and swift resolution of problems, reducing the overall MTTR.

2. Regular maintenance and updates: Regular maintenance and updates are essential to keep the data center infrastructure running smoothly. By performing routine checks, upgrades, and patches, IT teams can prevent unexpected failures and minimize downtime. Keeping hardware and software up to date can also improve performance and reliability, reducing the likelihood of outages.

3. Create a comprehensive disaster recovery plan: Having a comprehensive disaster recovery plan in place is essential for minimizing downtime in the event of a catastrophic failure. A well-thought-out plan should include backup and recovery procedures, failover mechanisms, and clear roles and responsibilities for all stakeholders. By practicing and testing the plan regularly, data center operators can ensure a swift and effective response to any outage, reducing the MTTR.

4. Implement redundancy and failover mechanisms: Redundancy and failover mechanisms are key components of a resilient data center infrastructure. By implementing redundant systems, such as backup power supplies, network connections, and storage devices, data center operators can ensure continuous availability of services even in the event of a hardware failure. Failover mechanisms can automatically redirect traffic to a backup system, minimizing downtime and reducing the MTTR.

5. Train and empower IT staff: Investing in training and empowering IT staff is crucial for improving MTTR in a data center. By providing employees with the necessary skills and knowledge to troubleshoot and resolve issues quickly, organizations can significantly reduce downtime and improve overall operational efficiency. Empowered and knowledgeable staff can make informed decisions and take swift action to address any problems that may arise.

In conclusion, understanding data center MTTR and implementing strategies to minimize downtime are essential for ensuring the continuous availability of services and maintaining business continuity. By proactively monitoring, maintaining, and updating the data center infrastructure, creating a comprehensive disaster recovery plan, implementing redundancy and failover mechanisms, and training and empowering IT staff, organizations can improve their MTTR and reduce the impact of outages on their operations. Ultimately, investing in measures to minimize downtime not only enhances the reliability and performance of the data center but also helps to protect the organization’s reputation and bottom line.

Understanding Data Center MTBF: How to Improve Reliability and Efficiency

Data centers play a critical role in today’s digital world, serving as the backbone for storing, processing, and managing vast amounts of data. With the increasing reliance on data centers for business operations, it is essential to ensure their reliability and efficiency. One way to measure the reliability of a data center is through Mean Time Between Failures (MTBF), which calculates the average time between failures.

Understanding Data Center MTBF

MTBF is a key metric used to assess the reliability of a data center infrastructure. It measures the average time a system or component operates before experiencing a failure. A higher MTBF indicates a more reliable system, as it means that the system is less likely to experience downtime due to failures.

To calculate MTBF, data center operators track the total operational time over a specific period and divide it by the number of failures that occurred in that period. For example, a system that runs for 1,000 hours and fails twice has an MTBF of 500 hours. This calculation provides a baseline for measuring the reliability of the data center infrastructure.
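MTBF is conventionally computed as total operational time divided by the number of failures observed in that time. The sketch below shows the arithmetic; the function name and the figures are illustrative assumptions.

```python
def mtbf_hours(operational_hours: float, failures: int) -> float:
    """Mean Time Between Failures: operational time divided by failure count."""
    if failures <= 0:
        raise ValueError("MTBF is undefined without at least one failure")
    return operational_hours / failures


# A hypothetical system that ran for one year (8,760 hours) and failed 4 times.
print(mtbf_hours(8760, 4))  # 2190.0
```

Fewer failures over the same operating time give a larger MTBF, which is why a higher value indicates a more reliable system.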

Improving Reliability and Efficiency

To improve the reliability and efficiency of a data center, there are several strategies that data center operators can implement:

1. Regular Maintenance: Regular maintenance of data center equipment is essential to prevent failures and ensure optimal performance. This includes conducting routine inspections, cleaning, and testing of hardware components.

2. Redundancy: Implementing redundant systems and components can help mitigate the impact of failures and minimize downtime. Redundancy can include backup power supplies, cooling systems, and network connections.

3. Monitoring and Analytics: Utilizing monitoring tools and analytics software can help data center operators proactively identify potential issues and address them before they lead to failures. Monitoring systems can track performance metrics, temperature levels, and power consumption to optimize data center operations.

4. Energy Efficiency: Improving energy efficiency in the data center can not only reduce operating costs but also enhance reliability. Implementing energy-efficient cooling systems, server virtualization, and power management strategies can help optimize energy usage and minimize the risk of system failures.

5. Disaster Recovery Planning: Developing a comprehensive disaster recovery plan is essential to ensure business continuity in the event of a data center failure. This plan should include backup and recovery procedures, data replication strategies, and offsite storage solutions.

By focusing on improving reliability and efficiency through these strategies, data center operators can enhance the overall performance and uptime of their data center infrastructure. Implementing regular maintenance, redundancy, monitoring, energy efficiency, and disaster recovery planning can help minimize downtime, reduce costs, and ensure the reliability of the data center operation.

The Cost of Data Center Downtime: Understanding the Impact on Businesses

Data centers are the backbone of modern businesses, supporting critical operations such as data storage, computing power, and network connectivity. However, when these data centers experience downtime, the impact on businesses can be severe. Not only can downtime result in financial losses, but it can also damage a company’s reputation and customer trust.

The cost of data center downtime is staggering. According to a 2016 study by the Ponemon Institute, the average cost of downtime for a business is around $9,000 per minute. This means that even a short outage can result in significant financial losses for a company. In fact, the same study found that the average total cost of a data center outage is over $740,000.
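To see how quickly a per-minute figure adds up, the sketch below multiplies an outage duration by a cost per minute. It uses the roughly $9,000 per minute average quoted above as a default; an organization's real figure may differ widely, and the function name is illustrative.

```python
# Average cost per minute quoted in the text above; replace with your own estimate.
COST_PER_MINUTE_USD = 9_000


def outage_cost_usd(minutes: int, cost_per_minute: int = COST_PER_MINUTE_USD) -> int:
    """Estimate the cost of an outage as duration times cost per minute."""
    if minutes < 0:
        raise ValueError("minutes must not be negative")
    return minutes * cost_per_minute


print(outage_cost_usd(30))  # 270000
```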

But the financial impact is just the tip of the iceberg. Downtime can also have a ripple effect on a business’s reputation and customer trust. Customers expect businesses to be available 24/7, and any downtime can lead to frustration and dissatisfaction. This can result in customers taking their business elsewhere, leading to long-term revenue losses.

In addition, downtime can also have legal implications for businesses. For example, if a company fails to meet its service level agreements due to downtime, it can face legal action from customers or business partners. This can result in costly legal fees and damage to the company’s reputation.

So, what can businesses do to minimize the impact of data center downtime? The key is to invest in robust backup and disaster recovery solutions. By implementing redundant systems and backup protocols, businesses can ensure that their data center operations can continue even in the event of a failure. Additionally, regular maintenance and monitoring of data center equipment can help identify and address potential issues before they lead to downtime.

Ultimately, the cost of data center downtime can be significant for businesses. By understanding the impact of downtime and taking proactive steps to prevent it, businesses can minimize financial losses, protect their reputation, and ensure the continued trust of their customers.

Understanding the Importance of Data Center Service Level Agreements

In today’s digital age, data centers are critical for businesses to store, manage, and secure their data. With the increasing reliance on technology and the growing amount of data being generated, it is crucial for organizations to have a reliable data center service provider. One key aspect that businesses should consider when choosing a data center provider is the Service Level Agreement (SLA).

A Service Level Agreement is a contract between a data center provider and a customer that outlines the level of service that the provider will deliver. SLAs typically include key performance indicators (KPIs) such as uptime, response times, and security measures. By defining these metrics in the SLA, both parties have a clear understanding of the expectations and responsibilities.

One of the most important aspects of a data center SLA is uptime. Uptime refers to the amount of time that a data center is operational and available to customers. Downtime can have a significant impact on a business, causing disruption to operations and potentially leading to financial losses. A reliable data center provider will guarantee a certain level of uptime in their SLA, ensuring that their customers’ data is always accessible when needed.
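Uptime guarantees are usually expressed as a percentage, and it can help to translate that percentage into the downtime it permits. The sketch below does this for a 30-day period; the function name, the period length, and the example percentages are illustrative assumptions rather than terms from any particular SLA.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: int = 30) -> float:
    """Return the downtime (in minutes) an uptime percentage permits over a period."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime_percent must be between 0 and 100")
    period_minutes = period_days * 24 * 60
    return period_minutes * (100 - uptime_percent) / 100


for pct in (99.0, 99.9, 99.99):
    minutes = allowed_downtime_minutes(pct)
    print(f"{pct}% uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

Each additional "nine" cuts the permitted downtime by a factor of ten, which is why small differences in the guaranteed percentage matter when comparing providers.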

Response times are another critical element of a data center SLA. In the event of an issue or outage, the data center provider should have a defined response time for addressing and resolving the issue. A prompt response can help minimize downtime and prevent further disruptions to the business.

Security measures are also a key component of a data center SLA. With the increasing number of cyber threats and data breaches, it is essential for businesses to ensure that their data is stored securely. A data center provider should outline the security measures that they have in place to protect their customers’ data, such as firewalls, encryption, and access controls.

Overall, a well-defined SLA is crucial for businesses to ensure that their data center provider meets their needs and expectations. By clearly outlining the level of service that is expected, businesses can hold their provider accountable and ensure that their data is secure and accessible at all times. Understanding the importance of a data center SLA can help businesses make informed decisions when choosing a data center provider and ultimately safeguard their valuable data.