Tag: Visualization

How NVIDIA is Leading the Way in Deep Learning Innovation

NVIDIA has been at the forefront of deep learning innovation, and its GPUs (graphics processing units) play a pivotal role in accelerating the training and deployment of deep learning models. The company’s commitment to pushing the boundaries of artificial intelligence has positioned it as a leader in the industry.

One of the key reasons NVIDIA has established itself as a leader in deep learning is its focus on developing specialized hardware optimized for AI workloads. Training a deep neural network consists largely of matrix and tensor operations that can be computed in parallel, and NVIDIA’s GPUs are designed to run thousands of these calculations simultaneously, providing significant speedups over traditional CPUs. This has allowed researchers and developers to train larger and more complex models, leading to breakthroughs in areas such as image recognition, natural language processing, and autonomous driving.

In addition to its hardware advancements, NVIDIA has made significant contributions to the software side of deep learning. The company’s CUDA platform, which allows developers to program GPUs for parallel computing, has become the de facto standard for GPU-accelerated deep learning. NVIDIA has also developed libraries such as cuDNN, which provides GPU-optimized building blocks for deep neural networks, and TensorRT, which optimizes trained models for fast inference, further improving performance and efficiency on its GPUs.

NVIDIA’s commitment to driving innovation in deep learning is evident in its partnerships and collaborations with leading research institutions and companies. The company works closely with academia to advance the field of AI, funding research projects and providing access to its hardware and software tools. NVIDIA has also partnered with companies in various industries, such as healthcare, finance, and automotive, to develop customized deep learning solutions that address specific challenges and opportunities.

One of the most notable examples of NVIDIA’s deep learning innovation is its work in the field of autonomous driving. The company’s DRIVE platform is used by leading car manufacturers to develop self-driving vehicles, utilizing deep learning algorithms to enable advanced perception, planning, and control capabilities. NVIDIA’s advancements in this area have helped accelerate the development of autonomous vehicles, bringing us closer to a future where cars can drive themselves safely and efficiently.

Overall, NVIDIA’s leadership in deep learning innovation is driven by its relentless pursuit of technological advancements and its commitment to pushing the boundaries of what is possible with AI. The company’s specialized hardware, software tools, and strategic partnerships have positioned it as a driving force in the field, shaping the future of artificial intelligence and revolutionizing industries across the globe.

Unlocking the Potential of Deep Learning with NVIDIA AI Technology

Deep learning has revolutionized the field of artificial intelligence, enabling machines to learn from vast amounts of data and make predictions or decisions without being explicitly programmed. This technology has the potential to transform industries ranging from healthcare to finance, and NVIDIA is at the forefront of this revolution with its powerful AI technology.

NVIDIA has been a pioneer in developing hardware and software solutions that enable deep learning algorithms to run efficiently and at scale. The company’s GPUs (graphics processing units) are known for their superior processing power and ability to handle complex computations required for deep learning models. NVIDIA’s CUDA parallel computing platform and cuDNN deep learning library provide developers with the tools they need to build and deploy cutting-edge AI applications.

One of the key advantages of NVIDIA’s AI technology is its versatility. Whether you are working on image recognition, natural language processing, or autonomous driving, NVIDIA’s hardware and software solutions can be tailored to meet your specific needs. Widely used deep learning frameworks such as TensorFlow, PyTorch, and MXNet, which were developed outside NVIDIA, support NVIDIA GPUs through CUDA and cuDNN, so researchers and developers can train and deploy models on NVIDIA hardware with the tools they already use.

NVIDIA’s AI technology is also making it easier for businesses to harness the power of deep learning. The company’s DGX systems are pre-configured with all the necessary hardware and software components for running AI workloads, allowing organizations to quickly get up and running with their AI projects. Additionally, NVIDIA’s deep learning tools and libraries are constantly being updated and improved, ensuring that developers have access to the latest advancements in AI technology.

In conclusion, NVIDIA’s AI technology is unlocking the full potential of deep learning, enabling researchers and developers to build cutting-edge AI applications that were once thought to be impossible. With its powerful GPUs, optimized software libraries, and pre-configured systems, NVIDIA is paving the way for a future where AI is seamlessly integrated into every aspect of our lives. Whether you are a researcher, a developer, or a business looking to leverage the power of AI, NVIDIA has the technology and expertise to help you succeed.

The Future of AI: A Closer Look at NVIDIA’s Innovations

Artificial intelligence (AI) is rapidly transforming the way we live, work, and interact with technology. As AI continues to advance, companies like NVIDIA are at the forefront of developing innovative solutions that are shaping the future of AI.

NVIDIA, a leading technology company known for its graphics processing units (GPUs), has been making significant strides in the field of AI with its cutting-edge innovations. The company’s GPUs are widely used in data centers and cloud computing environments to power AI applications such as deep learning, machine learning, and autonomous driving.

One of NVIDIA’s most notable contributions to the AI space is Tensor Core technology, first introduced with the Volta architecture in 2017. Tensor Cores are specialized processing units inside NVIDIA GPUs that perform the matrix multiply-accumulate operations at the heart of deep learning, typically on reduced-precision inputs, making it possible to train complex neural networks much faster than on traditional CPUs or on GPUs without Tensor Cores.
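
To make the idea concrete, here is a minimal CPU emulation, in Python, of the numerics of one Tensor Core style operation: a small matrix multiply-accumulate, D = A × B + C, with inputs rounded to 16-bit half precision (FP16) and the running sum kept in 32-bit single precision (FP32). It sketches the arithmetic only, not how the hardware is programmed; `to_fp16`, `to_fp32`, and `mma_fp16_fp32` are hypothetical helpers, and FP16-in/FP32-accumulate is one common mixed-precision configuration rather than the only one Tensor Cores support.

```python
import struct

def to_fp16(x):
    # Round a Python float to the nearest IEEE 754 half-precision (binary16) value.
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x):
    # Round a Python float to the nearest IEEE 754 single-precision (binary32) value.
    return struct.unpack("<f", struct.pack("<f", x))[0]

def mma_fp16_fp32(A, B, C):
    """Emulate D = A x B + C with FP16 inputs and FP32 accumulation.

    A is n x k, B is k x m, C is n x m (lists of lists of numbers).
    """
    n, k, m = len(A), len(B), len(B[0])
    A16 = [[to_fp16(v) for v in row] for row in A]  # inputs lose precision here
    B16 = [[to_fp16(v) for v in row] for row in B]
    D = []
    for i in range(n):
        row = []
        for j in range(m):
            acc = to_fp32(C[i][j])
            for p in range(k):
                # An FP16 x FP16 product fits exactly in FP32; only the sum is rounded.
                acc = to_fp32(acc + A16[i][p] * B16[p][j])
            row.append(acc)
        D.append(row)
    return D

# Usage: small integers are represented exactly in FP16, so the result is exact.
D = mma_fp16_fp32([[1, 2], [3, 4]], [[5, 6], [7, 8]], [[1, 1], [1, 1]])
print(D)             # [[20.0, 23.0], [44.0, 51.0]]
print(to_fp16(0.1))  # 0.0999755859375, the closest FP16 value to 0.1
```

Keeping the accumulator wider than the inputs is the design point: the many small rounding errors from FP16 inputs do not compound through a long sum.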

In addition to developing hardware solutions, NVIDIA has also been actively involved in the development of software tools and frameworks that make it easier for developers to build and deploy AI applications. The company’s CUDA programming platform and cuDNN library are widely used in the AI community for developing high-performance AI applications.

NVIDIA’s commitment to advancing AI technology is also evident in its investment in research and development. The company has established research centers around the world dedicated to exploring the potential of AI in various fields, such as healthcare, finance, and robotics. By collaborating with leading researchers and universities, NVIDIA is able to stay at the forefront of AI innovation and drive the development of new technologies.

Looking ahead, the future of AI looks promising with NVIDIA leading the way in developing innovative solutions that are shaping the way we live and work. With advancements in hardware, software, and research, NVIDIA is well-positioned to continue driving the evolution of AI and pushing the boundaries of what is possible.

In conclusion, NVIDIA’s innovations in AI are paving the way for a future where intelligent machines and systems will play an increasingly important role in our lives. As AI continues to evolve, companies like NVIDIA will continue to push the boundaries of what is possible and drive innovation in the field of artificial intelligence.

Exploring the Power of NVIDIA CUDA for Parallel Computing

NVIDIA CUDA is a parallel computing platform and application programming interface (API) model created by NVIDIA. It allows developers to harness the power of NVIDIA graphics processing units (GPUs) to accelerate computing tasks, particularly those that require a high level of parallel processing. CUDA has become a popular choice for developers looking to improve the performance of their applications by taking advantage of the massive parallel processing capabilities of modern GPUs.

One of the key features of CUDA is its ability to parallelize tasks across hundreds or thousands of GPU cores. This allows developers to significantly increase the speed of their applications by offloading computationally intensive tasks to the GPU, rather than relying solely on the CPU. This is especially useful for applications that require complex simulations, data processing, or machine learning algorithms, as these tasks can be divided into smaller, parallelizable subtasks that can be executed simultaneously on the GPU.
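
The way CUDA divides such work can be illustrated without a GPU. In CUDA C++, a kernel is launched over a grid of thread blocks, and each thread typically computes its global index as `blockIdx.x * blockDim.x + threadIdx.x`, then handles one element. The Python sketch below simulates that indexing scheme on the CPU for a vector addition; `vector_add_kernel` and `launch` are hypothetical stand-ins, and the loops that run one after another here would execute in parallel on a real GPU.

```python
import math

def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out, n):
    # Mirrors the body of a CUDA kernel: each thread computes one output element.
    i = block_idx * block_dim + thread_idx  # blockIdx.x * blockDim.x + threadIdx.x
    if i < n:  # guard: the last block may contain more threads than remaining elements
        out[i] = a[i] + b[i]

def launch(kernel, grid_dim, block_dim, *args):
    # Sequential stand-in for kernel<<<grid_dim, block_dim>>>(args...).
    # On a GPU, these (block, thread) pairs run concurrently; here they run one at a time.
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)

n = 1000
a = [float(i) for i in range(n)]
b = [2.0 * i for i in range(n)]
out = [0.0] * n

block_dim = 256                      # threads per block
grid_dim = math.ceil(n / block_dim)  # round up so every element gets a thread
launch(vector_add_kernel, grid_dim, block_dim, a, b, out, n)
print(grid_dim, out[:3], out[-1])    # 4 [0.0, 3.0, 6.0] 2997.0
```

Rounding the grid size up (1000 elements need 4 blocks of 256 threads, or 1024 threads) and guarding with `if i < n` is the usual pattern when the data size is not a multiple of the block size.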

Another advantage of using CUDA for parallel computing is its support for a wide range of programming languages, including C, C++, and Fortran. This makes it accessible to a broad audience of developers who may already be familiar with these languages, and allows them to easily integrate CUDA into their existing codebases.

In addition to its performance benefits, CUDA also offers a number of tools and libraries that make it easier for developers to optimize their applications for parallel processing. This includes the CUDA Toolkit, which provides a set of libraries, compilers, and debugging tools that help developers write efficient, parallelized code for the GPU. There are also libraries such as cuBLAS for linear algebra computations, cuFFT for fast Fourier transforms, and cuDNN for deep learning algorithms, which can further accelerate the development of GPU-accelerated applications.

Overall, CUDA has become a powerful tool for developers looking to unlock the full potential of modern GPU hardware for parallel computing. By leveraging the parallel processing capabilities of NVIDIA GPUs, developers can significantly improve the performance and efficiency of their applications, leading to faster processing times and better overall user experiences. As GPU technology continues to advance, CUDA is likely to play an increasingly important role in the world of parallel computing, empowering developers to push the boundaries of what is possible with their applications.

How NVIDIA RTX is Revolutionizing Gaming Graphics

NVIDIA’s RTX series of graphics cards have been making waves in the gaming industry since their release, with cutting-edge technology and impressive performance that have revolutionized gaming graphics as we know it.

One of the key features that sets the NVIDIA RTX series apart from its predecessors is hardware-accelerated, real-time ray tracing. Ray tracing is a rendering technique that simulates the way light interacts with objects in a virtual environment by tracing the paths of light rays, creating highly realistic lighting, shadows, and reflections. This results in games that look more lifelike than ever before, with visuals that were previously possible mainly in pre-rendered movie effects.
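
At its core, a ray tracer fires rays from the camera into the scene, finds what each ray hits, and computes the lighting at that point. The minimal Python sketch below does this for one ray and one sphere, using the standard ray-sphere intersection formula and simple diffuse (Lambertian) shading. It is a textbook illustration under those assumptions, not how RTX hardware works internally (dedicated RTX hardware instead accelerates intersection tests against triangle geometry), and all function names here are hypothetical.

```python
import math

def dot(u, v):
    return sum(x * y for x, y in zip(u, v))

def sub(u, v):
    return tuple(x - y for x, y in zip(u, v))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)

def intersect_sphere(origin, direction, center, radius):
    """Nearest positive distance t along a unit-length ray to the sphere, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2, a quadratic in t.
    """
    oc = sub(origin, center)
    b = 2.0 * dot(direction, oc)
    c = dot(oc, oc) - radius * radius
    disc = b * b - 4.0 * c  # quadratic coefficient a == 1 because direction is unit length
    if disc < 0:
        return None  # the ray misses the sphere
    sqrt_disc = math.sqrt(disc)
    for t in ((-b - sqrt_disc) / 2.0, (-b + sqrt_disc) / 2.0):
        if t > 1e-9:
            return t  # first intersection in front of the ray origin
    return None

def shade(origin, direction, center, radius, toward_light):
    """Diffuse brightness in [0, 1] at the hit point (0.0 on a miss)."""
    t = intersect_sphere(origin, direction, center, radius)
    if t is None:
        return 0.0
    hit = tuple(o + t * d for o, d in zip(origin, direction))
    normal = normalize(sub(hit, center))  # surface normal points away from the center
    return max(0.0, dot(normal, normalize(toward_light)))

camera = (0.0, 0.0, 0.0)
center, radius = (0.0, 0.0, -5.0), 1.0
toward_light = (0.0, 0.0, 1.0)  # direction from the surface toward the light (behind the camera)

print(intersect_sphere(camera, (0.0, 0.0, -1.0), center, radius))      # 4.0
print(shade(camera, (0.0, 0.0, -1.0), center, radius, toward_light))  # 1.0
print(intersect_sphere(camera, (0.0, 1.0, 0.0), center, radius))       # None
```

A full renderer repeats this for every pixel and adds secondary rays for shadows and reflections, which is why ray tracing is so computationally demanding.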

In addition to ray tracing, NVIDIA RTX cards feature deep learning super sampling (DLSS), which uses artificial intelligence to upscale lower-resolution images to higher resolutions in real time. Because the GPU renders each frame at a lower internal resolution and then reconstructs a higher-resolution image, games can run at higher frame rates while delivering image quality close to that of native rendering at the output resolution.
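
The performance side of this trade-off comes down to pixel counts. The Python sketch below computes how many more pixels a 4K (3840 × 2160) output contains than two lower internal render resolutions; these render resolutions are illustrative choices, not the exact settings of any DLSS mode, and `pixel_ratio` is a hypothetical helper. Real frame-rate gains are typically smaller than these ratios, because not every part of a frame’s cost scales with resolution and the upscaling step itself takes time.

```python
def pixel_ratio(render_res, output_res):
    """How many output pixels there are per rendered pixel after upscaling."""
    render_w, render_h = render_res
    output_w, output_h = output_res
    return (output_w * output_h) / (render_w * render_h)

OUTPUT_4K = (3840, 2160)
for w, h in [(2560, 1440), (1920, 1080)]:
    ratio = pixel_ratio((w, h), OUTPUT_4K)
    print(f"{w}x{h}: native 4K has {ratio:.2f}x as many pixels")
# 2560x1440: native 4K has 2.25x as many pixels
# 1920x1080: native 4K has 4.00x as many pixels
```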

To support these features, NVIDIA RTX cards include dedicated RT Cores, which accelerate the ray intersection calculations that real-time ray tracing depends on, and Tensor Cores, which run the AI models behind DLSS and other AI-driven effects.

The impact of NVIDIA RTX technology on gaming graphics cannot be overstated. With its unparalleled level of realism and performance, games are now able to achieve levels of visual fidelity that were previously thought to be impossible. This has opened up a whole new world of possibilities for game developers, who are now able to create immersive and visually stunning gaming experiences that were once only seen in the realm of science fiction.

In conclusion, NVIDIA’s RTX series of graphics cards have truly revolutionized gaming graphics, pushing the boundaries of what is possible in terms of visual fidelity and performance. With its innovative technology and impressive capabilities, the NVIDIA RTX series is setting a new standard for gaming graphics and paving the way for even more immersive and realistic gaming experiences in the future.

The Evolution of NVIDIA GeForce: From Graphics Cards to GPU Technology

NVIDIA GeForce is a brand of graphics processing units (GPUs) developed by NVIDIA Corporation. Since its inception in 1999, NVIDIA GeForce has been at the forefront of graphics card technology, pushing the boundaries of visual computing and gaming experiences. Over the years, GeForce has evolved from a line of graphics cards into a GPU technology whose underlying architectures power not only gaming but also artificial intelligence, data centers, and autonomous vehicles.

In the early days of GeForce, NVIDIA focused primarily on developing high-performance graphics cards for PC gaming. The GeForce 256, released in 1999, was marketed by NVIDIA as the world’s first GPU and set a new standard for 3D graphics and gaming performance. As gaming technology advanced, so did NVIDIA GeForce, with each new generation of graphics cards delivering improved graphics quality, faster frame rates, and more realistic visual effects.

One of the key milestones in the evolution of NVIDIA GeForce was the introduction of the CUDA (Compute Unified Device Architecture) parallel computing platform in 2006. CUDA allowed developers to harness the power of NVIDIA GPUs for general-purpose computing tasks, enabling a wide range of applications beyond gaming, such as scientific simulations, image processing, and machine learning.

In 2013, NVIDIA launched the GeForce GTX Titan, a GPU that combined cutting-edge gaming performance with strong general-purpose computing capability. By then, researchers had already begun training deep neural networks on GeForce cards (the influential AlexNet image classifier of 2012 was trained on two GeForce GTX 580 GPUs), and the Titan line became a popular choice for this work. This momentum paved the way for NVIDIA to become a leader in AI and machine learning technology, with its GPUs being used in research labs, data centers, and autonomous vehicles.

Today, NVIDIA GeForce continues to innovate and push the boundaries of GPU technology. The GeForce RTX series, introduced in 2018, features real-time ray tracing and AI-enhanced graphics, delivering unparalleled visual fidelity and realism in games. NVIDIA’s Ampere architecture, introduced in 2020, further improved performance and efficiency, powering both GeForce RTX 30 series cards for gaming and A100 accelerators for data centers.

In conclusion, the evolution of NVIDIA GeForce from graphics cards to GPU technology has been nothing short of remarkable. What started as a brand focused on gaming has now become a powerhouse in the world of AI, machine learning, and high-performance computing. With each new generation of GeForce GPUs, NVIDIA continues to push the boundaries of what is possible in visual computing, making GeForce a brand that is synonymous with innovation and cutting-edge technology.

A Comparison of NVIDIA Graphics Cards: Which One is Right for You?

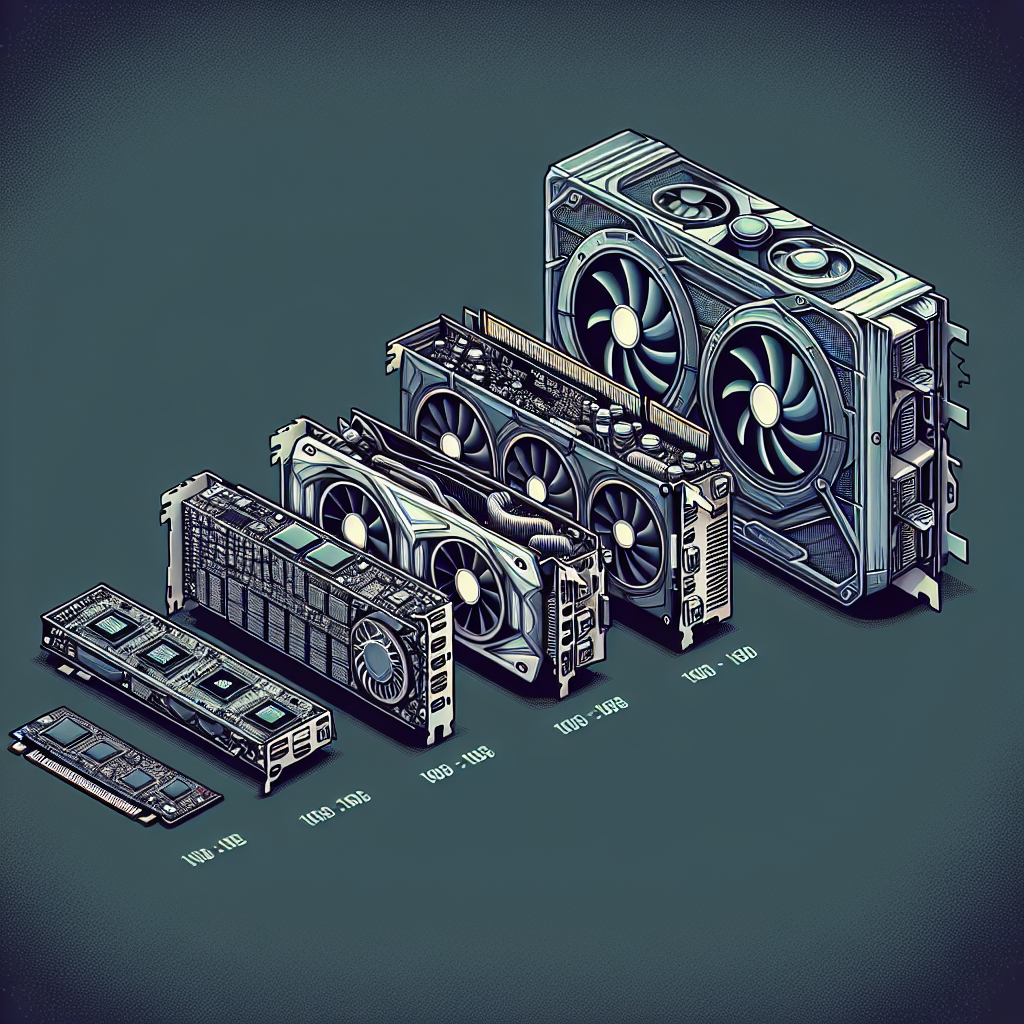

If you’re in the market for a new graphics card, chances are you’ve come across the name NVIDIA. NVIDIA is a leading manufacturer of graphics cards, known for its high performance and cutting-edge technology. With so many options available, it can be overwhelming to choose the right graphics card for your needs. In this article, we’ll compare some of the most popular NVIDIA graphics cards to help you make an informed decision.

GeForce GTX 1660 Ti:

The GeForce GTX 1660 Ti is a mid-range graphics card that offers solid performance at an affordable price. It features 1536 CUDA cores and 6GB of GDDR6 memory, making it suitable for gaming at 1080p resolution. The GTX 1660 Ti is a great option for budget-conscious gamers who want a balance of performance and value.

GeForce RTX 2060:

The GeForce RTX 2060 is a step up from the GTX 1660 Ti, offering better performance and ray tracing capabilities. It features 1920 CUDA cores and 6GB of GDDR6 memory, making it ideal for gaming at 1440p resolution. The RTX 2060 is a good choice for gamers who want to experience ray tracing technology without breaking the bank.

GeForce RTX 3070:

The GeForce RTX 3070 is a high-end graphics card that offers strong performance for demanding games and applications. It features 5888 CUDA cores and 8GB of GDDR6 memory, making it well suited to gaming at 1440p and capable of 4K in many titles. The RTX 3070 is a great option for gamers who want high-end performance and are willing to invest in a more expensive graphics card.

GeForce RTX 3090:

The GeForce RTX 3090 was the flagship of NVIDIA’s RTX 30 series at launch, offering exceptional performance for gaming, content creation, and other demanding tasks. It features a massive 10496 CUDA cores and 24GB of GDDR6X memory, making it a top choice for gamers and professionals who require the best of the best. The RTX 3090 comes with a high price tag, but its performance was unmatched among consumer graphics cards when it launched.

In conclusion, choosing the right NVIDIA graphics card depends on your budget and performance needs. The GeForce GTX 1660 Ti is a good option for budget-conscious gamers, while the RTX 2060 and RTX 3070 offer better performance for those willing to spend a bit more. The GeForce RTX 3090 is the ultimate choice for gamers and professionals who demand top-tier performance. Consider your needs and budget carefully before making a decision, and you’ll be sure to find the perfect NVIDIA graphics card for you.

NVIDIA GPUs vs. Competitors: A Comparative Analysis

When it comes to choosing a graphics processing unit (GPU) for your computer, NVIDIA is often considered one of the top choices in the market. However, several competitors offer similar products. In this article, we will compare NVIDIA GPUs with those of its main competitors to see how they stack up against each other.

NVIDIA is known for its high-performance GPUs that are used in gaming, professional graphics, artificial intelligence, and data center applications. The company’s flagship products, such as the GeForce RTX series and its professional workstation line (formerly branded Quadro), are widely praised for their performance and efficiency.

One of NVIDIA’s main competitors is AMD, which also offers a range of GPUs for gaming and professional applications. AMD’s Radeon series is popular among gamers for its competitive pricing and performance. However, at the high end, and in features such as ray tracing and AI-based upscaling, NVIDIA GPUs have generally held the edge over AMD’s offerings.

Another competitor of NVIDIA is Intel, a company better known for its CPUs, which has recently entered the discrete GPU market with its Arc series of graphics cards, based on its Xe graphics architecture. While Intel’s GPUs are still in the early stages of development, they have shown promise in terms of performance and efficiency. However, they still have a long way to go to catch up with NVIDIA in terms of market share and popularity.

In terms of performance, NVIDIA GPUs are often praised for their superior processing power and efficiency. The company’s use of cutting-edge technologies, such as ray tracing and DLSS, has helped to push the boundaries of what is possible in terms of graphics rendering. This has made NVIDIA GPUs the top choice for many professional users who require high-performance computing power.

On the other hand, competitors like AMD and Intel have been catching up in recent years, with their GPUs offering comparable performance at a lower price point. AMD’s Radeon series, in particular, has gained a strong following among budget-conscious gamers who are looking for a good balance of performance and affordability.

In conclusion, while NVIDIA GPUs remain the top choice for many users who require high-performance graphics processing power, competitors like AMD and Intel are beginning to close the gap. Each company has its own strengths and weaknesses, and the choice of GPU will ultimately depend on your specific needs and budget. As technology continues to evolve, it will be interesting to see how these companies continue to innovate and push the boundaries of what is possible in the world of graphics processing.

Exploring the Possibilities of Augmented Reality in Education

Augmented reality (AR) is a technology that superimposes computer-generated images, sounds, or other data onto a user’s view of the real world. This emerging technology has the potential to revolutionize the way we learn and teach in the education sector. By blending the physical and digital worlds, AR can create immersive and interactive learning experiences that engage students in ways that traditional methods cannot.

One of the key benefits of AR in education is its ability to bring abstract concepts to life. For example, instead of reading about the solar system in a textbook, students can use AR to see a 3D model of the planets orbiting the sun right in front of them. This hands-on approach makes learning more dynamic and engaging, helping students better understand complex topics.
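
Making a virtual object, such as a 3D planet, appear anchored in the camera view ultimately requires projecting 3D points onto the 2D screen. A common starting point is the pinhole camera model, sketched below in Python. It assumes the point is already expressed in the camera’s coordinate frame (real AR frameworks estimate the camera’s position and orientation by tracking) and ignores lens distortion; the function `project_point`, the intrinsic parameters `fx`, `fy`, `cx`, `cy`, and their example values are hypothetical.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a camera-space point (x right, y down, z forward) to pixels.

    fx, fy: focal lengths in pixels; cx, cy: principal point (usually near the image center).
    Returns (u, v) pixel coordinates, or None if the point is behind the camera.
    """
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera: nothing to draw
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v)

# Hypothetical intrinsics for a 1280 x 720 camera image.
FX = FY = 800.0
CX, CY = 640.0, 360.0

near = project_point((0.5, -0.25, 2.0), FX, FY, CX, CY)
far = project_point((0.5, -0.25, 4.0), FX, FY, CX, CY)
print(near)  # (840.0, 260.0)
print(far)   # (740.0, 310.0): twice as far away, so drawn closer to the image center
```

Dividing by depth `z` is what produces perspective: the same object drawn farther away lands nearer the image center and, applied to all its points, appears smaller.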

AR also has the potential to cater to different learning styles. Visual learners can benefit from interactive simulations and visual aids, while auditory learners can listen to audio explanations and instructions. This personalized approach to learning can help students grasp concepts more easily and retain information better.

Furthermore, AR can make learning more accessible to students with disabilities. For example, students with visual impairments can use AR to access visual information through audio descriptions or tactile feedback. This can level the playing field for all students and ensure that everyone has the opportunity to learn and succeed.

Another advantage of AR in education is its ability to provide real-time feedback and assessment. Teachers can use AR apps to track students’ progress, identify areas where they may be struggling, and provide targeted support. This data-driven approach can help teachers tailor their instruction to meet the individual needs of each student, leading to better learning outcomes.

In addition, AR can make learning more engaging and fun. By gamifying educational content, students can explore virtual worlds, solve puzzles, and complete challenges to reinforce their learning. This element of play can motivate students to stay engaged and focused, making learning a more enjoyable experience.

While AR is still in its early stages of adoption in education, the possibilities are endless. As technology continues to advance, we can expect to see even more innovative applications of AR in the classroom. From virtual field trips to historical reenactments, the potential for AR to enhance learning experiences is truly exciting.

In conclusion, augmented reality has the potential to transform education by creating immersive, interactive, and personalized learning experiences. By exploring the possibilities of AR in education, we can unlock new ways of teaching and learning that will benefit students of all ages and abilities. It’s time to embrace this cutting-edge technology and revolutionize the way we educate future generations.

How Augmented Reality is Revolutionizing the Way We Experience the World

Augmented reality (AR) is a technology that has been gaining popularity in recent years for its ability to enhance the way we experience the world around us. By overlaying digital information onto the real world through the use of a smartphone or other device, AR has the potential to revolutionize the way we interact with our surroundings and access information.

One of the most common applications of AR is in the realm of entertainment and gaming. Apps like Pokémon Go have taken the world by storm, allowing users to catch virtual creatures in real-world locations. This blending of the digital and physical worlds creates a truly immersive experience that has captivated millions of users worldwide.

But AR is not just limited to gaming – it has the potential to revolutionize a wide range of industries, from education to healthcare to retail. In the field of education, AR can be used to create interactive learning experiences that engage students in a way that traditional methods cannot. For example, students can use AR to explore a virtual anatomy model or to take a virtual field trip to a historical site.

In healthcare, AR has the potential to revolutionize the way medical professionals diagnose and treat patients. Surgeons can use AR to overlay important information, such as imaging data, onto a patient’s body during a procedure, letting them see vital information in real time without taking their eyes off the patient. This can lead to more precise procedures and better patient outcomes.

In the retail industry, AR can be used to create immersive shopping experiences that allow customers to try on clothes virtually or see how furniture will look in their home before making a purchase. This can help retailers increase sales and reduce returns by giving customers a better sense of how products will fit into their lives.

Overall, AR has the potential to revolutionize the way we experience the world by blending the digital and physical realms in new and innovative ways. As the technology continues to evolve and become more widespread, we can expect to see even more exciting applications of AR that will change the way we live, work, and play.