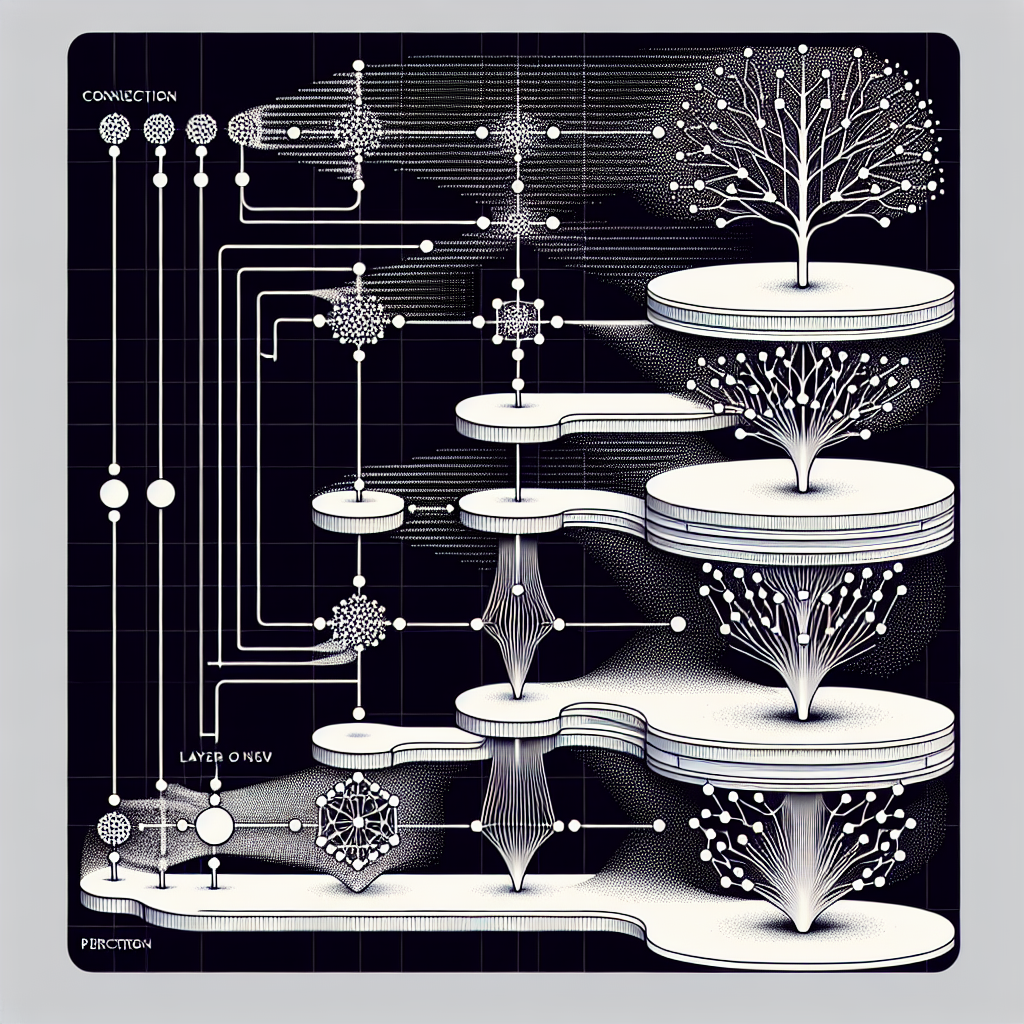

The Evolution of DNN: From Perceptrons to Deep Learning

Deep neural networks (DNNs) have come a long way since the early days of artificial intelligence research. Their roots trace back to the perceptron, introduced by Frank Rosenblatt in the late 1950s. A perceptron is a simple model of a single neuron: it computes a weighted sum of its inputs and outputs a binary decision depending on whether that sum crosses a threshold.
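
To make the idea concrete, here is a minimal sketch of a perceptron in plain Python, trained with the classic perceptron learning rule on the logical AND function. The function names, the learning rate, the epoch count, and the choice of AND as a toy dataset are illustrative assumptions, not details from Rosenblatt's original work.

```python
def predict(weights, bias, x):
    """Return 1 if the weighted sum of inputs plus bias is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule: nudge weights only when a prediction is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0, or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


# Toy dataset (assumed for illustration): logical AND, which is linearly separable.
AND_INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND_LABELS = [0, 0, 0, 1]

weights, bias = train_perceptron(AND_INPUTS, AND_LABELS)
predictions = [predict(weights, bias, x) for x in AND_INPUTS]
print(predictions)
```

Because AND is linearly separable, the learning rule settles on weights that classify all four inputs correctly. A single perceptron cannot do the same for a function like XOR, which is one reason later work moved to multi-layer networks.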

Over the years, researchers developed more complex neural network architectures, such as multi-layer perceptrons and convolutional neural networks. By stacking layers of neurons, these architectures can represent relationships that a single perceptron cannot, which made it possible to process more complex data, such as images and natural language.

One of the key breakthroughs in the evolution of DNNs was the popularization of backpropagation in the 1980s, most notably through the 1986 work of Rumelhart, Hinton, and Williams. Backpropagation trains a neural network by using the chain rule to compute how much each connection weight contributes to the error in the network’s output, and then adjusting the weights in the direction that reduces that error. This made it practical to train networks with hidden layers.
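
The following sketch shows backpropagation on the smallest network that still has a hidden layer: one sigmoid hidden neuron feeding one linear output neuron, trained on a single example with squared error. The network shape, the starting parameters, the learning rate, and the training example are all assumptions chosen to keep the arithmetic readable.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: hidden activation h, then linear output y."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = w2 * h + b2
    return h, y


def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backward(params, x, target):
    """Backward pass: apply the chain rule from the output back to the input."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - target            # dL/dy
    dw2 = dy * h               # dL/dw2
    db2 = dy                   # dL/db2
    dh = dy * w2               # dL/dh
    dz = dh * h * (1.0 - h)    # dL/dz, using sigmoid'(z) = h * (1 - h)
    dw1 = dz * x               # dL/dw1
    db1 = dz                   # dL/db1
    return [dw1, db1, dw2, db2]


def train(params, x, target, lr=0.1, steps=200):
    """Plain gradient descent using the gradients from backward()."""
    for _ in range(steps):
        grads = backward(params, x, target)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


initial = [0.5, -0.3, 0.8, 0.1]   # assumed starting values for w1, b1, w2, b2
trained = train(initial, x=1.5, target=1.0)
print(loss(initial, 1.5, 1.0), loss(trained, 1.5, 1.0))
```

Real networks apply the same chain-rule bookkeeping to matrices of weights across many layers, usually through an automatic differentiation library rather than hand-written derivatives.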

In the mid-2000s, deep learning emerged as a distinct research direction as researchers found effective ways to train neural networks with many layers. These deep neural networks were able to learn complex patterns in data and went on to achieve state-of-the-art performance on tasks such as image recognition and speech recognition.

Today, deep learning has become a dominant force in artificial intelligence research and has led to breakthroughs in a wide range of applications, including autonomous driving, natural language processing, and medical imaging. The growing availability of large datasets and powerful computing resources has fueled the rapid advancement of deep learning technology.

One of the most popular deep learning architectures is the deep convolutional neural network (CNN), which has revolutionized computer vision. CNNs learn features directly from raw pixel data by sliding small filters across an image, and they have matched or exceeded reported human accuracy on some image classification benchmarks while driving large gains in object detection.
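
The core operation inside a CNN layer can be sketched in a few lines. The example below slides a 2x2 filter over a tiny 4x4 image and records the response at each position. The filter here is hand-picked to respond to vertical edges purely for illustration; in an actual CNN the filter values are learned during training, and the image and function name are likewise assumptions.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image with no padding and a stride of 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy image (assumed): dark on the left half, bright on the right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-picked filter that responds where brightness increases left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The nonzero middle column of the feature map marks the position of the dark-to-bright edge. Stacking many such filters, each followed by a nonlinearity, is what lets a CNN build up from edges to more abstract features.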

Researchers have also made significant progress with recurrent neural networks (RNNs) and long short-term memory (LSTM) networks, an RNN variant introduced in 1997 that is designed to retain information across long sequences. Both are well suited to sequential data such as text and speech, because they carry a hidden state from one step to the next. These networks have been used to build powerful language models and achieve impressive results in machine translation and speech recognition.
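
As a rough illustration of why recurrent networks suit sequential data, the sketch below runs a single-unit recurrent cell over two sequences that contain the same values in a different order. The weights are fixed, assumed values rather than trained ones, and this is a plain RNN cell, not an LSTM.

```python
import math


def rnn_final_state(sequence, w_x=0.5, w_h=0.9, b=0.0):
    """Run a one-unit recurrent cell and return its final hidden state."""
    h = 0.0  # the hidden state starts empty
    for x in sequence:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
    return h


forward_state = rnn_final_state([1.0, 0.0, 0.0])
reversed_state = rnn_final_state([0.0, 0.0, 1.0])
print(forward_state, reversed_state)
```

The two final states differ even though the inputs contain the same values, because the hidden state depends on the order in which they arrive. The signal from the early input in the first sequence also shrinks with each step, which hints at why plain RNNs struggle with long-range dependencies and why LSTMs add gating to preserve information.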

The evolution of DNNs from simple perceptrons to deep learning has been driven by a combination of theoretical advances, algorithmic improvements, and the availability of large-scale datasets. As deep learning continues to advance, we can expect to see even more breakthroughs in artificial intelligence research and applications in the years to come.
