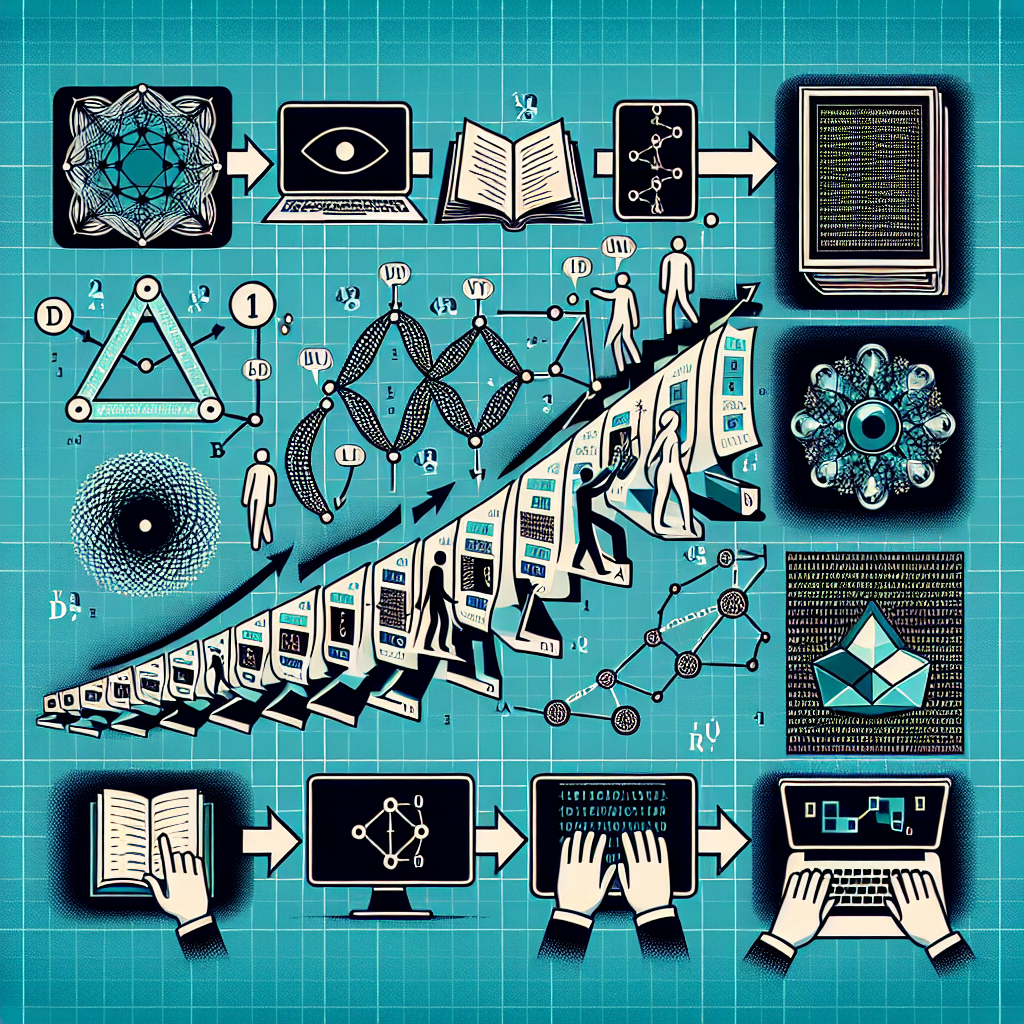

Generative Adversarial Networks (GANs) have been a game-changer in the field of machine learning and artificial intelligence. Originally proposed by Ian Goodfellow and colleagues in 2014, GANs have since been widely adopted in various domains, including natural language processing (NLP). In this article, we will explore the evolution of GANs in NLP, from theory to practice.

GANs are a type of neural network architecture that consists of two models: a generator and a discriminator. The generator generates new data samples, while the discriminator evaluates how realistic these samples are. The two models are trained simultaneously in a competitive process, where the generator tries to fool the discriminator, and the discriminator tries to distinguish between real and generated data.
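The competition described above is usually written as a minimax game: the discriminator tries to maximize the average of log D(x) over real samples plus log(1 - D(G(z))) over generated samples, where D(x) is its estimated probability that x is real, while the generator tries to minimize that same quantity. The pure-Python sketch below only evaluates this objective and the two per-model losses from discriminator outputs on a batch. The function names are illustrative, not taken from any library, and a real implementation would compute these inside a deep-learning framework so that gradients can flow back into both networks.

```python
import math
from typing import Sequence


def value_function(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real samples, each strictly in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each strictly in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    # The discriminator maximizes V, which is the same as minimizing -V
    # (binary cross-entropy with label 1 for real and 0 for generated samples).
    return -value_function(d_real, d_fake)


def generator_loss(d_fake: Sequence[float]) -> float:
    # "Non-saturating" generator loss suggested in the original GAN paper:
    # instead of minimizing log(1 - D(G(z))), maximize log D(G(z)), which gives
    # stronger gradients early in training when D confidently rejects fakes.
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# A confident discriminator has a low loss, and the generator's loss is high.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))
print(generator_loss([0.1, 0.2]))
# A discriminator that outputs 0.5 everywhere cannot tell real from generated.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * ln 2 ≈ 1.386
```

In practice the two losses are minimized in alternating steps: one update of the discriminator on a batch of real and generated samples, then one update of the generator.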

In the context of NLP, GANs have been used for various tasks, such as text generation, style transfer, and machine translation. One of the first applications of GANs in NLP was text generation, where the generator learns to produce realistic text samples, such as sentences or paragraphs, from random noise or, in conditional setups, from a given input. This has been particularly useful for tasks like dialogue generation, story generation, and poetry generation.
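One practical wrinkle with text is that the generator emits discrete tokens, and choosing a token (by argmax or by sampling) is not differentiable, so the discriminator's gradient cannot flow back into the generator directly. One widely used workaround is the Gumbel-softmax relaxation, which replaces the hard token choice with a differentiable, temperature-controlled softmax over noise-perturbed logits; another common route is policy-gradient training, where the discriminator's score is treated as a reward. The sketch below shows only the relaxation for a single token position. The function names and the optional `noise` argument (added so the example can be made deterministic) are assumptions for illustration.

```python
import math
import random
from typing import List, Optional, Sequence


def sample_gumbel(rng: random.Random) -> float:
    # Clamp u away from 0 and 1 so both logarithms stay finite.
    u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
    return -math.log(-math.log(u))


def gumbel_softmax(
    logits: Sequence[float],
    temperature: float,
    rng: random.Random,
    noise: Optional[Sequence[float]] = None,
) -> List[float]:
    """Relaxed (soft) one-hot sample over a vocabulary.

    Adds Gumbel(0, 1) noise to each logit and applies a softmax at the given
    temperature. Low temperatures push the result toward a one-hot vector;
    high temperatures push it toward uniform. `noise` can be passed explicitly
    to make the output deterministic.
    """
    if noise is None:
        noise = [sample_gumbel(rng) for _ in logits]
    scores = [(logit + g) / temperature for logit, g in zip(logits, noise)]
    # Subtract the maximum before exponentiating for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
vocab_logits = [2.0, 0.5, -1.0]  # hypothetical scores for a 3-token vocabulary
print(gumbel_softmax(vocab_logits, temperature=1.0, rng=rng))
print(gumbel_softmax(vocab_logits, temperature=0.1, rng=rng))  # typically much closer to one-hot
```

In a full model this would be applied at every decoding step, and the relaxed vectors, rather than token IDs, would be passed to the discriminator, for example by multiplying them with an embedding matrix.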

Another popular application of GANs in NLP is style transfer, where the generator learns to transfer the style of one text to another text while preserving the content. This can be used for tasks like sentiment transfer, where the sentiment of a text is changed while maintaining the original meaning. Style transfer has also been applied to tasks like paraphrasing and summarization, where the style of a text is modified to achieve a specific goal.
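Adversarial style transfer models typically balance two goals that pull in different directions: preserving the content of the input and fooling a discriminator that checks whether the output has the target style. The sketch below assumes a simple weighted sum of a token-level reconstruction loss and a non-saturating adversarial loss. The function name, its inputs, and the weight `lambda_adv` are hypothetical, and published systems differ in how they define both terms.

```python
import math
from typing import Sequence


def style_transfer_loss(
    recon_token_probs: Sequence[float],
    d_style_score: float,
    lambda_adv: float = 1.0,
) -> float:
    """Hypothetical combined objective for adversarial text style transfer.

    recon_token_probs: the model's probability for each reference token when
        reconstructing the input (the content-preservation term).
    d_style_score: discriminator's probability, in (0, 1), that the generated
        text belongs to the target style.
    lambda_adv: weight trading content preservation against style strength.
    """
    # Average negative log-likelihood of the reference tokens.
    reconstruction = -sum(math.log(p) for p in recon_token_probs) / len(recon_token_probs)
    # Non-saturating adversarial term: small when the discriminator is fooled.
    adversarial = -math.log(d_style_score)
    return reconstruction + lambda_adv * adversarial


# Same reconstruction quality, but the second output looks more like the target style.
print(style_transfer_loss([0.9, 0.8, 0.95], d_style_score=0.2))
print(style_transfer_loss([0.9, 0.8, 0.95], d_style_score=0.7))
```

Raising `lambda_adv` favors stronger style changes at the risk of drifting from the original meaning, which is exactly the trade-off sentiment transfer has to manage.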

Machine translation is another area where GANs have shown promise. In this setting the generator is a translation model trained on a parallel corpus of texts in two languages, while the discriminator tries to tell the generator's translations apart from human reference translations of the same source sentences. This adversarial signal has been used to improve the quality of machine translation systems, with the aim of producing more fluent and natural-sounding translations.
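Because a translation is also a sequence of discrete tokens, one common way to train the generator adversarially is a policy-gradient (REINFORCE) update: the discriminator's probability that a (source, translation) pair is human-made acts as a reward for the sampled translation, usually alongside the ordinary maximum-likelihood loss on the parallel corpus. The sketch below computes only that surrogate loss for one sampled sentence. The function names, the constant baseline, and the mixing weight are assumptions for illustration.

```python
import math
from typing import Sequence


def reinforce_loss(
    token_log_probs: Sequence[float],
    reward: float,
    baseline: float = 0.5,
) -> float:
    """Surrogate loss whose gradient is the REINFORCE estimator (hypothetical helper).

    token_log_probs: log p(y_t | y_<t, source) for each token of one sampled translation.
    reward: discriminator's probability that (source, translation) is a human translation.
    baseline: value subtracted from the reward to reduce gradient variance.
    """
    advantage = reward - baseline
    # Minimizing -advantage * log p(y | source) raises the probability of translations
    # the discriminator scores above the baseline and lowers it for the rest.
    # With autodiff, `advantage` would be treated as a constant (no gradient).
    return -advantage * sum(token_log_probs)


def mixed_loss(mle_loss: float, adv_loss: float, adv_weight: float = 0.5) -> float:
    # Hypothetical mixing of the usual maximum-likelihood loss with the adversarial term.
    return (1.0 - adv_weight) * mle_loss + adv_weight * adv_loss


log_probs = [math.log(0.6), math.log(0.7), math.log(0.9)]
print(reinforce_loss(log_probs, reward=0.8))  # reward above baseline: positive advantage
print(reinforce_loss(log_probs, reward=0.2))  # reward below baseline: negative advantage
```

Keeping a maximum-likelihood term in the mix is a pragmatic choice: the adversarial reward alone is noisy, while the parallel corpus still provides a direct training signal.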

In recent years, researchers have made significant advancements in the field of GANs in NLP. This includes developing more sophisticated architectures, such as conditional GANs, which allow for more control over the generated samples. Researchers have also explored techniques for improving the stability and convergence of GAN training, such as alternative training objectives (for example, the Wasserstein loss) and regularization techniques (for example, gradient penalties).
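In a conditional GAN, both networks receive extra information, such as a class label, alongside their usual input. A common way to supply it is to concatenate a one-hot encoding of the condition to the generator's noise vector (and, similarly, to the discriminator's input). The toy sketch below uses a single linear layer with randomly drawn weights in place of a real generator network, just to show how the condition enters the computation; for text, the condition might be a target sentiment or style. All names here are hypothetical.

```python
import random
from typing import List, Sequence


def one_hot(index: int, size: int) -> List[float]:
    return [1.0 if i == index else 0.0 for i in range(size)]


def conditional_generator(
    z: Sequence[float],
    label: int,
    num_classes: int,
    weights: Sequence[Sequence[float]],
) -> List[float]:
    """Toy conditional generator: a single linear layer over [z ; one_hot(label)].

    weights: one row per output unit, each of length len(z) + num_classes.
    A real generator would be a deep network, but it would receive the same
    concatenated input.
    """
    x = list(z) + one_hot(label, num_classes)
    for row in weights:
        if len(row) != len(x):
            raise ValueError("each weight row must match len(z) + num_classes")
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


rng = random.Random(42)
noise_dim, num_classes, out_dim = 4, 2, 3  # e.g. class 0 = negative, class 1 = positive
weights = [[rng.gauss(0.0, 1.0) for _ in range(noise_dim + num_classes)] for _ in range(out_dim)]
z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]

# Same noise, different condition: the label shifts the output.
print(conditional_generator(z, 0, num_classes, weights))
print(conditional_generator(z, 1, num_classes, weights))
```

Because the label is part of the input, the same noise vector can be steered toward different classes at generation time, which is the extra control conditional GANs provide.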

Overall, the evolution of GANs in NLP has been a fascinating journey, from the theoretical foundations laid out by Goodfellow to the practical applications seen in various NLP tasks. As researchers continue to push the boundaries of what is possible with GANs, we can expect to see even more exciting developments in the future.

#Evolution #GANs #NLP #Theory #Practice