
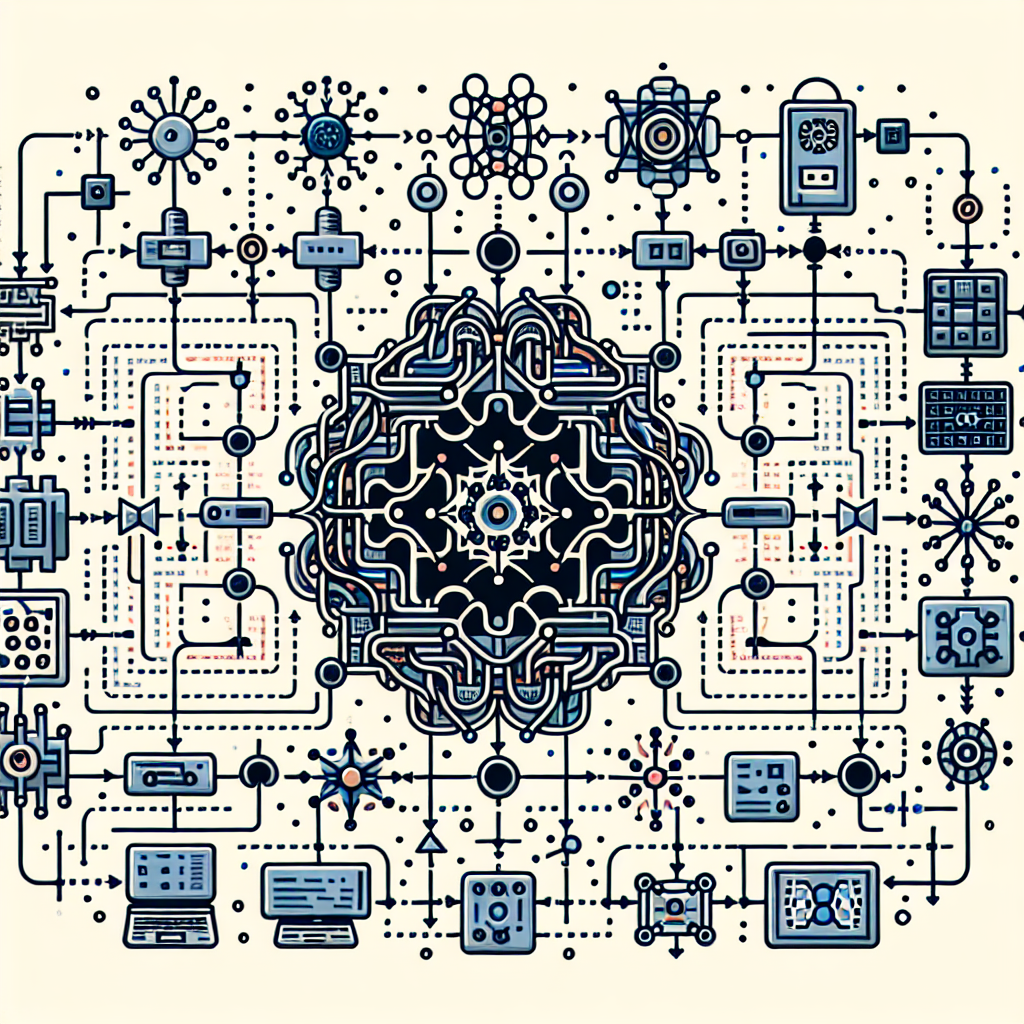

The Evolution of Gated Recurrent Units (GRUs) in Neural Networks

Gated Recurrent Units (GRUs) are a type of recurrent neural network unit widely used for sequence modeling tasks such as natural language processing and speech recognition. GRUs were introduced in 2014 by Kyunghyun Cho et al. as a simpler and computationally cheaper alternative to Long Short-Term Memory (LSTM) units.

The main idea behind GRUs is to mitigate the vanishing gradient problem that often occurs in traditional recurrent neural networks (RNNs). During backpropagation through time, the gradient is multiplied by a similar factor at every time step; when that factor is smaller than one, the gradient shrinks exponentially with sequence length, making it difficult for the network to learn long-term dependencies in sequential data. GRUs tackle this issue by using gating mechanisms to control the flow of information, so that the hidden state can be carried forward almost unchanged whenever the gates allow it.
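To make the shrinking gradient concrete, here is a minimal sketch using a scalar RNN of the form h_t = tanh(w * h_{t-1} + x_t). The function name `gradient_through_time`, the weight `0.5`, and the constant input `0.1` are illustrative assumptions, not values from any particular model.

```python
import math


def gradient_through_time(w, inputs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t).

    Hypothetical helper for illustration only.
    """
    h = h0
    grad = 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * (1 - h_t^2), and |1 - h_t^2| <= 1.
        grad *= w * (1.0 - h * h)
    return grad


# Assumed toy values: recurrent weight 0.5 and a constant input of 0.1.
short = gradient_through_time(0.5, [0.1] * 5)
long = gradient_through_time(0.5, [0.1] * 50)
print(f"gradient after 5 steps:  {short:.3e}")
print(f"gradient after 50 steps: {long:.3e}")
```

Because every factor in the product has magnitude at most `w`, the gradient after T steps is bounded by `w ** T`, which is why early inputs barely influence learning in a plain RNN when `w` is below one.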

The key components of a GRU are the reset gate and the update gate. The reset gate determines how much of the previous hidden state is used when computing a new candidate state, while the update gate determines how much of that candidate replaces the previous hidden state in the output. Both gates are recomputed at every time step from the current input and the previous hidden state. When the update gate stays close to "keep", the old state passes through almost unchanged, which is how GRUs capture long-term dependencies more effectively than traditional RNNs.
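The gating described above can be sketched as a single GRU time step in plain Python. This is a minimal illustration, not a reference implementation: the helper names (`init_params`, `affine`, `gru_step`), the parameter dictionary layout, and the random initialization range are all assumptions. It uses the convention in which the update gate weights the candidate state; some papers and libraries swap the roles of `z` and `1 - z`.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, U, b, x, h):
    """Return W @ x + U @ h + b using plain Python lists."""
    return [
        sum(w * xi for w, xi in zip(w_row, x))
        + sum(u * hi for u, hi in zip(u_row, h))
        + b_i
        for w_row, u_row, b_i in zip(W, U, b)
    ]


def init_params(input_size, hidden_size, seed=0):
    """Hypothetical initializer: small random weights, zero biases."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    params = {}
    for gate in ("r", "z", "h"):  # reset gate, update gate, candidate state
        params[f"W_{gate}"] = matrix(hidden_size, input_size)
        params[f"U_{gate}"] = matrix(hidden_size, hidden_size)
        params[f"b_{gate}"] = [0.0] * hidden_size
    return params


def gru_step(x, h_prev, p):
    """Compute one GRU time step and return the new hidden state."""
    # Both gates are computed from the current input and the previous state.
    r = [sigmoid(a) for a in affine(p["W_r"], p["U_r"], p["b_r"], x, h_prev)]
    z = [sigmoid(a) for a in affine(p["W_z"], p["U_z"], p["b_z"], x, h_prev)]
    # The reset gate scales the previous state before it enters the candidate.
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]
    h_cand = [math.tanh(a) for a in affine(p["W_h"], p["U_h"], p["b_h"], x, reset_h)]
    # The update gate interpolates between the old state and the candidate.
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h_prev, h_cand)]


params = init_params(input_size=3, hidden_size=4)
h = [0.0] * 4
for x in ([0.5, -0.2, 0.1], [0.0, 0.3, -0.4]):  # a toy two-step sequence
    h = gru_step(x, h, params)
print(h)
```

Note the last line of `gru_step`: when `z` is near zero the previous state is copied forward almost exactly, which gives gradients a path that is not repeatedly squashed by `tanh`.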

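One of the main advantages of GRUs over LSTMs is their simplicity and efficiency. A GRU has two gates and no separate cell state, so it needs three sets of weights where an LSTM needs four, which works out to roughly 25% fewer parameters and computations for the same input and hidden sizes. This usually makes each training step cheaper, although whether a GRU converges faster or reaches better accuracy than an LSTM depends on the task. The lower cost has made GRUs a popular choice for researchers and practitioners working on sequence modeling tasks.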
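The parameter difference can be checked with a quick count. The sketch below assumes the textbook formulation with one input matrix, one recurrent matrix, and one bias vector per gate block; the function names are hypothetical, and some libraries keep two bias vectors per block, which changes the totals slightly but not the 3:4 ratio.

```python
def gated_block_params(input_size, hidden_size):
    """Weights for one block: input matrix + recurrent matrix + bias vector."""
    return hidden_size * (input_size + hidden_size) + hidden_size


def gru_params(input_size, hidden_size):
    # Three blocks: reset gate, update gate, candidate state.
    return 3 * gated_block_params(input_size, hidden_size)


def lstm_params(input_size, hidden_size):
    # Four blocks: input gate, forget gate, output gate, cell candidate.
    return 4 * gated_block_params(input_size, hidden_size)


# Assumed example sizes: 128-dimensional inputs, 256 hidden units.
gru = gru_params(128, 256)
lstm = lstm_params(128, 256)
print(gru, lstm, gru / lstm)  # 295680 394240 0.75
```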

Since their introduction, GRUs have undergone several improvements and variations. For example, researchers have proposed different activation functions for the gates, as well as modifications to the gating mechanisms to improve performance on specific tasks. Some studies have also explored incorporating attention mechanisms into GRUs to further enhance their ability to capture long-term dependencies in the data.

Overall, the evolution of GRUs in neural networks has been driven by the need for more effective and efficient models for sequence modeling tasks. As researchers continue to explore new architectures and techniques for improving the performance of GRUs, we can expect to see even more advancements in this area in the future.

