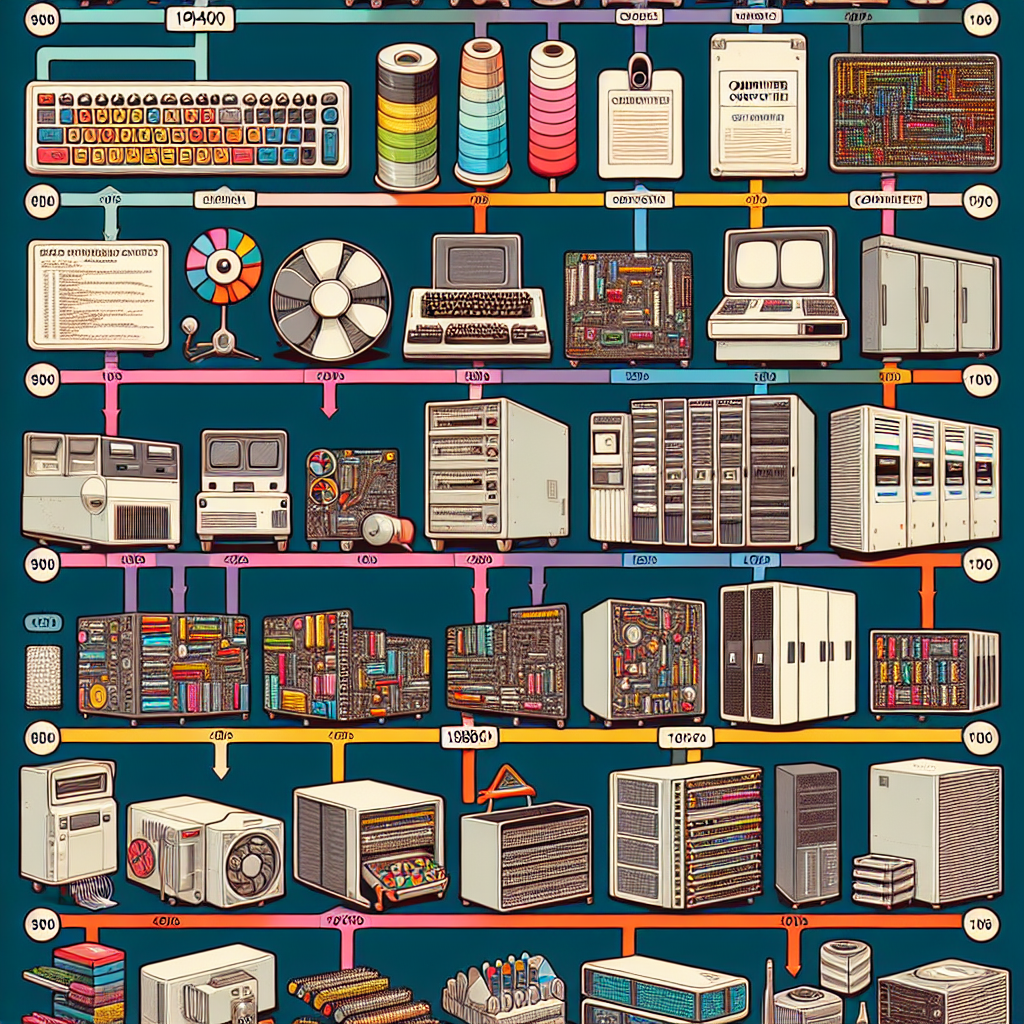

High performance computing (HPC) has come a long way since its inception in the 1960s. From the early days of supercomputers to today’s advanced systems, the evolution of HPC has been marked by significant advancements in technology and computing power. Let’s take a look at the timeline of HPC and how it has transformed over the years.

1960s: The Birth of Supercomputers

The 1960s saw the birth of supercomputers, which were large, powerful machines designed to handle complex calculations and simulations. One of the first supercomputers, the CDC 6600, was introduced in 1964 by Control Data Corporation. It was the fastest computer of its time, capable of processing up to 3 million instructions per second.

1970s: The Rise of Vector Processing

In the 1970s, vector processing became a key feature of supercomputers. A vector processor applies a single instruction to an entire array of values rather than to one pair of operands at a time, which makes it well suited to the large numerical workloads common in scientific and engineering applications. The Cray-1, introduced in 1976 by Cray Research, was one of the first commercially successful supercomputers to use vector processing.

1980s: Parallel Processing and Distributed Computing

The 1980s marked the introduction of parallel processing and distributed computing in supercomputers. Parallel processing allows multiple processors to work together on a task, while distributed computing involves connecting multiple computers to work on a single problem. Both approaches raise performance by dividing the work across more hardware instead of relying on a single, faster processor.
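The idea of several workers cooperating on one task can be sketched in a few lines of Python. This is only an illustration, not how any particular supercomputer was programmed: the function names (`split_range`, `partial_sum_of_squares`, `parallel_sum_of_squares`) are made up for this example, and it uses threads to keep the sketch short. In CPython, CPU-bound work like this would need separate processes (for example `ProcessPoolExecutor`, which offers the same `map` interface) to see a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split_range(n, parts):
    """Split range(n) into `parts` contiguous (start, stop) chunks."""
    step, extra = divmod(n, parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one additional element each.
        stop = start + step + (1 if i < extra else 0)
        chunks.append((start, stop))
        start = stop
    return chunks


def partial_sum_of_squares(bounds):
    """Work done by one worker: sum i*i over its own chunk."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))


def parallel_sum_of_squares(n, workers=4):
    """Divide the problem, compute partial results concurrently, combine."""
    chunks = split_range(n, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)


if __name__ == "__main__":
    print(parallel_sum_of_squares(1_000_000))
```

The pattern is the same at any scale: split the problem into independent pieces, hand each piece to a worker, and combine the partial results at the end.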

1990s: The Era of Beowulf Clusters

In the 1990s, Beowulf clusters emerged as a cost-effective alternative to traditional supercomputers. A Beowulf cluster is built from commodity computers connected by a standard network such as Ethernet, allowing for parallel processing at a fraction of the cost of proprietary supercomputers. These clusters became popular in research institutions and universities for scientific computing applications.

2000s: The Rise of GPU Computing

The 2000s saw the rise of graphics processing units (GPUs) as a powerful tool for high performance computing. GPUs are designed to run the same operation across many data elements in parallel, making them well suited to HPC applications. NVIDIA's introduction of CUDA, a parallel computing platform and programming model, in 2007 further accelerated the adoption of GPUs in supercomputing.

2010s: Exascale Computing and AI

In the 2010s, the focus shifted towards achieving exascale computing, which refers to systems capable of performing one quintillion (10^18) floating-point operations per second. The race to exascale computing led to the development of new technologies, such as heterogeneous computing and advanced interconnects, to push the boundaries of HPC.
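To put the 10^18 figure in perspective, the short calculation below compares it with the rate quoted earlier in this article for the CDC 6600 (about 3 million per second). Treat it as a rough, back-of-the-envelope sketch: the constant and function names are invented for this example, and an instruction is not quite the same unit as a floating-point operation.

```python
# Rates taken from the figures quoted in this article.
CDC_6600_OPS_PER_SEC = 3e6    # CDC 6600 (1964), about 3 million per second
EXASCALE_OPS_PER_SEC = 1e18   # exascale target, one quintillion per second

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def seconds_to_run(total_ops, ops_per_sec):
    """Time needed to perform `total_ops` operations at a given rate."""
    return total_ops / ops_per_sec


# How many times faster an exascale system is than the CDC 6600.
speedup = EXASCALE_OPS_PER_SEC / CDC_6600_OPS_PER_SEC

# One second of exascale work, expressed as years on the CDC 6600.
years_on_cdc_6600 = seconds_to_run(1e18, CDC_6600_OPS_PER_SEC) / SECONDS_PER_YEAR

if __name__ == "__main__":
    print(f"Speedup: {speedup:.2e}x")
    print(f"Years on a CDC 6600: {years_on_cdc_6600:,.0f}")
```

Under these assumptions, the work an exascale system completes in one second would have kept a CDC 6600 busy for roughly ten thousand years.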

Today: The Future of HPC

Today, high performance computing continues to evolve at a rapid pace. Advances in artificial intelligence, machine learning, and quantum computing are driving new possibilities for HPC applications. With the increasing demand for computing power in fields such as weather forecasting, drug discovery, and climate modeling, the future of HPC looks promising.

In conclusion, each decade of high performance computing has brought a new way to get more work done: faster single machines in the 1960s, vector processing in the 1970s, parallel and distributed systems in the 1980s, commodity clusters in the 1990s, GPUs in the 2000s, and the push to exascale in the 2010s. Along the way, HPC has transformed the way we approach complex problems and has driven innovation in science and technology. As we look towards the future, we can expect further advancements in the years to come.
