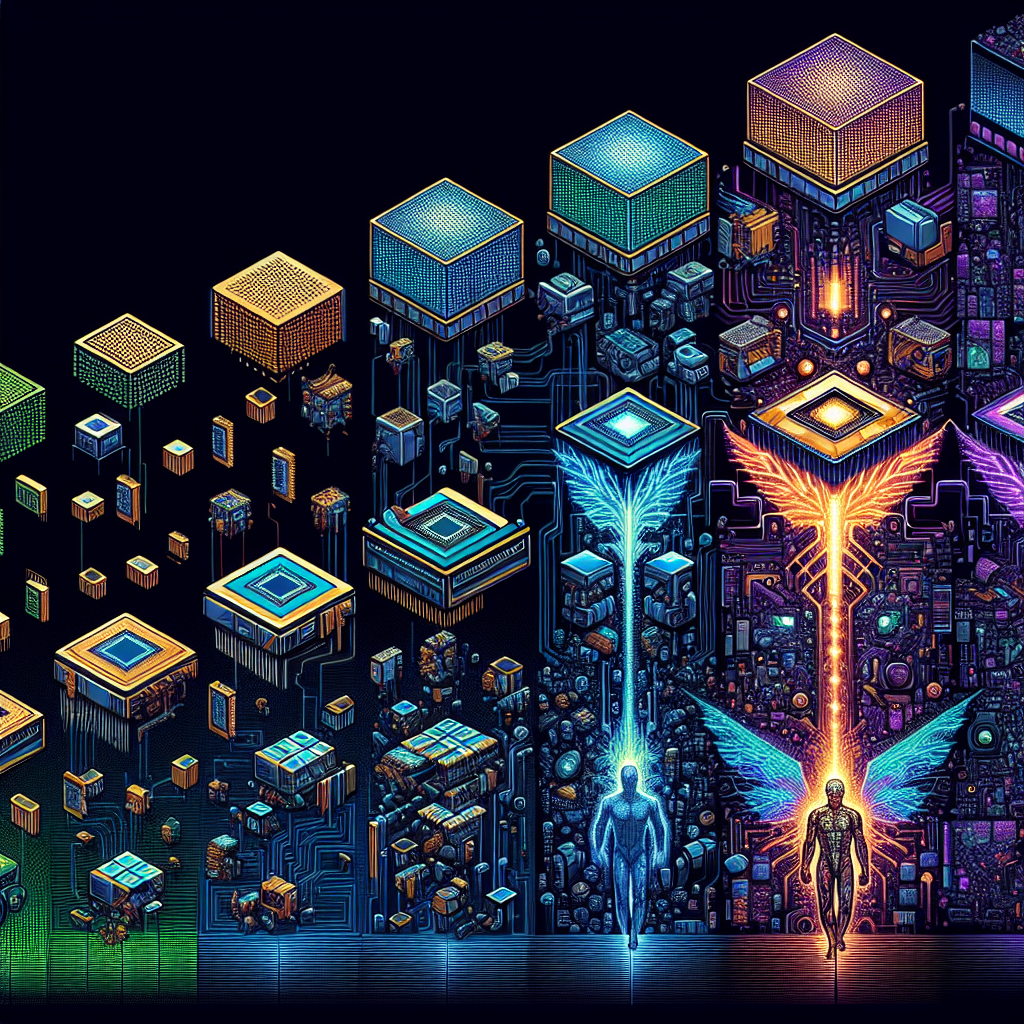

NVIDIA has come a long way since its founding in 1993. Originally a designer of graphics chips for gaming PCs, the company has grown into a major force in artificial intelligence (AI) and beyond.

NVIDIA’s journey began with the release of the NV1 graphics chip in 1995, the company’s first product aimed at PC gaming and multimedia. Over the following years, NVIDIA kept pushing graphics hardware forward, most notably with the GeForce series; the GeForce 256, released in 1999, was marketed as the world’s first GPU (graphics processing unit).

As GPUs became more powerful and programmable, NVIDIA saw an opportunity to expand beyond the gaming market. In 2006, the company introduced CUDA, which lets developers run general-purpose code on its GPUs, and in 2007 it launched the Tesla line of GPUs designed for high-performance computing and scientific research. These steps laid the groundwork for NVIDIA’s later move into AI and machine learning.

In recent years, NVIDIA has become a key player in AI, with its GPUs powering some of the most advanced AI systems in the world. Its CUDA platform has become the dominant way for developers to harness GPUs for AI workloads, supporting work in areas such as autonomous vehicles, healthcare, and finance.

But NVIDIA is not stopping there. The company continues to advance its GPU architectures: Volta (2017) introduced Tensor Cores to accelerate the matrix math at the heart of deep learning, and Turing (2018) added dedicated hardware for real-time ray tracing. These advances benefit gamers and AI researchers alike, and they also open up new possibilities in fields such as virtual reality, robotics, and computer vision.

As NVIDIA continues to expand its reach, the outlook for GPU technology is bright. Through its commitment to innovation and research, NVIDIA is shaping how we interact with technology and helping pave the way for more intelligent, connected systems. Whether you’re a gamer, a researcher, or a developer, NVIDIA’s GPUs are likely to play a significant role in the future of computing and AI.