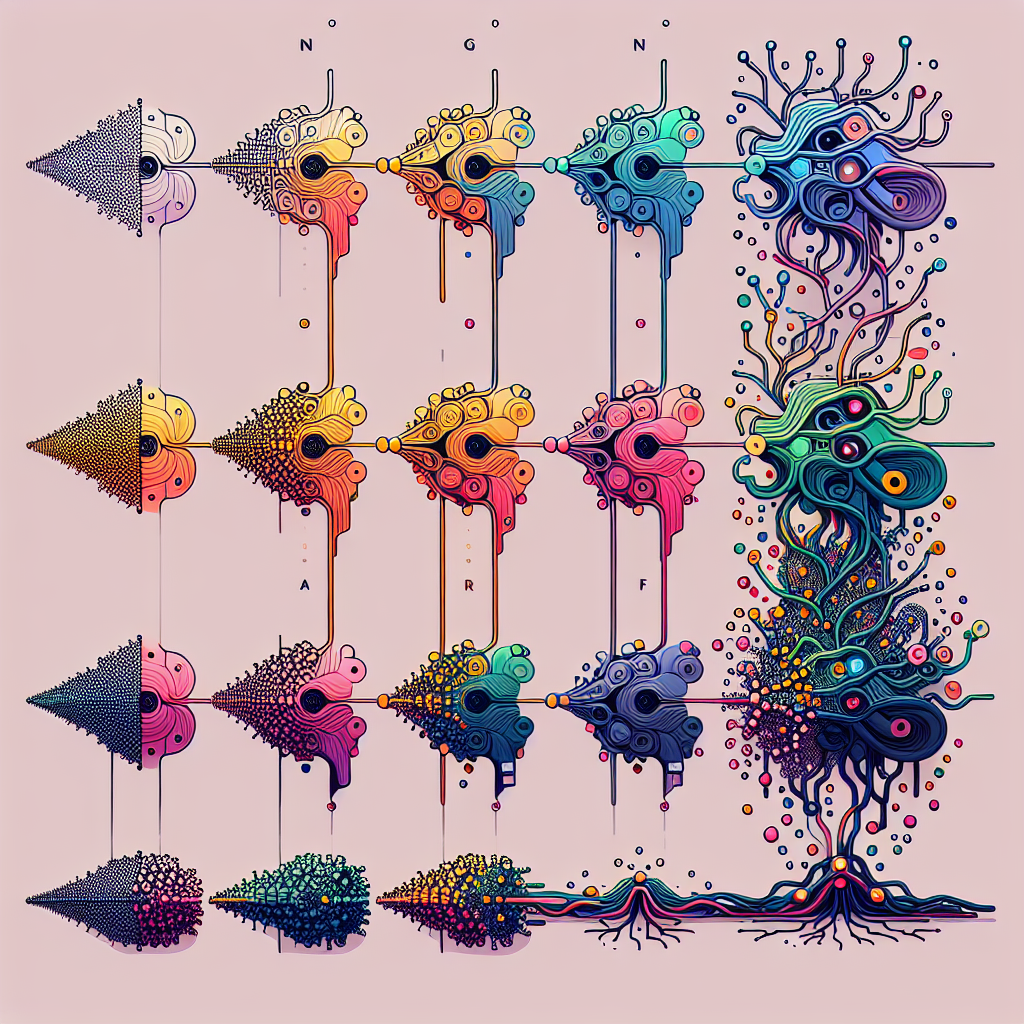

Recurrent Neural Networks (RNNs) have been a popular choice for sequential data processing tasks such as natural language processing, speech recognition, and time series prediction. However, traditional RNNs suffer from the vanishing gradient problem: as gradients are backpropagated through time, they are multiplied by one per-step factor after another, and when those factors are smaller than one the gradients shrink roughly exponentially. This makes long-term dependencies difficult to learn.
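To make the vanishing gradient concrete, here is a minimal sketch assuming a scalar RNN with hidden size 1, $h_t = \tanh(w h_{t-1} + u x_t)$. The weights `w = 0.9` and `u = 0.5` and the function name `grad_through_time` are hypothetical, chosen only for illustration. The gradient of the last hidden state with respect to the first is a product of per-step factors $w\,(1 - h_t^2)$, and here each factor is below one.

```python
import math


def grad_through_time(w: float, u: float, xs: list[float], h0: float = 0.0) -> float:
    """Return dh_T/dh_0 for the scalar RNN h_t = tanh(w * h_{t-1} + u * x_t)."""
    h = h0
    grad = 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        # Chain rule: dh_t/dh_{t-1} = w * (1 - h_t**2), because tanh'(a) = 1 - tanh(a)**2.
        grad *= w * (1.0 - h * h)
    return grad


for steps in (1, 10, 50):
    g = grad_through_time(w=0.9, u=0.5, xs=[1.0] * steps)
    print(f"{steps:>2} steps: dh_T/dh_0 = {g:.3e}")
```

Because every factor is below one, the product falls off geometrically with sequence length. After 50 steps, almost no gradient signal reaches the start of the sequence.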

To address this issue, Long Short-Term Memory (LSTM) networks were introduced by Hochreiter and Schmidhuber in 1997. LSTMs are an RNN architecture that adds a memory cell and gating mechanisms to better capture long-range dependencies in sequential data. The memory cell can carry information across many time steps because it is updated additively rather than being overwritten at every step. The gates control the flow of information into, within, and out of the cell, and the now-standard formulation uses input, forget, and output gates. This allows LSTMs to learn long-term dependencies more effectively than traditional RNNs.
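The gating described above can be sketched as a single LSTM time step. This is a minimal illustration assuming hidden and input size 1, so every weight is a scalar. It uses the common variant with a forget gate, which was added to the LSTM after the 1997 paper. The names `Gate`, `lstm_step`, and all parameter values are hypothetical.

```python
import math
from typing import NamedTuple


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


class Gate(NamedTuple):
    """Scalar parameters for one gate (hypothetical values, hidden size 1)."""
    w: float  # weight on the input x_t
    u: float  # weight on the previous hidden state h_{t-1}
    b: float  # bias

    def pre(self, x: float, h_prev: float) -> float:
        return self.w * x + self.u * h_prev + self.b


def lstm_step(forget: Gate, inp: Gate, out: Gate, cand: Gate,
              x: float, h_prev: float, c_prev: float) -> tuple[float, float]:
    f = sigmoid(forget.pre(x, h_prev))   # how much of the old cell state to keep
    i = sigmoid(inp.pre(x, h_prev))      # how much of the new candidate to write
    o = sigmoid(out.pre(x, h_prev))      # how much of the cell state to expose
    g = math.tanh(cand.pre(x, h_prev))   # candidate cell value
    c = f * c_prev + i * g               # additive cell-state update
    h = o * math.tanh(c)
    return h, c


# Forget gate biased open (f ~ 1), input gate biased shut (i ~ 0):
# the cell state set at t = 0 survives 100 steps of zero input almost unchanged.
forget, inp = Gate(0.0, 0.0, 10.0), Gate(0.0, 0.0, -10.0)
out, cand = Gate(0.5, 0.5, 0.0), Gate(1.0, 1.0, 0.0)
h, c = 0.0, 1.0
for _ in range(100):
    h, c = lstm_step(forget, inp, out, cand, x=0.0, h_prev=h, c_prev=c)
print(f"cell state after 100 steps: {c:.4f}")
```

The key line is `c = f * c_prev + i * g`. When the forget gate stays near one, the cell state (and the gradient along it) passes through each step almost unchanged. This contrasts with the repeated squashing in the plain RNN.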

Another variation of RNNs that has gained popularity is the Gated Recurrent Unit (GRU), introduced by Cho et al. in 2014. Like LSTMs, GRUs use gating mechanisms, but with a simpler architecture. There are two gates (update and reset) and no separate memory cell, which means fewer parameters. As a result, a GRU layer is typically cheaper to compute and can be faster to train than an LSTM of the same hidden size, while still being able to capture long-term dependencies in sequential data.
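The sketch below covers both halves of that comparison: one GRU time step and a rough parameter count. As in a scalar LSTM sketch, it assumes hidden and input size 1 for the step, and the names `gru_step` and `recurrent_param_count` are hypothetical. It follows the Cho et al. convention $h_t = z\,h_{t-1} + (1 - z)\,\tilde{h}_t$, though some texts and libraries swap $z$ and $1 - z$ or apply the reset gate slightly differently. The parameter count assumes one bias vector per block. Some frameworks (PyTorch, for example) keep two, so their reported totals are slightly higher.

```python
import math
from typing import NamedTuple


def sigmoid(a: float) -> float:
    return 1.0 / (1.0 + math.exp(-a))


class Gate(NamedTuple):
    """Scalar parameters for one gate (hypothetical values, hidden size 1)."""
    w: float  # weight on the input x_t
    u: float  # weight on the previous hidden state h_{t-1}
    b: float  # bias


def gru_step(update: Gate, reset: Gate, cand: Gate, x: float, h_prev: float) -> float:
    z = sigmoid(update.w * x + update.u * h_prev + update.b)  # update gate
    r = sigmoid(reset.w * x + reset.u * h_prev + reset.b)     # reset gate
    # Candidate state: the reset gate scales how much of h_{t-1} it sees.
    n = math.tanh(cand.w * x + cand.u * (r * h_prev) + cand.b)
    # No separate cell state: interpolate between the old state and the candidate.
    return z * h_prev + (1.0 - z) * n


def recurrent_param_count(input_size: int, hidden_size: int, blocks: int) -> int:
    """Input weights + recurrent weights + one bias vector, per gate/candidate block."""
    per_block = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return blocks * per_block


lstm = recurrent_param_count(128, 256, blocks=4)  # input, forget, output gates + candidate
gru = recurrent_param_count(128, 256, blocks=3)   # update, reset gates + candidate
print(lstm, gru, gru / lstm)

h = gru_step(Gate(0.0, 0.0, 10.0), Gate(1.0, 1.0, 0.0), Gate(1.0, 1.0, 0.0), x=1.0, h_prev=0.5)
print(f"update gate near 1 keeps the state: {h:.4f}")
```

With three weight blocks instead of four, a GRU has about three quarters of the parameters of an LSTM with the same input and hidden sizes. This is where its lower per-step cost comes from.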

Both LSTMs and GRUs have been shown to outperform traditional RNNs in a variety of tasks, including language modeling, machine translation, and speech recognition. Their ability to learn long-term dependencies has made them essential tools in the field of deep learning.

In conclusion, the evolution of recurrent neural networks from traditional RNNs to LSTMs and GRUs has significantly improved their ability to capture long-term dependencies in sequential data. These advancements have led to breakthroughs in a wide range of applications and have established LSTMs and GRUs as standard, widely used models for sequential data processing tasks.
