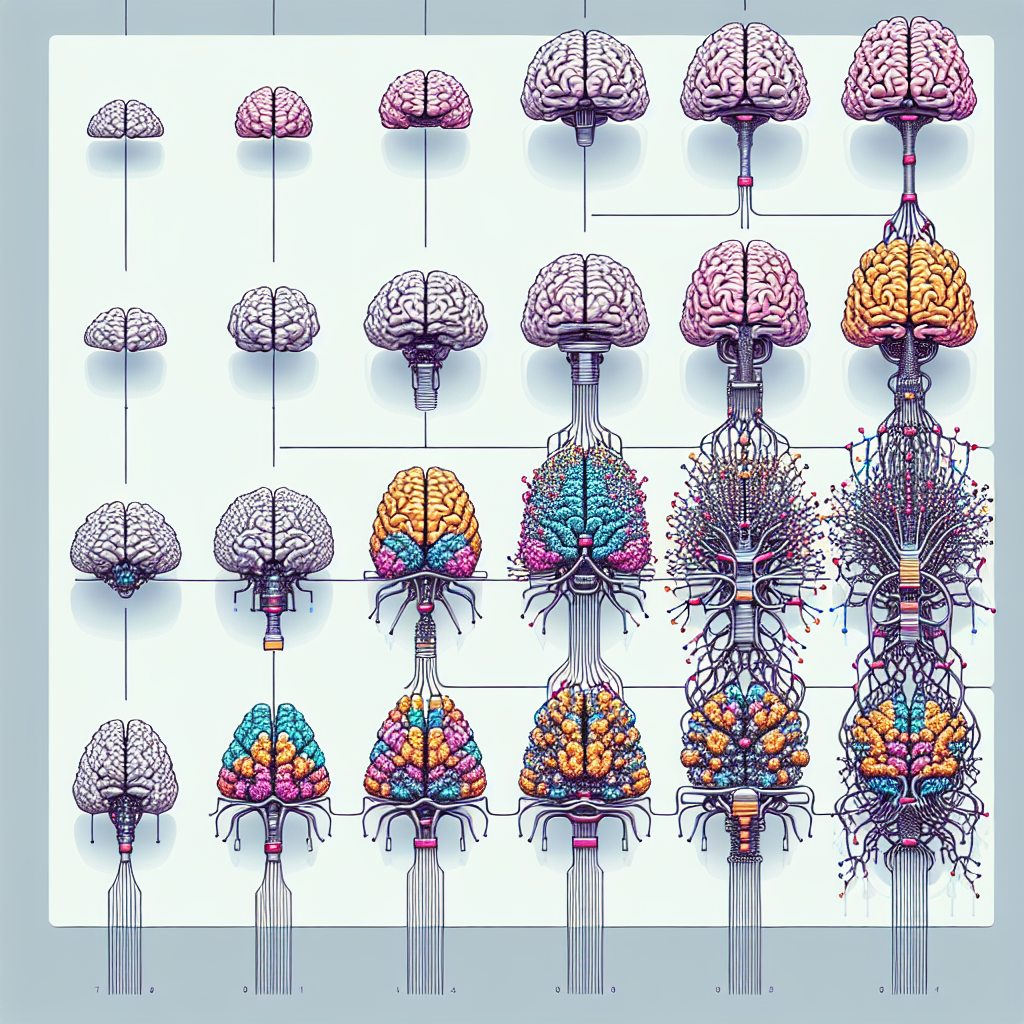

Recurrent Neural Networks (RNNs) have become an essential tool in the field of machine learning and artificial intelligence. These networks are designed to handle sequential data, making them ideal for tasks such as natural language processing, speech recognition, and time series prediction. Over the years, RNNs have evolved from simple architectures to more sophisticated and powerful models known as gated architectures.

The concept of RNNs dates back to the 1980s, with the Jordan network (1986) followed by the Elman network (1990). These early RNNs captured sequential dependencies by maintaining a hidden state that was updated at each time step from the current input and the previous state. However, they struggled to capture long-term dependencies because of the vanishing gradient problem: as the error signal is propagated back through many time steps, it is repeatedly multiplied by small factors and shrinks toward zero.
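To make the hidden-state update concrete, here is a minimal sketch of an Elman-style step in plain Python. The names `rnn_step`, `run_rnn`, and `scalar_gradient_through_time`, along with the toy weights, are assumptions chosen for illustration and do not come from any particular library. The last function uses a one-unit RNN to show how the gradient of the final state with respect to the initial state shrinks as the sequence grows.

```python
import math

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One Elman-style update: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h_new = []
    for i in range(len(h_prev)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new

def run_rnn(inputs, h0, W_xh, W_hh, b_h):
    """Feed a sequence through the cell and return the hidden state at every step."""
    h, states = h0, []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

def scalar_gradient_through_time(w, inputs, h0=0.0):
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # chain-rule factor contributed by this step
    return grad

# Toy weights (hypothetical values): 2 input features, 2 hidden units.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.0], [0.0, 0.2]]
b_h = [0.0, 0.0]
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
states = run_rnn(sequence, [0.0, 0.0], W_xh, W_hh, b_h)

# The same recurrent weight over 5 steps versus 50 steps.
short_grad = scalar_gradient_through_time(0.5, [0.1] * 5)
long_grad = scalar_gradient_through_time(0.5, [0.1] * 50)
print(states[-1], short_grad, long_grad)
```

With a recurrent weight of 0.5, every step multiplies the gradient by a factor of at most 0.5, so the 50-step gradient is many orders of magnitude smaller than the 5-step one. This is the scalar version of the vanishing gradient problem described above.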

To address this issue, researchers introduced gated architectures: the Long Short-Term Memory (LSTM) network in 1997 and the Gated Recurrent Unit (GRU) in 2014. These architectures incorporate gating mechanisms that let the network selectively update and forget information in its state, making it easier to capture long-range dependencies in sequences. The LSTM uses input, output, and forget gates to control the flow of information through a memory cell; the original design had only input and output gates, and the forget gate was added shortly afterward. The GRU simplifies this design to two gates, an update gate and a reset gate.
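The gating idea can be sketched as a single LSTM step in plain Python. This is a minimal illustration under stated assumptions, not a reference implementation: the helper names (`affine`, `lstm_step`, `make_params`) and the parameter layout (a dict of `(W, U, b)` tuples per gate) are hypothetical, and the weights are set to zero so that only the gate biases decide what happens to the cell state.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Compute W x + U h + b for small list-of-lists matrices."""
    return [
        sum(w * xj for w, xj in zip(W_row, x))
        + sum(u * hk for u, hk in zip(U_row, h))
        + b_i
        for W_row, U_row, b_i in zip(W, U, b)
    ]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM update with input (i), forget (f), and output (o) gates."""
    i = [sigmoid(z) for z in affine(*params["input"], x, h_prev)]
    f = [sigmoid(z) for z in affine(*params["forget"], x, h_prev)]
    o = [sigmoid(z) for z in affine(*params["output"], x, h_prev)]
    g = [math.tanh(z) for z in affine(*params["candidate"], x, h_prev)]
    # Cell state: keep a fraction f of the old memory, write a fraction i of the candidate.
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    # Hidden state: expose a fraction o of the squashed cell state.
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c

def make_params(forget_bias, input_bias):
    """Hypothetical 1-input, 1-unit parameters with zero weights and chosen gate biases."""
    zero = [[0.0]]
    return {
        "input": (zero, zero, [input_bias]),
        "forget": (zero, zero, [forget_bias]),
        "output": (zero, zero, [0.0]),
        "candidate": (zero, zero, [0.0]),
    }

# Forget gate near 1, input gate near 0: the cell state is carried across 100 steps.
h, c = [0.0], [1.0]
keep = make_params(forget_bias=10.0, input_bias=-10.0)
for _ in range(100):
    h, c = lstm_step([0.3], h, c, keep)
kept_c = c

# Forget gate near 0: the stored value is wiped in a single step.
_, erased_c = lstm_step([0.3], [0.0], [1.0], make_params(forget_bias=-10.0, input_bias=-10.0))
print(kept_c, erased_c)
```

Because the cell state is updated additively and scaled by a forget gate that can sit close to 1, information (and the gradient flowing along the same path) can survive many more steps than in the simple tanh recurrence.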

Since the introduction of LSTM and GRU, researchers have continued to explore new variations of gated architectures. One notable example is the Gated Feedback Recurrent Neural Network (GF-RNN), which stacks several recurrent layers and adds gated feedback connections from upper layers to lower layers, in addition to the standard input and recurrent connections. This architecture has been reported to improve performance on sequence modeling tasks such as character-level language modeling.

Another development in the evolution of RNNs is the introduction of attention mechanisms. Instead of compressing the whole input into a single final hidden state, attention lets the network compute, at each output step, a weighted combination of the hidden states for every input position, so it can focus on the most relevant parts of the sequence. This makes it easier to capture dependencies between distant elements. Attention mechanisms have been successfully applied to tasks such as machine translation, where the network needs to align words in different languages.
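As a rough illustration of how attention focuses on parts of a sequence, the sketch below implements dot-product attention in plain Python. Dot-product scoring is used here only because it is the simplest variant to write down; attention-based RNN translation models have also used other scoring functions, such as a small feed-forward network. The function names and the toy vectors are assumptions for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into non-negative weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot_product_attention(query, keys, values):
    """Score each position by query . key, then average the values by those weights."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context

# Three input positions; in an RNN these would be encoder hidden states.
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [0.0, 5.0]  # e.g. the current decoder state; it matches the second key

weights, context = dot_product_attention(query, keys, values)
print(weights, context)
```

The weights play the role of a soft alignment: the query matches the second key most strongly, so the context vector is dominated by the second value, regardless of how far apart the positions are in the sequence.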

Overall, the evolution of RNNs from simple architectures to gated architectures has significantly improved their performance on a wide range of sequence tasks. By letting the network selectively update and forget information, gated architectures make long-range dependencies in sequential data far easier to learn. As researchers continue to explore new variations and enhancements, the capabilities of RNNs are likely to keep expanding.
